Operational Upgrade Helps Fuel Oil Exploration Surveyor

Petroleum Geo-Services increases its capabilities using innovative data center design

By Rob Elder and Mike Turff

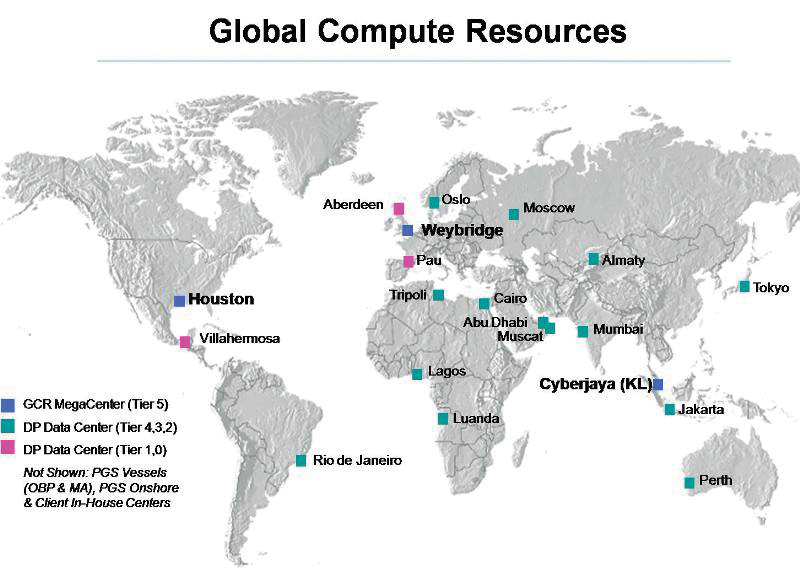

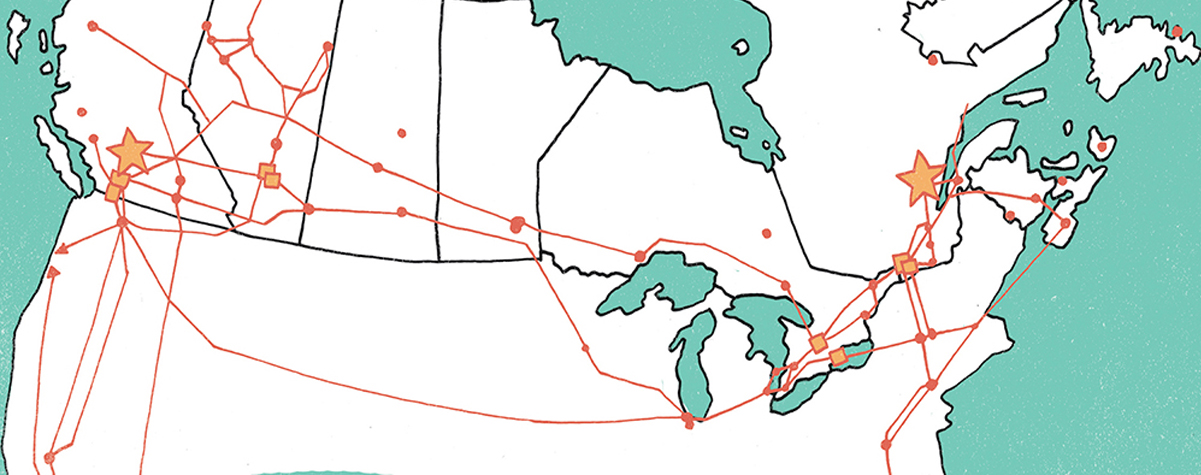

Petroleum Geo-Services (PGS) is a leading oil exploration surveyor that helps oil companies find offshore oil and gas reserves. Its range of seismic and electromagnetic services, data acquisition, processing, reservoir analysis/interpretation, and multi-client library data all require PGS to collect and process vast amounts of data in a secure and cost-efficient manner. This all demands large quantities of compute capacity and deployment of a very high-density configuration. PGS operates 21 data centers globally, with three main data center hubs located in Houston, Texas; Kuala Lumpur, Malaysia; and Weybridge, Surrey (see Figure 1).

Weybridge Data Center

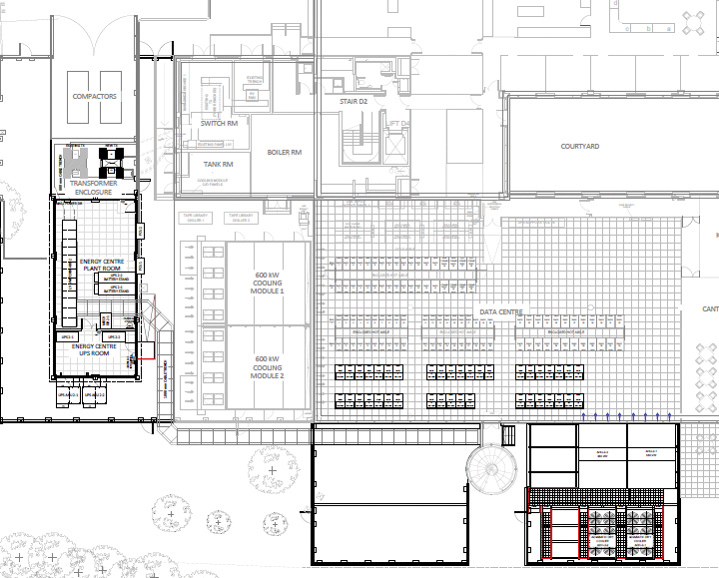

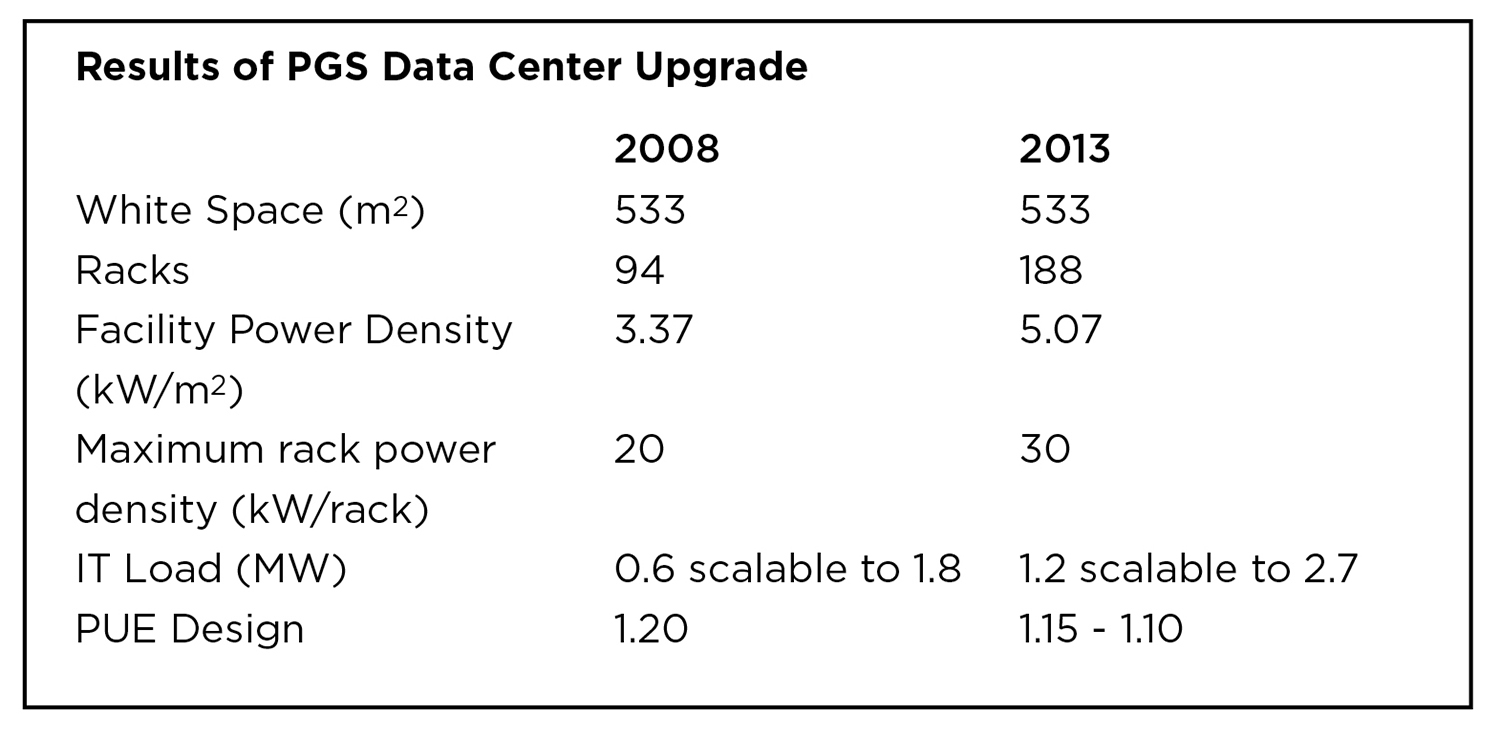

Keysource Ltd designed and built the Weybridge Data Center for PGS in 2008. The high-density IT facility won a number of awards and saved PGS 6.2 million kilowatt-hours (kWh) annually compared to the company’s previous UK data center. The Weybridge Data Center is located in an office building, which poses a number of challenges to designers and builders of a high-performance data center. The 2008 project was designed as the first phase of a three-phase deployment (see Figure 2).

Phase one was designed for 600 kW of IT load, scalable up to 1.8 megawatts (MW) across two future phases if required. Within the facility, rack power densities of 20 kW were easily supported, exceeding the 15-kW target originally specified by the IT team at PGS.

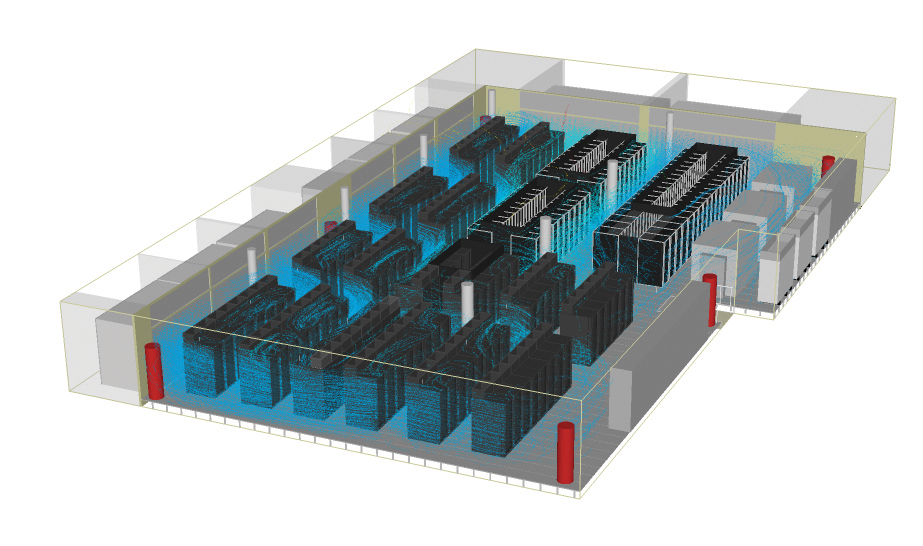

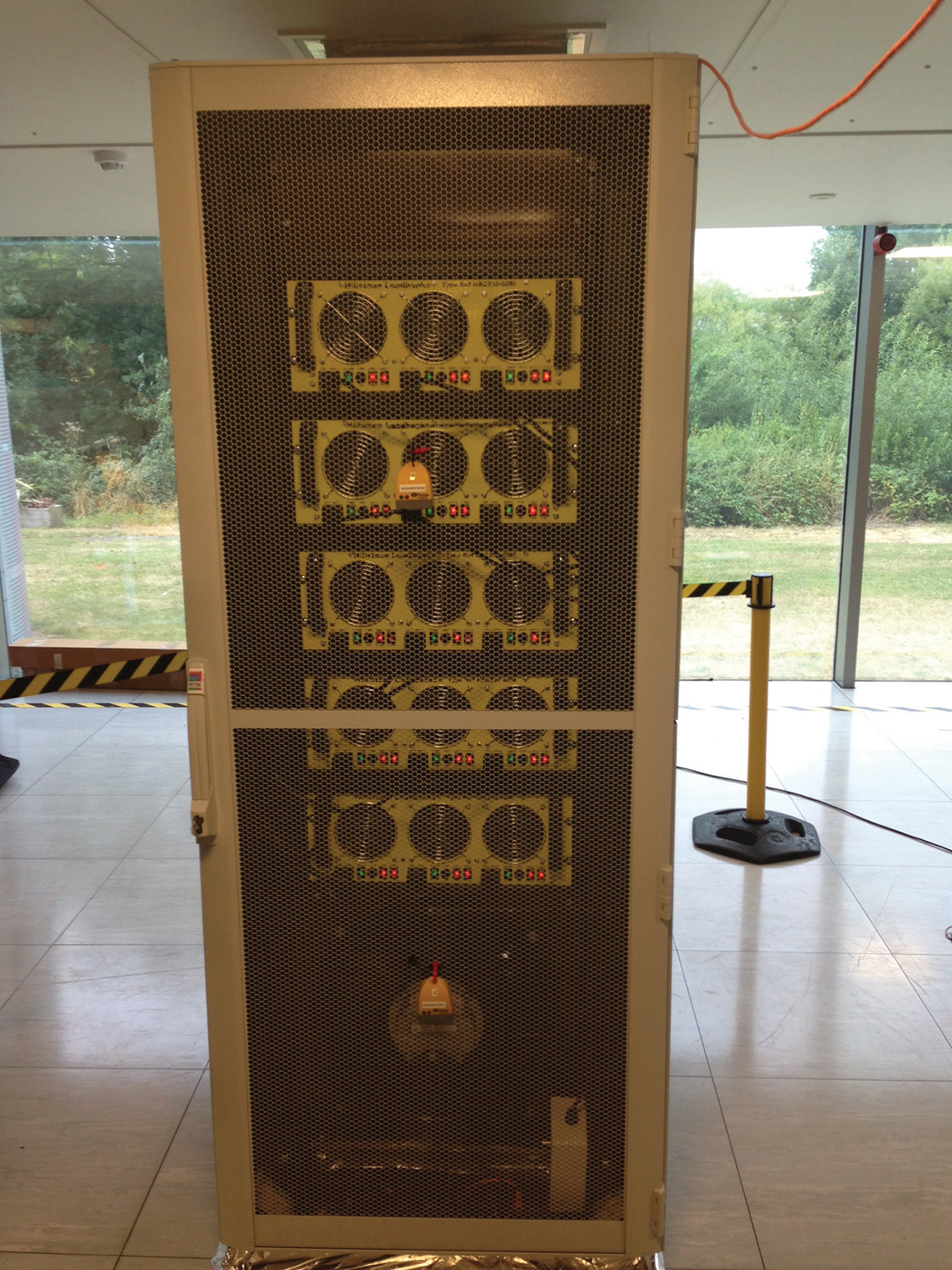

The data center houses select mission-critical applications supporting business systems, but it primarily operates the data mining and analytics associated with the core business of oil exploration. This IT is deployed in full-height racks and requires up to 20 kilowatts (kW) per rack anywhere in the facility and at any time (see Figure 3).

In 2008, PGS selected Keysource’s ecofris solution for use at its Weybridge Data Center (see Figure 4), which became the first facility to use the technology. Ecofris recirculates air within a data center without using fresh air. Instead, air is supplied to the data center through the full height of a wall between the raised floor and suspended ceiling. Hot air from the IT racks is ducted into the suspended ceiling and then drawn back to the cooling coils of air handling units (AHUs) located at the perimeter walls. The system uses adiabatic technology for external heat rejection when external temperatures and humidity do not allow 100% free cooling.

Figure 4. Ecofris units are part of the phase 1 (2008) cooling system to support PGS’s high-density IT.

Keysource integrated a water-cooled chiller into the ecofris design to provide mechanical cooling when needed to supplement the free cooling system (see Figure 5). As a result, PGS ended up with two systems, each with a 400-kW chiller. The chillers run for only 50 hours a year on average, when external ambient conditions are at their highest.

As a result of this original design, the Weybridge Data Center used outside air for heat rejection without allowing that air into the building. Airflow design, a comprehensive control system, and total separation of hot and cold air mean that the facility could accommodate 30 kW in any rack and deliver a PUE L2,YC (Level 2, Continuous Measurement) of 1.15 while maintaining a consistent server inlet temperature of 72°F (22°C) +/-1° across the entire space. Adopting an indirect free cooling design rather than direct fresh air eliminated the need for major filtration or mechanical backup (see the sidebar).
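To put the reported PUE in concrete terms, the short sketch below applies the standard definition (total facility power divided by IT power) to the phase-one design load of 600 kW. It assumes, for illustration only, that the reported figure of 1.15 holds at full design load; the function names are hypothetical and not part of any PGS or Keysource tooling.

```python
def facility_power_kw(it_load_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    if it_load_kw < 0 or pue < 1.0:
        raise ValueError("IT load must be non-negative and PUE at least 1.0")
    return it_load_kw * pue


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Power used by everything other than IT (cooling, UPS losses, lighting)."""
    return facility_power_kw(it_load_kw, pue) - it_load_kw


PHASE_ONE_IT_KW = 600.0  # phase-one IT design load quoted in the article
REPORTED_PUE = 1.15      # reported PUE, assumed here to apply at full load

print(f"Facility power:  {facility_power_kw(PHASE_ONE_IT_KW, REPORTED_PUE):.0f} kW")
print(f"Non-IT overhead: {overhead_kw(PHASE_ONE_IT_KW, REPORTED_PUE):.0f} kW")
```

Under that assumption, roughly 90 kW of non-IT overhead would accompany 600 kW of IT load.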

Surpassing the Original Design Goals

When PGS needed additional compute capacity, the Weybridge Data Center was a prime candidate for expansion because it had the flexibility to deploy high-density IT anywhere within the facility and a low operating cost. However, while the original design anticipated two future 600-kW phases, PGS wanted even more capacity because of the growth of its business and its need for the latest IT technology. In addition, PGS wanted to drive down operating costs through efficient cooling system design and to maximize power capacity at the site.

When the recent project was completed at the end of 2013, the Weybridge Data Center housed the additional high-density IT within the footprint of the existing data hall. The latest ecofris solution was deployed in a chillerless design, which limited the increase in power demand.

Keysource approached the design by looking at ways to maximize the use of white space for IT and to remove the power overhead of running mechanical cooling, even for a very limited number of hours a year. This would maximize the power capacity available to the IT equipment. While the annualized PUE improved only marginally, the biggest design change was the reduced peak PUE. This change enabled an increase in IT design load from 1.8 MW to 2.7 MW within the same footprint. With just over 5 kW per square meter (m²), PGS can deploy 30 kW in any cabinet up to the maximum total installed IT capacity (see Figure 6).
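The link between peak PUE and IT capacity can be sketched with simple arithmetic. If the site's electrical supply is the fixed constraint, the IT load it can support is the supply divided by the worst-case (peak) PUE. The supply figure and both peak PUE values below are hypothetical, chosen only so that the result matches the 1.8 MW and 2.7 MW design loads quoted above; they are not figures from PGS or Keysource.

```python
def max_it_load_kw(site_supply_kw: float, peak_pue: float) -> float:
    """IT load a fixed electrical supply can support at a given peak PUE."""
    if site_supply_kw <= 0 or peak_pue < 1.0:
        raise ValueError("supply must be positive and peak PUE at least 1.0")
    return site_supply_kw / peak_pue


# All three values are illustrative assumptions, not published site data.
SITE_SUPPLY_KW = 2970.0         # hypothetical fixed supply to the data center
PEAK_PUE_WITH_CHILLERS = 1.65   # hypothetical peak PUE with chillers running
PEAK_PUE_CHILLERLESS = 1.10     # hypothetical peak PUE without chillers

before = max_it_load_kw(SITE_SUPPLY_KW, PEAK_PUE_WITH_CHILLERS)
after = max_it_load_kw(SITE_SUPPLY_KW, PEAK_PUE_CHILLERLESS)

print(f"IT capacity with chillers: {before:.0f} kW")
print(f"IT capacity chillerless:   {after:.0f} kW")
print(f"Increase: {100 * (after - before) / before:.0f}%")
```

The point of the sketch is that annualized PUE governs the energy bill, while peak PUE governs how much of a fixed supply must be held in reserve for cooling on the hottest days.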

Disruptive Cooling Design

Developments in technology and the wider allowable range of temperatures per ASHRAE TC 9.9 enabled PGS to adopt higher server inlet temperatures when ambient temperatures are higher. This change allows PGS to operate at the optimum temperature for the equipment most of the time (normally 72°F [22°C]), lowering the IT part of the PUE metric (see Figure 7).

In this facility, server inlet temperatures are raised only when ambient outside air is too warm to maintain 72°F (22°C). Running at higher temperatures at other times would actually increase server fan power across different equipment, which would also increase UPS loads. Running the facility at optimal efficiency all of the time reduces the overall facility load, even though PUE may rise as a result of the decrease in server fan power. With site facilities management (FM) teams trained in operating the mechanical systems, this balance is fine-tuned through operation and as additional IT equipment is commissioned within the facility, ensuring performance is maintained at all times.
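The observation that PUE can rise while total load falls follows from server fans being counted as IT load. The sketch below compares two operating points with entirely hypothetical numbers (none are measurements from Weybridge): a warm-inlet case where server fans work harder, and an optimal-inlet case where the facility spends slightly more on cooling but the servers spend much less on fans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """Power breakdown in kW; every value used below is hypothetical."""
    it_compute_kw: float   # IT power excluding server fans
    server_fans_kw: float  # server fan power, counted as IT load in PUE
    cooling_kw: float      # facility cooling and other non-IT overhead

    @property
    def it_kw(self) -> float:
        return self.it_compute_kw + self.server_fans_kw

    @property
    def total_kw(self) -> float:
        return self.it_kw + self.cooling_kw

    @property
    def pue(self) -> float:
        return self.total_kw / self.it_kw


warm_inlet = OperatingPoint(it_compute_kw=900.0, server_fans_kw=100.0, cooling_kw=100.0)
optimal_inlet = OperatingPoint(it_compute_kw=900.0, server_fans_kw=60.0, cooling_kw=110.0)

for name, point in (("warm inlet", warm_inlet), ("optimal inlet", optimal_inlet)):
    print(f"{name:>13}: total {point.total_kw:.0f} kW, PUE {point.pue:.3f}")
```

With these illustrative inputs the optimal-inlet case draws 30 kW less overall yet reports a higher PUE, because the denominator (IT load) shrank faster than the numerator. This is why total facility load, not PUE alone, is the quantity to minimize.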

With innovation central to the improved performance of the data center, Keysource delivered more than the cooling: it also supplied modular, highly efficient UPS systems that provide 96% efficiency at facility loads above 25%, plus facility controls that provide automated optimization.
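As a rough indication of what 96% UPS efficiency means in power terms, the sketch below estimates UPS input power and losses for a given output load. It assumes, purely for illustration, that the full 2.7 MW IT design load is served through the UPS and that efficiency is exactly 96% at that load; the function names are hypothetical.

```python
def ups_input_kw(output_kw: float, efficiency: float) -> float:
    """Input power a UPS draws to deliver output_kw at the given efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in the range (0, 1]")
    return output_kw / efficiency


def ups_loss_kw(output_kw: float, efficiency: float) -> float:
    """Power dissipated in the UPS itself."""
    return ups_input_kw(output_kw, efficiency) - output_kw


IT_DESIGN_LOAD_KW = 2700.0  # upgraded IT design load quoted in the article
UPS_EFFICIENCY = 0.96       # quoted efficiency, assumed to hold at this load

print(f"UPS input:  {ups_input_kw(IT_DESIGN_LOAD_KW, UPS_EFFICIENCY):.1f} kW")
print(f"UPS losses: {ups_loss_kw(IT_DESIGN_LOAD_KW, UPS_EFFICIENCY):.1f} kW")
```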

A Live Environment

Working in a live data center environment within an office building was never going to be risk free. Keysource built a temporary wall within the existing data center to divide the live operational equipment from the live project area (see Figure 8). Cooling, power, and data for the live equipment are not routed under a raised floor and are delivered from the same end of the data center. Therefore, the dividing screen had limited impact on the live environment, with only some minor modifications needed to the fire detection and suppression systems.

Keysource also manages the data center facility for PGS, which meant that the FM and project teams could work closely together in planning the upgrade. As a result, facilities management considerations were included in all design and construction planning to minimize risk to the operational data center and to reduce the impact on other business operations at the site.

Upon completing the project, a full integrated-system test of the new equipment was undertaken ahead of removing the dividing partition. This test not only covered the function of electrical and mechanical systems but also tested the capability of the cooling to deliver the 30 kW/rack and the target design efficiency. Using rack heaters to simulate load allowed detailed testing to be carried out ahead of the deployment of the new IT technology (see Figure 9).

Results

Phase two was completed in April 2014, and as a result the facility’s power density improved by approximately 50%, with the total IT capacity now scalable up to 2.7 MW. This has been achieved within the same internal footprint. The facility can now accommodate up to 188 rack positions, supporting up to 30 kW per rack. In addition, the PUE L2,YC of 1.15* was maintained (see Figure 10).
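The headline figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers quoted in the article (1.8 MW, 2.7 MW, 188 rack positions, 30 kW per rack); the helper names are hypothetical. It shows why 30 kW is available in any rack but not in every rack at once.

```python
ORIGINAL_IT_KW = 1800.0   # original three-phase design capacity
UPGRADED_IT_KW = 2700.0   # design capacity after the upgrade
RACK_POSITIONS = 188
MAX_RACK_KW = 30.0


def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return 100.0 * (new - old) / old


def racks_at_full_density(total_it_kw: float, rack_kw: float) -> int:
    """How many racks can run at rack_kw simultaneously within total_it_kw."""
    return int(total_it_kw // rack_kw)


def average_rack_kw(total_it_kw: float, rack_positions: int) -> float:
    """Average load per rack if capacity is spread across every position."""
    return total_it_kw / rack_positions


print(f"Capacity increase: {percent_increase(ORIGINAL_IT_KW, UPGRADED_IT_KW):.0f}%")
print(f"Racks at 30 kW simultaneously: {racks_at_full_density(UPGRADED_IT_KW, MAX_RACK_KW)}")
print(f"Average per rack across all positions: {average_rack_kw(UPGRADED_IT_KW, RACK_POSITIONS):.1f} kW")
```

Filling all 188 positions at 30 kW would require 5,640 kW, more than twice the installed IT capacity, so the 30 kW figure describes per-rack flexibility rather than a simultaneous total.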

The data center upgrade has been hailed as a resounding success, earning PGS and Keysource a Brill Award for Efficient IT from Uptime Institute. PGS is absolutely delighted to have the quality of its facility recognized by a judging panel of industry leaders and to receive a Brill Award.

Direct and Indirect Cooling Systems

Keysource hosted an industry expert roundtable that provided additional insights and debate on two pertinent cooling topics highlighted by the PGS story. Copies of the resulting white papers can be obtained at http://www.keysource.co.uk/data-centre-white-papers.aspx

An organization requiring high availability is unlikely to install a direct fresh air system without 100% backup on the mechanical cooling. This is because the risks associated with the unknowns of what could happen outside, however infrequent, are generally out of the operator’s control.

The density of IT equipment has no impact on the choice between direct and indirect designs. It is the control of air and the method of air delivery within the space that dictate capacity and air volume requirements. High-density environments may call for additional consideration of backup systems and of the control strategy for switching between cooling methods, because temperatures can rise in very short periods, but this depends on each individual design.

Following the agreement of the roundtable that direct fresh air is going to require some sort of a backup system in order to meet availability and customer risk requirements, it is worth considering what benefits might exist for opting for either this or indirect design.

Partly because solutions in these two areas differ and partly because other variables are site specific, there are not many clear benefits either way, but a few considerations stand out:

• Indirect systems pose less or no risk from external pollutants and contaminants.

• Indirect systems do not require integration into the building fabric, where a direct system often needs large ducts or modifications to the shell. This can increase complexity and cost if, due to space or building height, it is even achievable.

• Direct systems often require more humidity control, depending on which ranges are to be met.

With most efficient systems, there is some form of adiabatic cooling. With direct systems there is often a reliance on water to provide capacity rather than simply improve efficiency. In this case there is a much greater reliance on water for normal operation and to maintain availability, which can lead to the need for water storage or other measures. The metric of water usage effectiveness (WUE) needs to be considered.
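For readers unfamiliar with WUE, it is commonly defined as annual site water usage in liters divided by IT equipment energy in kilowatt-hours. The sketch below computes it for a hypothetical facility; the water volume and IT load are invented for illustration and do not describe the PGS site.

```python
HOURS_PER_YEAR = 8760


def annual_it_energy_kwh(average_it_load_kw: float) -> float:
    """IT energy over a year at a constant average load."""
    return average_it_load_kw * HOURS_PER_YEAR


def wue_l_per_kwh(annual_water_liters: float, it_energy_kwh: float) -> float:
    """Water usage effectiveness: liters of water per kWh of IT energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return annual_water_liters / it_energy_kwh


# Hypothetical inputs, for illustration only.
AVERAGE_IT_LOAD_KW = 1000.0
ANNUAL_WATER_LITERS = 4_380_000.0

it_energy = annual_it_energy_kwh(AVERAGE_IT_LOAD_KW)
print(f"IT energy: {it_energy:,.0f} kWh/year")
print(f"WUE: {wue_l_per_kwh(ANNUAL_WATER_LITERS, it_energy):.2f} L/kWh")
```

Tracking WUE alongside PUE makes the trade-off visible: an adiabatic design may lower PUE while raising water consumption.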

Many data center facilities are already built with very inefficient cooling solutions. In such cases, direct fresh air solutions provide an excellent opportunity to retrofit and run as the primary method of cooling, with the existing inefficient systems as backup. Because the backup system is already in place, this is often a very affordable option with a clear ROI.

One of the biggest advantages of an indirect system is the potential for zero refrigeration. Half of the U.S. could take this route, and even places people would never consider, such as Madrid or even Dubai, could benefit. This inevitably requires the use of, and reliance on, large volumes of water, as well as the acceptance of increasing server inlet temperatures during warmer periods.

Mike Turff is global compute resources manager for the Data Processing division of Petroleum Geo-Services (PGS), a Norway-based leader in oil exploration and production services. Mr. Turff has responsibility for building and managing the PGS supercomputer centers in Houston, TX; London, England; Kuala Lumpur, Malaysia; and Rio de Janeiro, Brazil, as well as the smaller satellite data centers across the world. He has worked for over 25 years in high-performance computing, building and running supercomputer centers in places as diverse as Nigeria and Kazakhstan and for Baker Hughes, where he built the Eastern Hemisphere IT Services organization with IT Solutions Centers in Aberdeen, Scotland; Dubai, UAE; and Perth, Australia.

As Sales and Marketing director, Rob Elder is responsible for setting and implementing the strategy for Keysource. Based in Sussex in the United Kingdom, Keysource is a data center design, build, and optimization specialist. During his 10 years at Keysource, Mr. Elder has also held marketing and sales management positions and management roles in Facilities Management and Data Centre Management Solutions Business Units.

UI 2020
