Too big to fail? Facebook’s global outage

The bigger the outage, the greater the need for explanations and, most importantly, for taking steps to avoid a repeat.

By any standards, the outage that affected Facebook on Monday, October 4th, was big. For more than six hours, Facebook and its other businesses, including WhatsApp, Instagram and Oculus VR, disappeared from the internet, not just in a few regions or countries, but globally. So many users and machines kept retrying these websites that it caused a slowdown of the internet and issues with cellular networks.

While Facebook is large enough to ride through the immediate financial impact, that impact should not be dismissed. Market watchers estimate that the outage cost Facebook roughly $60 million in revenues over the more than six hours it lasted. The company’s shares fell 4.9% on the day, which translated into more than $47 billion in lost market cap.
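As a rough back-of-the-envelope check on those figures, the short calculation below derives an hourly revenue loss and the market capitalization implied by the share-price fall. It uses only the approximate numbers quoted above, so the results are indicative at best; the variable names are ours.

```python
# Approximate figures quoted in the article.
revenue_lost_usd = 60e6      # "roughly $60 million"
outage_hours = 6             # "more than six hours", so the hourly rate is an upper bound
share_drop = 0.049           # shares fell 4.9% on the day
market_cap_lost_usd = 47e9   # "more than $47 billion"

# Average revenue lost per hour of outage.
revenue_per_hour = revenue_lost_usd / outage_hours

# Market cap before the fall, implied by the loss and the percentage drop.
implied_market_cap = market_cap_lost_usd / share_drop

print(f"Revenue lost per hour: about ${revenue_per_hour / 1e6:.0f} million")
print(f"Implied pre-drop market cap: about ${implied_market_cap / 1e9:.0f} billion")
```

On these inputs the revenue loss works out to about $10 million an hour, and the implied market cap to a little under $1 trillion.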

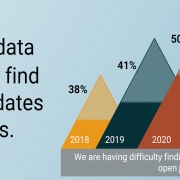

Facebook may recover those losses, but the bigger ramifications may be reputational and legal. Uptime Institute research shows that the level of outages from hyperscale operators is similar to that experienced by colocation companies and enterprises — despite their huge investments in distributed availability zones and global load and traffic management. In 2020, Uptime Institute recorded 21 cloud/internet giant outages, with associated financial and reputational damage. With antitrust, data privacy and, most recently, children’s mental health concerns swirling about Facebook, the company is unlikely to welcome further reputational and legal scrutiny.

What was the cause of Facebook’s outage? The company said there was an errant command issued during planned network maintenance. While an automated auditing tool would ordinarily catch an errant command, there was a bug in the tool that didn’t properly stop it. The command led to configuration changes on Facebook’s backbone routers that coordinate network traffic among its data centers. This had a cascading effect that halted Facebook’s services.
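Facebook has not published how its auditing tool works. Purely as an illustration of the kind of pre-change check involved, the sketch below rejects a change that would withdraw too large a share of the announced prefixes. The function `audit_change`, the `AuditResult` type and the 20% threshold are all hypothetical, and the prefixes are reserved documentation ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditResult:
    allowed: bool
    reason: str


def audit_change(announced, after_change, max_withdrawn_fraction=0.2):
    """Hypothetical guard: block a change that withdraws too many prefixes.

    announced     -- set of prefixes advertised before the change
    after_change  -- set of prefixes that would be advertised afterwards
    """
    if not announced:
        return AuditResult(True, "no prefixes are announced, nothing to withdraw")
    withdrawn = announced - after_change
    fraction = len(withdrawn) / len(announced)
    if fraction > max_withdrawn_fraction:
        return AuditResult(False, f"change withdraws {fraction:.0%} of announced prefixes")
    return AuditResult(True, "change is within the allowed limit")


# Documentation-only prefixes (RFC 5737), used here as stand-ins.
announced = {"192.0.2.0/24", "198.51.100.0/24", "203.0.113.0/24"}

print(audit_change(announced, announced))  # no withdrawals: allowed
print(audit_change(announced, set()))      # everything withdrawn: blocked
```

A check like this is only as good as its own testing, which is the point the outage illustrates: a bug in the guard lets the errant command through.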

Setting aside theories of deliberate sabotage, there is evidence that Facebook’s internet routes, which are advertised to other networks using the Border Gateway Protocol (BGP), were withdrawn by mistake as part of these configuration changes.

BGP is a mechanism for large internet routers to constantly exchange information about the possible routes for them to deliver network packets. BGP effectively provides very long lists of potential routing paths that are constantly updated. When Facebook stopped broadcasting its presence — something observed by sites that monitor and manage internet traffic — other networks could not find it.
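To make the effect of a withdrawal concrete, here is a toy routing table, not a BGP implementation. It assumes a single router that stores announced prefixes and picks the most specific match; the class name `RoutingTable`, the peer label and the documentation prefix are illustrative only.

```python
import ipaddress


class RoutingTable:
    """Toy table mapping announced prefixes to a next hop."""

    def __init__(self):
        self._routes = {}  # ip_network -> next-hop label

    def announce(self, prefix, next_hop):
        self._routes[ipaddress.ip_network(prefix)] = next_hop

    def withdraw(self, prefix):
        self._routes.pop(ipaddress.ip_network(prefix), None)

    def lookup(self, address):
        """Return the next hop for the most specific matching prefix, or None."""
        addr = ipaddress.ip_address(address)
        matches = [net for net in self._routes if addr in net]
        if not matches:
            return None  # no route: the destination cannot be found
        best = max(matches, key=lambda net: net.prefixlen)
        return self._routes[best]


table = RoutingTable()
table.announce("192.0.2.0/24", "peer-A")
before = table.lookup("192.0.2.10")   # route known while the prefix is announced

table.withdraw("192.0.2.0/24")
after = table.lookup("192.0.2.10")    # no route once the prefix is withdrawn

print(before, after)
```

Once the prefix is withdrawn, the lookup has nothing to match, which is the position other networks were in when Facebook stopped announcing its routes.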

One factor that exacerbated the outage is that Facebook has an atypical internet infrastructure design, specifically related to BGP and another three-letter acronym: DNS, the domain name system. While BGP functions as the internet’s routing map, the DNS serves as its address book. (The DNS translates human-friendly names for online resources into machine-friendly internet protocol addresses.)

Facebook has its own DNS registrar, which manages and broadcasts its domain names. Because of Facebook’s architecture, which was designed to improve flexibility and control, the Facebook registrar went offline when the BGP configuration error happened. (As an aside, this caused some domain tools to erroneously show that the Facebook.com domain was available for sale.) As a result, internet service providers and other networks simply could not find Facebook’s network.
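The dependency described here can be sketched in a few lines: if the server that answers for a domain sits inside a prefix that is no longer routable, name resolution fails even though the records themselves are intact. This is a simplified model under our own assumptions; the server address, the zone contents and the `resolve` function are hypothetical.

```python
import ipaddress

# Hypothetical authoritative server and zone data, using documentation addresses.
AUTHORITATIVE_SERVER = "192.0.2.53"
ZONE = {"www.example.com": "192.0.2.80"}


def resolve(name, reachable_prefixes):
    """Resolve a name, but only if the authoritative server can be reached."""
    server = ipaddress.ip_address(AUTHORITATIVE_SERVER)
    reachable = any(server in ipaddress.ip_network(p) for p in reachable_prefixes)
    if not reachable:
        raise LookupError(f"no route to the name server for {name}")
    return ZONE[name]


print(resolve("www.example.com", ["192.0.2.0/24"]))  # prefix announced: lookup works

try:
    resolve("www.example.com", [])                   # prefix withdrawn: lookup fails
except LookupError as error:
    print("resolution failed:", error)
```

The second call fails before the zone data is ever consulted, which is why the address book became unusable as soon as the routing map lost its entries.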

How did this then cause a slowdown of the internet? Billions of systems, including mobile devices running a Facebook-owned application in the background, were constantly requesting new “coordinates” for these sites. These requests are ordinarily cached in servers located at the edge, but when the BGP routes disappeared, so did those caches. Requests were routed upstream to large internet servers in core data centers.
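The load shift can be illustrated with a minimal time-to-live cache. The sketch assumes a cache that stores only successful answers; real resolvers also cache failures for a short time, which this model deliberately leaves out. The `EdgeCache` class, the upstream functions and the 300-second lifetime are assumptions for illustration.

```python
class EdgeCache:
    """Toy cache: answers are reused until their time-to-live expires."""

    def __init__(self, ttl_seconds, upstream):
        self._ttl = ttl_seconds
        self._upstream = upstream
        self._entries = {}          # name -> (answer, expiry time)
        self.upstream_queries = 0

    def get(self, name, now):
        entry = self._entries.get(name)
        if entry is not None and entry[1] > now:
            return entry[0]         # served from the cache
        self.upstream_queries += 1  # cache miss: ask upstream
        answer = self._upstream(name)
        if answer is not None:
            self._entries[name] = (answer, now + self._ttl)
        else:
            self._entries.pop(name, None)
        return answer


def healthy_upstream(name):
    return "192.0.2.80"             # documentation address as a stand-in


def failing_upstream(name):
    return None                     # upstream cannot answer


def count_upstream_queries(upstream, requests=100, ttl_seconds=300):
    """Send one request per second and count how many reach upstream."""
    cache = EdgeCache(ttl_seconds, upstream)
    for second in range(requests):
        cache.get("www.example.com", now=second)
    return cache.upstream_queries


print(count_upstream_queries(healthy_upstream))  # one query, the rest are cache hits
print(count_upstream_queries(failing_upstream))  # every request goes upstream
```

With a working upstream, 100 requests produce a single upstream query. With nothing to cache, all 100 travel upstream.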

The situation was compounded by a self-reinforcing feedback loop, caused in part by application logic and in part by user behavior. Web applications will not accept a BGP routing error as an answer to a request and so they retry, often aggressively. Users and their mobile devices running these applications in the background also won’t accept an error and will repeatedly reload the website or reboot the application. The result was an increase of up to 40% in DNS request traffic, which slowed down other networks (and, therefore, increased latency and request timeouts for other web applications). The increased traffic also reportedly led to issues with some cellular networks, including users being unable to make voice-over-IP phone calls.
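The arithmetic behind aggressive retries is easy to sketch. The function below counts how many attempts a single client makes in a fixed window, first with a constant one-second retry interval and then with the delay doubling after each failure (exponential backoff, a common mitigation that we introduce here only for comparison). The function name and all parameters are illustrative assumptions, not measurements from the outage.

```python
def attempts_in_window(window_seconds, first_delay, multiplier=1.0):
    """Count attempts made by one client that never gets a successful answer.

    The first attempt happens at t = 0. Each later attempt follows the previous
    one after `delay` seconds, and `delay` is multiplied by `multiplier` after
    every failure (1.0 means a fixed retry interval).
    """
    attempts = 1
    elapsed = 0.0
    delay = float(first_delay)
    while True:
        elapsed += delay
        if elapsed > window_seconds:
            return attempts
        attempts += 1
        delay *= multiplier


fixed = attempts_in_window(60, first_delay=1)                    # retry every second
backoff = attempts_in_window(60, first_delay=1, multiplier=2.0)  # doubling delay

print(f"Fixed interval: {fixed} attempts in one minute")
print(f"Exponential backoff: {backoff} attempts in one minute")
```

Multiplied across billions of devices, the difference between the two retry policies is the difference between a background trickle and a surge of traffic.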

Facebook’s outage was initially caused by routine network maintenance gone wrong, but the error was missed by an auditing tool and propagated via an automated system, which were likely both built by Facebook. The command error reportedly blocked remote administrators from reverting the configuration change. What’s more, the people with access to Facebook’s physical routers (in Facebook’s data centers) did not have access to the network/logical system. This suggests two things: the network maintenance auditing tool and process were inadequately tested, and there was a lack of specialized staff with network-system access physically inside Facebook’s data centers.

When the only people who can remedy a potential network maintenance problem rely on the network that is being worked on, it seems obvious that a contingency plan needs to be in place.

Facebook, which like other cloud/internet giants has rigorous processes for applying lessons learned, should be better protected next time. But Uptime Institute’s research shows there are no guarantees: cloud/internet giants are particularly vulnerable to network and software configuration errors, a function of their complexity and the interdependency of many data centers, zones, systems and separately managed networks. Ten of the 21 outages in 2020 that affected cloud/internet giants were caused by software/network errors. That these errors can cause traffic pileups that can then snarl completely unrelated applications globally will further concern all those who depend on publicly shared digital infrastructure, including the internet.

More detailed information about the causes and costs of outages is available in our report Annual outage analysis 2021: The causes and impacts of data center outages.
