Neoclouds: a cost-effective AI infrastructure alternative

Over the past decade, three tech giants have solidified their dominance in the cloud computing market: Amazon Web Services (AWS), Microsoft Azure and Google Cloud. Estimates suggest that by the end of 2023, these companies collectively controlled around two-thirds of global cloud spending, a notable increase from 47% in 2016.

As AI became a key driver of innovation, these hyperscalers expanded their offerings to include a diverse portfolio of tools and services. These include machine learning platforms, GPU-equipped server instances, and custom application-specific integrated circuits (ASICs) designed to facilitate the development and deployment of AI applications.

However, relying on hyperscaler AI infrastructure can prove costly and often falls short of delivering the promised flexibility and scalability of cloud computing. At the start of 2024, hyperscalers struggled to meet the rising demand for GPUs, leading to soaring prices for GPU-backed instances, sometimes exceeding $100 per hour when available. For example, renting four Nvidia H100-powered instances for a single month could cost nearly $300,000.
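The monthly figure follows from simple arithmetic. The sketch below is illustrative only: it assumes an average month of 730 hours, continuous on-demand use and a flat rate of $100 per hour. None of these values come from a provider's price list, and the function name is hypothetical.

```python
# Illustrative only: the hourly rate and hours-per-month are assumptions,
# not figures taken from any provider's price list.
HOURS_PER_MONTH = 730  # average month: 8,760 hours per year / 12


def monthly_cost(instances: int, hourly_rate_usd: float,
                 hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running `instances` continuously for `hours` at a flat rate."""
    return instances * hourly_rate_usd * hours


if __name__ == "__main__":
    print(f"${monthly_cost(4, 100.0):,.0f}")
```

Under these assumptions, four instances come to $292,000 for the month, in line with the "nearly $300,000" quoted above.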

To address the growing demand for AI infrastructure, a new category of cloud providers has emerged, specializing in AI infrastructure as a service. Known as neoclouds, these providers focus on offering GPU-backed servers and virtual machines, often at prices more affordable than those of the hyperscalers. This group includes startups such as CoreWeave, Paperspace, Nebius, Lambda Labs and Vast AI, as well as established players such as OVH, Vultr, DigitalOcean and Rackspace, which are expanding their capabilities to include AI-centric services.

A previous report showed that dedicated GPU infrastructure can be more affordable than public cloud services, but only where utilization of the cluster is high over its lifetime (see Sweat dedicated GPU clusters to beat cloud on cost). In this report, Uptime Intelligence investigates the price differences between hyperscalers and neoclouds.

Hyperscaler versus neocloud

In the previous report, the benchmark price of public cloud services was averaged across three hyperscalers (AWS, Google and Microsoft Azure) and three neoclouds (CoreWeave, Nebius and Lambda Labs).

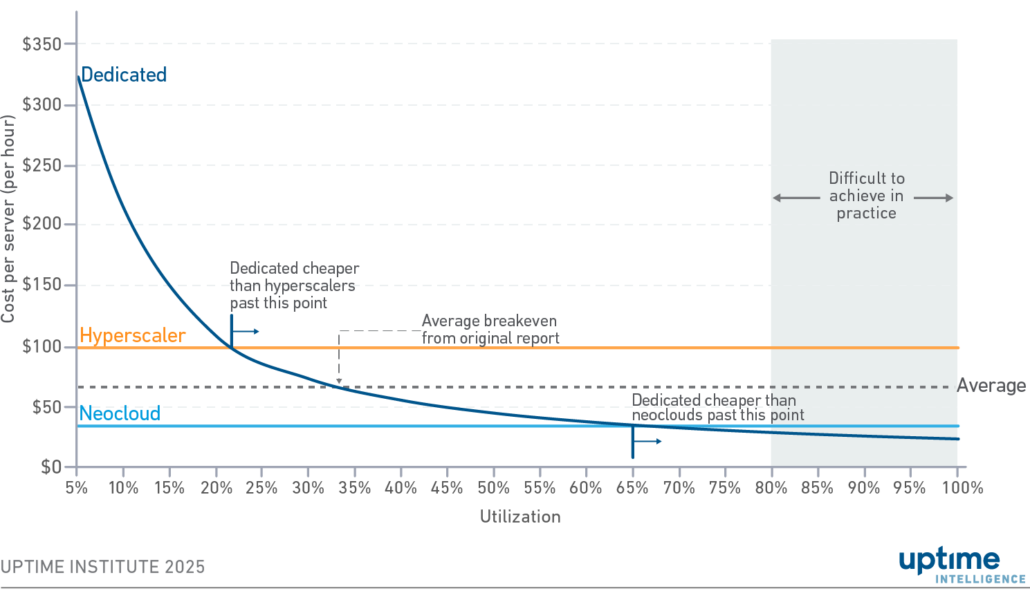

For this report, these groups are separated into two different price benchmarks. Figure 1 shows a unit cost comparison of Nvidia DGX H100 nodes hosted in a US (Northern Virginia) colocation facility against the costs of equivalent cloud instances from these two groups.

Figure 1. Cost per server-hour by utilization for hyperscaler versus neocloud

The average hourly cost of an Nvidia DGX H100 instance when purchased on-demand from a hyperscaler was $98. When an approximately equivalent instance is purchased from a neocloud, the unit cost drops to $34, a substantial 66% saving.
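The saving can be checked directly from the two hourly prices. This is a minimal sketch using the rounded figures quoted above; the function name is hypothetical. On the rounded prices the result is about 65%, so the 66% in the text presumably reflects the unrounded benchmark prices.

```python
def saving_pct(baseline_usd: float, alternative_usd: float) -> float:
    """Percentage saved by paying `alternative_usd` instead of `baseline_usd`."""
    return (baseline_usd - alternative_usd) / baseline_usd * 100


# Rounded hourly prices quoted in the text.
HYPERSCALER_HOURLY_USD = 98.0
NEOCLOUD_HOURLY_USD = 34.0

if __name__ == "__main__":
    pct = saving_pct(HYPERSCALER_HOURLY_USD, NEOCLOUD_HOURLY_USD)
    print(f"{pct:.1f}% saving")
```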

In the previous report, given certain assumptions, the breakeven point for dedicated infrastructure was approximately 33%. This point represents the minimum utilization level at which dedicated infrastructure is cheaper per unit than the public cloud. Specifically, over the amortization period, one-third of the cluster’s capacity needs to be consumed for it to be more cost-effective than the cloud. Note that these calculations do not account for any potential price cuts by the cloud vendor over the same period.

In Figure 1, two breakeven points are observed. When using an established cloud provider, dedicated infrastructure is cheaper than the public cloud if its average utilization levels are maintained at 22% or above. When using a neocloud, this figure rises to 66%.
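One way to relate the two breakeven points is to treat the dedicated cluster's cost per server-hour as fixed, whether or not the capacity is used. This is a simplifying assumption made here for illustration, not the report's full cost model. The effective cost per consumed hour is then the fixed cost divided by utilization, and the breakeven utilization is the fixed cost divided by the cloud price. The function names below are hypothetical.

```python
def effective_unit_cost(fixed_hourly_usd: float, utilization: float) -> float:
    """Cost per consumed server-hour when the cluster cost is fixed."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return fixed_hourly_usd / utilization


def breakeven_utilization(fixed_hourly_usd: float,
                          cloud_hourly_usd: float) -> float:
    """Utilization at which dedicated unit cost equals the cloud price."""
    return fixed_hourly_usd / cloud_hourly_usd


def implied_fixed_cost(cloud_hourly_usd: float, breakeven: float) -> float:
    """Back out the fixed hourly cost implied by a quoted breakeven point."""
    return cloud_hourly_usd * breakeven


if __name__ == "__main__":
    # Rounded prices and breakeven points quoted in the text.
    print(f"{implied_fixed_cost(98.0, 0.22):.2f}")  # hyperscaler benchmark
    print(f"{implied_fixed_cost(34.0, 0.66):.2f}")  # neocloud benchmark
```

Under this assumption, both breakeven points imply a dedicated cost of roughly $22 per server-hour at full utilization ($21.56 and $22.44 on the rounded figures), which suggests the two thresholds are consistent with the same dedicated cost base.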

In practice, it is relatively easy for a dedicated cluster to exceed the 22% threshold, thereby saving costs over a hyperscaler cloud. However, exceeding the 66% threshold would be difficult for all but the most efficient AI deployments (a report on this will follow). In this scenario, many enterprises would save money by using a neocloud rather than deploying their own infrastructure.

What impacts price?

Neoclouds specializing in GPUs are essentially selling access to a third-party product. In general, they are not differentiated by any specific features or capabilities. If two competing providers are offering access to the same GPU in the same region, then price will be the deciding factor in any purchasing decision. These providers are trading in commodities — commodities that are valuable now but whose value is susceptible to change. As a result, GPU cloud providers need to be very cost-effective to win business that would typically go to a hyperscaler.

Neoclouds have available capacity and low prices. So why do hyperscalers not cut their prices to be more in line with the neoclouds? The simple reason is that they do not have to. Hyperscalers have a captive, incumbent user base. Organizations already using the public cloud generally find it easier to remain with the same cloud provider than to add another. A single provider means a single experience: one set of APIs, one management portal and one invoice. Services from a single provider are also likely to be better integrated, with issues resolved more quickly than when dealing with multiple entities.

For many customers, paying more to use their existing hyperscaler for GPU instances is simpler than finding a cheaper alternative. The hassle of setting up additional contracts, training staff and moving data between clouds outweighs the potential savings. Hyperscalers also offer reserved instances and commitment-based enterprise discount schemes to reduce costs (not analyzed in this report), bringing the unit costs closer to those of the neoclouds. Organizations are often comfortable making commitments to incumbent cloud providers, knowing they are likely to continue to use the cloud provider going forward.

The higher prices for GPU-backed instances do not appear to be affecting the hyperscalers’ sales volume. Accessing some AWS instances has become incredibly difficult, enough that AWS allows users to make reservations in advance. Google has also implemented reservations. Such shortages could not exist if customers were not demanding instances at the listed price.

Neoclouds may benefit from lower costs because they do not need to maintain a wide variety of new and legacy infrastructure. Hyperscalers, on the other hand, provide a diverse range of CPUs, GPUs and specialized equipment for various use cases on a larger scale across infrastructure, platform and software services. Hyperscaler clouds typically offer dozens of products, with millions of individual line items for sale. In contrast, neoclouds provide only a handful of product lines, with variations in the tens. This focus allows them to operate with staff who need a narrower range of skills, optimize a homogeneous IT estate at scale and reduce management overheads. These cost savings can then be passed on to customers as lower prices.

However, hyperscalers have other cost benefits that neoclouds lack, most notably vast economies of scale which give them significant purchasing power — their high purchasing volume enables them to secure greater discounts than others. As a result, Uptime Intelligence believes that most of the price difference between high-end GPUs from hyperscalers and neoclouds is due to differences in gross margins rather than cost base.

GPU cloud providers do not position themselves as competitors to hyperscalers but as partners. They propose that they are components of an enterprise’s multi-cloud strategy, delivering specific services alongside the more prominent cloud providers. For example, an organization might use a GPU cloud provider to train a large language model, which is then deployed with a public cloud provider for inference and integration into a more extensive application.

Hyperscalers are also increasingly using neoclouds. Microsoft has agreed to spend $10 billion on infrastructure through CoreWeave by the end of 2029. It seems likely that many neoclouds will be acquired by hyperscalers in the coming years.

The Uptime Intelligence View

For most enterprises, some high-end GPU instances consumed from neoclouds are likely to be cheaper than both public cloud and dedicated infrastructure. Dedicated infrastructure for AI can be more affordable than hyperscaler cloud infrastructure if it is utilized efficiently over its lifetime. However, as neoclouds have limited products, capabilities and regions, most enterprises will continue to use hyperscalers and dedicated infrastructure for core infrastructure requirements (including inference), utilizing neoclouds only for large-scale AI requirements such as training.
