New ASHRAE guidelines challenge efficiency drive

Earlier in 2021, ASHRAE’s Technical Committee 9.9 published the fifth edition of its Thermal Guidelines for Data Processing Environments. The update recommends important changes to data center thermal operating envelopes: it makes the presence of pollutants a factor, and it introduces a new class of IT equipment for high-density computing. In some cases, the new advice can lead operators not only to alter operational practices but also to shift set points, a change that may affect both energy efficiency and contractual service level agreements (SLAs) with data center services providers.

Since the original release in 2004, ASHRAE’s Thermal Guidelines have been instrumental in setting cooling standards for data centers globally. The 9.9 committee collects input from a wide cross-section of the IT and data center industry to promote an evidence-based approach to climatic controls, one which helps operators better understand both risks and optimization opportunities. Historically, most changes to the guidelines pointed data center operators toward further relaxations of climatic set points (e.g., temperature, relative humidity, dew point), which also stimulated equipment makers to develop more efficient air economizer systems.

In the fifth edition, ASHRAE adds some major caveats to its thermal guidance. While the recommendations for relative humidity (RH) extend the range up to 70% (the previous cutoff was 60%), this is conditional on the data hall having low concentrations of pollutant gases. If corrosive gases are present above the set thresholds, ASHRAE now recommends operators keep RH under 50%, which is below its previous recommended limit. To monitor corrosion, operators should place metal strips, known as “reactivity coupons,” in the data hall and measure the thickness of the corrosion layer that forms; the limit is 200 ångströms per month for silver and 300 ångströms per month for copper.

ASHRAE bases its enhanced guidance on an experimental study on the effects of gaseous pollutants and humidity on electronics, performed between 2015 and 2018 with researchers from Syracuse University (US). The experiments found that the presence of chlorine and hydrogen sulfide accelerates copper corrosion under higher humidity conditions. Without chlorine, hydrogen sulfide or similarly strong catalysts, there was no significant corrosion up to 70% RH, even when other, less aggressive gaseous pollutants (such as ozone, nitrogen dioxide and sulfur dioxide) were present.

Because corrosion from chlorine and hydrogen sulfide at 50% RH is still above acceptable levels, ASHRAE suggests operators consider chemical filtration to remove these contaminants.

While the data ASHRAE uses is relatively new, its conclusions echo previous standards. Those acquainted with the environmental requirements of data storage systems may find the guidance familiar — data storage vendors have been following specifications set out in ANSI/ISA-71.04 since 1985 (last updated in 2013). Long after the era of tapes, storage drives (hard disks and solid state alike) remain the foremost victims of corrosion, as their low-temperature operational requirements mean increased moisture absorption and adsorption.

However, many data center operators do not routinely measure gaseous contaminant levels, and so do not monitor for corrosion. If strong catalysts are present but undetected, this might lead to higher-than-expected failure rates even if temperature and RH are within target ranges. Worse still, lowering supply air temperature in an attempt to counter failures might make them more likely, since cooler air at the same moisture content has a higher RH. ASHRAE recommends operators consider a 50% RH limit if they don’t perform reactivity coupon measurements. Somewhat confusingly, it also makes an allowance for following specifications set out in its previous update (the fourth edition), which recommends a 60% RH limit.
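The RH guidance described above amounts to a small decision rule, which can be sketched in Python. This is a minimal illustration based only on the figures quoted in this article, not an implementation of the ASHRAE guidelines: the function name and parameters are hypothetical, and treating a reading exactly at the limit as an exceedance is an assumption.

```python
from typing import Optional

# Coupon corrosion-rate limits quoted in the article (angstroms per month).
SILVER_LIMIT = 200.0
COPPER_LIMIT = 300.0


def recommended_rh_ceiling(silver_rate: Optional[float] = None,
                           copper_rate: Optional[float] = None) -> int:
    """Return the upper RH limit (%) suggested by the fifth-edition guidance.

    Rates are reactivity coupon measurements in angstroms per month.
    Pass None when no coupon measurement is available.
    """
    # Without coupon data, the article says ASHRAE recommends considering
    # a 50% limit. (The fourth-edition allowance of 60% is not modeled here.)
    if silver_rate is None or copper_rate is None:
        return 50
    # Both coupons below their limits: the extended 70% range applies.
    # Assumption: a reading exactly at the limit counts as an exceedance.
    if silver_rate < SILVER_LIMIT and copper_rate < COPPER_LIMIT:
        return 70
    # Corrosive gases above threshold: keep RH under 50%.
    return 50


print(recommended_rh_ceiling(150, 250))  # clean data hall
print(recommended_rh_ceiling(250, 100))  # silver coupon over its limit
print(recommended_rh_ceiling())          # no coupon measurements
```

A site that falls into the 50% branch because of measured corrosion would, per the guidance above, also be a candidate for chemical filtration, since lowering RH alone may not bring corrosion down to acceptable levels.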

Restricted envelope for high-density IT systems

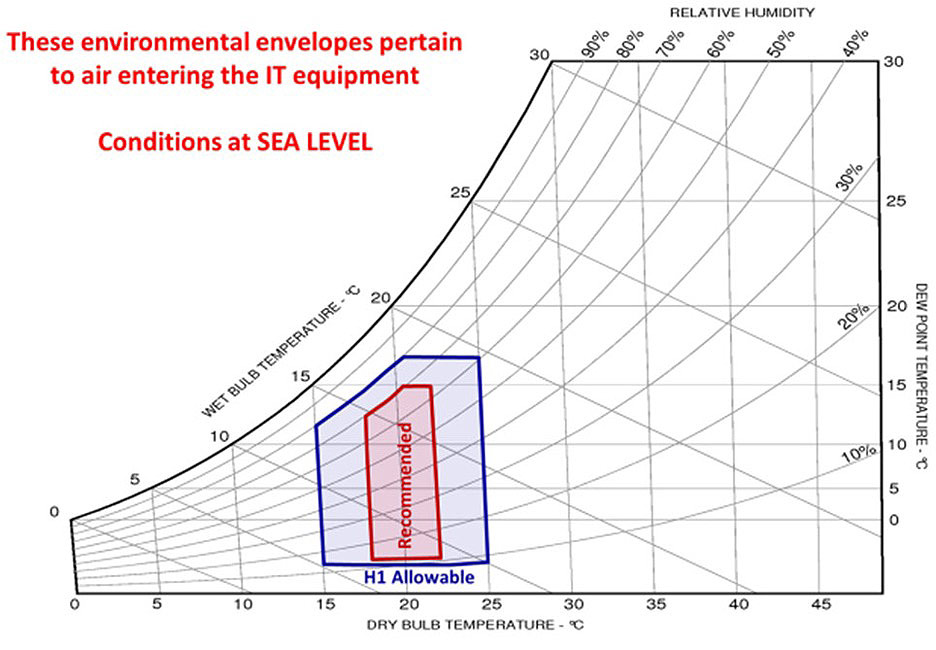

Another major change in the latest update is the addition of a new class of IT equipment, separate from the pre-existing classes of A1 through A4. The new class, H1, includes systems that tightly integrate a number of high-powered components (server processors, accelerators, memory chips and networking controllers). ASHRAE says these high-density systems need narrower air temperature bands to meet their cooling requirements: it recommends 18°C/64.4°F to 22°C/71.6°F (as opposed to 18°C/64.4°F to 27°C/80.6°F for classes A1 through A4). The allowable envelope is tighter as well, with an upper limit of 25°C/77°F for class H1 instead of 32°C/89.6°F (see Figure 1).

Source: Thermal Guidelines for Data Processing Environments, 5th Edition, ASHRAE

This is because, according to ASHRAE, there is simply not enough room in some dense systems for the higher performance heat sinks and fans that could keep components below temperature limits across the generic (classes A1 through A4) recommended envelope. ASHRAE does not stipulate what makes a system class H1, leaving it to the IT vendor to specify its products as such.
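To make the difference between the envelopes concrete, the following Python sketch classifies a supply air temperature against the figures quoted above. It is illustrative only: the `Envelope` type, the `"A"` key and the function name are hypothetical, the single 32°C allowable limit for the generic classes is the figure this article cites (the individual A classes have their own allowable limits in the guidelines, which are not reproduced here), and lower allowable limits are omitted because the article does not give them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    recommended_low_c: float
    recommended_high_c: float
    allowable_high_c: float


# Temperatures in degrees Celsius, as quoted in the article.
ENVELOPES = {
    "A": Envelope(18.0, 27.0, 32.0),   # generic classes A1 through A4
    "H1": Envelope(18.0, 22.0, 25.0),  # high-density class
}


def classify_supply_temp(equipment_class: str, temp_c: float) -> str:
    """Say where a supply air temperature sits for a given equipment class."""
    env = ENVELOPES[equipment_class]
    if temp_c < env.recommended_low_c:
        return "below recommended"
    if temp_c <= env.recommended_high_c:
        return "recommended"
    if temp_c <= env.allowable_high_c:
        return "allowable"
    return "above allowable"


# A 26 degree C supply temperature suits generic equipment but not H1.
print(classify_supply_temp("A", 26.0))
print(classify_supply_temp("H1", 26.0))
```

The example shows why a data hall tuned to the upper end of the generic recommended band cannot simply host H1 equipment: the same supply temperature falls outside even the allowable H1 range.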

There are some potentially far-reaching implications of these new envelopes. Operators have over the past decade built and equipped a large number of facilities based on ASHRAE’s previous guidance. Many of these relatively new data centers take advantage of the recommended temperature bands by using less mechanical refrigeration and more economization. In several locations — Dublin, London and Seattle, for example — it is even possible for operators to completely eliminate mechanical cooling yet stay within ASHRAE guidelines by marrying the use of evaporative and adiabatic air handlers with advanced air-flow design and operational discipline. The result is a major leap in energy efficiency and the ability to support more IT load from a substation.

Such optimized facilities will not typically lend themselves well to the new envelopes. The fact that most of these data centers can support 15- to 20-kilowatt IT racks doesn’t help either, since H1 is a new equipment class that requires a lower maximum temperature regardless of the rack’s density. To maintain the energy efficiency of highly optimized new data center designs, dense IT may need its own dedicated area with independent cooling. Indeed, ASHRAE says that operators should separate H1 and other more restricted equipment into areas with their own controls and cooling equipment.

Uptime will be watching with interest how colocation providers, in particular, will handle this challenge, as their typical SLAs depend heavily on the ASHRAE thermal guidelines. What may be considered an oddity today may soon become common, given that semiconductor power keeps escalating with every generation. Facility operators may deploy direct liquid cooling for high-density IT as a way out of this bind.