Density choices for AI training are increasingly complex

When it comes to generative AI models, the belief that bigger is better is an enduring one — shaping a wide array of high-stakes decisions from corporate investments to energy and permitting policy to technology export controls.

A defining technical feature in the pursuit of ever-bigger models is denser compute hardware. This makes sense: as problems demand more compute, the incentive grows to compress the footprint to maximize performance. When many processors (and their memory banks) are tightly coupled using latency-optimized interconnect topology, control messages and data pass through fewer hops (switching silicon) and travel shorter distances. All those nanoseconds add up — and when hardware costs millions of dollars, the performance degradation due to added latency matters.

While the idea that density can yield extra performance is clear, the balance point against costs (whether financial or in added delays and operational risks) remains obscure. Some level of densification can improve performance while lowering costs, an economic concept intuitively understood by data center professionals. However, strong densification will add costs, both in IT hardware and facility upgrades. The range of infrastructure density targets becomes wider with each successive GPU generation, and most organizations will find that selecting the right compute density level is not a trivial matter.

Dazzled by the headlines

The new IT systems built around Blackwell, Nvidia’s latest family of AI accelerators for data center applications, illustrate the point. The flagship NVL72 product, which unites 72 Blackwell GPUs using a copper-based interconnect fabric in a rack-scale system, is rated at 132 kW in a tall but otherwise fairly common 19-inch rack. By any standards, this density is extreme — according to the Uptime Institute Global Data Center Survey 2024, only about 1% of data center operators report having racks exceeding 100 kW, and these mostly run traditional high-performance technical computing workloads.

Just a few small rows of the Blackwell rack systems can consume as much power capacity as a sizable data hall filled with hundreds of “normal” racks. This concentration of capacity puts extra demands on power distribution equipment and floor loading tolerances (1.4 metric tons per rack). It also mandates the use of direct liquid cooling (DLC) combined with high-density air cooling — at full system load, the cold plates handle around 100 kW of thermal power, while the air exhaust exceeds 25 kW.

The next step down on the density scale is 64-GPU racks, comprising eight liquid-cooled server systems connected via high-speed fiber only and missing the copper fabric. With a lower thermal power envelope per GPU, these standard racks can operate within 80 kW to 90 kW and weigh about one metric ton. Although still very high, this is around a third lower in both power consumption and weight when compared with the flagship product.

With this approach, attaining the same training performance as a cluster built around the NVL72 system will likely require 50% more racks — based on Uptime Intelligence estimates that account for chip thermal power and copper fabric advantages. The associated IT hardware and networking costs will offset any savings due to avoided facility power and cooling upgrades. However, the benefit of a faster deployment by several weeks or even months using readily available off-the-shelf power distribution equipment (such as busways, breakers and cables) can be a decisive factor for many organizations.

AI hardware remains spreadable

To work around power delivery, air cooling or floor loading limitations, data center operators can spread the server nodes further apart, with four 8-GPU server frames per rack. This is a straightforward option, reducing fully loaded sustained power to under 50 kW, even with the latest generation of systems. Notably, air cooling becomes technically viable at this power point with good airflow management (e.g., containment and in-row coolers or rear-door systems). However, IT energy efficiency will worsen, as fan power will account for up to 10% of the full load. Still, the benefits of faster deployment and ease of operation compared with upgrading and operating a DLC system will make this a reasonable trade-off for many existing data centers.

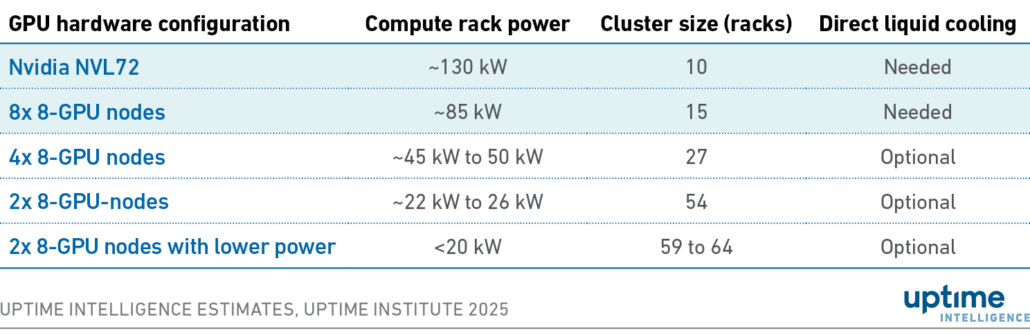

Table 1 shows key density options and their impact on footprint when aiming to achieve compute performance comparable to a cluster built on eight NVL72 racks, with a 10-rack footprint including network racks. Power estimates are not worst-case but assume sustained (continuous) maximum power when operating within ASHRAE’s recommended envelope using variable-speed fans.

Table 1 Example of density options for GPU-based AI training hardware

The density de-escalation options go on: halving the number of server frames per rack again will lower power delivery requirements to around 26 kW sustained maximum (or less with liquid cooling) per rack. Dynamic server power management tools from IT vendors can cap power levels to a lower maximum to stay within the capacity of a single circuit — for example, 22 kW with 400V/32A power delivery or 415V/40 A derated per North American codes for continuous loads — throttling chips if needed.

For less demanding or smaller models, opting for lower-powered GPUs (or alternative accelerators) can easily bring rack power below 20 kW, making it manageable for a broad range of data center facilities with trivial or no electrical or thermal management upgrades.

However, data in the public domain remains insufficient to fully inform trade-off decisions between AI compute cluster performance, costs and deployment speeds. Most pressingly, the industry needs a better understanding of the relative performance profile and cost implications of these IT hardware options, including how interconnect link speeds and topologies affect training performance. Uptime Intelligence’s research will focus on exploring these questions in greater detail.