AI and cooling: toward more automation

AI is increasingly steering the data center industry toward new operational practices, where automation, analytics and adaptive control are paving the way for “dark” — or lights-out, unstaffed — facilities. Cooling systems, in particular, are leading this shift. Yet despite AI’s positive track record in facility operations, one persistent challenge remains: trust.

In some ways, AI faces a similar challenge to that of commercial aviation several decades ago. Even after airlines had significantly improved reliability and safety performance, making air travel not only faster but also safer than other forms of transportation, it still took time for public perceptions to shift.

That same tension between capability and confidence lies at the heart of the next evolution in data center cooling controls. As AI models, of which there are several, improve in performance and become better understood, more transparent and more explainable, the question is no longer whether AI can manage operations autonomously, but whether the industry is ready to trust it enough to turn off the lights.

AI’s place in cooling controls

Thermal management systems, such as computer room air handlers (CRAHs), computer room air conditioners (CRACs) and airflow management, represent the front line of AI deployment in cooling optimization. Their modular nature enables the incremental adoption of AI controls, providing immediate visibility and measurable efficiency gains in day-to-day operations.

AI can now be applied across four core cooling functions:

- Dynamic setpoint management. Continuously recalibrates temperature, humidity and fan speeds to match load conditions.

- Thermal load forecasting. Predicts shifts in demand and makes adjustments in advance to prevent overcooling or instability.

- Airflow distribution and containment. Uses machine learning to balance hot and cold aisles and stage CRAH/CRAC operations efficiently.

- Fault detection, predictive and prescriptive diagnostics. Identifies coil fouling, fan oscillation, or valve hunting before they degrade performance.
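As an illustration of the fault-detection function above, the following minimal sketch flags possible valve hunting by counting direction reversals in a valve-position trace. It is a simple rule-based heuristic rather than a machine learning model, and it does not represent any vendor's method. The function names, the deadband and the reversal threshold are all hypothetical values chosen for the example.

```python
from typing import Sequence


def count_reversals(positions: Sequence[float], deadband: float = 1.0) -> int:
    """Count direction reversals in a valve-position trace (percent open).

    Moves smaller than `deadband` (an assumed noise tolerance) are ignored
    so that sensor jitter is not mistaken for oscillation.
    """
    if not positions:
        return 0
    reversals = 0
    last_direction = 0          # +1 opening, -1 closing, 0 not yet known
    anchor = positions[0]       # last position that counted as a real move
    for position in positions[1:]:
        delta = position - anchor
        if abs(delta) < deadband:
            continue
        direction = 1 if delta > 0 else -1
        if last_direction and direction != last_direction:
            reversals += 1
        last_direction = direction
        anchor = position
    return reversals


def is_hunting(positions: Sequence[float],
               deadband: float = 1.0,
               max_reversals: int = 4) -> bool:
    """Flag a trace whose reversal count exceeds an assumed threshold."""
    return count_reversals(positions, deadband) > max_reversals
```

A valve that swings repeatedly between 40% and 50% open within one sampling window would be flagged, while a steady ramp or small jitter around a fixed position would not. In practice the window length and thresholds would need tuning per site.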

A growing ecosystem of vendors is advancing AI-driven cooling optimization across both air- and water-side applications. Companies such as Vigilent, Siemens, Schneider Electric, Phaidra and Etalytics offer machine learning platforms that integrate with existing building management systems (BMS) or data center infrastructure management (DCIM) systems to enhance thermal management and efficiency.

Siemens’ White Space Cooling Optimization (WSCO) platform applies AI to match CRAH operation with IT load and thermal conditions, while Schneider Electric, through its Motivair acquisition, has expanded into liquid cooling and AI-ready thermal systems for high-density environments. In parallel, hyperscale operators, such as Google and Microsoft, have built proprietary AI engines to fine-tune chiller and CRAH performance in real time. These solutions range from supervisory logic to adaptive, closed-loop control. However, all share a common aim: to improve efficiency without compromising compliance with service level agreements (SLAs) or operator oversight.

The scope of AI adoption

While IT cooling optimization has become the most visible frontier, conversations with AI control vendors reveal that most mature deployments still begin at the facility water loop rather than in the computer room. Vendors often start with the mechanical plant and facility water system because these areas present fewer variables, such as temperature differentials, flow rates and pressure setpoints, and can be treated as closed, well-bounded systems.

This makes the water loop a safer proving ground for training and validating algorithms before extending them to computer room air cooling systems, where thermal dynamics are more complex and influenced by containment design, workload variability and external conditions.

Predictive versus prescriptive: the maturity divide

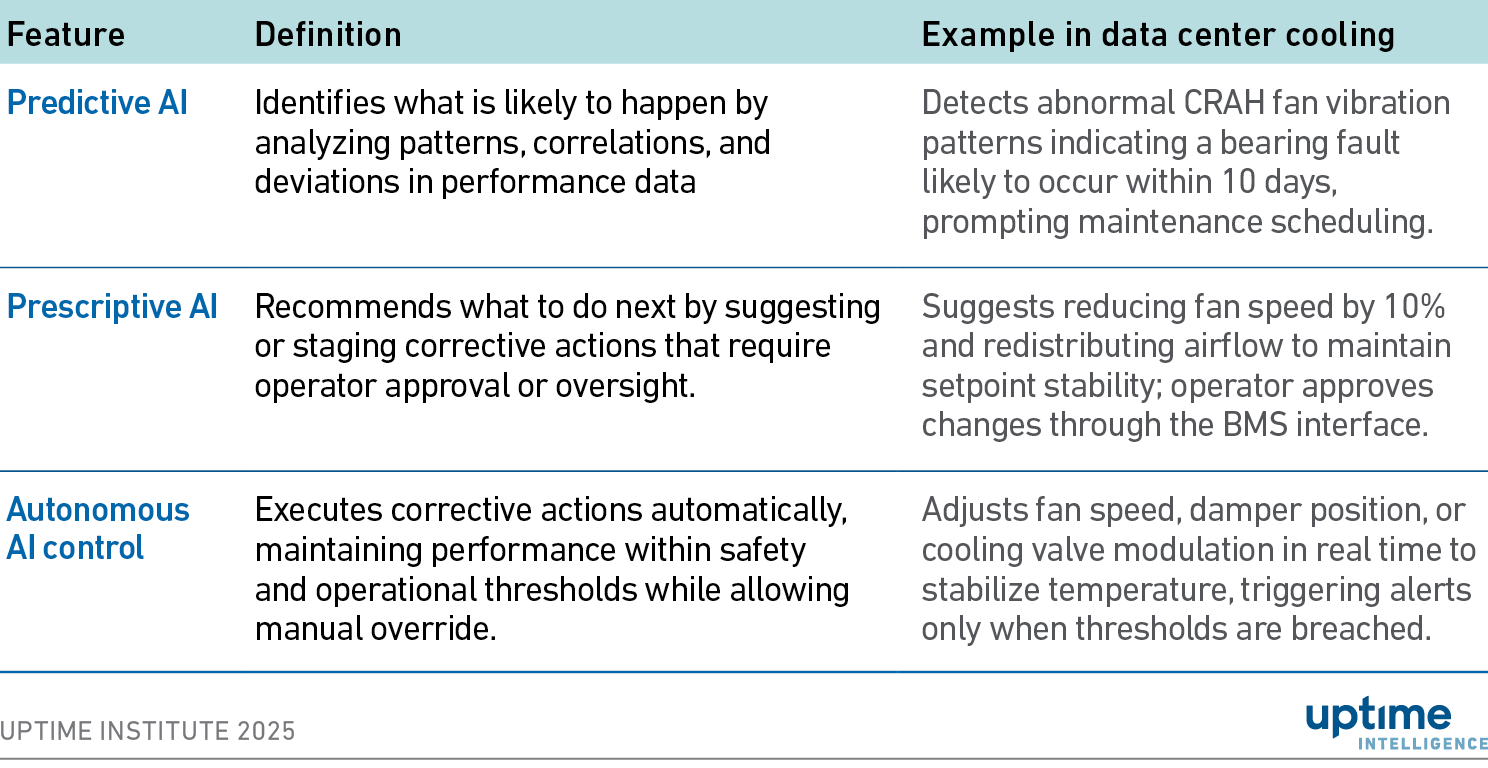

AI in cooling is evolving along a maturity spectrum — from predictive insight to prescriptive guidance and, increasingly, to autonomous control. Table 1 summarizes the functional and operational distinctions among these three stages of AI maturity in data center cooling.

Table 1. Predictive, prescriptive, and autonomous AI in data center cooling

Most deployments today stop at the predictive stage, where AI enhances situational awareness but leaves action to the operator. Achieving full prescriptive control will require not only deeper technical sophistication but also a shift in mindset.

Technically, prescriptive control is more difficult to engineer because the system must not only forecast outcomes but also choose and execute safe corrective actions within operational limits. Operationally, it is harder to trust because it challenges long-held norms about accountability and human oversight.

The divide, therefore, is not only technical but also cultural. The shift from informed supervision to algorithmic control is redefining the boundary between automation and authority.
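To make the technical side of this divide concrete, the sketch below shows one way a prescriptive controller might keep a model's recommendation "within operational limits": the recommended supply-air setpoint is first rate-limited, then clamped to an allowed band. This is an assumption-laden illustration, not a description of any product. The `Limits` fields, the function name and the numeric limits are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Hypothetical operating envelope for a supply-air setpoint (deg C)."""
    min_setpoint_c: float
    max_setpoint_c: float
    max_step_c: float  # largest change allowed per control cycle


def safe_setpoint(current_c: float, recommended_c: float, limits: Limits) -> float:
    """Return the setpoint to apply this cycle.

    The model's recommendation is treated as advice: the change is first
    limited to `max_step_c`, then the result is clamped to the allowed band.
    """
    step = recommended_c - current_c
    step = max(-limits.max_step_c, min(limits.max_step_c, step))
    bounded = current_c + step
    return max(limits.min_setpoint_c, min(limits.max_setpoint_c, bounded))


# Illustrative values only; real limits come from the site's SLA and design.
limits = Limits(min_setpoint_c=18.0, max_setpoint_c=27.0, max_step_c=0.5)
applied = safe_setpoint(current_c=24.0, recommended_c=26.0, limits=limits)
```

Here a recommendation to jump from 24.0°C to 26.0°C is applied as a 0.5°C step, so a poor prediction can only move the room a small, bounded amount before the next measurement arrives.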

AI’s value and its risks

No matter how advanced the technology becomes, cooling exists for one reason: maintaining environmental stability and meeting SLAs. AI-enhanced monitoring and control systems support operating staff by:

- Predicting and preventing temperature excursions before they affect uptime.

- Detecting system degradation early and enabling timely corrective action.

- Optimizing energy performance under varying load profiles without violating SLA thresholds.

Yet efficiency gains mean little without confidence in system reliability. It is also important to clarify that AI in data center cooling is not a single technology. Control-oriented machine learning models, such as those used to optimize CRAHs, CRACs and chiller plants, operate within physical limits and rely on deterministic sensor data. These differ fundamentally from language-based AI models such as GPT, where “hallucinations” refer to fabricated or contextually inaccurate responses.

At the Uptime Network Americas Fall Conference 2025, several operators raised concerns about AI hallucinations: instances where optimization models generate inaccurate or confusing recommendations from event logs. In control systems, such errors often arise from model drift, sensor faults, or incomplete training data, not from the reasoning failures seen in language-based AI. When a model’s understanding of system behavior falls out of sync with reality, it can misinterpret anomalies as trends, eroding operator confidence faster than it delivers efficiency gains.
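One common way to catch a model falling out of sync with reality is to track its prediction error over a rolling window. The sketch below is a minimal example of that idea, assuming the model predicts a temperature that can later be compared with a sensor reading. The class name, window length and error threshold are hypothetical and would need to be set per site.

```python
from collections import deque


class DriftMonitor:
    """Flag possible model drift from a rolling mean absolute error."""

    def __init__(self, window: int = 12, threshold_c: float = 1.0) -> None:
        self.errors = deque(maxlen=window)  # most recent absolute errors
        self.threshold_c = threshold_c      # assumed tolerance in deg C

    def update(self, predicted_c: float, measured_c: float) -> bool:
        """Record one prediction/measurement pair.

        Returns True only once the window is full and the mean absolute
        error exceeds the threshold, so a single outlier does not trip it.
        """
        self.errors.append(abs(predicted_c - measured_c))
        window_full = len(self.errors) == self.errors.maxlen
        mean_error = sum(self.errors) / len(self.errors)
        return window_full and mean_error > self.threshold_c
```

A flag from a monitor like this would not say whether the cause is a failed sensor, a changed room layout or stale training data. It only signals that recommendations should be treated with more caution until someone investigates.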

The discomfort is not purely technical; it is also human. Many data center operators remain uneasy about letting AI take the controls entirely, even as they acknowledge its potential. In AI’s ascent toward autonomy, trust remains the runway still under construction.

Critically, modern AI control frameworks are being designed with built-in safety, transparency and human oversight. For example, Vigilent, a provider of AI-based optimization controls for data center cooling, reports that its optimizing control switches to “guard mode” whenever it is unable to maintain the data center environment within tolerances. Typical triggers include rapid drift or temperature hot spots. Guard mode brings on additional cooling capacity (at the expense of power consumption) to restore SLA-compliant conditions. There is also a manual override option, which enables the operator to take control through monitoring and event logs.

This layered logic provides operational resiliency by enabling systems to fail safely: guard mode ensures stability, manual override guarantees operator authority, and explainability, via decision-tree logic, keeps every AI action transparent. Even in dark-mode operation, alarms and reasoning remain accessible to operators.

These frameworks directly address one of the primary fears among data center operators: losing visibility into what the system is doing.
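The layered logic described above (manual override, guard mode, explainable reasons) can be sketched as a simple priority check. This is a generic illustration and does not represent Vigilent's or any other vendor's implementation. The mode names, the single inlet-temperature limit and the rule that manual override takes precedence over guard mode are assumptions made for the example.

```python
from enum import Enum
from typing import Sequence, Tuple


class Mode(Enum):
    OPTIMIZE = "optimize"  # AI tunes cooling for efficiency
    GUARD = "guard"        # extra cooling capacity to restore tolerances
    MANUAL = "manual"      # operator has taken control


def select_mode(inlet_temps_c: Sequence[float],
                max_inlet_c: float,
                manual_override: bool) -> Tuple[Mode, str]:
    """Pick an operating mode and return a human-readable reason.

    Assumed priority: operator authority first, then environmental
    safety, and only then efficiency optimization.
    """
    if manual_override:
        return Mode.MANUAL, "operator override active"
    hottest = max(inlet_temps_c)
    if hottest > max_inlet_c:
        return Mode.GUARD, f"inlet {hottest:.1f} C exceeds limit {max_inlet_c:.1f} C"
    return Mode.OPTIMIZE, "all inlets within tolerance"
```

Returning a reason alongside the mode is a small nod to explainability: each mode change can be written to the event log in plain language, so operators can see why the system acted even when no one is on site.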

Outlook

The concept of a dark data center, one operated remotely with minimal on-site staff, has gradually shifted from being an interesting theory to a desirable strategy. In recent years, many infrastructure operators have increased their use of automation and remote-management tools to enhance resiliency and operational flexibility, while also mitigating the effects of low staffing levels. Cooling systems, particularly those governed by AI-assisted control, are now central to this operational transformation.

Operational autonomy does not mean abandoning human control; it means achieving reliable operation without the need for constant supervision. Ultimately, a dark data center is not about turning off the lights; it is about turning on trust.

The Uptime Intelligence View

AI in thermal management has evolved from an experimental concept into an essential tool, improving efficiency and reliability across data centers. The next step (coordinating facility water, air and IT cooling liquid systems) will define the evolution toward greater operational autonomy. However, the transition to “dark” operation will be as much cultural as it is technical. As explainability, fail-safe modes and manual overrides build operator confidence, AI will gradually shift from copilot to autopilot. The technology is advancing rapidly; the question is how quickly operators will adopt it.