Rigorous testing of data center components should be a continuous process

By Ryan Orr, with Chris Brown and Ed Rafter

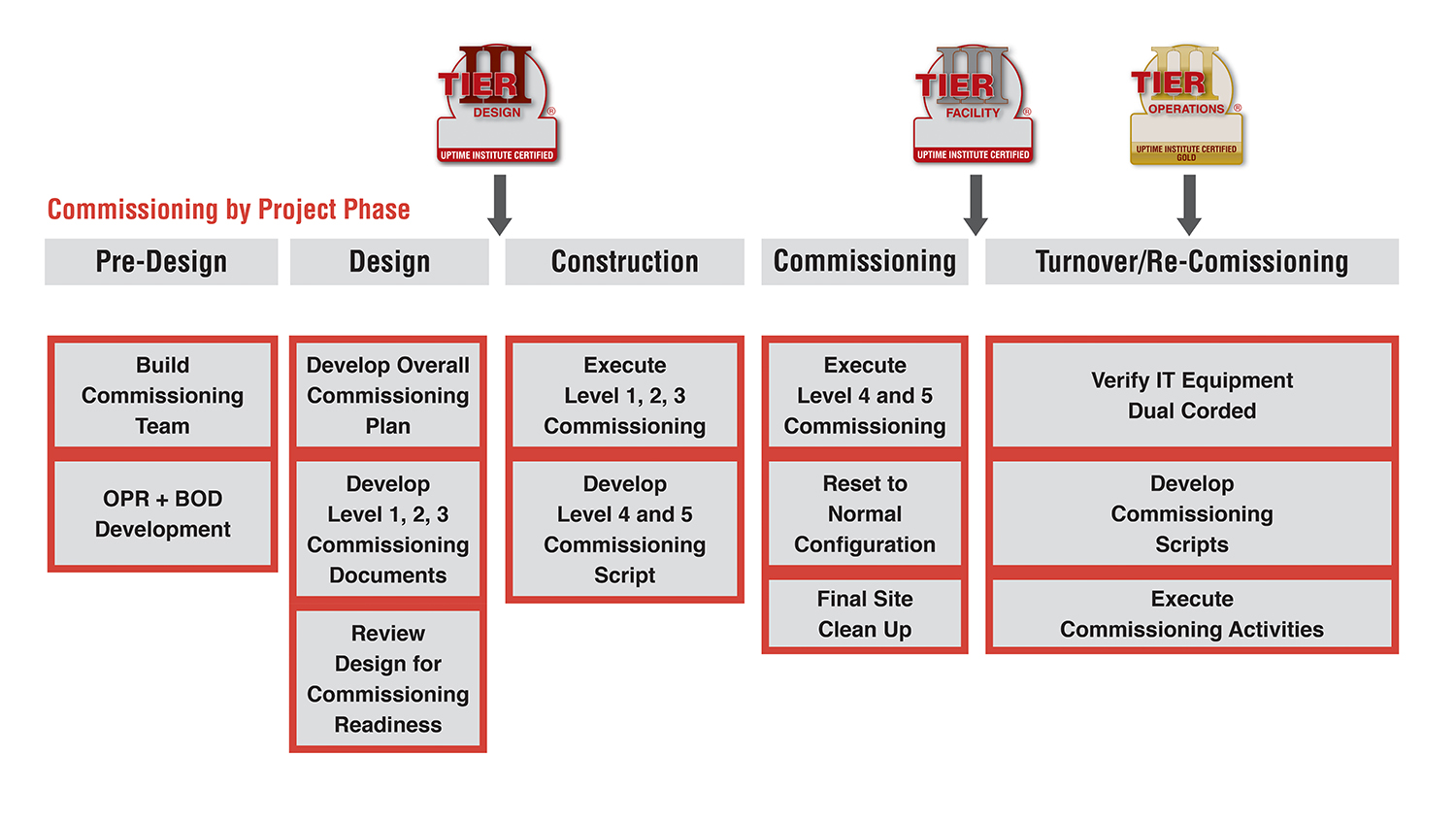

Many data center owners and others commonly believe that commissioning takes place only in the last few days before the facility enters into operation. In reality, data center commissioning is a continuous process that, when executed properly, helps ensure that the systems will meet mission critical objectives, design intent, and contract documents. The commissioning process should begin at project inception and continue through the life of the data center.

Uptime Institute’s extensive global field experience reveals that many of the problems, and subsequent consequences, observed in operational facilities could have been identified and remediated during a thorough commissioning process. Rigorous, comprehensive commissioning reduces initial failure rates, ensures that the data center functions as designed, and verifies facility operations capabilities—setting up Operations for success. At the outset of the commissioning program development, the owner and commissioning agent (CxA) should identify the important elements and benchmarks for each phase of the data center life cycle. Each element and benchmark must be executed successfully during commissioning to ensure the data center is rigorously examined prior to operations.

Uptime Institute wants to highlight the importance and benefits of commissioning for data center owners and operators and clarify the goals, objectives, and process of commissioning a data center.

This publication:

• Defines and reinforces the basic concepts of the five levels of commissioning

• Relates the levels of commissioning to the data center life cycle

• Presents technical considerations for commissioning activities associated with each phase

• Details the overall management of a commissioning program

• Identifies minimal roles and responsibilities for each stakeholder throughout the commissioning process

Commissioning tests some of the most important operations a data center will perform over its life and helps ease the transition between site development and daily operations. Commissioning:

• Verifies that the equipment and systems operate as designed by the Engineer-of-Record

• Provides a baseline for how the facility should perform throughout the rest of its life

• Affords the best opportunity for Operations to become familiar with how systems operate and test and verify operational procedures without risking critical IT loads

• Determines the performance limits of a data center—the most overlooked benefit of commissioning

In other words, commissioning highlights what a system can do and how it will respond beyond the original requirements and design features if the process is executed to a high degree of quality. Commissioning, like data center operations, must be considered throughout the full life cycle of the data center (see Start with the End in Mind, The Uptime Institute Journal Vol. 3 p. 104). Commissioning should first be considered, and planned for, at a project’s inception and continue throughout the design, construction, transition-to-operations, and ongoing operations where re-commissioning is appropriate.

COMMISSIONING LEVELS

Over time, various organizations have defined the levels of commissioning. As a result, a data center owner may encounter a number of variations when attempting to understand and implement a commissioning program. With this publication, Uptime Institute clarifies the purpose of each level. However, each and every data center project is unique, which could mean that one or more of these activities might fit better within a different level of commissioning for some projects. Table 1 is organized to outline the process and sequence for commissioning, but the most important thing is that all the activities are completed. The high reliability essential to mission critical facilities requires that a rigorous and complete commissioning program includes all five levels to ensure that capital investments are not wasted.

Table 1. Commissioning Levels 1-5

COMMISSIONING AND UPTIME INSTITUTE TIERS

Unlike Uptime Institute’s Operational Sustainability Standard, the rigor associated with commissioning a data center has little relationship to its Tier level. The scope for commissioning and testing a Tier I data center may be less than that of a Tier IV data center, based on differences in the actual design complexity, topology, size, components, and sequence of operations. However, the roles and responsibilities and technical requirements for the commissioning team should not differ significantly between Tiers and should be just as rigorous and comprehensive for a Tier I facility as they would be for a Tier IV facility.

COMMISSIONING STAKEHOLDERS

The most critical stakeholders involved on any project are listed below. They should fulfill their major roles sequentially. Stakeholders with additional expertise or valuable contributions should also participate. The CxA should ensure that the roles and responsibilities of the commissioning stakeholders are balanced and well documented.

Owner: The owner should initiate the commissioning process at the project outset, including identifying key stakeholders to take part in the program and communicating expectations for the commissioning program. Owner’s personnel are typically responsible for internal engineering, project management, and administration; however, the IT end user may also be part of the owner’s team. When the owner’s personnel lack the necessary experience for these activities, those responsibilities should be delegated to an authorized third-party representative, typically referred to as an owner’s representative. If the owner does not participate (or appoint a representative), no one on the commissioning team will have the knowledge or perspective to represent the owner’s interests. The end result could be a sub-standard facility, unnecessarily vulnerable to outages and unable to support the business needs.

Contractor: The commissioning team always includes the general contractor and specialty trade contractors, including mechanical, electrical, and controls, as well as OEM vendors who will be bringing equipment on site and assisting with testing. Without contractors, commissioning activities will be nearly impossible to complete properly. Contractors often coordinate vendors and physically operate equipment during commissioning procedures. Uptime Institute experience indicates that, although contractors are rarely excluded entirely from commissioning, their input is sometimes undervalued.

Architect and engineers: The Engineers-of-Record are legally responsible for the design of the data center, including those responsible for mechanical and electrical systems. Design intent may be compromised if designers do not participate in commissioning. The design engineer specifies the sequence of operations and is the only party who can confirm that the intent of the design was met.

Operations: The maintenance and operations managers, supervisors, and technicians are ultimately responsible for the day-to-day operations of the data center and its maintenance activities. This group may include owner’s personnel or a third party contracted for ongoing operations. Excluding Operations is a huge missed opportunity for training and compromises the team’s ability to verify maintenance and operations procedures (SOPs, EOPs, MOPs, etc.). Without live training during commissioning to verify effective procedures, the operations team will not be fully ready for maintenance or failures when the facility is in operation.

CxA: Ideally a third party, the CxA is the responsible authority for the planning and execution of the entire commissioning process. The CxA may be an owner’s representative or a qualified mechanical or electrical contractor. Trying to commission without a CxA could result in poorly planned, undocumented, and unscripted commissioning activities. Additionally, it makes it more difficult to close out construction and properly transition to operations, which includes proper punch-list item closeout and execution of training for operations staff.

PRE-DESIGN PHASE ELEMENTS AND BENCHMARKS

Pre-design phase commissioning immediately follows the approval of a data center project and begins with selection of the CxA through a request for proposal (RFP) process (see Table 2). During the pre-design phase the owner, Engineers-of-Record, operations staff, and the CxA identify the owner’s project requirements (OPR) for the data center. Table 2 lists each participant in data center design, construction, and commissioning and denotes responsibility for particular tasks. In some projects, the owner may elect to utilize an owner’s representative to manage the day-to-day activities of the project on their behalf.

Table 2. Pre-Design Phase tasks

At this time, the owner should hire the data center facility manager and one data center facility supervisor to support the commissioning activities as representation for the operations team. It is not necessary to build the entire operations team to support the commissioning and construction activities.

PRE-DESIGN PHASE COMMISSIONING TECHNICAL REQUIREMENTS

Tasks to complete during the pre-design phase include developing a project schedule that includes commissioning, creating a budget, outlining a commissioning plan, and documenting the OPR and basis of design (BOD).

Technical requirements during this phase include:

CxA Selection

Selecting the CxA in the Pre-Design phase allows it to help develop the OPR and BOD, the commissioning program, the budget, and schedule.

The CxA should have and provide:

• Appropriate staff to support the technical requirements of the project

• Experience with mission critical/data center facility commissioning

• Experience with the project’s known topologies and technologies

• Sample commissioning documents (e.g., Commissioning Plan, Method Statements, Commissioning Scripts, System Manual)

• Commissioning certifications

• Client referrals

The CxA should be:

• Contracted directly to the owner to ensure the owner’s interests are held primary

• Optimally aligned to both the owner’s operations team and the owner’s design and construction team to further align interests and gain efficiencies in coordinating activities throughout commissioning

• An independent third party

Ideally, the CxA should not be an employee of the construction contractors or architect/engineering firms. When a third-party CxA is not a viable choice, the best alternative would be a representative from the owner’s team when the technical expertise is available within the company. When the owner’s team does not have the technical expertise required, a third-party mechanical or electrical contractor with commissioning experience could be utilized. Of course, cost is a factor in selecting a CxA, but it should not be allowed to compromise quality and rigor.

Project Schedule

• Must include all commissioning activity time on the schedule to avoid project delays.

• Should allot sufficient time for correcting installation and performance deficiencies revealed during commissioning.

• Should assess the requirement and/or capability for post-occupancy commissioning activities. This can include provisions for seasonal commissioning to assess the performance of critical components in a variety of ambient conditions.

At this point, the schedule should have significant flexibility and can be better defined at each phase. Depending on the size, complexity, and sequence of operations associated with a facility, a rigorous Level 5 commissioning schedule could take up to 20 working days or longer. Even for small and relatively basic data centers, commissioning teams will find it challenging to complete Level 5 commissioning with a high level of detail and rigor in less than a few days.

Commissioning Budget

• Should include a large contingency reserve for Level 4 and Level 5 commissioning budgets

• Should include all items and personnel required to support complete commissioning

At this point in the process there are a considerable number of unknowns in the design, construction, and commissioning requirements. Budgets for Level 4 and Level 5 commissioning should include a large contingency reserve to accommodate the unknown parameters of the project. This contingency reserve can be reduced as appropriate as the project moves along and more and more items are defined. However, the final budget should still have a contingency reserve to account for unforeseeable issues, such as additional load bank rental time in the event commissioning takes longer than scheduled. Budgets need to include all items and personnel required to support commissioning. This includes, but is not limited to, load banks, calibrated measurement devices, data loggers, technician support, engineering support, and consumables such as fuel for engine-generator sets.

OPR and BOD

American Society of Heating, Refrigerating and Air-Conditioning Engineers, Inc. (ASHRAE) Standard 202 defines an OPR as “a written document that details the requirements of a project and the expectations of how it will be used and operated. This includes project goals, measurable performance criteria, cost considerations, benchmarks, success criteria, and supporting information. (The term Project Intent or Design Intent is used by some owners for their Commissioning Process Owner’s Project Requirements.)”

ASHRAE Standard 202 defines a BOD as “a document that records the concepts, calculations, decisions, and product selections used to meet the OPR and to satisfy applicable regulatory requirements, standards, and guidelines. This includes both narrative descriptions and lists of individual items that support the design process.”

The OPR and BOD are generated by the project owner and communicate important expectations for the data center project. These documents should be revised and updated at the end of each phase in the construction process. Specific to data center commissioning, the OPR and BOD should:

• Comply with ASHRAE Standard 202, or similar

• Specify that the CxA is responsible for all commissioning, testing, and formal reporting

• Identify whether the intent of the data center design is to be scalable with IT requirements and whether the use of shared infrastructure is allowed and note any subsequent design features necessary to commissioning

The decision as to whether or not the design intent is to be scalable or use shared infrastructure will have a large effect on subsequent implementation and commissioning phases. Shared infrastructure systems in an incremental buildout can potentially increase the risk associated with future commissioning phases. Careful planning can mitigate this risk. Where shared infrastructure is to be used in a phased implementation, the OPR or BOD should highlight the importance of including design features that will allow a full and rigorous commissioning process at each phase of project implementation.

DESIGN AND PRE-CONSTRUCTION PHASE ELEMENTS AND BENCHMARKS

The design and pre-construction phases are commonly blended into a design/build format in which some activities are completed concurrently (see Table 3). The design typically goes through multiple iterations between the Engineer-of-Record and owner; each iteration identifies additional data center design details. Since the Engineer-of-Record is responsible for creating the designs and ensuring their concurrence with applicable commissioning documents, it becomes the responsibility of the other stakeholders to verify compliance. The CxA and contractor should review the design throughout this process, provide feedback based on their experience, and ensure compliance with the OPR document.

Table 3. Design and Pre-Construction Phase tasks

DESIGN AND PRE-CONSTRUCTION PHASE COMMISSIONING TECHNICAL REQUIREMENTS

Commissioning Plan

The commissioning plan is the heart of the commissioning program for a data center. While the CxA will take the lead in its development, all other stakeholders should review and participate in the approval of the final commissioning plan. Utilizing each unique skillset in developing and reviewing the commissioning program will help ensure a rigorous commissioning program.

Once appointed, the CxA must develop the overall commissioning plan, which generally includes:

• Scope of commissioning activities, including identification of any re-commissioning requirements

• General schedule of commissioning

• Documentation requirements

• Risk identification and mitigation plans

• Required resources (e.g., tools, personnel, equipment)

• Identification of the means and methods for testing

Design Review

• For concurrence with the OPR and planned operations

• For commissioning readiness

At this phase, appropriate stakeholders should develop plans, checklists, and reports for Level 1, Level 2, and Level 3. All stakeholders must review these documents. Additional technical requirements during this phase include:

• Review project schedule and budget to ensure the schedule continues to maintain adequate resources and time to complete commissioning

• Verify adherence to OPR and BOD

• Amend documents as necessary in order to keep them up to date

• Add design elements as required to allow for the commissioning program to meet the minimum OPR and meet commissioning readiness requirements

• If the design is scalable and to be implemented in future phases with shared systems, ensure that the design allows for future commissioning by including enhancements to reduce the risk to the active IT equipment.

• Ensure that equipment specifications identify that the specified capacity is net of any deductions or tolerances allowed by national or international manufacturing standards (verified during Level 1 and Level 3)
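The last bullet above comes down to simple arithmetic, sketched here in Python. The function names and the 5% tolerance are illustrative assumptions only; the actual allowance depends on the manufacturing standard that applies to the equipment.

```python
def guaranteed_net_capacity_kw(nameplate_kw: float, tolerance: float) -> float:
    """Worst-case capacity if the unit may fall short of nameplate by
    `tolerance`, given as a fraction (0.05 means 5%)."""
    if not 0.0 <= tolerance < 1.0:
        raise ValueError("tolerance must be in [0, 1)")
    return nameplate_kw * (1.0 - tolerance)


def meets_net_requirement(nameplate_kw: float, tolerance: float,
                          required_net_kw: float) -> bool:
    """True if the worst-case delivered capacity still covers the design need."""
    return guaranteed_net_capacity_kw(nameplate_kw, tolerance) >= required_net_kw


# Hypothetical example: a 1,000 kW nameplate unit with an assumed 5% tolerance
# is only guaranteed to deliver 950 kW, so it cannot be counted on for a
# 1,000 kW net requirement.
print(guaranteed_net_capacity_kw(1000.0, 0.05))
print(meets_net_requirement(1000.0, 0.05, 1000.0))
```

Stating the capacity as net in the specification moves this margin onto the supplier, and Level 1 and Level 3 testing can then confirm it.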

Typically, long-lead items are procured using an RFP process in parallel with the design process. The commissioning requirements for this equipment should be included in the RFP documents and adherence to these should be assessed throughout the equipment delivery and installation. As part of these requirements, RFPs should identify the requirement for on-site OEM technician support throughout Level 4 and Level 5.

Commissioning Plans and Scripts for Level 1, Level 2, and Level 3

• OEMs should provide the written test procedure to the commissioning team for approval prior to the Level 1 activities.

• Contractors, in conjunction with the CxA, should provide Level 2 Post-Installation checklists from the record drawings to verify installation of all equipment.

• The entire commissioning team should review all Level 2 checklists.

• OEMs, in conjunction with the CxA, should provide start-up and functional checklists for Level 3.

• Where component-level functional testing is necessary beyond the OEM’s typical scope of work, the CxA shall create testing procedures that should be reviewed by the commissioning team and executed by the contractors.

CONSTRUCTION PHASE ELEMENTS AND BENCHMARKS

Throughout the data center construction, the CxA will monitor progress to ensure that the installations conform to the OPR document. Additionally, the first three levels of commissioning will take place and be overseen by the CxA (see Table 4).

Table 4. Construction Phase tasks

CONSTRUCTION PHASE COMMISSIONING TECHNICAL REQUIREMENTS

During the construction phase, the focus moves from developing plans to execution, with team members executing Level 1, Level 2, and Level 3 activities. At the same time, the operations team and the CxA develop scripts for Level 4 and Level 5, which are to be reviewed by all stakeholders.

Technical requirements during this phase include:

• Review project schedule and budget to ensure the schedule continues to have adequate time and budget to complete commissioning

• Protect equipment stored on site awaiting installation from hazards (e.g., dirt and construction debris, impact, fire hazards) and maintain according to manufacturer’s recommendations

• Verify that circuit breakers are set in accordance with the short circuit and breaker coordination and arc flash study

• Repeat Level 1 procedures in the actual data center environment since factory witness testing is performed in ideal conditions rarely seen in practice in the data center, ensuring that no equipment damage occurred during transit and that the equipment performs at the same level as when it was tested at the factory

• Ensure that the building management system (BMS) functions at a basic level so that it is ready to support critical Level 4 and Level 5 commissioning activities

• Log critical asset information (e.g., make, model, serial number) into the maintenance management system (MMS) (or other suitable recordkeeping) as equipment is received on site to be available for the operations team

• Continue to submit formal reports to the owner detailing all items tested, steps taken to test, and the results as soon as reasonably possible

• Repeat the entire testing procedure when programming or control wiring is altered to correct a testing step that did not complete successfully, because the change could have an unexpected impact

In addition, Engineers-of-Record should provide a finalized sequence of operations document to the CxA, so it can create the Level 4 and Level 5 commissioning scripts.

Commissioning Plans and Scripts for Level 4 and Level 5

Script development is the responsibility of the CxA, with support from all other team members.

Plans and scripts should:

• Identify every test and step to be taken to complete commissioning

• Identify and describe the anticipated results for each step of the test

• Identify responsible parties for each step of each test to ensure that everyone is available and prepared, and to assist with schedule and budget reviews

• Identify safety precautions and personal protection equipment (PPE) requirements for all team members
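One way to picture the script contents listed above is as a simple record structure. The Python sketch below is only an illustration: the class names, field names, and sample steps are hypothetical and do not represent a prescribed Uptime Institute format.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class ScriptStep:
    action: str                    # what the team does in this step
    expected_result: str           # anticipated result, written before testing
    responsible_party: str         # who performs or witnesses the step
    passed: Optional[bool] = None  # None until the step has been executed


@dataclass
class CommissioningScript:
    title: str
    level: int                     # 4 or 5
    safety_precautions: List[str]
    ppe_requirements: List[str]
    steps: List[ScriptStep] = field(default_factory=list)

    def responsible_parties(self) -> Set[str]:
        """Everyone who must be available and prepared for this script."""
        return {step.responsible_party for step in self.steps}

    def undefined_steps(self) -> List[int]:
        """1-based numbers of steps lacking an anticipated result or an owner."""
        return [
            number
            for number, step in enumerate(self.steps, start=1)
            if not step.expected_result.strip()
            or not step.responsible_party.strip()
        ]


# Hypothetical script content, for illustration only.
script = CommissioningScript(
    title="Loss of utility power",
    level=5,
    safety_precautions=["Confirm arc flash boundaries are marked"],
    ppe_requirements=["Arc-rated clothing", "Hearing protection"],
    steps=[
        ScriptStep("Open utility main breaker",
                   "Engine-generators start and accept load",
                   "Electrical contractor"),
        ScriptStep("Record UPS status", "", "CxA"),  # anticipated result missing
    ],
)
print(script.responsible_parties())
print(script.undefined_steps())
```

A check such as `undefined_steps` can be run during stakeholder review to flag any step whose anticipated result or responsible party has not yet been written down.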

Testing should be conducted:

• Under expected normal operations in the same manner that the operations team will operate the data center

• Under expected maintenance conditions in the same manner that the operations team will maintain the data center

• In manual operation as necessary to support future upgrades and replacements

In addition, commissioning should simulate system and component failures to test fault tolerant features, even when fault tolerance may not be a specific design assumption because it will inform the operations team on how the infrastructure responds when failures inevitably occur. Uptime Institute recommends testing in as many additional scenarios as possible that make sense for the design—even scenarios that may be outside the scope of design—to provide key information to operations about how to respond when the facility does not function and/or respond as designed.

LEVEL 4 AND 5 COMMISSIONING PHASE ELEMENTS AND BENCHMARKS

Once construction of the data center has been substantially completed, the CxA will lead the team through Levels 4 and 5 commissioning. The purpose of these activities is to ensure that individual systems and the full data center ecosystem function as they were intended in the design and OPR documents. This includes verifying required capacities and ensuring that equipment can be isolated as intended and that the data center responds as expected to faults (see Table 5).

Table 5. Commissioning Phase tasks

While weather cannot be anticipated, it can and will have an impact on the final commissioning results. Results from Level 4 and 5 commissioning activities should be extrapolated to predict how the equipment would perform at the actual design extreme temperatures and conditions. However, commissioning activities should be scheduled seasonally to verify system operation for the actual extreme and varying ambient conditions for which it was designed—especially in the event economizers are utilized in the data center.

LEVEL 4 AND 5 COMMISSIONING PHASE TECHNICAL REQUIREMENTS

Level 4 and 5 commissioning presents a unique opportunity for any data center: the ability to fully test and practice equipment operation in any building condition without any risk to critical IT load. Improvements in future operations can result when stakeholders take advantage of this opportunity.

All critical components and systems must be fully tested—representative testing should not be acceptable for mission critical data centers.

Level 4

• Level 4 must include load bank tests of the engine-generator sets, UPS, and UPS battery systems at design and rated capacities.

• Minimum continuous runtime durations of not less than eight hours are recommended for all load bank tests; however, continuous runtimes of up to 24 hours are considered a best practice.

• Ensure that load banks are distributed within critical areas to best simulate the actual IT environment distribution, ideally physically located within racks and with forced cooling on a horizontal path, which allows for more accurate and realistic mechanical system testing.

• Prior to commencing Level 5, building management and control system (BMCS) graphics should be completed to support the commissioning activities and to help commission the BMCS because operations staff will eventually rely on the BMCS including alarming and data trending features.
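The runtime guidance in the Level 4 list can be expressed as a small check against recorded test durations. This Python sketch uses the eight-hour and 24-hour figures from the text; the function name, equipment labels, and durations are hypothetical.

```python
MIN_RUNTIME_HOURS = 8.0      # recommended minimum continuous runtime
BEST_PRACTICE_HOURS = 24.0   # runtime the text describes as best practice


def classify_runtime(continuous_hours: float) -> str:
    """Classify one load bank test by its continuous runtime."""
    if continuous_hours < 0:
        raise ValueError("runtime cannot be negative")
    if continuous_hours >= BEST_PRACTICE_HOURS:
        return "best practice"
    if continuous_hours >= MIN_RUNTIME_HOURS:
        return "meets minimum"
    return "below minimum"


# Hypothetical recorded runtimes, in hours, for three load bank tests.
recorded = {"GEN-1": 24.0, "GEN-2": 6.5, "UPS-A": 8.0}
for equipment, hours in sorted(recorded.items()):
    print(equipment, classify_runtime(hours))
```

Any test classified as below minimum would be a candidate for rescheduling before the team moves on to Level 5.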

Level 5

• Ensure that commissioning team members and contractors are positioned strategically throughout the data center to monitor all systems throughout Level 5

• Size load banks as small as reasonably possible for Level 5 activities to best simulate the actual IT environment for more accurate and realistic mechanical system testing

• Perform Level 5 tests with the fire detection and suppression systems active, rather than in bypass to ensure there is no adverse impact to the critical infrastructure

• Isolate equipment at the upstream circuit breaker when performing equipment isolations to simulate maintenance activities rather than at the local disconnect physically located on the unit

• Complete evaluations when changes are made throughout Level 5 to fix deficiencies and determine which, if any, tests must be repeated/redone

• When an initial test is not successfully completed, consider retesting more than once to ensure that a subsequent successful test was not an anomaly

• Complete testing on both utility power and on engine-generator power (or other alternative to utility power source)

• When simulating faults, simulate multiple fault types across separate tests on each piece of equipment

• Ensure that sensor failures are included in the testing scope when simulating faults on highly automated data centers that rely heavily upon field sensors

• Document, identify, and validate normal operating set points, alarms, and component settings

• Monitor alarms that are generated in the BMCS and electrical power monitoring system (EPMS) to ensure that they are accurate and useful

• At a minimum, take electrical load and critical area temperature readings between each discrete test; where data loggers are used, measurements should be logged every 30-60 seconds

• Test a variety of load conditions (25%, 50%, 75%, and 100% step loads) in order to simulate the actual load conditions as a data center gradually increases its critical IT load

• Test (as possible without causing damage) emergency conditions, such as N-1 and no cooling with design load, to provide the information necessary for the operations team to structure future emergency operating practices and plan for staff appropriately

• Install the aisle containment that is to be utilized as part of the design to ensure that it supports the infrastructure as required
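The step-load and data-logging bullets in the Level 5 list lend themselves to a short planning calculation. In this Python sketch the step fractions and the 30-60 second logging range come from the text, while the function names, the 1,200 kW design load, and the 10-minute test duration are hypothetical.

```python
from typing import List, Sequence, Tuple

STEP_FRACTIONS = (0.25, 0.50, 0.75, 1.00)  # step loads named in the text
MAX_LOG_INTERVAL_S = 60                    # upper end of the 30-60 second range


def step_load_schedule(design_load_kw: float,
                       fractions: Sequence[float] = STEP_FRACTIONS
                       ) -> List[Tuple[float, float]]:
    """Return (fraction, load bank kW) pairs for each load step."""
    return [(f, design_load_kw * f) for f in fractions]


def expected_samples(test_duration_s: int, interval_s: int = 30) -> int:
    """Number of data logger readings in one test, counting the one at t = 0."""
    if not 0 < interval_s <= MAX_LOG_INTERVAL_S:
        raise ValueError("log at least once every 60 seconds")
    return test_duration_s // interval_s + 1


# Hypothetical 1,200 kW design IT load and a 10-minute discrete test.
for fraction, load_kw in step_load_schedule(1200.0):
    print(f"{fraction:.0%} step: {load_kw:.0f} kW")
print(expected_samples(600, interval_s=30))
```

Comparing the expected sample count with what the data loggers actually captured is a quick way to spot gaps in the record before the final report is assembled.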

Site Cleanup

• Replace the air filters for the electrical systems and heating, ventilation, and air conditioning (HVAC) systems following the conclusion of Level 5 commissioning

• Flush and clean piping and ductwork to ensure construction debris does not impact future mechanical plant performance

TURNOVER-TO-OPERATIONS PHASE ELEMENTS AND BENCHMARKS

The turnover-to-operations phase includes all activities associated with formally turning the facility over to the owner and Operations. Primarily, this includes completing the final documentation associated with commissioning Levels 1-5 and utilizing the commissioning results to finalize SOPs, MOPs, and EOPs.

Needless to say, this is a critical juncture for the data center. Soon after Level 4 and 5, the facility will become live and support critical IT infrastructure, which begins the need to maintain the facility. At this time, Operations must take all of the lessons learned and knowledge gained from the construction and commissioning phases to finalize the maintenance and operations program. Operations must complete this work in a relatively short amount of time in order to minimize risk to the data center. The longer it takes to finalize all of the documentation and processes, the longer the facility will be at risk. Operations needs full support during this transition to ensure the overall uptime and success of the data center.

During the Turnover-to-Operations phase, the CxA duties can include:

• Ensuring that all post-Level 5 punchlist items are successfully completed and closed out

• Facilitating and/or coordinating infrastructure or OEM training to the operations staff

• Assisting as necessary with the development of critical operating procedures

The CxA is also responsible for gathering all testing reports and checklists from all five levels of commissioning to create the final commissioning report. The final report to the owner should include:

• Electrical and mechanical load and system condition readings taken at timely intervals before major actions are implemented in each test

• All steps, results, and system readings at every stage of the commissioning

The CxA should return to the site approximately one year following completion of commissioning to review the building operations and to ensure there are no outstanding items related to re-commissioning or seasonal commissioning efforts.

RE-COMMISSIONING AND FUTURE INSTALLATION PHASE ELEMENTS AND BENCHMARKS

Commissioning should be performed any time new infrastructure is installed or any time there is a significant change to the configuration of existing infrastructure. This could include planned expansion of the data center or major replacements (see Table 6).

Table 6. Re-Commissioning Phase tasks

In data centers that are built to be scalable, it is imperative that commissioning be just as rigorous for the follow-on infrastructure deployments to minimize risk to the facility. Commissioning activities undoubtedly add risk to the data center, especially where infrastructure systems are shared. However, this risk must be weighed against the risk of not performing the associated commissioning tests. If a component or system is not going to perform as expected, the owner must decide if it is better to have this occur during a planned commissioning activity or during an unplanned failure. While performing a rigorous commissioning program during the initial buildout may prove the concept of the design, the facility could potentially be at risk if all of the new infrastructure components and systems are not tested rigorously.

These types of commissioning activities, by their very nature, occur while the systems are supporting critical IT load. In these instances, the operations team best knows how these activities may impact that mission critical load. During re-commissioning or incremental commissioning, the operations team should be working in very close collaboration with the commissioning team to ensure the integrity of the data center. Additionally, if re-commissioning involves changes to the configuration of the data center, Operations needs full awareness so that operating procedures that impact maintenance and emergency activities can be updated and tested completely.

The best way to mitigate the risk of re-commissioning efforts or for follow-on phases is to ensure that the facility is properly and extensively commissioned when it is originally built. And, as part of a rigorous re-commissioning program, all of the points discussed in this paper for standard commissioning apply to the re-commissioning efforts. However, due to the higher level of risk with these activities, there are some additional requirements.

RE-COMMISSIONING AND FUTURE INSTALLATION PHASE TECHNICAL REQUIREMENTS

All of the technical requirements previously provided apply to re-commissioning and future installation phases. However, follow-up commissioning activities also require the following special considerations by the CxA:

• Adequate notice must be provided to service owners about the schedule, duration, risk, and countermeasures in place for the re-commissioning activities in order to gain concurrence from IT end users.

• For facilities that are based on a dual-corded IT equipment topology, the owner and Operations should verify that the existing critical load is appropriately dual corded where systems that support installed IT loads are to be commissioned.

• As load banks can introduce contaminants, load bank placement should be considered carefully so as not to impact the existing critical IT equipment.

• Detailed commissioning scripts must be prepared and followed during commissioning to ensure minimal risk to existing IT equipment. Priority should be given to the live production IT environment, and back-out procedures should be in place to ensure an optimal mean time to recovery (MTTR) in case of a power down event.

• Seasonal testing of the systems should be performed to verify performance in a variety of climatic conditions, including extreme ambient conditions. This also ensures that economizers, where used, will be tested properly.

CONCLUSION

Commissioning activities represent a unique opportunity for data center owners. The ability to rigorously test the capabilities of the critical infrastructure that supports the data center without any risk to mission critical IT loads is an opportunity that should be capitalized on to the maximum possible extent. Uptime Institute observes that this critical opportunity is wasted far too often in data center facilities, with not nearly enough emphasis placed on the rigor and depth of the commissioning program required for a mission critical facility until critical IT hardware is already connected.

A well-planned and executed commissioning program will help validate the capital investment in the facility to date. It will also put the operations team in a far better position to manage and operate the critical infrastructure for the rest of the data center’s useful life, and ultimately ensure that the facility realizes its full potential.

Ryan Orr

Ryan Orr joined Uptime Institute in 2012 and currently serves as a senior consultant. He performs Design and Constructed Facility Certifications, Operational Sustainability Certifications, and customized Design and Operations Consulting and Workshops. Mr. Orr’s work in critical facilities includes responsibilities ranging from project engineer on major upgrades for legacy enterprise data centers, space planning for the design and construction of multiple new data center builds, and data center Maintenance and Operations support.

Chris Brown

Christopher Brown joined Uptime Institute in 2010 and currently serves as Vice President, Global Standards and is the Global Tier Authority. He manages the technical standards for which Uptime Institute delivers services and ensures the technical delivery staff is properly trained and prepared to deliver the services. Mr. Brown continues to actively participate in the technical services delivery including Tier Certifications, site infrastructure audits, and custom strategic-level consulting engagements.

Ed Rafter

Edward P. Rafter has been a consultant to Uptime Institute Professional Services (ComputerSite Engineering) since 1999 and assumed a full-time position with Uptime Institute in 2013 as principal of Education and Training. He currently serves as vice president-Technology. Mr. Rafter is responsible for the daily management and direction of the professional education staff to deliver all Uptime Institute training services. This includes managing the activities of the faculty/staff delivering the Accredited Tier Designer (ATD) and Accredited Tier Specialist (ATS) programs, and any other courses to be developed and delivered by Uptime Institute.

System efficiency proves more important than equipment efficiency

By Paolo Barberis, Leonardo Sergardi, and Ferdinando Ciardullo

FastWeb is an Italian telecommunications operator, 100% owned by Swisscom, providing ultrabroadband services to the Italian consumer and corporate markets, in which it holds a 35% market share. Since its founding 15 years ago, FastWeb has invested in infrastructure. For instance FastWeb has an optical fiber network of about 38,000 kilometers. This strategy was reconfirmed for 2014/2015 when FastWeb announced its Fiber to the Street project (FTTS), a two-year plan to provide ultrabroadband services to 30% of the Italian population, an increase from 20%. Similarly, FastWeb has invested in data centers. At present, the company owns two data centers, with a total area of 6,000 square meters (m2) of white space. Today FastWeb has chosen to focus on providing network services and services such as housing, hosting, Cloud, managed services, and managed security.

In order to support these services, FastWeb decided to build a new data center offering the highest level of security possible to its clients. This goal led to the decision to achieve Italy’s first Tier IV Certification of Constructed Facilities (TCCF) with quality construction and energy efficiency also in mind. After examining the various options, FastWeb decided to site the data center within an owned, existing facility built in 1937.

FastWeb’s choice to build its new data center within its headquarters alongside the existing data rooms and offices was the main constraint in developing the project. As a result the design approach was reversed. Every effort was made to build the best infrastructure possible to meet FastWeb’s performance requirements, without disrupting the existing functions and the surrounding environment. In practice, it was necessary to identify the parts of FastWeb’s entire business plan that could be carried out on site optimally and conform to the plan itself.

During the preliminary design phases, a major effort was made to locate the optimal solution, which made it possible to develop the project details in a very short time, without requiring any changes to the drawings. The challenge was integrating and holistically adapting the spaces available to the space requirements of the white space and supporting equipment, the volumetric plan of the facility, the requirements of Tier IV, and the efficiency goals. As a result of the detailed preliminary planning, time was recovered during the detailed design and execution phases, which had practically no surprises.

Finally, the acoustic pollution needed to be managed in line with the local standards because of the downtown location of the site. FastWeb carried out an acoustic audit to identify any mitigation measures that would be needed. On the basis of the results the mitigation interventions were decided. Acoustics were tested twice afterwards: once during commissioning and then again after the data center went live. The issue of acoustic pollution was fully addressed by specifying super-silenced chillers and engine-generator sets and positioning them in the courtyard and installing appropriate gear to make them super silent. These steps did not affect the loads in any way.

Because the site was already part of FastWeb’s network, the new data center benefits from all the robustness and redundancy of connectivity of the network at low cost. In addition, the company’s Technological Department is located on the site, which gives the facility access to the highest technical skills available. Moreover, from a market viewpoint, key clients like being hosted in central Milano, which is a capital of finance and industry and near many of their headquarters.

Figure 1. Data room layout

Figure 2. Mechanical plant infrastructure in the internal courtyard

The facility comprises a 600-m2 data room with 80 m2 of ancillary space, 930 m2 of machine rooms, and 650 m2 of external technological areas, all located in the same building on multiple adjacent floors (see Figures 1 and 2). The facility’s white space houses (162) 42U racks using Hot Aisle containment technology (see Figure 3), with 1,250 kilowatts (kW) available for IT loads (an average of 7.5 kW/rack and power density of 2.1 kW/m2). The facility can also accommodate a few high-density (20 kW/rack) islands. The data center is the first one built in Italy to achieve TCCF from Uptime Institute.

Figure 3. Data room Cold Aisle

FastWeb’s data center includes a double-path, always-on scheme for both electrical and mechanical systems, including two feeds from the external utility. Utility service to the facility is 5 megavolt-amperes (MVA), with two 2.4 megawatt (MW) engine-generator sets (N+N) (continuous-rated power as per ISO 8528) and four very high yield, scalable modular static 800 kVA UPSs (N+N), capable of operating in voltage double conversion, line interactive, or off-line modes to limit losses, producing annual efficiencies of 98% (see Figure 4). Total cooling capacity is 3,340 kW.

Figure 4. UPS room

PUE as measured during commissioning was 1.25. The FastWeb facility includes both a building management system (BMS) (see Figure 5) and Schneider Electric’s StruxureWare Data Center Infrastructure Management (DCIM) that help it manage energy use and achieve its energy goals. Together these monitor or control about 10,000 points and variables.
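To put the measured PUE in context, the short sketch below applies the standard definition of PUE (total facility power divided by IT power). It assumes the facility is running at its full 1,250 kW IT capacity; the article does not state the load at which PUE was measured, so the resulting figures are illustrative only. The function names are hypothetical.

```python
def total_facility_power_kw(it_load_kw: float, pue: float) -> float:
    """PUE = total facility power / IT power, so total = PUE * IT load."""
    if pue < 1.0:
        raise ValueError("PUE cannot be lower than 1.0")
    return it_load_kw * pue


def overhead_power_kw(it_load_kw: float, pue: float) -> float:
    """Power used by everything other than IT (cooling, UPS losses, lighting, etc.)."""
    return total_facility_power_kw(it_load_kw, pue) - it_load_kw


# ASSUMPTION: full design IT load; the load during the PUE measurement is not given.
it_load_kw = 1250.0
pue = 1.25

total_kw = total_facility_power_kw(it_load_kw, pue)
overhead_kw = overhead_power_kw(it_load_kw, pue)
print(f"Total facility power: {total_kw:.1f} kW, non-IT overhead: {overhead_kw:.1f} kW")
```

Under that assumption, every kilowatt delivered to IT equipment is accompanied by a quarter kilowatt of infrastructure overhead.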

Figure 5. BMS control panel

In order to make changes as quick and easy as possible, power distribution and data cabling have been installed above the racks. Power is distributed through double-path busbars. Power to any rack can be completely changed without affecting the others, because feeds from the busbar are through extractable boxes equipped with switches. This system has eliminated the need for electrical distribution panels, bringing a noticeable reduction in losses related to electrical distribution.

The data center required about 50,000 m of power cables and about 50,000 m of auxiliary and control cables plus 900 manual and automatic valves on the cooling circuits. Two distinct 40 Gbit fiberoptic backbones link the facility to the external mains network.

COOLING

In order to reach FastWeb’s high energy efficiency goals, the cooling system has been designed to reduce the energy required for pumps as allowed by the existing building. The mechanical systems are sized to ensure the data room has a C1 class microclimate, as defined by ASHRAE’s Thermal Guidelines. Design temperatures at the server input are 77°F (25°C), which can be raised to 86°F (30°C) in case of future installation of new-generation servers.

The choice of cooling equipment was made with overall facility efficiency in mind, considering the real operational parameters of the system (temperatures, loads, etc.) and the specific designed setting logic. In other words, simply picking the most efficient equipment does not guarantee the most efficient system. This thinking led to solutions where the highest efficiency equipment was not chosen for the project.

It was gratifying, then, when operating experience confirmed that the most efficient system was achieved by matching the main equipment to the specific operating conditions expected in the facility. This outcome was particularly noticeable in the performance of the chiller units and in-row units.

Four high-efficiency chillers provide N+N cooling to the facility. The chillers are leading edge in terms of energy efficiency, internal redundancy, reliability, occupancy and noise; they are equipped with oil-free magnetic levitation compressors and are specified to be super silenced. Both primary cooling loops are equipped with inertial refrigerated water storage tanks to ensure operation of the data room for 15 minutes in case of blackout. Secondary distribution of cooling fluids is achieved using double-ring distributions, each powered by one of the two chiller plants. The energy efficiency ratio (EER) coefficient of 9.0 (50% of rated load for each chiller) demonstrates the energy efficiency of the system.
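The 15-minute ride-through provided by the chilled water storage tanks can be related to tank volume with a simple energy balance: stored energy equals heat load times ride-through time, and also equals water mass times specific heat times usable temperature rise. The sketch below is a rough illustration, not the project's actual sizing. It assumes the heat load equals the 1,250 kW IT capacity, a usable temperature rise of 5 K (taken from the 15–20°C chilled water range cited in this article, which may not be the real supply/return split), and textbook water properties. The function name is hypothetical.

```python
WATER_DENSITY_KG_M3 = 998.0       # approximate density of water near 20 °C
WATER_CP_KJ_PER_KG_K = 4.186      # approximate specific heat of water


def buffer_volume_m3(heat_load_kw: float, ride_through_s: float, delta_t_k: float) -> float:
    """Water volume needed to absorb heat_load_kw for ride_through_s seconds
    while warming by delta_t_k kelvin (no chiller running, no losses)."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    energy_kj = heat_load_kw * ride_through_s              # kW * s = kJ
    mass_kg = energy_kj / (WATER_CP_KJ_PER_KG_K * delta_t_k)
    return mass_kg / WATER_DENSITY_KG_M3


# ASSUMPTIONS: heat load = full IT capacity; 5 K usable temperature rise.
volume_m3 = buffer_volume_m3(heat_load_kw=1250.0, ride_through_s=15 * 60, delta_t_k=5.0)
print(f"Approximate storage volume: {volume_m3:.1f} m3")
```

The relation also shows why the usable temperature rise matters: doubling it halves the required volume for the same ride-through time.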

A dedicated control system ensures that the chillers operate as efficiently as possible, balancing their output according to the load present. The same system also identifies maintenance actions, in addition to routine maintenance operations, that could improve efficiency.

In the white space, in-row cooling units ensure an N+N redundancy factor for each aisle. During normal operation, each aisle is cooled separately thanks to modulating two-way valves and fans activated by inverters.

The in-row technology was chosen because it has the following advantages over traditional perimeter computer room air handler (CRAH) units:

• High efficiency

• No mixing of cold and hot air

• No bypass phenomena

• Proximity to cold and hot air flows allows for minimal loss and good airflow management

• Minimizes the need for humidification or dehumidification

• Supports modular design

• Ease of installation

The high temperature of the chilled water (15–20°C) used in the facility means that air can’t be dehumidified in the summer. As a result, the facility includes two direct expansion generator sets operating with heat pumps to treat the fresh air. Two cascaded heat recovery systems give the units particularly high overall efficiency.

FastWeb utilizes a DCIM system (see Figure 6) and a double-path BMS to control the facility, which accounts for energy use to the PDUs and to the in-row cooling units.

Figure 6. Data room BMS – DCIM control panel

The BMS and DCIM integrate control of the facility and the IT infrastructure, managing in real time the variations in server operation caused by the use of cloud tools as well as those caused by hardware and software changes and physical integrations. The DCIM system has been equipped with specific modules dedicated to the management of colocation facilities with various users and companies present in the same room.

FIRE PREVENTION

Fire prevention in the facility is particularly advanced. The system combines traditional smoke detection with a high-sensitivity smoke detection (HSSD) system for early detection. An inert gas IG01 (argon) system can be deployed for active fire protection. The system is centralized with a double set of cylinders (main and reserve) to protect the data room, the tape library, UPS rooms, batteries, electric transformers, and the engine generators.

FINAL RESULT

Design work proceeded through the following phases:

• Site assessment

• First preliminary stage

• Second preliminary stage in which FastWeb’s needs were matched to the features of the site

• Detailed design, with a high level of definition (see Figure 7)

All the phases included cost estimates, timing, and impact on the existing facilities.

Figure 7. Deployment phases and process model

The whole design and construction process (from kick off to commissioning) required a high level of communication between all the people involved in project implementation, with continuous sharing of choices, decisions, and changes in order to achieve the owner’s desired goals. In addition, each phase of design was developed to the greatest degree compatible with that given phase. Creating detailed drawings and documents reduced miscommunication and confusion on the project.

A team comprising FastWeb stakeholders, including the project leader, IT, networking, business unit, operation, facility, maintenance, purchasing managers, as well as design engineers and the director of works managed the design, procurement, and construction processes. The team, through meetings held at least weekly, constantly checked and managed the whole construction process, sharing and reporting results and decisions at the end of each phase to the board of directors. Additional communication took place at all critical moments of the process. This team approach ensured that the construction would meet budgets (with a 5% tolerance) and deadlines, while providing a facility that meets FastWeb’s needs.

Paolo Barberis

Paolo Barberis is the manager of the Department of Technology at FastWeb S.p.a., a telecommunications company operating landline and mobile networks in Italy. He graduated in electronic engineering in 1989 at the Politecnico of Milano and is a member of the Charter of Engineers of the Province of Sondrio. Mr. Barberis has over 25 years of experience in designing and managing Telecommunication and Information Technology services with landline and mobile operators having mission critical services. He has been entrusted with the design, construction, and management of six data centers.

Leonardo Sergardi

Leonardo Sergardi is a partner and the cofounder of the engineering and design company AS ingg. He earned a degree in Electrotechnical Engineering from the Politecnico di Milano in 1978. Mr. Sergardi is an Accredited Tier Designer, Certified Data Centre Professional, Certified Data Centre Energy Professional, and a member of the Charter of Engineers of the Province of Milano. He has completed more than 100 projects and 20 data centers, with more than 35 years of experience designing systems and managing projects for advanced tertiary buildings, data centers, and mission critical facilities.

Ferdinando Ciardullo

Ferdinando Ciardullo works at the engineering and design company AS ingg. He has a degree in Mechanical Engineering from the Politecnico di Milano. Mr. Ciardullo is a CDCDP and a member of the Charter of Engineers of the Province of Milano. He has completed more than 100 projects and 15 data centers, with more than 35 years of experience designing systems and managing projects for advanced tertiary buildings, data centers, and mission critical facilities.

Case Study: Italy’s First Tier IV TCCF. Kevin Heslin, 24 April 2015.

ViaWest, the Shaw Communications-owned data center service provider, is being accused of misleading customers about reliability of its Las Vegas data center. Nevada Attorney General Adam Laxalt’s office has asked the company to address the accusations in a letter, a copy of which Data Center Knowledge has obtained. The letter is in response to a complaint filed with the attorney general by a man whose last name is Castor, but whose first name is not included. The accusation is that ViaWest has been advertising its Las Vegas data center as a Tier IV facility, when in fact it was not constructed to Tier IV standards. The attorney general’s letter says that in doing so the company may be in violation of the state’s Deceptive Trade Act.

Uptime Institute does not comment on specific projects, as a matter of commitment to our clients and governing policies.

But following the publication of the Data Center Knowledge article, we have received numerous variations on the following questions, which warrant clarification:

How many Tier Certifications of Design Documents, Constructed Facility, and Operational Sustainability has Uptime Institute awarded?

Since 2009, Uptime Institute has awarded 545 Certifications in 68 countries.

How many conflicts have been experienced in applying the Tier or Operational Sustainability criteria in any countries?

Uptime Institute criteria remain widely applicable and have not experienced conflict with local codes or jurisdictions.

How common is it for a data center to have one Tier Certification level for Design Documents and another Tier Certification level for Constructed Facility?

It is highly irregular for the Tier Certification of Design Documents (TCDD) and Tier Certification of Constructed Facility (TCCF) of the same data center to be misaligned in terms of Certification level. This is also an incongruent use of the Certification process, which was developed to provide assurances throughout the project to deliver on a single objective.

Instances of misaligned Tier Certification of Design Documents and installed infrastructure (i.e., stranded, altered or false Design Certifications) have been recklessly misleading to the industry and compelled us to amend the terms and conditions of Tier Certification so that Design Certifications expire after two years.

If discrepancies between Design and Facility Certifications happen, what is the purpose of the Tier Certification of Design Documents?

Tier Certification of Design Documents was never intended to be a standalone designation or an end point. It is provisional in nature and intended as a checkpoint on the path to Tier Certification of Constructed Facility and Operational Sustainability, signaling to upper management that the project is progressing toward the Tier performance objective defined for the specific site.

There are multiple reasons that an enterprise data center project may achieve Tier Certification of Design Documents and not achieve Tier Certification of Constructed Facility. These reasons include project delays, cancellations, and re-scoping. However, the reasons that apply to an enterprise project do not apply to the commercial data center services market, in which Tier Certifications drive competitive differentiation and pricing advantages for those who have demonstrated the capability of their facility.

What is Uptime Institute doing to prevent misrepresentation in the market?

In response to the gaming of the Tier Certification process for marketing reasons and to differentiate the achievement of Facility Certification, Uptime Institute implemented on 1 January 2014 a 2-year expiry for Tier Certification of Design Documents, as well as revocation rights for cases of clear, willful, and unscrupulous misrepresentations.

Julian Kudritzki, Chief Operating Officer, Uptime Institute

Data center design goals and certification of proven achievement are not the same. Matt Stansberry, 17 March 2015.

The care and feeding of a data center

By Richard F. Van Loo

Managing and operating a data center comprises a wide variety of activities, including the maintenance of all the equipment and systems in the data center, housekeeping, training, and capacity management for space, power, and cooling. These functions have one requirement in common: the need for trained personnel. As a result, an ineffective staffing model can impair overall availability.

The Tier Standard: Operational Sustainability outlines behaviors and risks that reduce the ability of a data center to meet its business objectives over the long term. According to the Standard, the three elements of Operational Sustainability are Management and Operations, Building Characteristics, and Site Location (see Figure 1).

Figure 1. According to Tier Standard: Operational Sustainability, the three elements of Operational Sustainability are Management and Operations, Building Characteristics, and Site Location.

Management and Operations comprises behaviors associated with:

• Staffing and organization

• Maintenance

• Training

• Planning, coordination, and management

• Operating conditions

Building Characteristics examines behaviors associated with:

• Pre-Operations

• Building features

• Infrastructure

Site Location addresses site risks due to:

• Natural disasters

• Human disasters

Management and Operations includes the behaviors that are most easily changed and have the greatest effect on the day-to-day operations of data centers. All the Management and Operations behaviors are important to the successful and reliable operation of a data center, but staffing provides the foundation for all the others.

Staffing

Data center staffing encompasses the three main groups that support the data center: Facility, IT, and Security Operations. Facility operations staff addresses management, building operations, and engineering and administrative support. Shift presence, maintenance, and vendor support are the areas that support the daily activities that can affect data center availability.

The Tier Standard: Operational Sustainability breaks Staffing into three categories:

• Staffing. The number of personnel needed to meet the workload requirements for specific maintenance activities and shift presence.

• Qualifications. The licenses, experience, and technical training required to properly maintain and operate the installed infrastructure.

• Organization. The reporting chain for escalating issues or concerns, with roles and responsibilities defined for each group.

In order to be fully effective, an enterprise must have the proper number of qualified personnel, organized correctly. Uptime Institute Tier Certification of Operational Sustainability and Management & Operations Stamp of Approval assessments repeatedly show that many data centers are less than fully effective because their staffing plan does not address all three categories.

Headcount

The first step in developing a staffing plan is to determine the overall headcount. Figure 2 can assist in determining the number of personnel required.

Figure 2. Factors that go into calculating staffing requirements

The initial steps address how to determine the total number of hours required for maintenance activities and shift presence. Maintenance hours include activities such as:

• Preventive maintenance

• Corrective maintenance

• Vendor support

• Project support

• Tenant work orders

The number of hours for all these activities must be determined for the year and attributed to each trade.

For shift presence, the data center must determine what level is required to support its business objective. As uptime objectives increase, so do staffing presence requirements. Besides deciding whether personnel are needed on site 24 x 7 or at some lesser level, the data center operator must also decide what level of technical expertise or trade is needed. This may result in two or three people on site for each shift. These decisions make it possible to determine the number of people and hours required to support shift presence for the year. Activities performed on shift include conducting rounds, monitoring the building management system (BMS), operating equipment, and responding to alarms. These jobs do not typically require all the hours allotted to a shift, so other maintenance activities can be assigned during that shift, which will reduce the total number of staffing hours required.

Once the total number of hours required by trade for maintenance and shift presence has been determined, divide it by the number of productive hours (hours/person/year available to perform work) to get the required number of personnel for each trade. The results will usually be fractional, and the fractions can be addressed by overtime (less than 10% overtime advised), contracting, or rounding up.
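The headcount calculation described above can be sketched in a few lines. This is a minimal illustration, not an Uptime Institute tool: the trade names, annual hour totals, and the 1,800 productive hours per person are hypothetical placeholders, and the class and function names are invented for the example. The only rule taken from the text is that overtime should stay under roughly 10%.

```python
import math
from dataclasses import dataclass


@dataclass
class TradeStaffing:
    trade: str
    required_hours: float    # annual maintenance + shift-presence hours for this trade
    productive_hours: float  # hours/person/year actually available to perform work

    @property
    def raw_headcount(self) -> float:
        """Required hours divided by productive hours (usually fractional)."""
        return self.required_hours / self.productive_hours


def staffing_options(t: TradeStaffing, max_overtime: float = 0.10) -> dict:
    """Compare covering the fraction with overtime versus rounding up."""
    raw = t.raw_headcount
    staff_down = math.floor(raw)
    # Overtime fraction each person would work if the headcount is rounded down.
    overtime = (raw / staff_down - 1.0) if staff_down > 0 else float("inf")
    return {
        "raw_headcount": raw,
        "round_down_staff": staff_down,
        "overtime_if_rounded_down": overtime,
        "overtime_ok": overtime <= max_overtime,
        "round_up_staff": math.ceil(raw),
    }


# HYPOTHETICAL inputs for illustration only.
electrical = staffing_options(TradeStaffing("electrical", 9400.0, 1800.0))
mechanical = staffing_options(TradeStaffing("mechanical", 6300.0, 1800.0))

for name, result in (("electrical", electrical), ("mechanical", mechanical)):
    print(name, {k: round(v, 3) if isinstance(v, float) else v for k, v in result.items()})
```

With these placeholder numbers, the electrical trade needs about 5.2 people, which five people could cover with under 5% overtime. The mechanical trade needs 3.5 people, and three people would need nearly 17% overtime, so rounding up to four or contracting the difference would be the better option.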

Qualification Levels

Data center personnel also need to be technically qualified to perform their assigned activities. As the Tier level or complexity of the data center increases, the qualification levels for the technicians also increase. They all need to have the required licenses for their trades and job description as well as the appropriate experience with data center operations. Lack of qualified personnel results in:

• Maintenance being performed incorrectly

• Poor quality of work

• Higher incidence of human error

• Inability to react and correct data center issues

Organized for Response

A properly organized data center staff understands the reporting chain of each organization, along with their individual roles and responsibilities. To aid that understanding, an organization chart showing the reporting chain and interfaces between Facilities, IT, and Security should be readily available and identify backups for key positions in case a primary contact is unavailable.

Impacts to Operations

The following examples from three actual operational data centers show how staffing inefficiencies may affect data center availability.

The first data center had two to three personnel per shift covering the data center 24 x 7, which is one of the larger staff counts that Uptime Institute typically sees. Further investigation revealed that only two individuals on the entire data center staff were qualified to operate and maintain equipment. All other staff had primary functions in other non-critical support areas. As a result, personnel unfamiliar with the critical data center systems were performing activities for shift presence. Although maintenance functions were being done, if anything was discovered during rounds, additional personnel had to be called in, increasing the response time before the incident could be addressed.

The second data center had very qualified personnel; however, the overall head count was low. This resulted in overtime rates far exceeding the advised 10% limit. The personnel were showing signs of fatigue that could result in increased errors during maintenance activities and rounds.

The third data center relied solely on a call-in method to respond to any incidents or abnormalities. Qualified technicians performed maintenance two or three days a week. No personnel were assigned to perform shift rounds. On-site security staff monitored alarms and had to call in maintenance technicians to respond to them. The data center was relying on the redundancy of systems and components to cover the time it took for technicians to respond and return the data center to normal operations after an incident.

Assessment Findings

Although these examples show deficiencies in individual data centers, many data centers are less than optimally staffed. In order to be fully effective in a management and operations behavior, the organization must be Proactive, Practiced, and Informed. Data centers may have the right number of personnel (Proactive), but they may not be qualified to perform the required maintenance or shift presence functions (Practiced), or they may not have well-defined roles and responsibilities to identify which group is responsible for certain activities (Informed).

Figure 3 shows the percentage of data centers that were found to have ineffective behaviors in the areas of staffing, qualifications, and organization.

Figure 3. Ineffective behaviors in the areas of staffing, qualifications, and organization.

Staffing (appropriate number of personnel) is found to be inadequate in only 7% of data centers assessed. However, personnel qualifications are found to be inadequate in twice as many data centers, and the way the data center is organized is found to be ineffective even more often. Although these percentages are not very high, staffing affects all data center management. Staffing shortcomings are found to affect maintenance, planning, coordination, and load management activities.

The effects of staffing inadequacies show up most often in data center operations. According to the Uptime Institute Abnormal Incident Reports (AIRs) database, the root cause of 39% of data center incidents falls into the operational area (see Figure 4). The causes can be attributed to human error stemming from fatigue, lack of knowledge on a system, and not following proper procedure, etc. The right, qualified staff could potentially prevent many of these types of incidents.

Figure 4. According to the Uptime Institute Abnormal Incident Reports (AIRs) database, the root cause of 39% of data center incidents falls into the operational area.

Adopting the proven Start with the End in Mind methodology provides the opportunity to justify the operations staff early in the planning cycle by clearly defining service levels and the staff required to support the business. Having those discussions with the business and correlating them to the cost of downtime should help management understand the returns on this investment.

Staffing 24 x 7

When developing an operations team to support a data center, the first and most crucial decision to make is to determine how often personnel need to be available on site. Shift presence duties can include a number of things, including facility rounds and inspections, alarm response, vendor and guest escorts, and procedure development. This decision must be made by weighing a variety of factors, including criticality of the facility to the business, complexity of the systems supporting the data center, and, of course, cost.

For business objectives that are critical enough to require Tier III or IV facilities, Uptime Institute recommends a minimum of one to two qualified operators on site 24 hours per day, 7 days per week, 365 days per year (24 x 7). Some facilities feel that having operators on site only during normal business hours is adequate, but they are running at a higher risk the rest of the time. Even with outstanding on-call and escalation procedures, emergencies may intensify quickly in the time it takes an operator to get to the site.
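The headcount implied by 24 x 7 coverage can be estimated with simple arithmetic. The sketch below is illustrative only: the function name and the figure of 1,800 productive hours per person per year are assumptions made for the example, not Uptime Institute guidance. Each site should substitute its own figure for leave, training, and shift handover.

```python
import math

# One on-site position staffed around the clock for a year.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def ftes_for_24x7(operators_on_site: int, productive_hours_per_fte: float) -> int:
    """Estimate the full-time staff needed to keep a given number of
    operators on site at all times.

    productive_hours_per_fte is a site-specific assumption: the hours one
    person can actually spend on shift in a year after leave and training.
    """
    if operators_on_site < 1:
        raise ValueError("at least one operator must be on site")
    if productive_hours_per_fte <= 0:
        raise ValueError("productive hours must be positive")
    coverage_hours = operators_on_site * HOURS_PER_YEAR
    return math.ceil(coverage_hours / productive_hours_per_fte)


# Hypothetical figure of 1,800 productive hours per person per year.
one_operator = ftes_for_24x7(1, 1800)
two_operators = ftes_for_24x7(2, 1800)
```

Under that assumed figure, keeping one operator on site at all times takes five people and keeping two on site takes ten, which is why the staffing decision needs to be made early in planning.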

Increased automation within critical facilities causes some to believe it appropriate to operate as a “Lights Out” facility. However, there is an increased risk to the facility any time there is not a qualified operator on site to react to an emergency. While a highly automated building may be able to make a correction autonomously from a single fault, those single faults often cascade and require a human operator to step in and make a correction.

The value of having qualified personnel on site is reflected in Figure 5, which shows the percentage of data center saves (incident avoidance) based on the AIRs database.

Figure 5. The percentage of data center saves (incident avoidance) based on the AIRs database

Equipment redundancy is the largest single category of saves at 38%. However, saves from staff performing proper maintenance and from on-site technicians who detected problems before they became incidents totaled 42%.

Justifying Qualified Staff

The cost of having qualified staff operating and maintaining a data center is typically one of the largest expenses, if not the largest, in a data center operating budget. Because of this, it is often a target for budget reduction. Communicating the risk to continuous operations may be the best way to fight off staffing cuts when budget cuts are proposed. Documenting the specific maintenance activities that will no longer be performed, or the reduced availability of personnel to monitor and respond to events, can support the importance of maintaining staffing levels.

Cutting budget in this way will ultimately prove counterproductive, result in ineffective staffing, and waste initial efforts to design and plan for the operation of a highly available and reliable data center. Properly staffing, and maintaining the appropriate staffing, can reduce the number and severity of incidents. In addition, appropriate staffing helps the facility operate as designed, ensuring planned reliability and energy use levels.

Rich Van Loo

Rich Van Loo is vice president, Operations for Uptime Institute. He performs Uptime Institute Professional Services audits and Operational Sustainability Certifications. He also serves as an instructor for the Accredited Tier Specialist course.

Mr. Van Loo’s work in critical facilities includes responsibilities ranging from projects manager of a major facility infrastructure service contract for a data center, space planning for the design/construct for several data center modifications, and facilities IT support. As a contractor for the Department of Defense, Mr. Van Loo provided planning, design, construction, operation, and maintenance of worldwide mission critical data center facilities. Mr. Van Loo’s 27-year career includes 11 years as a facility engineer and 15 years as a data center manager.

Good MOPs (methods of procedure) help humans manage the complexity inherent in data centers

By Alfonso Aranda with Lee Kirby

Data centers are complex techno–human systems. The number of interrelated and interdependent elements (including the human element) that interact in the normal operation of a data center and the large number of interactions that take place combine to generate this complexity. These interactions include any installation or decommissioning of IT equipment; expansion or reconfiguration of the electrical, mechanical, control infrastructure, and ancillary installations (fuel, fire, and water treatment systems, etc.); and any maintenance action, etc. Increased automation adds more working and failure modes, which adds complexity to the system.

Failure Types

Complexity generates a high risk of data center failure, which can originate in the infrastructure (electrical, mechanical, IT, communications) or in the interactions between the people who manage the infrastructure and the systems within it. Uptime Institute considers these interactions to be the leading cause of data center outages.

Failures of infrastructure can be contained and mitigated by redundancy in the data center, depending on the topology (Tier). Building a more robust and resilient infrastructure, however, does not minimize failures that originate in human interaction; additional mechanisms, organizational and operational in nature, must be deployed to prevent failures caused by human activities in the data center.

One such mechanism, the method of procedure (MOP), is a step-by-step sequence of actions to be executed by maintenance/operations technicians performing an operation or action that implies a change of state in any critical component of an installation. Such actions include switching breakers on or off, opening or closing sectioning valves, and other actions that could pose a risk to the normal operation of the data center. The purpose of an MOP is to control actions to ensure the desired outcome.

MOPs, SOPs, and EOPs

MOPs can be stand-alone documents or part of higher-level standard operating procedures (SOPs). In the latter case, the SOP is the overarching document that controls how changes are to be made during normal operations. SOPs begin and end the overall procedure. Often, they comprise several MOPs that spell out specific steps for portions of the SOP. SOPs are not as detailed as individual MOPs.

Building an SOP from individual MOPs makes it easier to revise procedures, because the same MOP can be used as part of multiple SOPs. For example, each CRAC (CRAC1, CRAC2, etc.) would have its own SOP describing a filter change, but every one of those SOPs would include the same MOP containing the how-to steps for changing out a filter. In that way, updating the procedure for changing the filter requires changes only to the MOP, not to every SOP.
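The reuse described above can be sketched as a small data structure in which several SOPs reference one shared MOP. This is a minimal illustration, not a prescribed document model: the class names, field names, identifier, and step wording are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MOP:
    """A reusable set of how-to steps (hypothetical fields)."""
    identifier: str
    title: str
    version: int
    steps: list[str]


@dataclass
class SOP:
    """An overarching procedure for one piece of equipment."""
    title: str
    target: str
    mops: list[MOP] = field(default_factory=list)

    def all_steps(self) -> list[str]:
        # Expand every referenced MOP into steps for this SOP's target.
        return [f"{self.target}: {step}" for mop in self.mops for step in mop.steps]


# One MOP for the filter change, written once (illustrative steps only).
filter_change = MOP(
    identifier="MOP-EXAMPLE-001",
    title="Change CRAC filter",
    version=1,
    steps=["Remove old filter", "Install new filter"],
)

# A separate SOP per CRAC, each referencing the same MOP object.
sops = [SOP(f"Filter change for {unit}", unit, [filter_change]) for unit in ("CRAC1", "CRAC2")]

# Revising the shared MOP once changes the steps of every SOP that uses it.
filter_change.steps.append("Record filter change in maintenance log")
filter_change.version += 1
```

Because each SOP holds a reference to the MOP rather than a copy of its steps, the single revision at the end is visible from both the CRAC1 and CRAC2 procedures.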

MOPs, SOPs, emergency operating procedures (EOPs), and site configuration procedures (SCPs) form the core of the data center site policies. EOPs are detailed written instructions that must be carried out sequentially when an abnormal event triggers the procedure.

Many of the characteristics of MOPs are also found in SOPs and EOPs, and all of these procedures can be applied, and actually are applied, in other fields (military, research, medical, etc.).

SCPs comprise those design and commissioning documents, drawings, schemes, tables, and studies that describe and document the normal configuration of the site, which sets up the initial conditions for the execution of any SOP or MOP.

Different sites may use different nomenclature in their site policies; however, to ensure their effectiveness, all site policies must cover the following areas:

• Description and administration of the normal operating configuration of the site (including setpoints, discrimination study, and normally open/normally closed breakers or valves, etc.)

• General overarching description of standard operations

• Detailed written instructions for executing changes of state or high/medium-risk intervention

• Detailed written instructions for executing emergency operations for responding to abnormal events

The site policies and actual changes of state of the infrastructure operate within a change management environment.

Components of an MOP

MOPs may contain different elements, fields, and details depending on the complexity of the activity to be carried out and the probability and impact of a failure in its execution. For instance, the field “expected result of the action” could be added to every step in the procedure.

In order to be effective, an MOP needs to be followed at the point and time of use by the appropriate person, supervised or not, depending on the company’s policies.

Every MOP should include a title, description of the procedure, author, approval authority/signature, date, unique identifier, and version control (see Figures 1-3).

Figure 1. Basic information to be included on all MOPs

Figure 2. Detailed step-by-step instructions form the basis of a good MOP. Note the columns for time and initials

Figure 3. The procedures continue, with a final step to inform the BMS operator that the procedure has finished, indicating cross-team communication.

MOPs should also include other information, including prerequisites, safety requirements, special tools and parts, procedure sequencing, and a back-out plan.
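A document template or review tool could check that a draft MOP carries all of the items listed above before it goes for approval. The sketch below assumes a MOP draft is held as a plain dictionary; the key names and the sample values are hypothetical and simply mirror the items named in the text.

```python
# Header items every MOP should carry, per the list in the text.
HEADER_FIELDS = (
    "title",
    "description",
    "author",
    "approval_authority",
    "date",
    "identifier",
    "version",
)

# Additional information a MOP should also include.
SUPPORTING_FIELDS = (
    "prerequisites",
    "safety_requirements",
    "special_tools_and_parts",
    "procedure_sequencing",
    "back_out_plan",
)


def missing_fields(mop: dict) -> list[str]:
    """Return the names of fields that are absent or left blank.

    An empty list (for example, no special tools needed) counts as
    present, because the author has addressed the item explicitly.
    """
    return [
        name
        for name in HEADER_FIELDS + SUPPORTING_FIELDS
        if mop.get(name) in (None, "")
    ]


# Hypothetical draft that is not yet ready for approval.
draft = {
    "title": "Change CRAC filter",
    "description": "Replace the air filter on one CRAC unit",
    "author": "A. Technician",
    "date": "2015-03-12",
    "identifier": "MOP-EXAMPLE-001",
    "version": 1,
    "prerequisites": ["Change approval obtained"],
    "special_tools_and_parts": [],
    "back_out_plan": "",
}
gaps = missing_fields(draft)
```

Here the draft would be flagged for its missing approval authority, safety requirements, and procedure sequencing, and for the blank back-out plan.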

Prerequisites include any actions that must be completed prior to performing the procedure, including verifying that all appropriate approvals (e.g., change approval, permit to work, access approval) have been obtained, that any required notifications have been issued, and that any required reconfiguration of the infrastructure (SCPs) has been performed.

The MOP should list any special tools and parts, in addition to the basic toolbox and inventory of parts that technicians usually carry, needed to do the job. Interruptions to retrieve missing tools or parts will usually extend the length of maintenance windows and increase risk to the data center. Extensions of maintenance windows or deviations from the schedule agreed upon during the change approval can lead to the maintenance window being aborted. Aborted changes (maintenance windows are one type of change) can lead to deferred maintenance, which also increases risk to a data center.

Safety requirements include lockout/tagout (LOTO) procedures and the verifications associated with them, the required presence of safety representatives, and the required use of personal protective equipment (PPE).

The most important parts of an MOP are the step-by-step instructions. Each and every step needs to be described in detail to indicate exactly what needs to be done and what the expected result is (e.g., alarms going off or indicator lights changing state).

MOPs must incorporate a mechanism that allows technicians to mark each step as completed, once it has been executed. This is normally achieved by adding a field for the technician to initial after carrying out the action indicated on that line. Additionally, incorporating a time stamp of the moment at which the action was completed provides a log of events in case a later analysis is needed.
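Where MOPs are kept electronically, the initials and time stamp columns could be modeled as follows. This is a minimal sketch under stated assumptions: the class, function, and field names are hypothetical, and the rule that a step cannot be signed off before the steps preceding it is an assumption drawn from the step-by-step nature of an MOP rather than a documented requirement.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Step:
    action: str
    expected_result: str
    initials: Optional[str] = None           # filled in by the technician
    completed_at: Optional[datetime] = None  # time stamp of completion


def sign_off(steps: list[Step], index: int, initials: str,
             when: Optional[datetime] = None) -> None:
    """Mark one step as completed, recording who did it and when."""
    if not initials.strip():
        raise ValueError("initials are required to sign off a step")
    # Assumed rule: steps are executed and signed off in order.
    if any(step.completed_at is None for step in steps[:index]):
        raise RuntimeError("an earlier step has not been signed off")
    if steps[index].completed_at is not None:
        raise RuntimeError("step is already signed off")
    steps[index].initials = initials
    steps[index].completed_at = when or datetime.now(timezone.utc)


def event_log(steps: list[Step]) -> list[tuple[datetime, str, str]]:
    """Completed steps as (time, initials, action) for later analysis."""
    return [(s.completed_at, s.initials, s.action)
            for s in steps if s.completed_at is not None]


# Illustrative steps only; real MOP steps are site specific.
procedure = [
    Step("Open breaker", "Indicator light changes state"),
    Step("Verify downstream load is on alternate source", "No alarms raised"),
    Step("Inform BMS operator that the procedure has finished", "Operator acknowledges"),
]
sign_off(procedure, 0, "AB", datetime(2015, 3, 12, 11, 0, tzinfo=timezone.utc))
```

The resulting log gives the sequence of completed actions with their time stamps, which is the record the text describes as useful when an event needs later analysis.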

Some MOPs incorporate pictures or diagrams to communicate certain steps and their outcomes.

Returning to State