Digital Realty Deploys Comprehensive DCIM Solution

Examining the scope of the challenge

By David Schirmacher

Digital Realty’s 127 properties cover around 24 million square feet of mission-critical data center space in over 30 markets across North America, Europe, Asia and Australia, and it continues to grow and expand its data center footprint. As senior VP of operations, it’s my job to ensure that all of these data centers perform consistently—that they’re operating reliably and at peak efficiency and delivering best-in-class performance to our 600-plus customers.

At its core, this challenge is one of managing information. Managing any one of these data centers requires access to large amounts of operational data.

If Digital Realty could collect all the operational data from every data center in its entire portfolio and analyze it properly, the company would have access to a tremendous amount of information that it could use to improve operations across its portfolio. And that is exactly what we have set out to do by rolling out what may be the largest-ever data center infrastructure management (DCIM) project.

Earlier this year, Digital Realty launched a custom DCIM platform that collects data from all the company’s properties, aggregates it into a data warehouse for analysis, and then reports the data to our data center operations team and customers using an intuitive browser-based user interface. Once the DCIM platform is fully operational, we believe we will have the ability to build the largest statistically meaningful operational data set in the data center industry.

Business Needs and Challenges

The list of systems that data center operators report using to manage their data center infrastructure often includes a building management system, an assortment of equipment-specific monitoring and control systems, possibly an IT asset management program and quite likely a series of homegrown spreadsheets and reports. But they also report that they don’t have access to the information they need. All too often, the data required to effectively manage a data center operation is captured by multiple isolated systems, or worse, not collected at all. Accessing the data necessary to effectively manage a data center operation continues to be a significant challenge in the industry.

At every level, data and access to data are necessary to measure data center performance, and DCIM is intrinsically about data management. In 451 Research’s DCIM: Market Monitor Forecast, 2010-2015, analyst Greg Zwakman writes that a DCIM platform, “…collects and manages information about a data center’s assets, resource use and operational status.” But 451 Research’s definition does not end there. The collected information “…is then distributed, integrated, analyzed and applied in ways that help managers meet business and service-oriented goals and optimize their data center’s performance.” In other words, a DCIM platform must be an information management system that, in the end, provides access to the data necessary to drive business decisions.

Over the years, Digital Realty successfully deployed both commercially available and custom software tools to gather operational data at its data center facilities. Some of these systems provide continuous measurement of energy consumption and give our operators and customers a variety of dashboards that show energy performance. Additional systems deliver automated condition and alarm escalation, as well as work order generation. In early 2012 Digital Realty recognized that the wealth of data that could be mined across its vast data center portfolio was far greater than current systems allowed.

In response to this realization, Digital Realty assembled a dedicated and cross-functional operations and technology team to conduct an extensive evaluation of the firm’s monitoring capabilities. The company also wanted to leverage the value of meaningful data mined from its entire global operations.

The team realized that the breadth of the company’s operations would make the project challenging even as it began designing a framework for developing and executing its solution. Neither Digital Realty nor its internal operations and technology teams were aware of any similar development and implementation project at this scale—and certainly not one done by an owner/operator.

As the team analyzed data points across the company portfolio, it found additional challenges. Those challenges included how to interlace the different varieties and vintages of infrastructure across the company’s portfolio, taking into consideration the broad deployment of Digital Realty’s Turn-Key Flex data center product, the design diversity of its custom solutions and acquired data center locations, the geographic diversity of the sites and the overall financial implications of the undertaking as well as its complexity.

Drilling Down

Many data center operators are tempted to first explore what DCIM vendors have to offer when starting a project, but taking the time to gain internal consensus on requirements is a better approach. Since no two commercially available systems offer the same features, assessing whether a particular product is right for an application is almost impossible without a clearly defined set of requirements. All too often, members of due diligence teams are drawn to what I refer to as “eye candy” user interfaces. While such interfaces might look appealing, the 3-D renderings and colorful “spinning visual elements” are rarely useful and can often be distracting to a user whose true goal is managing operational performance.

When we started our DCIM project, we took a highly disciplined approach to understanding our requirements and those of our customers. Harnessing all the in-house expertise that supports our portfolio to define the project requirements was itself a daunting task but essential to defining the larger project. Once we thought we had a firm handle on our requirements, we engaged a number of key customers and asked them what they needed. It turned out that our customers’ requirements aligned well with those our internal team had identified. We took this alignment as validation that we were on the right track. In the end, the project team defined the following requirements:

• The first of our primary business requirements was global access to consolidated data. We required that every one of Digital Realty’s data centers have access to the data, and we needed the capability to aggregate data from every facility into a consolidated view, which would allow us to compare the performance of various data centers across the portfolio in real time.

• Second, the data access system had to be highly secured and give us the ability to limit views based on user type and credentials. More than 1,000 people in Digital Realty’s operations department alone would need some level of data access. Plus, we have a broad range of customers who would also need some level of access, which highlights the importance of data security.

• The user interface also had to be extremely user-friendly. If we didn’t get that right, Digital Realty’s help desk would be flooded with requests on how to use the system. We required a clean navigational platform that is intuitive enough for people to access the data they need quickly and easily, with minimal training.

• Data scalability and mining capability were other key requirements. The amount of information Digital Realty has across its many data centers is massive, and we needed a database that could handle all of it. We also had to ensure that Digital Realty would get that information into the database. Digital Realty has a good idea of what it wants from its dashboard and reporting systems today, but in five years the company will want access to additional kinds of data. We don’t want to run into a new requirement for reporting and not have the historical data available to meet it.

Other business requirements included:

• Open bidirectional access to data that would allow the DCIM system to exchange information with other systems, including computerized maintenance management systems (CMMS), event management, procurement and invoicing systems

• Real-time condition assessment that allows authorized users to instantly see and assess operational performance and reliability at each local data center as well as at our central command center

• Asset tracking and capacity management

• Cost allocation and financial analysis to show not only how much energy is being consumed but also how that translates to dollars spent and saved

• The ability to pull information from individual data centers back to a central location using minimal resources at each facility

Each of these features was crucial to Digital Realty. While other owners and operators may share similar requirements, the point is that a successful project is always contingent on how much discipline is exercised in defining requirements in the early stages of the project, before users become enamored of the “eye candy” screens many of these products employ.

To Buy or Build?

With 451 Research’s DCIM definition—as well as Digital Realty’s business requirements—in mind, the project team could focus on delivering an information management system that would meet the needs of a broad range of user types, from operators to C-suite executives. The team wanted DCIM to bridge the gap between facilities and IT systems, thus providing data center operators with a consolidated view of the data that would meet the requirements of each user type.

The team discussed whether to buy an off-the-shelf solution or to develop one on its own. A number of solutions on the market appeared to address some of the identified business requirements, but the team was unable to find a single solution that had the flexibility and scalability required to support all of Digital Realty’s operational requirements. The team concluded it would be necessary to develop a custom solution.

Avoiding Unnecessary Risk

There is significant debate in the industry about whether DCIM systems should have control functionality—i.e., the ability to change the state of IT, electrical and mechanical infrastructure systems. Digital Realty strongly disagrees with the idea of incorporating this capability into a DCIM platform. By its very definition, DCIM is an information management system. To be effective, this system needs to be accessible to a broad array of users. In our view, granting broad access to a platform that could alter the state of mission-critical systems would be careless, despite security provisions that would be incorporated into the platform.

While Digital Realty and the project team excluded direct-control functionality from their DCIM requirements, they saw that real-time data collection and analytics could be beneficial to various control-system schemas within the data center environment. Because of this potential benefit, the project team took great care to allow for seamless data exchange between the core database platform and other systems. This feature will enable the DCIM platform to exchange data with discrete control subsystems in situations where the function would be beneficial. Further, making the DCIM a true browser-based application would allow authorized users to call up any web-accessible control system or device from within the application. These users could then key in the additional security credentials of that system and have full access to it from within the DCIM platform. Digital Realty believes this strategy fully leverages the data without compromising security.

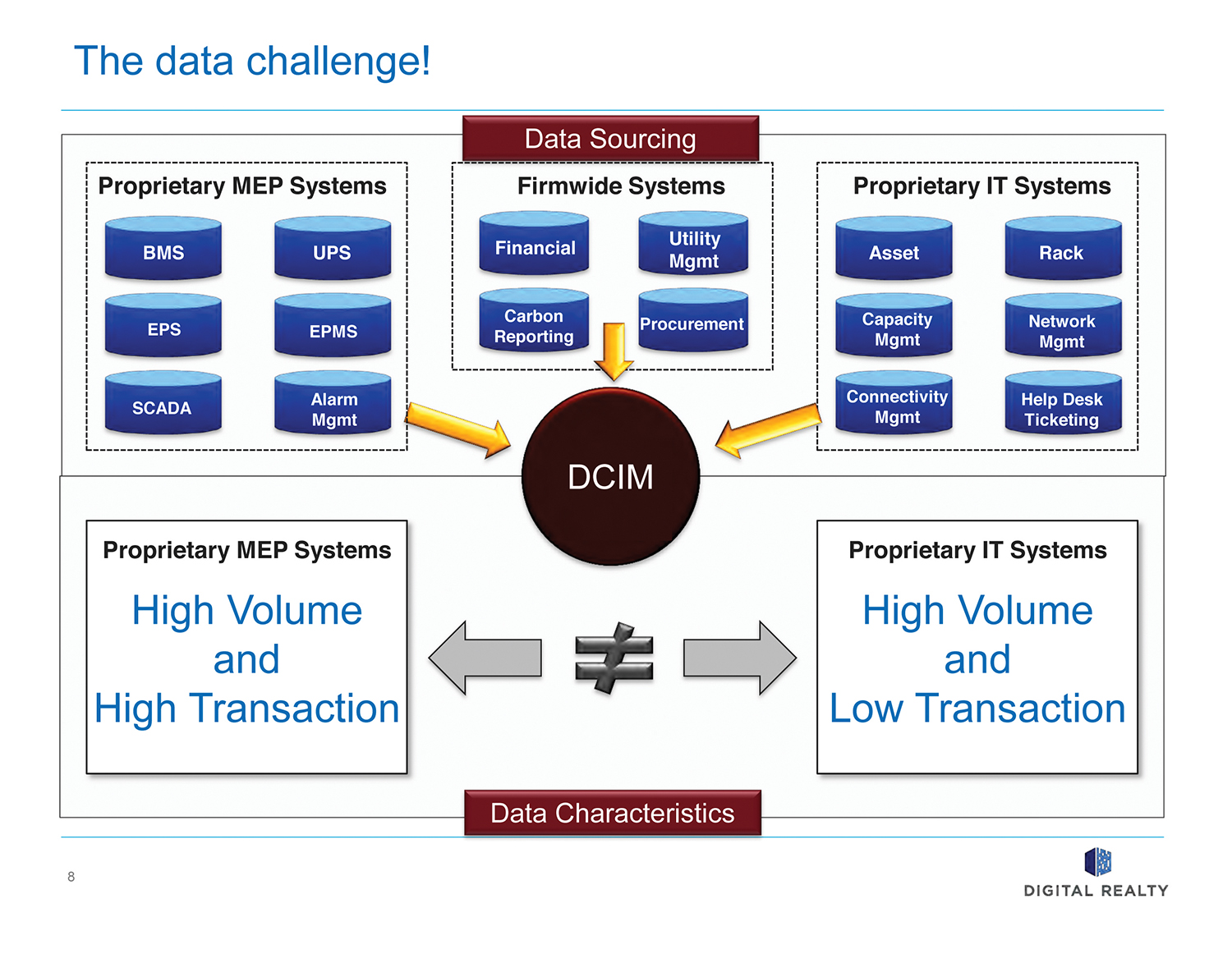

The Challenge of Data Scale

Managing the volume of data generated by a DCIM is among the most misunderstood areas of DCIM development and application. A DCIM platform collects, analyzes and stores a truly immense volume of data. Even a relatively small data center generates staggering amounts of information—billions of annual data transactions—that few systems can adequately support. By contrast, most building management systems (BMS) have very limited capability to manage significant amounts of historical data for the purposes of defining ongoing operational performance and trends.

Consider a data center with a 10,000-ft² data hall and a traditional BMS that monitors a few thousand data points associated mainly with the mechanical and electrical infrastructure. This system communicates in near real time with devices in the data center to provide control- and alarm-monitoring functions. However, the information streams are rarely collected. Instead they are discarded after being acted on. Most of the time, in fact, the information never leaves the various controllers distributed throughout the facility. Data are collected and stored at the server for a period of time only when an operator chooses to manually initiate a trend routine.

If the facility operators were to add an effective DCIM to the facility, it would be able to collect much more data. In addition to the mechanical and electrical data, the DCIM could collect power and cooling data at the IT rack level and for each power circuit supporting the IT devices. The DCIM could also include detailed information about the IT devices installed in the racks. Depending on the type and amount of data desired, data collection could easily require 10,000 points.

But the challenge facing this facility operator is even more complex. In order to evaluate performance trends, all the data would need to be collected, analyzed and stored for future reference. If the DCIM were to collect and store a value for each data point for each minute of operation, it would have more than five billion transactions per year. And this would be just the data coming in. Once collected, the five billion transactions would have to be sorted, combined and analyzed to produce meaningful output. Few, if any, of the existing technologies installed in a typical data center have the ability to manage this volume of information. In the real world, Digital Realty is trying to accomplish this same goal across its entire global portfolio.
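The five-billion figure follows directly from the numbers in this example. The short Python sketch below reproduces the arithmetic, assuming exactly 10,000 points, one stored value per point per minute and a 365-day year; the function and constant names are illustrative only and are not part of any DCIM product.

```python
# Illustrative arithmetic only: the point count and the one-minute
# sampling interval are taken from the example in the text.
POINTS = 10_000
SAMPLES_PER_POINT_PER_YEAR = 60 * 24 * 365  # minutes in a non-leap year


def annual_transactions(points: int,
                        samples_per_year: int = SAMPLES_PER_POINT_PER_YEAR) -> int:
    """Number of stored values per year for a given number of data points."""
    return points * samples_per_year


total = annual_transactions(POINTS)
print(f"{total:,}")  # 5,256,000,000
```

Sampling more often than once a minute, or adding points, scales the total linearly, which is why even a modest facility quickly outgrows systems that were never designed to retain history.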

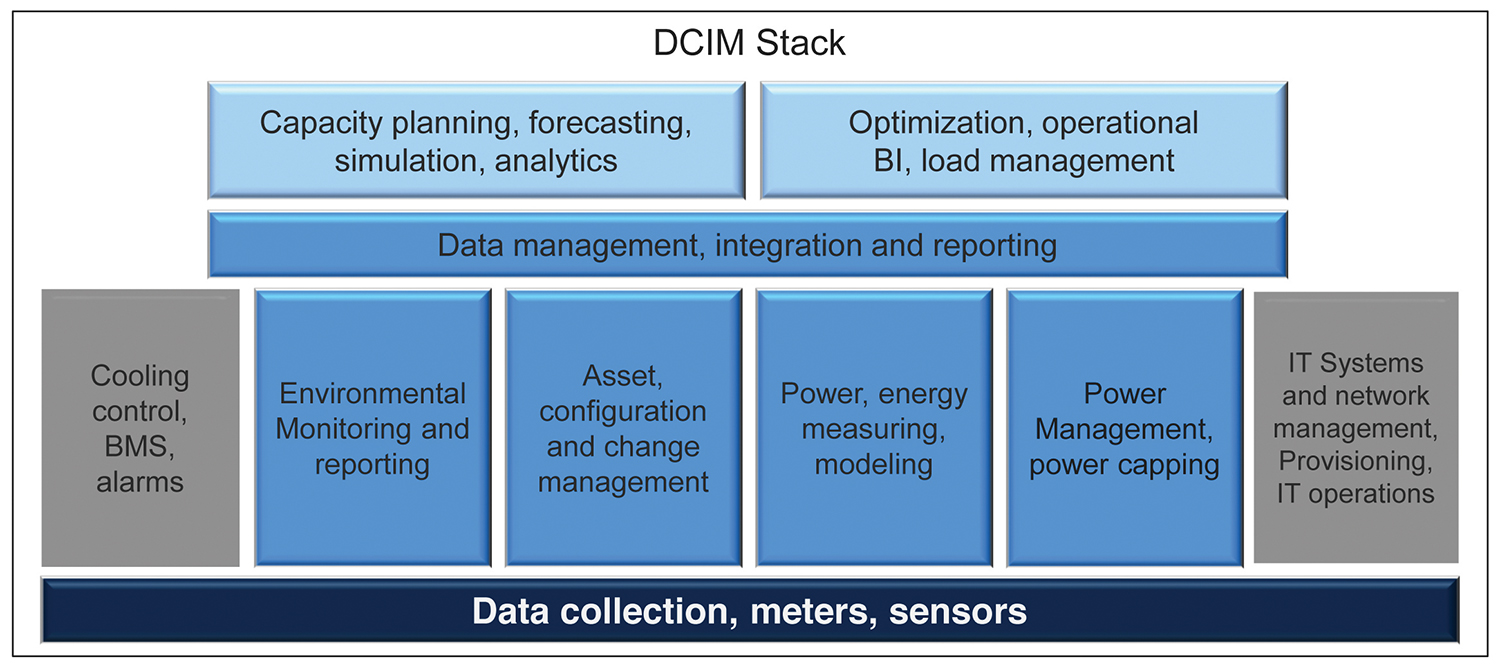

The Three Silos of DCIM

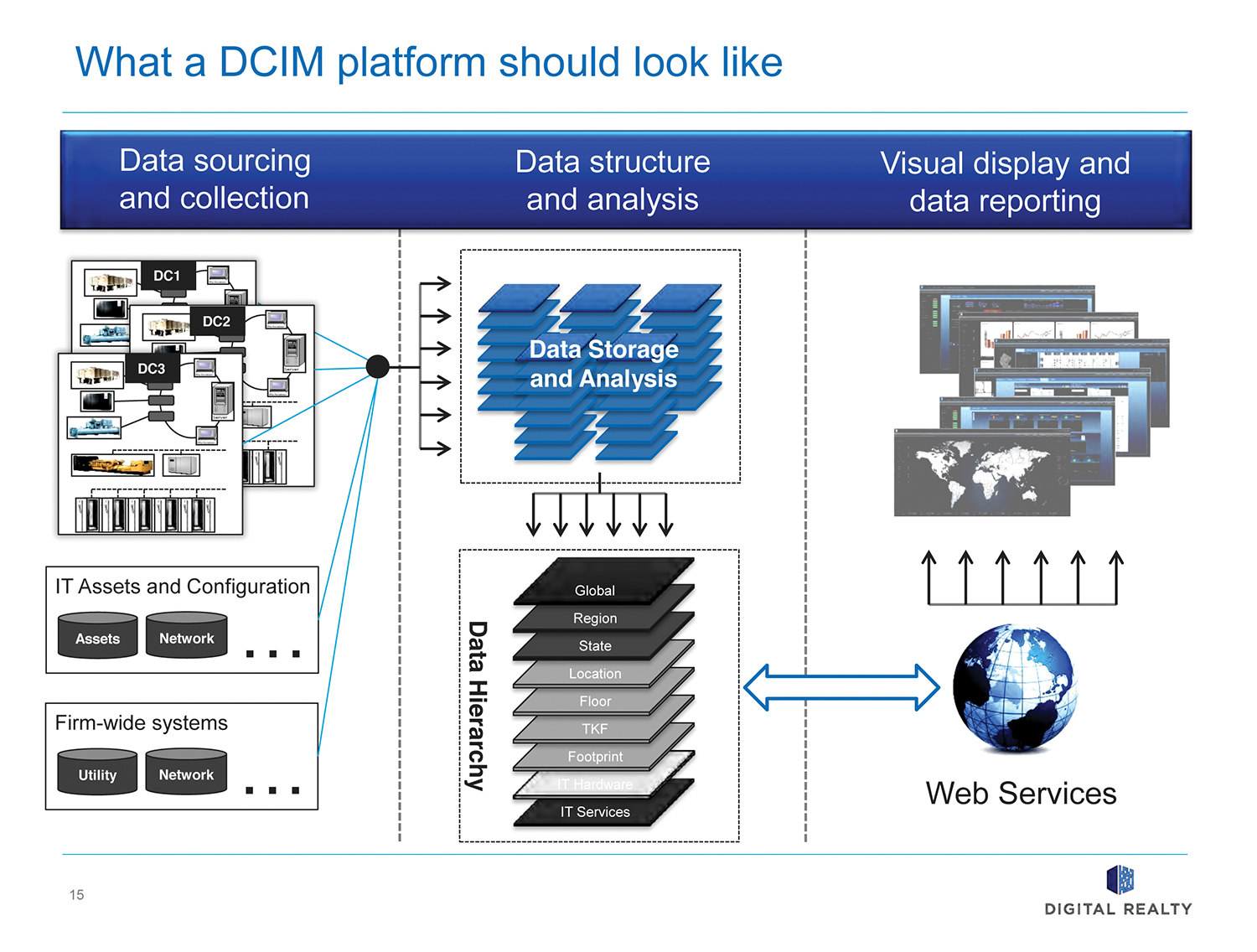

As Digital Realty’s project team examined the process of developing a DCIM platform, it found that the challenge included three distinct silos of data functionality: the engine for collection, the logical structures for analysis and the reporting interface.

The engine of Digital Realty’s DCIM must reach out and collect vast quantities of data from the company’s entire portfolio (see Figure 1). The platform will need to connect to all the sites and all the systems within these sites to gather information. This challenge requires a great deal of expertise in the communication protocols of these systems. In some instances, accomplishing this goal will require “cracking” data formats that have historically stranded data within local systems. Once collected, the data must be checked for integrity and packaged for reliable transmission to the central data store.
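One common way to make an integrity check possible is to send a digest alongside each batch of readings so the receiving side can detect corruption. The Python sketch below illustrates that general idea using only the standard library. It is an assumption-laden illustration, not a description of how Digital Realty's platform packages data: the envelope layout, the site and point names, and both function names are hypothetical.

```python
import hashlib
import json


def package_readings(site_id, readings):
    """Wrap readings in an envelope carrying a SHA-256 digest of the payload.

    Hypothetical envelope format, for illustration only.
    """
    payload = json.dumps({"site": site_id, "readings": readings}, sort_keys=True)
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return {"payload": payload, "sha256": digest}


def verify_package(package):
    """Recompute the digest at the receiving end and compare it to the one sent."""
    expected = hashlib.sha256(package["payload"].encode("utf-8")).hexdigest()
    return expected == package["sha256"]


# Hypothetical reading from a single rack power circuit.
pkg = package_readings(
    "site-001",
    [{"point": "rack-12/circuit-A/kW", "ts": "2013-08-01T00:00:00Z", "value": 3.2}],
)
intact = verify_package(pkg)

# Simulate corruption in transit by altering the payload after packaging.
tampered = dict(pkg, payload=pkg["payload"].replace("3.2", "9.9"))
corrupted_detected = not verify_package(tampered)
```

A plain digest only detects accidental corruption. Guarding against deliberate tampering would call for a keyed signature and an encrypted transport, which are outside the scope of this sketch.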

The project team also faced the challenge of creating the logical data structures needed to process, analyze and archive the data once the DCIM has successfully accessed and transmitted the raw data from each location to the data store. Dealing with 100-plus data centers, often with hundreds of thousands of square feet of white space each, increases the scale of the challenge exponentially. The project team overcame a major hurdle in addressing this challenge when it was able to define relationships between various data categories that allowed the database developers to prebuild and then volume-test data structures to ensure they were up to the challenge.

These data structures, or “data hierarchies” as Digital Realty’s internal team refers to them, are the “secret sauce” of the solution (see Figure 2). Many of the traditional monitoring and control systems in the marketplace require a massive amount of site-level point mapping that is often field-determined by local installation technicians. These points are then manually associated with the formulas necessary to process the data. This manual work is why these projects often take much longer to deploy and can be difficult to commission as mistakes are flushed out.

Figure 2. Digital Realty mapped all the information sources and their characteristics as a step toward developing its DCIM.

In this solution, these data relationships have been predefined and are built into the core database from the start. Since this solution is targeted specifically to a data center operation, the project team was able to identify a series of data relationships, or hierarchies, that can be applied to any data center topology and still hold true.

For example, an IT application such as an email platform will always be installed on some type of IT device or devices. These devices will always be installed in some type of rack or footprint in a data room. The data room will always be located on a floor, the floor will always be located in a building, the building in a campus or region, and so on, up to the global view. The type of architectural or infrastructure design doesn’t matter; the relationship will always be fixed.

The challenge is defining a series of these hierarchies that always test true, regardless of the design type. There are many opportunities for these kinds of hierarchies. Once designed, the hierarchies can be prebuilt, tested for validity and optimized to handle scale. This is exactly what we have done.

Having these structures in place facilitates rapid deployment and minimizes data errors. It also streamlines the dashboard analytics and reporting capabilities, as the project team was able to define specific data requirements and relationships and then point the dashboard or report at the layer of the hierarchy to be analyzed. For example, a single report template designed to look at IT assets can be developed and optimized and would then rapidly return accurate values based on where the report was pointed. If pointed at the rack level, the report would show all the IT assets in the rack; if pointed at the room level, the report would show all the assets in the room, and so on. Since all the locations are brought into a common predefined database, the query will always yield an apples-to-apples comparison regardless of any unique topologies existing at specific sites.
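The idea of pointing one report template at any layer of a fixed hierarchy can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Node` class, the level names, the device names and the `it_asset_report` function are all hypothetical, and an in-memory tree stands in for what would in practice be an enterprise database.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One layer of the hierarchy: building, floor, room, rack and so on."""
    level: str
    name: str
    assets: List[str] = field(default_factory=list)       # IT devices installed directly here
    children: List["Node"] = field(default_factory=list)  # next layer down


def it_asset_report(node: Node) -> List[str]:
    """Single report template: list every IT asset at or below the given node."""
    found = list(node.assets)
    for child in node.children:
        found.extend(it_asset_report(child))
    return found


# Hypothetical site: two racks in one room, on one floor of one building.
rack_a = Node("rack", "R01", assets=["mail-01", "mail-02"])
rack_b = Node("rack", "R02", assets=["db-01"])
room = Node("room", "Data Room 1", children=[rack_a, rack_b])
floor = Node("floor", "Floor 2", children=[room])
building = Node("building", "Building A", children=[floor])

print(it_asset_report(rack_a))    # ['mail-01', 'mail-02']
print(it_asset_report(room))      # ['mail-01', 'mail-02', 'db-01']
print(it_asset_report(building))  # ['mail-01', 'mail-02', 'db-01']
```

Because the report logic depends only on the parent-child relationship and not on any site-specific layout, the same function returns comparable results wherever it is pointed.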

The last challenge is creating the user interface, or front end, for the system. There is no point in collecting and processing the data if operators and customers can’t easily access it. A core requirement was that the front end needed to be a true browser-based application. Terms like “web-based” or “web-enabled” are often used in the control industry to disguise the user interface limitations of existing systems. Often, to achieve some of the latest visual and 3-D effects, vendors will require the user’s workstation to be configured with a variety of thin-client applications. In some cases, full-blown applications have to be installed. For Digital Realty, installing add-ins on workstations would be impractical given the number of potential users of the platform. In addition, in many cases, customers would reject these installs due to security concerns. A true browser-based application requires only a standard computer configuration, a browser and the correct security credentials (see Figure 3).

Intuitive navigation is another key user interface requirement. A user should need very little training to get to the information they need. Further, the information should be displayed to ensure quick and accurate assessment of the data.

Digital Realty’s DCIM Solution

Digital Realty set out to build and deploy a custom DCIM platform to meet all these requirements. Rollout commenced in May 2013, and as of August, the core team was ahead of schedule in terms of implementing the DCIM solution across the company’s global portfolio of data centers.

The name EnVision reflects the platform’s ability to look at data from different user perspectives. Digital Realty developed EnVision to allow its operators and customers insight into their operating environments and also to offer unique features specifically targeted to colocation customers. EnVision provides Digital Realty with vastly increased visibility into its data center operations as well as the ability to analyze information so it is digestible and actionable. It has a user interface with data displays and reports that are tailored to operators. Finally, it has access to historical and predictive data.

In addition, EnVision provides a global perspective allowing high-level and granular views across sites and regions. It solves the stranded data issue by reaching across all relevant data stores on the facilities and IT sides to provide a comprehensive and consolidated view of data center operations. EnVision is built on an enterprise-class database platform that allows for unlimited data scaling and analysis and provides intuitive visuals and data representations, comprehensive analytics, dashboard and reporting capabilities from an operator’s perspective.

Trillions of data points will be collected and processed by true browser-based software that is deployed on high-availability network architecture. The data collection engine offers real-time, high-speed and high-volume data collection and analytics across multiple systems and protocols. Furthermore, reporting and dashboard capabilities offer visualization of the interaction between systems and equipment.

Executing the Rollout

A project of this scale requires a broad range of skill sets to execute successfully. IT specialists must build and operate the high-availability compute infrastructure that the core platform sits on. Network specialists define the data transport mechanisms from each location.

Control specialists create the data integration for the various systems and data sources. Others assess the available data at each facility, determine where gaps exist and define the best methods and systems to fill those gaps.

The project team’s approach was to create and install the core, head-end compute architecture using a high-availability model and then to target several representative facilities for proof-of-concept. This allowed the team of specialists to work out the installation and configuration challenges and then to build a template so that Digital Realty could repeat the process successfully at other facilities. With the process validated, the program moved on to the full rollout phase, with multiple teams executing across the company’s portfolio.

Even as Digital Realty deploys version 1.0 of the platform, a separate development team continues to refine the user interface with the addition of reports, dashboards and other functions and features. Version 2.0 of the platform is expected in early 2014, and will feature an entirely new user interface, with even more powerful dashboard and reporting capabilities, dynamically configurable views and enhanced IT asset management capabilities.

The project has been daunting, but the team at Digital Realty believes the rollout of the EnVision DCIM platform will set a new standard of operational transparency, further bridging the gap between facilities and IT systems and allowing operators to drive performance into every aspect of a data center operation.

David Schirmacher is senior vice president of Portfolio Operations at Digital Realty, where he is responsible for overseeing the company’s global property operations as well as technical operations, customer service and security functions. He joined Digital Realty in January 2012. His more than 30 years of relevant experience includes turns as principal and Chief Strategy Officer for FieldView Solutions, where he focused on driving data center operational performance; and vice president, global head of Engineering for Goldman Sachs, where he focused on developing data center strategy and IT infrastructure for the company’s headquarters, trading floor, branch offices and data center facilities around the world. Mr. Schirmacher also held senior executive and technical positions at Compass Management and Leasing and Jones Lang LaSalle. Considered a thought leader within the data center industry, Mr. Schirmacher is president of 7×24 Exchange International and he has served on the technical advisory board of Mission Critical.