Too hot to handle? Operators to struggle with new chips

Standard IT hardware was a boon for data centers: for almost two decades, mainstream servers have had relatively constant power and cooling requirements. This technical stability moored the planning and design of facilities (for both new builds and retrofits) and has helped attract investment in data center capacity and technical innovation. Furthermore, many organizations are operating data centers near or beyond their design lifespan, in part because these facilities have been able to accommodate several IT refreshes without major upgrades.

This stability has helped data center designers and planners. Developers could confidently plan for an average design power of 4 kilowatts (kW) to 6 kW per rack and, when specifying thermal management criteria, follow the thermal guidelines of US industry body ASHRAE. This maturity and consistency in data center power density and cooling standards has, of course, depended on stable, predictable power consumption by processors and other server components.

The rapid rise in IT power density, however, now means that plausible design assumptions regarding future power density and environmental conditions are starting to depart from these standard, narrow ranges.

This increases technical and business risk, particularly because future scenarios may diverge widely. The business costs of incorrect design assumptions can be significant. Be too conservative (i.e., retain low-density approaches), and a data center may quickly become limited or even obsolete; be too technically aggressive (i.e., assume highly densified racks and heat reuse), and there is a risk of significant overspend on underutilized capacity and capabilities.

Facilities built today need to remain economically competitive and technically capable for 10 to 15 years. This means designers must make some assumptions speculatively, without knowing the specifications of future IT racks. As a result, engineers and decision-makers need to grapple with the uncertainty that will surround data center technical requirements for the second half of the 2020s and beyond.

Server heat turns to high

Driven by the rising power demands of IT silicon, server power and, in turn, typical rack power are both escalating. Extreme-density racks are also increasingly prevalent in technical computing, high-performance analytics and artificial intelligence training. New builds and retrofits will be more difficult to optimize for future generations of IT.

While server heat output remained relatively modest, it was possible to establish industry standards around air cooling. ASHRAE’s initial recommendations on supply temperature and humidity ranges (in 2004, almost 20 years ago) met the needs and risk appetites of most operators. ASHRAE subsequently encouraged incrementally wider ranges, helping drive industry gains in facilities’ energy efficiency.

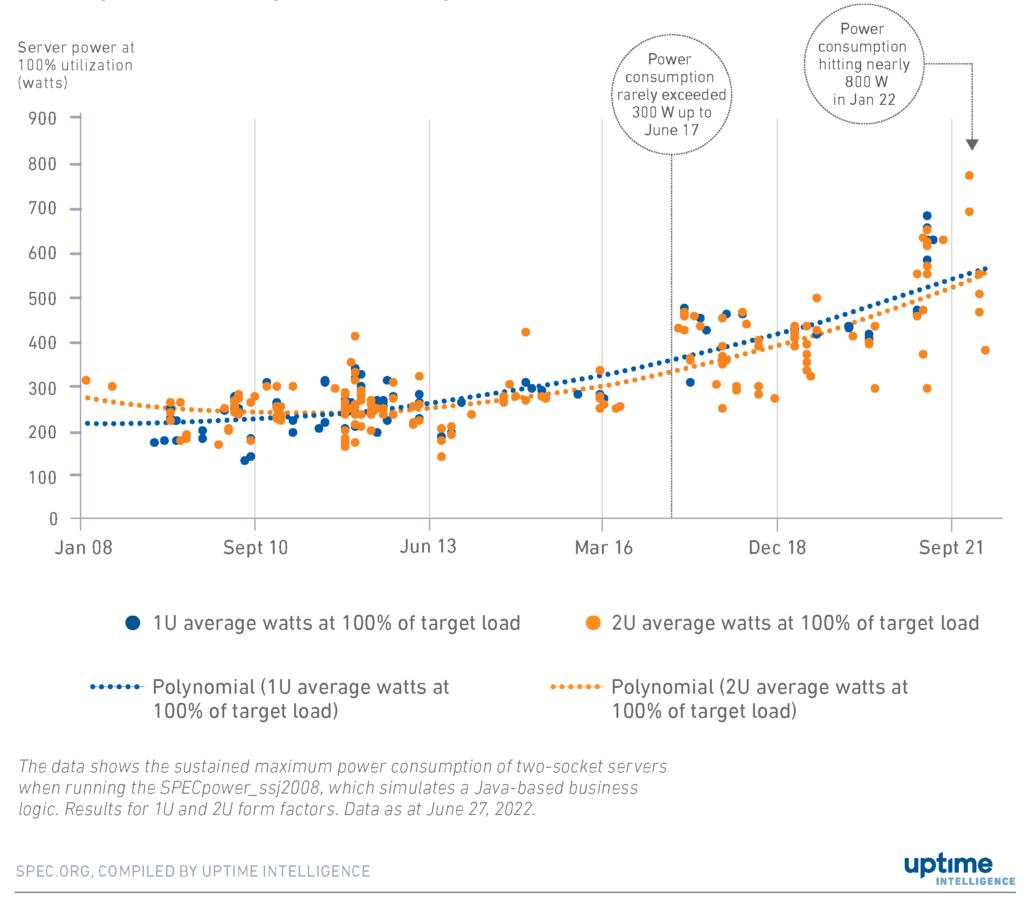

Uptime Institute research shows consistent, if modest, increases in rack power density over the past decade. Contrary to some aggressive expectations, the typical rack remains under 10 kW. This long-running trend has picked up pace more recently, and Uptime expects it to accelerate further. The uptick in rack power density is not exclusively due to more heavily loaded racks. It is also due to greater power consumption per server, driven primarily by the mass-market emergence of higher-powered server processors, which are attractive for their performance and, if well utilized, their often superior energy efficiency (Figure 1).

This trend will soon reach a point at which it starts to destabilize existing facility design assumptions. As semiconductor technology slowly but surely approaches its physical limits, there will be major consequences for both power delivery and thermal management (see Silicon heatwave: the looming change in data center climates).

“Hotter” processors are already a reality. Intel’s latest server processor series, expected to be generally available from January 2023, reaches thermal design power (TDP) ratings as high as 350 watts (W), with optional configurations above 400 W for server owners seeking maximum performance (compared with 120 W to 150 W only 10 years ago). Product roadmaps call for 500 W to 600 W TDP processors in a few years. As a result, mainstream “workhorse” servers will approach or exceed 1 kW of power consumption each, an escalation that will strain not only cooling but also power delivery within the server chassis.

Servers for high-performance computing (HPC) applications can act as an early warning of the cooling challenges that mainstream servers will face as their power consumption rises. In a 2021 update, ASHRAE defined a new thermal class (H1) for high-density servers that require restricted air supply temperatures (of up to 22°C / 71.6°F) to be cooled sufficiently. This restriction adds a cooling overhead that will worsen energy consumption and power usage effectiveness (PUE). HPC servers need it largely because they pack many tightly integrated, high-power components: accelerators such as graphics processing units can draw hundreds of watts each at peak power, in addition to server processors, memory modules and other electronics.
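PUE is the ratio of total facility power to IT equipment power, so any extra cooling power spent holding supply air at lower temperatures raises PUE even when the IT load is unchanged. The minimal Python sketch below illustrates that arithmetic. The load figures (a 1 MW IT load, 100 kW of non-cooling overhead, and 300 kW versus 400 kW of cooling power) are hypothetical, chosen only to show the direction of the effect; they are not Uptime or ASHRAE data.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    total_kw = it_kw + cooling_kw + other_overhead_kw
    return total_kw / it_kw


# Hypothetical 1 MW IT load with a fixed non-cooling overhead
# (e.g., power distribution losses and lighting).
IT_KW = 1000.0
OTHER_KW = 100.0

# Assumed cooling power at conventional supply temperatures.
baseline = pue(IT_KW, cooling_kw=300.0, other_overhead_kw=OTHER_KW)
# Assumed extra chiller/fan power to supply colder air to an H1-style zone.
restricted = pue(IT_KW, cooling_kw=400.0, other_overhead_kw=OTHER_KW)

print(f"baseline PUE: {baseline:.2f}")
print(f"restricted-temperature PUE: {restricted:.2f}")
```

Because the IT load sits in the denominator, the same 100 kW of added cooling would move PUE less in a larger facility; the sketch keeps IT power fixed to isolate the cooling overhead.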

The coming years will see more mainstream servers requiring similar restrictions, even without accelerators or densification. Beyond rising processor heat output, cooling is also constrained by markedly lower limits on processor case temperature for a growing number of models (e.g., 55°C, down from a typical 80°C to 82°C). Other types of data center chips, such as computing accelerators and high-performance switching silicon, are likely to follow suit. This is the key problem: removing greater volumes of lower-temperature heat is thermodynamically challenging.

Data centers strike the balance

Increasing power density may prove difficult at many existing facilities. Power or cooling capacity may be limited by budgetary or facility constraints, and upgrades may be needed for live electrical systems such as UPS systems, batteries, switchgear and generators. This is expensive and carries operational risks. Without such upgrades, however, more powerful IT hardware will result in considerable stranded space. In a few years, just a handful of servers will together draw more than 5 kW, and a quarter-rack of richly configured servers can reach 10 kW if concurrently stressed.
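The stranded-space effect comes down to simple arithmetic: when the per-rack power budget runs out before the physical space does, the remaining rack units sit empty. The Python sketch below assumes a 42U rack, 1U servers and a 6 kW rack budget (the upper end of the traditional 4 kW to 6 kW design range); the rack height, form factor and per-server wattages (500 W and 1,000 W) are illustrative assumptions, not vendor specifications. Power is kept in integer watts so that floor division is exact.

```python
RACK_UNITS = 42  # assumed rack height in rack units (U)


def servers_within_budget(rack_budget_w: int, server_w: int, server_u: int = 1) -> int:
    """Servers that fit in one rack, limited by whichever runs out first: power or space."""
    by_power = rack_budget_w // server_w
    by_space = RACK_UNITS // server_u
    return min(by_power, by_space)


def stranded_fraction(rack_budget_w: int, server_w: int, server_u: int = 1) -> float:
    """Fraction of rack units left empty because the power budget is exhausted first."""
    used_u = servers_within_budget(rack_budget_w, server_w, server_u) * server_u
    return 1 - used_u / RACK_UNITS


def partial_rack_power_w(fraction_of_rack: float, server_w: int, server_u: int = 1) -> int:
    """Power drawn if a fraction of the rack is filled and every server runs at full power."""
    servers = int(RACK_UNITS * fraction_of_rack) // server_u
    return servers * server_w


if __name__ == "__main__":
    budget_w = 6000  # assumed per-rack design budget
    for server_w in (500, 1000):  # hypothetical current vs near-future 1U servers
        n = servers_within_budget(budget_w, server_w)
        share = stranded_fraction(budget_w, server_w)
        print(f"{server_w} W servers: {n} per rack, {share:.0%} of rack space stranded")
    # A quarter-rack of ~1 kW servers under concurrent load.
    print(f"quarter rack: {partial_rack_power_w(0.25, 1000) / 1000:.1f} kW")
```

Under these assumptions, a quarter-rack of 1 kW servers already draws about 10 kW, consistent with the figure above, while a 6 kW budget leaves most of the rack empty.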

Starting with a clean sheet, designers can optimize new data centers for a significantly denser IT configuration. There is, however, a business risk of overspending on costly electrical gear, unless this is managed by designing flexible power capacity and technical space (e.g., prefabricated modular infrastructure). Power requirements for the next 10 to 15 years are still too far ahead to be forecast with confidence. Major chipmakers are ready to offer technological guidance covering the next three to five years, at most. Will typical IT racks reach average power capacities of 10 kW, 20 kW or even 30 kW by the end of the decade? What will be the highest power densities a new data center will be expected to handle? Today, even the best informed can only speculate.

Thermal management is becoming tricky too. Any future cooling strategy involves multiple intricacies. Many “legacy” facilities are limited in their ability to supply the air flow necessary to cool high-density IT. Moreover, the lower supply temperatures that high-density racks and upcoming next-generation servers typically need (or prefer) demand more cooling power; without them, IT performance can suffer, because modern silicon throttles itself when it exceeds temperature limits. To limit the hit on facilities’ energy efficiency, ASHRAE recommends dedicated low-temperature areas.
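The air-flow constraint follows from a basic heat balance: the volumetric airflow V needed to remove heat P with an air temperature rise ΔT across the IT equipment is V = P / (ρ · c_p · ΔT), so required airflow grows in proportion to rack power. The Python sketch below assumes typical values for air density (about 1.2 kg/m³) and specific heat (about 1,005 J/(kg·K)) and an illustrative 12 K temperature rise; these are assumptions for illustration, not design figures.

```python
AIR_DENSITY_KG_M3 = 1.2   # assumed: air near sea level at roughly 20°C
AIR_CP_J_KG_K = 1005.0    # assumed: specific heat of dry air at constant pressure
M3S_TO_CFM = 2118.88      # cubic metres per second to cubic feet per minute


def required_airflow_m3s(heat_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry heat_w away with an air temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)


if __name__ == "__main__":
    for rack_kw in (5, 10, 20, 30):
        flow = required_airflow_m3s(rack_kw * 1000, delta_t_k=12.0)
        print(f"{rack_kw:>2} kW rack: {flow:.2f} m^3/s (~{flow * M3S_TO_CFM:,.0f} CFM)")
```

Doubling rack power doubles the required airflow at the same temperature rise, which is why air delivery, not just chiller capacity, can become the limiting factor in older facilities.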

A growing number of data center operators will consider support for direct liquid cooling (DLC), often as a retrofit. Although DLC engineering and operations practices have matured and now offer a wider array of options (cold plates or immersion) than ever before, DLC deployment will bring its own challenges. A current lack of standardization raises fears of vendor lock-in and supply-chain constraints for key parts, as well as reduced choice in server configurations. In addition, large parts of enterprise IT infrastructure (chiefly storage systems and networking equipment) cannot currently be liquid-cooled.

Although IT vendors are offering (and will continue to offer) more server models with integrated DLC systems, this approach requires bulk buying of IT hardware. For facilities management teams, this will lead to technical fragmentation involving multiple DLC vendors, each with its own set of requirements. Data center designers and operations teams will have to plan not only for mixed-density workloads, but also for a more diverse technical environment. The finer details of DLC maintenance procedures, particularly for immersion systems, will be unfamiliar to some data center staff, highlighting the importance of training and codified procedures over muscle memory. The propensity for human error can only increase in such an environment.

The coming changes in data center IT will be powerful. Semiconductor physics is fundamentally the root cause of this dynamic, but infrastructure economics is driving it: more powerful chips tend to help deliver infrastructure efficiency gains and, through the applications they run, generate more business value. In a time of technological flux, data center operators will find multiple opportunities to gain an edge over peers and competitors, but not without some risk. Going forward, adaptability is key.

Jacqueline Davis, Research Analyst, Uptime Institute

Max Smolaks, Research Analyst, Uptime Institute

Getty Images

2020