Generative AI and global power consumption: high, but not that high

In the past year, Uptime Intelligence has been asked more questions about generative AI and its impact on the data center sector than any other topic. The questions come from enterprise and colocation operators, suppliers of a wide variety of equipment and services, regulators and the media.

Most of the questions concern power consumption. The compute clusters — necessary for the efficient creation and use of generative AI models — are enormously power-hungry, creating a surge in projected demand for capacity and, for operators, challenges in power distribution and cooling in the data center.

The questions about power typically fall into one of three groups. The first centers on the question, “What does generative AI mean for density, power distribution and cooling in the data center?”

Uptime Intelligence’s view is that the claims of density distress are exaggerated. Although the Nvidia-accelerated systems used to train generative AI models are much denser than usual, they are not extreme and can be managed by spreading them across more cabinets. Because density is not the focus here, further Uptime Intelligence reports will cover it.

Generative AI also has an indirect effect on most data center operators: added pressure on the supply chain. Lead times for some key equipment, such as engine generators, transformers and power distribution units, are already abnormally long, and unforeseen demand from generative AI for even more capacity will certainly not help. Density and these indirect impacts were first addressed in the Uptime Intelligence report Hunger for AI will have limited impact on most operators.

The second set of questions relates to the availability of power in a given region or grid — and especially of low-carbon energy. This is also a critical, practical issue that operators and utilities are trying to understand. The largest clusters for training large generative AI models comprise many hundreds of server nodes and draw several megawatts of power at full load. However, the issues are largely localized, and they are also not the focus here.

Generative AI and global power

Instead, the focus of this report is the third typical question, “How much global power will AI use or require?” While in immediate practical terms this is not a key concern for most data center operators, the headline numbers will shape media coverage and public opinion, which ultimately will drive regulatory action.

However, some of the AI power figures circulating in the press and at conferences, cited by key influencers and other parties, are extremely high. If accurate, these figures suggest major infrastructural and regulatory challenges ahead; if unduly high, they may prompt regulators to overreact.

One of the forecasts at the higher end comes from Schneider Electric’s respected research team, which estimated AI power demand at 4 gigawatts (GW) in 2023, equivalent to 35 terawatt-hours (TWh) if annualized, rising to around 15 GW (131 TWh if annualized) in 2028 (see Schneider White Paper 110: The AI Disruption: Challenges and Guidance for Data Center Design). These figures most likely include all AI workloads, not only new generative models.
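
The "if annualized" conversions assume a constant average load running for a full year. A minimal Python sketch under that assumption reproduces the 35 TWh and 131 TWh figures from the GW values; the function name annualized_twh is illustrative, and leap years are ignored.

```python
# Convert a constant average load (GW) into annual energy (TWh).
HOURS_PER_YEAR = 8760  # 365 days x 24 h; leap years ignored


def annualized_twh(average_gw: float) -> float:
    """Energy consumed in one year by a constant load of average_gw gigawatts."""
    gwh = average_gw * HOURS_PER_YEAR  # GW x h = GWh
    return gwh / 1000                  # 1 TWh = 1,000 GWh


for year, gw in [(2023, 4.0), (2028, 15.0)]:
    print(f"{year}: {gw:.0f} GW -> {annualized_twh(gw):.1f} TWh/yr")
# 2023: 4 GW -> 35.0 TWh/yr
# 2028: 15 GW -> 131.4 TWh/yr
```

Real loads vary over the year, so an annualized figure is an upper-bound style conversion of a point-in-time demand estimate, not a measurement of energy actually consumed.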

Alex de Vries of the digital trends platform Digiconomist, whose calculations of Bitcoin energy use have been influential, has estimated AI workload energy use at 85 TWh to 134 TWh by 2027. These figures suggest AI could add 30% to 50% or more to global data center power demand over the next few years (see below).
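
The 30% to 50% range follows from dividing the forecasts by a global data center baseline. The sketch below assumes the 300 TWh midrange figure used later in this report and holds it constant, which overstates the share if the non-AI baseline itself grows; the names are illustrative.

```python
# Express AI energy forecasts as a share of a fixed data center baseline.
BASELINE_TWH = 300.0  # assumed midrange global data center consumption, TWh/yr


def added_share_pct(ai_twh: float, baseline_twh: float = BASELINE_TWH) -> float:
    """AI energy as a percentage of a fixed (non-growing) baseline."""
    return 100.0 * ai_twh / baseline_twh


forecasts_twh = {
    "de Vries, 2027 (low)": 85.0,
    "de Vries, 2027 (high)": 134.0,
    "Schneider, 2028 (annualized)": 131.0,
}
for label, twh in forecasts_twh.items():
    print(f"{label}: +{added_share_pct(twh):.0f}% on {BASELINE_TWH:.0f} TWh")
```

With the 300 TWh baseline this gives roughly 28% to 45%; against the low 200 TWh estimate, the same forecasts would exceed 40%.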

Uptime Intelligence considers these scenarios overly bullish for two reasons. First, estimates of power for all AI workloads are problematic, both because of taxonomy (what counts as AI) and because such use is nearly impossible to track meaningfully. Moreover, most forms of AI are already accounted for in capacity planning; generative AI is the unexpected exception. Second, projections that extend beyond a 12- to 18-month horizon carry high uncertainty.

Some of the numbers cited above imply a roughly thousand-fold increase in AI compute capacity within 3 to 4 years of the first quarter of 2024, once the evolution of hardware and software technology is taken into account. Such growth would not only be unprecedented but would also rest on weak business fundamentals, given the hundreds of billions of dollars it would take to build all that AI infrastructure.
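
Compute capacity is power multiplied by work done per unit of energy, so a compute multiple is the product of a power multiple and a cumulative efficiency gain. The Python sketch below makes that decomposition explicit. The function names and all inputs are hypothetical; they are not the figures behind Uptime Intelligence's thousand-fold estimate.

```python
def implied_compute_multiple(power_multiple: float,
                             annual_efficiency_gain: float,
                             years: float) -> float:
    """Growth in compute capacity if power grows by power_multiple while
    performance per watt improves by annual_efficiency_gain each year."""
    # compute = power x (work per unit of energy), so the multiples multiply
    return power_multiple * annual_efficiency_gain ** years


def required_efficiency_gain(compute_multiple: float,
                             power_multiple: float,
                             years: float) -> float:
    """Annual perf-per-watt gain needed to reach compute_multiple
    with only power_multiple more power."""
    return (compute_multiple / power_multiple) ** (1.0 / years)


# Hypothetical inputs: power up 20x over 3 years, perf per watt doubling yearly.
print(implied_compute_multiple(20.0, 2.0, 3.0))                # 160.0
print(round(required_efficiency_gain(1000.0, 20.0, 3.0), 2))   # 3.68
```

Read the other way, a thousand-fold compute increase with only 20 times more power would require performance per watt to improve about 3.7 times every year for three years.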

Uptime Intelligence takes a more conservative view with its estimates — but these estimates still indicate rapidly escalating energy use by new large generative AI models.

To reach our numbers, we estimated current shipments and the installed base of Nvidia-based systems through the first quarter of 2025, along with the likely power consumption associated with their use. Systems based on Nvidia’s GPU-derived data center accelerators dominate the market for generative AI accelerators and will continue to do so until at least mid-2025, owing to an entrenched advantage in the software toolchain.

We have considered a range of technical and market factors to calculate the power requirements of generative AI infrastructure: workload profiles (workload activity, utilization and load concurrency in a cluster), the shifting balance between training and inferencing, and the average PUE of the data center housing the generative AI systems.
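
A bottom-up estimate of this kind can be sketched as installed IT power, scaled by an average load factor and the hours in a year, then by PUE. The Python sketch below is a simplified illustration, not Uptime Intelligence's model: the Fleet class, its fields and the example values are all hypothetical, and a single load factor per workload type stands in for workload activity, utilization and load concurrency.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Fleet:
    """Hypothetical summary of an installed generative AI fleet."""
    installed_it_mw: float  # nameplate IT power of installed systems, MW
    training_share: float   # fraction of the fleet running training (0..1)
    training_load: float    # average draw as a fraction of nameplate when training
    inference_load: float   # average draw as a fraction of nameplate when inferencing
    pue: float              # facility energy / IT energy


def annual_facility_twh(f: Fleet) -> float:
    """Annual facility energy (TWh) for the fleet, including PUE overhead."""
    # Blend the load factors by the training/inference split
    avg_load = (f.training_share * f.training_load
                + (1 - f.training_share) * f.inference_load)
    it_mwh = f.installed_it_mw * avg_load * HOURS_PER_YEAR
    return it_mwh * f.pue / 1_000_000  # MWh -> TWh


# Illustrative inputs only; these are not Uptime Intelligence's parameters.
example = Fleet(installed_it_mw=1000, training_share=0.5,
                training_load=0.8, inference_load=0.5, pue=1.4)
print(f"{annual_facility_twh(example):.2f} TWh/yr")  # 7.97 TWh/yr
```

Shifting the training/inference split or the load factors changes the result linearly, which is why the balance between training and inferencing matters as much as the installed base.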

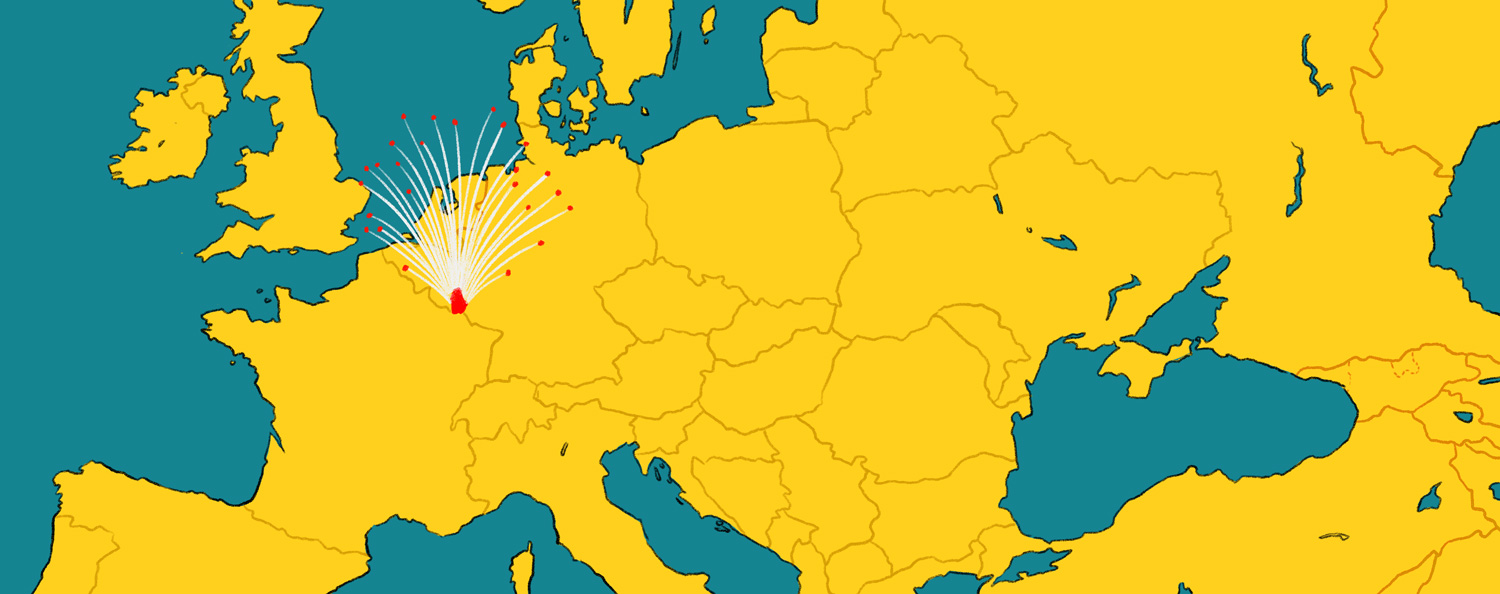

This data supports our earlier view that, beyond a few dozen large sites, the initial impact of generative AI is limited. For the first quarter of 2024, we estimate the annualized energy use of installed Nvidia systems at around 5.8 TWh. This figure, however, will rise rapidly if Nvidia meets its sales and shipment forecasts. By the first quarter of 2025, the installed generative AI infrastructure could account for 21.9 TWh of annual energy consumption.
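
Converting these annualized figures back to an average load makes them easier to compare with demand forecasts quoted in GW, and shows the implied year-on-year growth. A minimal Python sketch, assuming a constant load over the year; the function name is illustrative.

```python
HOURS_PER_YEAR = 8760


def average_gw(annual_twh: float) -> float:
    """Constant load (GW) that would consume annual_twh over one year."""
    return annual_twh * 1000 / HOURS_PER_YEAR  # TWh -> GWh, then / hours


q1_2024_twh, q1_2025_twh = 5.8, 21.9  # Uptime estimates, annualized
print(f"Q1 2024: {average_gw(q1_2024_twh):.2f} GW")  # 0.66 GW
print(f"Q1 2025: {average_gw(q1_2025_twh):.2f} GW")  # 2.50 GW
print(f"Growth: {q1_2025_twh / q1_2024_twh:.1f}x")   # 3.8x
```

The comparison with Schneider Electric's 4 GW estimate for 2023 is only indicative: that figure most likely covers all AI workloads, whereas these estimates cover installed Nvidia-based generative AI systems.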

We expect these numbers to shift as new information emerges, but they are indicative. To put them into perspective, total global data center energy use has been variously estimated at between 200 TWh and 450 TWh per year for the period 2020 to 2022. (The methodologies and terms of the various studies differ widely; together they suggest that data centers use between 1% and 4% of all electricity consumed.) Taking a midrange figure of 300 TWh for annual global data center energy consumption, Uptime Intelligence puts generative AI’s annualized energy use at around 1.9% of total data center consumption in the first quarter of 2024. This could reach 7.3% by the first quarter of 2025.
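
Because published baselines span 200 TWh to 450 TWh, the share attributed to generative AI depends heavily on which baseline is chosen. The Python sketch below computes simple ratios across the low, midrange and high baselines quoted above; it does not model growth in non-AI data center demand, and the names are illustrative.

```python
def share_pct(ai_twh: float, total_twh: float) -> float:
    """Generative AI energy as a percentage of total data center energy."""
    return 100.0 * ai_twh / total_twh


estimates_twh = {"Q1 2024": 5.8, "Q1 2025": 21.9}  # annualized estimates
baselines_twh = [200.0, 300.0, 450.0]  # low, midrange and high published totals

for period, twh in estimates_twh.items():
    cells = ", ".join(f"{b:.0f} TWh: {share_pct(twh, b):.1f}%"
                      for b in baselines_twh)
    print(f"{period} -> {cells}")
```

Against the low baseline the Q1 2025 figure exceeds 10%; against the high baseline it stays under 5%.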

Outlook

These numbers indicate that generative AI’s power use is not currently disruptive, given the data center sector’s explosive growth in recent years. Generative AI’s share of power consumption is outsized relative to its footprint because of its likely very high utilization. Even by the end of 2025, its share of data center capacity by power will remain in the low-to-mid single digits.

Nevertheless, this is a dramatic increase, and it suggests that generative AI could account for a much larger share of overall data center power use in the years ahead. Inevitably, the surge will have to slow, in large part because newer AI systems built around vastly more efficient accelerators will displace the installed base en masse rather than add net new infrastructure.

While Uptime Intelligence believes some estimates of generative AI power use (and of data center power use) are too high, the sharp uptick, and the concentration of demand in certain regions, will still be enough to attract regulatory attention. Uptime Intelligence will continue to analyze the development of AI infrastructure and its impact on the data center industry.

Andy Lawrence, Executive Research Director

Daniel Bizo, Research Director dbizo@uptimeinstitute.com

2020
