Cybersecurity and the cost of human error

Cyber incidents are increasing rapidly. In 2024, the number of outages caused by cyber incidents was twice the average of the previous four years, according to Uptime Institute’s annual report on data center outages (see Annual outage analysis 2025). More operational technology (OT) vendors are experiencing significant increases in cyberattacks on their systems. Data center equipment vendor Honeywell analyzed hundreds of billions of system logs and 4,600 events in the first quarter of 2025, identifying 1,472 new ransomware extortion incidents, a 46% increase over the fourth quarter of 2024 (see Honeywell’s 2025 Cyber Threat Report). Beyond the initial impact, cyberattacks can have lasting consequences for a company’s reputation and balance sheet.

Cyberattacks increasingly exploit human error

Cyberattacks on data centers often exploit vulnerabilities, some of which stem from simple, preventable errors and others from overlooked systemic issues. Human error, such as failing to follow procedures, can create vulnerabilities that attackers then exploit. For example, staff might forget to apply regular system patches or delay firmware updates, leaving systems exposed. To counter this, companies implement policies and procedures to ensure employees carry out preventive actions consistently.

In many cases, data center operators may well be aware that elements of their IT and OT infrastructure have certain vulnerabilities. These vulnerabilities may stem from policy noncompliance or from policies that lack appropriate protocols to defend against hackers. Often, employees lack training on how to recognize and respond to common social engineering techniques used by hackers. Tactics such as email phishing, impersonation and ransomware are increasingly targeting organizations with complex supply chain and third-party dependencies.

Cybersecurity incidents involving human error often follow similar patterns. Attacks may begin with some form of social engineering to obtain login credentials. Once inside, the attacker moves laterally through the system, exploiting small errors to cause systemic damage (see Table 1).

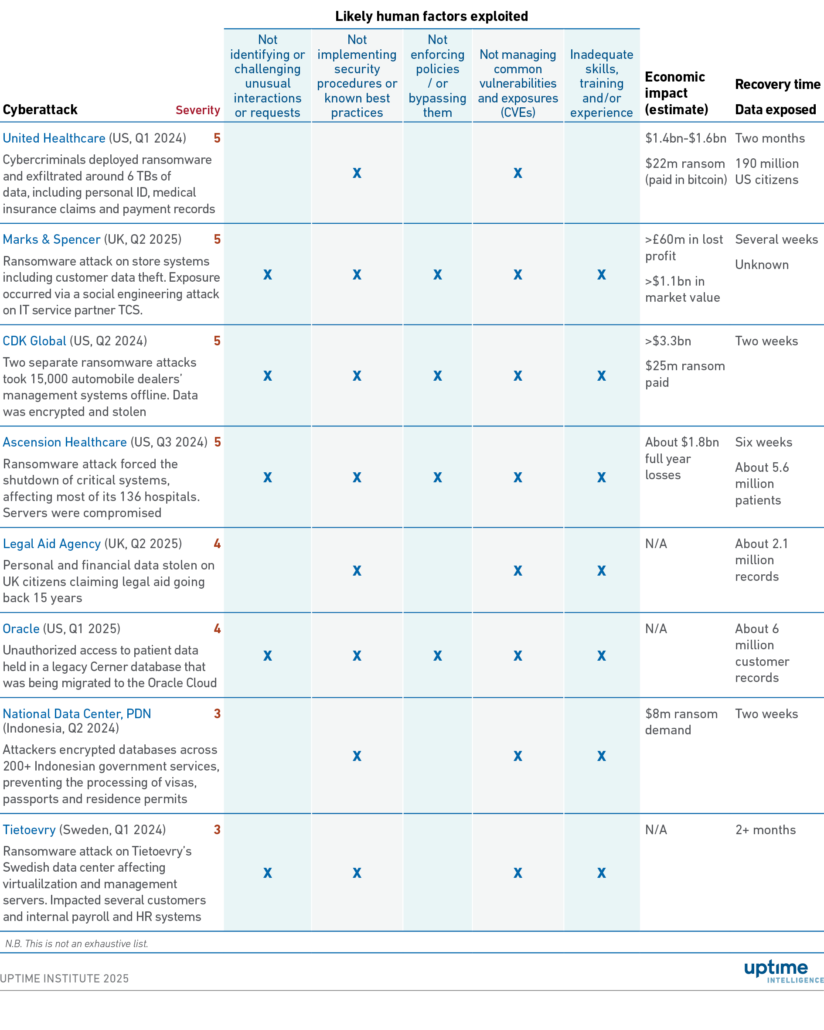

Table 1 Cyberattackers exploit human factors to induce human error

Failure to follow correct procedures

Although many companies have policies and procedures in place, employees can become complacent and fail to follow them. At times, they may unintentionally skip a step or carry it out incorrectly. For instance, workers might forget to install a software update or accidentally misconfigure a port or firewall, despite having technical training. Others may feel overwhelmed by the volume of updates and leave systems vulnerable as a result. In some cases, important details are simply overlooked, such as leaving a firewall port open or setting cloud storage to public access.
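Some of these oversights, such as an open firewall port, can be caught by a routine automated check rather than relying on memory alone. The sketch below is illustrative only: the `FirewallRule` record and the `APPROVED_PORTS` allowlist are hypothetical, and real firewalls export rules in vendor-specific formats. It flags any rule that exposes a port outside the allowlist to every address on the internet.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class FirewallRule:
    # Hypothetical, simplified rule record (not a real vendor format).
    port: int
    source: str  # CIDR block allowed to connect, e.g. "10.0.0.0/8"


# Assumption: the only port change management has approved for internet exposure.
APPROVED_PORTS = {443}


def find_risky_rules(rules: list[FirewallRule]) -> list[FirewallRule]:
    """Flag rules that open an unapproved port to any source address."""
    risky = []
    for rule in rules:
        network = ipaddress.ip_network(rule.source)
        # A prefix length of 0 (0.0.0.0/0 or ::/0) matches every address.
        open_to_everyone = network.prefixlen == 0
        if open_to_everyone and rule.port not in APPROVED_PORTS:
            risky.append(rule)
    return risky
```

Checking the prefix length, rather than whether an address range is "private," keeps the test unambiguous: only rules that admit literally any address are flagged.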

Weaknesses in procedures for password strength, password changes and inactive accounts create common vulnerabilities that hackers exploit. Inactive accounts that are not properly deactivated may miss critical security updates and are monitored less closely than active accounts, making it easier for security breaches to go unnoticed.
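A periodic audit can surface accounts that are still enabled long after their last use. The following is a minimal sketch, assuming account data has been exported from a directory service into the hypothetical `Account` record shown; the 90-day inactivity and 365-day password-age thresholds are illustrative assumptions, since each organization sets its own policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Account:
    # Hypothetical record exported from a directory service.
    username: str
    enabled: bool
    last_login: datetime
    password_changed: datetime


# Assumed policy thresholds; not taken from any specific standard.
MAX_INACTIVE = timedelta(days=90)
MAX_PASSWORD_AGE = timedelta(days=365)


def audit_accounts(accounts: list[Account], now: datetime) -> list[tuple[str, str]]:
    """Return (username, issue) pairs for enabled accounts that break the assumed policy."""
    findings = []
    for acct in accounts:
        if not acct.enabled:
            continue  # disabled accounts are skipped in this check
        if now - acct.last_login > MAX_INACTIVE:
            findings.append((acct.username, "inactive but still enabled"))
        if now - acct.password_changed > MAX_PASSWORD_AGE:
            findings.append((acct.username, "password older than policy allows"))
    return findings
```

The audit only reports findings; deciding whether to disable an account or force a reset remains a human step governed by procedure.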

Unknowingly engaging with social engineering

Social engineering is a tactic used to deceive individuals into revealing sensitive information or downloading malicious software. It typically involves the attacker impersonating someone from the target’s company or organization to build trust with them. The primary goal is to steal login credentials or gain unauthorized access to the system.

Attackers may call employees while posing as IT help desk staff and request their login details, often under the guise of “routine testing” to pressure the employee into disclosing their credentials.

Like phishing, spoofing is a tactic used to gain an employee’s trust by simulating familiar conditions, but it often relies on misleading visual cues. For example, social engineers may email a link to a fake version of the company’s login screen, prompting the unsuspecting employee to enter their login information as usual. In rare cases, attackers might even use AI to impersonate an employee’s supervisor during a video call.

Deviation from policies or best practices

Adherence to policies and best practices largely determines whether cybersecurity succeeds or fails. Procedures need to be written clearly and without ambiguity. For example, if a procedure does not explicitly require an employee to clear saved login data from their devices, hackers or rogue employees may be able to access the device using stored or default administrator credentials. Similarly, if regular password changes are not mandated, it may be easier for attackers to compromise system access credentials.

Policies must also account for the possibility of a disgruntled employee or third-party worker stealing or corrupting sensitive information for personal gain. To reduce this risk, companies can implement clear deprovisioning rules in their offboarding process, such as ensuring passwords are changed immediately upon an employee’s departure. While there is always a chance that a procedural step may be accidentally overlooked, comprehensive procedures increase the likelihood that each task is completed correctly.
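An offboarding procedure can be backed by a simple check that no credential known to a departed worker remains usable. The sketch below rests on stated assumptions: the `Credential` record, its `holders` field and the `departures` mapping are hypothetical, and it treats a credential as a gap if it is still enabled and was last rotated before the holder left.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Credential:
    # Hypothetical record: a login or shared secret and who had access to it.
    name: str
    enabled: bool
    last_rotated: datetime
    holders: set[str]  # usernames that own or know this credential


def deprovisioning_gaps(
    credentials: list[Credential], departures: dict[str, datetime]
) -> list[tuple[str, str]]:
    """Return (credential, former holder) pairs still usable after that holder left.

    `departures` maps each departed username to their departure time.
    """
    gaps = []
    for cred in credentials:
        for user in sorted(cred.holders):  # sorted for deterministic output
            left_at = departures.get(user)
            if left_at is None:
                continue  # this holder has not departed
            if cred.enabled and cred.last_rotated < left_at:
                gaps.append((cred.name, user))
    return gaps
```

Tracking shared credentials (for example, a building management system admin login) alongside personal accounts matters because disabling a departed employee's own account does not revoke secrets they already know.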

Procedures are especially critical when employees have to work quickly to contain a cybersecurity incident. They should be clearly written, thoroughly tested for reliability, and easily accessible to serve as a reference during a variety of emergencies.

Poor security governance and oversight

A lack of governance or oversight from management can lead to overlooked risks and vulnerabilities, such as missed security patches or failure to monitor systems for threats or alerts. Training helps employees to approach situations with healthy skepticism, encouraging them to perform checks and balances consistent with the company’s policies.

Training should evolve to ensure that workers are informed about the latest threats and vulnerabilities, as well as how to recognize them.

Notable incidents exploiting human error

The types of human error described above are further complicated by the psychology of how individuals behave in intense situations. For example, heightened stress, fatigue or coercion can all lead to errors of judgment when a quick decision or action is required.

Table 2 identifies how human error may have played a part in eight major public cybersecurity breaches between 2023 and 2025. This includes three of the 10 most significant data center outages — United Healthcare, CDK Global and Ascension Healthcare — highlighted in Uptime Institute’s outages report (see Annual outage analysis 2025). We note the following trends:

- At least five of the incidents involved social engineering. These attacks often exploited legitimate credentials or third-party vulnerabilities to gain access and execute malicious actions.

- All incidents likely involved failures by employees to follow policies or procedures, or to properly manage common vulnerabilities.

- Seven incidents exposed gaps in skills, training or experience to mitigate threats to the organization.

- In half of the incidents, policies may have been poorly enforced or bypassed for unknown reasons.

Table 2 Impact of major cyber incidents involving human error

Typically, organizations are reluctant to disclose detailed information about cyberattacks. However, regulators and government cybersecurity agencies increasingly expect greater transparency, particularly when attacks affect citizens and consumers, not least because attackers often leak stolen information on public forums and the dark web regardless.

The following findings are particularly concerning for data center operators and warrant serious attention:

- The financial cost of cyber incidents is significant. Among the eight identified cyberattacks, the estimated total losses exceed $8 billion.

- The full financial and reputational impact can take time to emerge. For example, UK retailer Marks & Spencer is facing lawsuits from customer groups over identity theft and fraud following a cyberattack. Regulators or government agencies may take similar action, particularly if breaches expose failures to comply with cybersecurity regulations such as the EU’s Network and Information Security Directive 2 (NIS2) and Digital Operational Resilience Act (DORA).

The Uptime Intelligence View

Human error is often viewed as a series of unrelated mistakes; however, the errors identified in this report stem from complex, interconnected systems and increasingly sophisticated attackers who exploit human psychology to manipulate events.

Understanding the role of human error in cybersecurity incidents is crucial to help employees recognize and prevent potential oversights. Training alone is unlikely to solve the problem. Data center operators should continuously adapt cyber practices and foster a culture that redefines how staff perceive and respond to the risk of cyber threats. This cultural shift is likely critical to staying ahead of evolving threat tactics.

John O’Brien, Senior Research Analyst, jobrien@uptimeinstitute.com

Rose Weinschenk, Analyst, rweinschenk@uptimeinstitute.com
