IT efficiency: an untapped power resource

Projections of data center capacity growth have exploded with the emergence of AI infrastructure and the continuing expansion and integration of standard IT functionality into the global economy. Electricity demand is growing in developed markets for the first time in more than a decade, led by proposed data center expansions and efforts to electrify the overall economy.

However, there is a looming gap between power demand and supply. The limits of the electricity generation development pipeline, combined with most grids’ inability to quickly interconnect distributed generation and energy storage assets, mean new supply is falling behind demand growth. While much has been written on prospective solutions to the power demand and supply mismatch, the potential for the data center industry to roll back its power demand projections while still meeting its business goals to grow AI and compute capacity requires more attention.

Data center energy growth

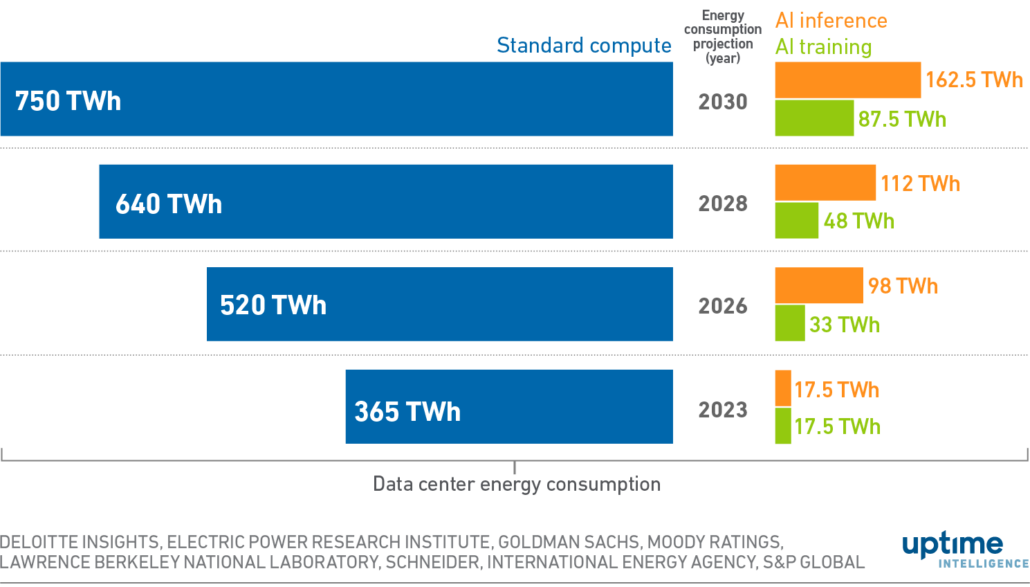

Industry analysts and energy organizations project data center energy consumption to grow by 10% to 15% annually through 2030. An evaluation of multiple data center energy consumption projections suggests that demand could grow from 400 TWh in 2023 to 1,000 TWh in 2030 (see Figure 1). These figures are highly uncertain, with 2030 projections ranging from 750 TWh to 1,100 TWh or more.
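As a quick consistency check, the compound annual growth rate implied by the figures above can be computed directly. This is simple arithmetic on the numbers in the text, not additional data; the function name `cagr` is illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures from the text: 400 TWh in 2023; 2030 estimates of 750, 1,000 and 1,100 TWh.
BASE_2023_TWH = 400
for label, twh_2030 in [("low", 750), ("central", 1000), ("high", 1100)]:
    rate = cagr(BASE_2023_TWH, twh_2030, 2030 - 2023)
    print(f"{label:>7}: {twh_2030:>5} TWh in 2030 -> {rate:.1%} per year")
```

The central 1,000 TWh estimate corresponds to about 14% per year, and the 750 TWh and 1,100 TWh bounds to roughly 9.4% and 15.5%, which broadly matches the 10% to 15% range cited.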

Uptime Intelligence has catalogued 208 data center projects of 100 megawatts (MW) or more announced between 2021 and 2024, representing 93 GW of electricity demand (570 TWh per year at 70% utilization). However, Uptime Intelligence estimates that only 25% of these projects are likely to be built. This is largely attributable to speculative announcements without existing customers (nicknamed phantom or ghost projects), but it also reflects constraints in power generation capacity, facility equipment, and permitting. The industry faces significant challenges in realizing even the lower end of the growth projections.
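The 570 TWh figure follows from converting capacity to annual energy: capacity times average utilization times the 8,760 hours in a year. The sketch below reproduces it and applies the 25% build-out estimate. Applying that 25% directly to energy is a simplifying assumption (it treats the projects likely to be built as representative in size); the helper name `annual_twh` is hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_twh(capacity_gw: float, utilization: float) -> float:
    """Annual energy in TWh for a load of `capacity_gw` at an average `utilization`."""
    # GW x hours = GWh; divide by 1,000 to convert GWh to TWh.
    return capacity_gw * utilization * HOURS_PER_YEAR / 1000


announced = annual_twh(93, 0.70)  # 208 announced projects totaling 93 GW
# Assumption: the ~25% of projects likely to be built carry ~25% of the energy.
likely_built = announced * 0.25
print(f"Announced: {announced:.0f} TWh/yr; likely built: {likely_built:.0f} TWh/yr")
```

Under that assumption, the projects likely to be built would account for roughly 140 TWh per year rather than 570 TWh.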

The sudden acceleration in projected energy consumption is attributed to demand from AI-centric data centers. Various analyses estimate that AI systems (both training and inference) will be responsible for 20% to 30% of 2030 consumption (see Figure 1), while Berkeley Lab’s 2024 United States Data Center Energy Usage Report estimates a much higher share for the US, with AI operations accounting for 60% to 75% of US data center energy consumption by 2030. The catch-all category of standard compute systems (servers typically populated with x86, ARM, and newly emerging compute options) would be responsible for the remaining 70% to 80% of global consumption. AI and standard computing systems contribute roughly equally to the projected growth.

Figure 1 Estimates of global data center energy consumption 2023 to 2030

Discussions of this rapid growth focus on new electricity generation capacity (either bulk generation on the grid or new generation assets co-located with data center facilities) and on high-voltage transmission and distribution systems. They often overlook opportunities to reduce electricity demand by improving the efficiency of IT infrastructure and software. Uptime Intelligence believes that reducing demand can close a significant portion of the projected supply shortfall.

Opportunities for IT energy efficiency

The data center industry portrays the IT infrastructure as a black box. There is minimal discussion of the average and maximum utilization of the IT hardware’s work capacity, of the number of virtual machines or containers supported by a processor or group(s) of servers, or of the application software’s efficiency. The performance and efficiency of these elements are treated as immutable givens. The industry has also generally resisted reporting or evaluating efficiency metrics, such as IT infrastructure utilization and work delivered per MWh of energy consumed, citing a lack of representative metrics and the confidentiality of the data.

There are numerous underexploited opportunities for data center operators to improve the efficiency of the IT infrastructure (see Why bigger is not better: gen AI models are shrinking and Server energy efficiency: five key insights). Each of the three IT infrastructure categories — AI training, AI inference, and standard computing — can improve hardware and software efficiency to reduce energy and space requirements as well as associated expenditures. Ultimately, such improvement would help drive better business and sustainability performance.

AI training

AI training systems’ energy and facility infrastructure demands are receiving the most attention because of their high computing and energy density, highly variable energy use profile, and more demanding cooling requirements. Projections indicate that these training systems will represent 5% or more of global data center energy use in 2030.

Current large language models are designed with model performance, not compute efficiency, in mind. Development efforts are focused on expanding to larger, more capable models — time to market and first to release the next-generation model are the critical business metrics. Discussions with AI experts and a review of the current literature suggest multiple opportunities for operators to reduce the projected energy demand.

Enterprises are evaluating and installing smaller AI training systems, ranging from a single rack to tens of racks of GPU-based servers. These AI models are purpose-built to address specific business problems. Smaller models can be developed by pruning less useful parameters, reducing the complexity of algorithms while maintaining 95% to 98% precision, and managing the size, quality, and scope of the data set to match the model’s purpose. These purpose-built models will run on smaller AI systems with lower power and cooling demands.
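The text does not specify a pruning technique. Magnitude pruning, which zeroes the weights with the smallest absolute values, is one common approach and is sketched below on a single hypothetical layer as a plain Python list. In practice, pruning is usually followed by fine-tuning and re-validation of model quality, and energy savings materialize only if the software and hardware can exploit the resulting sparsity or the pruned structures are removed outright.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices of the weights ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Hypothetical layer weights, for illustration only.
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.07]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)
```

At 50% sparsity, the four weights closest to zero are removed while the larger weights, which typically contribute most to the model’s output, are kept unchanged.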

There are also opportunities to increase the energy efficiency of the AI hardware. Companies are exploring purpose-built processors, such as application-specific integrated circuits (ASICs) and other unique designs, to increase the amount of work completed per MWh of energy consumed.

In efficiency terms, AI computing is in its infancy, much as standard computing was in 2007. As hardware manufacturers and software designers expand their design criteria to include efficiency alongside performance, AI training systems should see substantial improvements in work delivered per MWh, with attendant reductions in projected power and data center infrastructure demand.

AI inference

Like AI training, AI inference hardware and software systems are in the nascent stages of their development. If AI systems account for 20% to 30% of 2030 global data center energy consumption, AI inference systems are expected to represent 50% to 65% of that AI consumption, equivalent to roughly 10% to 20% of total global consumption.
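The inference share of total consumption is the product of the two ranges given in the text. The sketch pairs the low ends and the high ends together, which gives the widest plausible span, and converts the result to energy using the central 1,000 TWh estimate for 2030.

```python
# Ranges from the text, expressed as fractions.
AI_SHARE_OF_TOTAL = (0.20, 0.30)      # AI share of 2030 global data center energy
INFERENCE_SHARE_OF_AI = (0.50, 0.65)  # inference share of that AI energy
CENTRAL_2030_TWH = 1000               # central 2030 estimate cited in the text

low = AI_SHARE_OF_TOTAL[0] * INFERENCE_SHARE_OF_AI[0]
high = AI_SHARE_OF_TOTAL[1] * INFERENCE_SHARE_OF_AI[1]
print(f"Inference share of total: {low:.1%} to {high:.1%}")
print(f"At {CENTRAL_2030_TWH} TWh: {low * CENTRAL_2030_TWH:.0f} to {high * CENTRAL_2030_TWH:.0f} TWh")
```

The result, 10% to 19.5% of the total (about 100 to 195 TWh at the central estimate), is the basis for the rounded 10% to 20% figure.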

There are competing visions for inference systems. In the coming years, inference infrastructure will likely be a mix, dominated by HPC-style GPU servers and standard CPU hardware. These systems are also ripe to capture energy performance improvements using inference ASICs and other purpose-built processors (such as Groq boards and tensor processing units), combined with more optimized software.

The energy efficiency of inference systems will also depend on how hardware capacity is utilized and how CPU and GPU power management functions are deployed to minimize energy consumption during idle or low-utilization periods. A range of well-established software management tools and power management templates are available for managing energy efficiency and improving the energy performance of HPC and standard computing systems (see Server energy efficiency: five key insights). The degree to which IT operators deploy and apply these tools will determine how much of the currently projected energy demand growth can be mitigated.
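Why utilization and idle power matter so much can be illustrated with a common first-order approximation, assumed here rather than taken from the text: server power rises linearly from an idle floor to a maximum at full load. Because the idle floor is drawn regardless of load, power per unit of delivered work falls sharply as utilization rises, and lowering idle power (one goal of power management) helps most at low utilization. The wattages are hypothetical, and real power management also reduces power at partial load, which this simple model does not capture.

```python
def server_power_w(utilization: float, idle_w: float, max_w: float) -> float:
    """Linear approximation: power rises from idle_w at 0% load to max_w at 100% load."""
    return idle_w + (max_w - idle_w) * utilization


def watts_per_unit_work(utilization: float, idle_w: float, max_w: float) -> float:
    """Average power divided by delivered work (work = 1.0 at 100% utilization)."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return server_power_w(utilization, idle_w, max_w) / utilization


# Hypothetical server profiles (illustrative numbers, not measured data):
# the same 500 W peak, with and without aggressive idle power management.
PROFILES = {"default": (200.0, 500.0), "power-managed": (120.0, 500.0)}

for name, (idle_w, max_w) in PROFILES.items():
    for u in (0.1, 0.3, 0.6, 0.9):
        w = watts_per_unit_work(u, idle_w, max_w)
        print(f"{name:>13} @ {u:.0%} utilization: {w:.0f} W per unit of work")
```

In this model, the default profile needs more than four times as much power per unit of work at 10% utilization as at 90%.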

Standard computing

Despite indications to the contrary, standard data center computing (the application workloads run by corporations, governments, and individuals) will continue to be the major driver of data center energy demand. By 2030, it is expected to account for 70% to 80% of data center demand and up to 50% of the energy growth from 2023 to 2030 (see Figure 1). Though their functions may differ, these systems will often be largely indistinguishable from AI inference systems, since both frequently run on industry-standard compute servers. As with AI inference systems, IT operators can optimize energy performance and efficiency by placing workloads to maximize utilization of the available work capacity and by deploying power management functions.
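Placing workloads to maximize utilization is essentially a bin-packing problem. The text does not name a method; first-fit decreasing, a simple and well-known heuristic, is shown here only as an illustration. Workload demands are hypothetical and expressed as integer percentages of one server’s capacity, with an assumed 80% ceiling to leave headroom. Servers left empty by the packing could be powered down or placed in low-power states.

```python
def first_fit_decreasing(demands: list[int], capacity: int) -> list[list[int]]:
    """Assign workloads to servers using the first-fit decreasing heuristic.

    Each server hosts workloads whose demands sum to at most `capacity`.
    """
    servers: list[list[int]] = []
    loads: list[int] = []
    for d in sorted(demands, reverse=True):
        if d > capacity:
            raise ValueError(f"workload {d} exceeds server capacity {capacity}")
        for i, load in enumerate(loads):
            if load + d <= capacity:  # place on the first server with room
                servers[i].append(d)
                loads[i] += d
                break
        else:  # no existing server has room: open a new one
            servers.append([d])
            loads.append(d)
    return servers


# Hypothetical VM demands, in percent of one server's capacity.
vms = [50, 20, 70, 10, 40, 30, 20, 60]
placement = first_fit_decreasing(vms, capacity=80)  # assumed 80% ceiling
print(f"{len(vms)} workloads on {len(placement)} servers")
for i, host in enumerate(placement):
    print(f"server {i}: {host} (load {sum(host)}%)")
```

Here, eight workloads that might otherwise occupy eight lightly loaded servers fit on four, three of them at the 80% ceiling.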

Refresh and consolidate

Energy demand growth can be further mitigated by applying a “refresh and consolidate” mindset when updating server, storage, and network infrastructure. Each new generation of product technology offers improved work-per-watt characteristics, but these gains are typically realized only when the new equipment runs at equivalent or higher utilization than the equipment it replaces. IT operators should be able to consolidate their infrastructure by removing at least two pieces of existing equipment for each new unit installed. When replacing older equipment (especially equipment six years old or older), it may be possible to raise the consolidation ratio to five-to-one or beyond.
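The consolidation ratio comes from two multiplying factors: the greater work capacity of each new server and the higher utilization at which it is run. The sketch below uses a hypothetical fleet (100 old servers at 25% average utilization, replaced by servers with 2.5 times the work capacity run at 50%) to show how a five-to-one ratio can arise. All figures and the helper name `servers_needed` are assumptions for illustration.

```python
import math


def servers_needed(total_work: float, work_per_server: float, target_utilization: float) -> int:
    """Servers required to deliver `total_work` at a given average utilization."""
    return math.ceil(total_work / (work_per_server * target_utilization))


# Hypothetical existing fleet: 100 servers, 1.0 unit of work capacity each, 25% utilized.
old_count, old_capacity, old_util = 100, 1.0, 0.25
work = old_count * old_capacity * old_util  # work actually delivered today

# Hypothetical replacement: 2.5x the work capacity per server, run at 50% utilization.
new_count = servers_needed(work, work_per_server=2.5, target_utilization=0.50)
print(f"{old_count} old servers -> {new_count} new servers "
      f"({old_count / new_count:.0f}:1 consolidation)")
```

Neither factor alone gets there: the capacity gain by itself yields 2.5:1, and the utilization gain by itself yields 2:1.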

Industry experience suggests that the work delivered per MWh by IT equipment should double to quadruple every four to five years. These improvements will come from new generations of CPU, storage, memory, and network technology; specialty designs for high-volume functions and workloads (e.g., ASICs for AI inference); and more efficient middleware (e.g., Java) and software applications.
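Restating the “double to quadruple every four to five years” rule of thumb as annual rates makes it easier to compare with the annual demand growth figures cited earlier. This is arithmetic on the stated bounds only; the helper name `annual_rate` is illustrative.

```python
def annual_rate(multiple: float, years: float) -> float:
    """Annual improvement rate implied by a total `multiple` reached over `years`."""
    return multiple ** (1 / years) - 1


# Bounds from the text: work per MWh doubles to quadruples every four to five years.
for multiple in (2, 4):
    for years in (4, 5):
        print(f"{multiple}x in {years} years -> {annual_rate(multiple, years):.1%} per year")
```

The bounds correspond to roughly 15% per year (doubling over five years) up to about 41% per year (quadrupling over four years). How much of this shows up in fleet-wide energy use depends on how quickly older equipment is actually refreshed.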

Observations

IT operators need to address the data center growth challenge with a two-pronged approach: collaborating with energy retailers, developers, and utilities to increase the electricity supply, while working internally and with IT equipment providers to improve the energy efficiency and performance of all aspects of the IT infrastructure, thereby reducing the rate and size of the projected demand growth.

IT system managers, coders, and executives should consider prioritizing IT infrastructure energy performance alongside system performance and reliability. Increased efficiency will enable the industry to reduce its projected energy demand and better align its growth timeline with the rate at which the energy system can realistically expand.

The Uptime Intelligence View

The data center industry has accelerated its projections for IT infrastructure capacity and demand growth as AI systems are deployed. A closer look at the energy growth projections indicates that this growth could be split roughly evenly between AI and standard computing demands. While projected growth will require an increase in electricity supply, the industry can mitigate its impact and reduce the strain on the electricity generation and transmission system by prioritizing improvements in the energy performance and efficiency of the IT infrastructure and software systems.
