Open19 Is About Improving Hardware Choices, Standardizing Deployment

In July 2016, Yuval Bachar, principal engineer, Global Infrastructure Architecture and Strategy at LinkedIn, announced Open19, a project spearheaded by the social networking service to develop a new specification for server hardware based on a common form factor. The project aims to standardize the physical characteristics of IT equipment, with the goal of cutting costs and installation headaches without restricting choice or innovation in the IT equipment itself.

Uptime Institute’s Matt Stansberry discussed the new project with Mr. Bachar following the announcement. The following is an excerpt of that conversation:

Please tell us why LinkedIn launched the Open19 Project.

We started Open19 with the goal that any IT equipment we deploy would be able to be installed in any location, such as a colocation facility, one of our owned sites, or sitting in a POP, in a standardized 19-inch rack environment. Standardizing on this form factor significantly reduces the cost of integration.

We aren’t the kind of organization that has one type of workload or one type of server. Data centers are dynamic, with apps evolving on a weekly basis. New demands for apps and solutions require different technologies. Open19 provides the opportunity to mix and match server hardware very easily without the need to change any of the mechanical or installation aspects.

Different technologies evolve at different paces. We’re always trying a variety of different servers, from low-power multicore machines to very high-end, high-performance hardware. In an Open19 configuration, you can mix and match this equipment in any configuration you want.

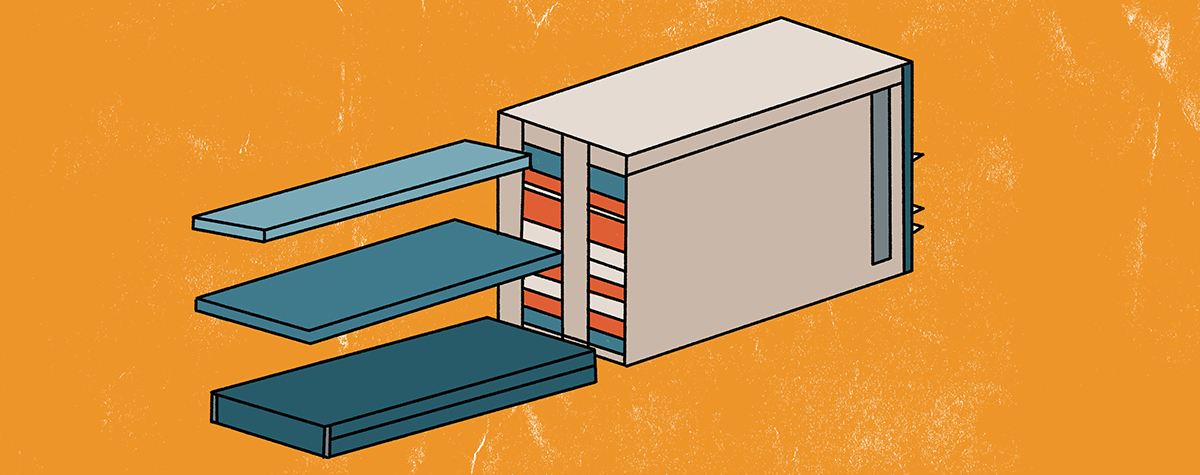

In the past, if you had a chassis full of blade servers, they were super-expensive, heavy, and difficult to handle. With Open19, you would build a standardized chassis into your cage and fit servers from various suppliers into the same slot. This provides a huge advantage from a procurement perspective. If I want to replace one blade today, the only opportunity I have is to buy from a single server supplier. With Open19, I can buy a blade from anybody that complies. I can have five or six proposals for a procurement instead of just one.

Also, by being able to blend high-performance, high-power equipment with low-power equipment in two adjacent slots in the brick cage in the same rack, you can create a balanced environment in the data center from a cooling and power perspective. It helps avoid hot spots.

I recall you mentioned that you may consider folding Open19 into the Facebook-led Open Compute Project (OCP), but right now that’s not going to happen. Why launch Open19 as a standalone project?

The reason we don’t want to fold Open19 under OCP at this time is that there are strong restrictions around how innovation and contributions are routed back into the OCP community.

IT equipment partners aren’t willing to contribute IP and innovation. The OCP solution wasn’t enabling the industry—when organizations create things that are special, OCP requires you to expose everything outside of your company. That’s why some of our large server partners couldn’t join OCP. Open19 defines the form factor, and each server provider can compete in the market and innovate in their own dimension.

What are your next steps and what is the timeline?

We will have an Open19-based system up and running in the middle of September 2016 in our labs. We are targeting late Q1 of 2017 to have a variety of servers installed from three to four suppliers. We are considering Open19 as our primary deployment model, and if all of the aspects are completed and tested, we could see Open19 in production environments after Q1 2017.

The challenge is that this effort has multiple layers. We are working with partners to do the engineering development. And that is achievable. A secondary challenge is the legal side. How do you create an environment that the providers are willing to join? How do we do this right this time?

But most importantly, for us to be successful we have to have significant adoption from suppliers and operators.

It seems like something that would be valuable for the whole industry—not just the hyperscale organizations.

Enterprise groups will see an opportunity to participate without having to be the 100,000-server data center companies. So many enterprise IT groups have expressed reluctance about OCP because the white box servers come with limited support and warranty levels.

It will also lower their costs, increase the speed of installations and changes, and improve their negotiating position in procurement.

If we come together, we will generate enough demand to make Open19 interesting to all of the suppliers and operators. You will get the option to choose.

Yuval Bachar is a principal engineer in the global infrastructure and strategy team for LinkedIn, which is responsible for the company’s strategy for data center architecture and implementation of its future mega-scale data centers. In this capacity, he drives and supports new technology development, architecture, and collaboration to support the tremendous growth in LinkedIn’s future user base, data centers, and services provided. Prior to LinkedIn, Mr. Bachar held IT leadership positions at Facebook, Cisco and Juniper Networks.
