Resiliency v low PUE: regulators a catalyst for innovation?

Research has shown that while data center owners and operators are mostly in favor of sustainability regulation, they have a low opinion of the regulators’ expertise. Some operators have cited Germany’s Energy Efficiency Act as evidence: the law lays down a set of extremely challenging power usage effectiveness (PUE) requirements that will likely force some data centers to close and prevent the building of others.

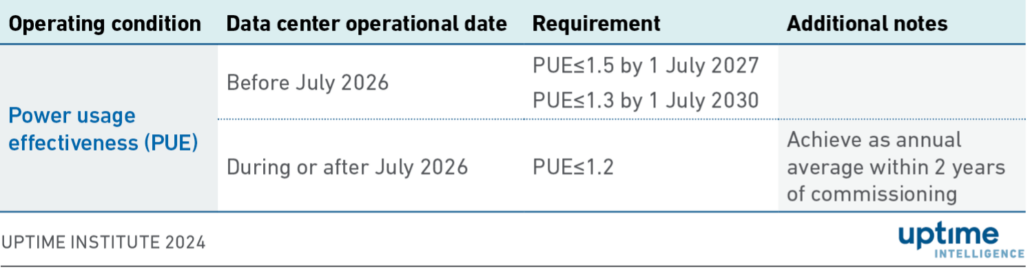

The headline requirement of the act (see Table 1) is that new data centers (i.e., those that become operational in 2026 and beyond) must have an annual operational PUE of 1.2 or below within two years of commissioning. While meeting this tough energy efficiency stipulation is possible, very few data centers today — even new and well-managed ones — are achieving this figure. Uptime Intelligence data shows an average PUE of 1.45 for data centers built in the past three years.
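To put the gap between 1.45 and 1.2 in perspective, the sketch below uses the standard definition of PUE (total facility energy divided by IT equipment energy) to work out the annual non-IT overhead at each level. The 1 MW constant IT load and the function names are illustrative assumptions, not figures from the act or from Uptime Intelligence data.

```python
HOURS_PER_YEAR = 8760


def annual_pue(total_facility_mwh: float, it_mwh: float) -> float:
    """PUE = total facility energy / IT equipment energy, over a year."""
    return total_facility_mwh / it_mwh


def overhead_mwh(pue: float, it_load_mw: float, hours: int = HOURS_PER_YEAR) -> float:
    """Annual non-IT energy (cooling, power losses, etc.) implied by a PUE."""
    return (pue - 1.0) * it_load_mw * hours


# Assumption: a hypothetical facility with a constant 1 MW IT load.
it_load_mw = 1.0

for label, pue in (
    ("average for recent builds", 1.45),
    ("2030 limit for existing sites", 1.3),
    ("limit for new builds", 1.2),
):
    print(f"PUE {pue:.2f} ({label}): {overhead_mwh(pue, it_load_mw):,.0f} MWh overhead per year")

# Share of today's average overhead that must be removed to reach 1.2.
required_cut = 1.0 - (1.2 - 1.0) / (1.45 - 1.0)
print(f"Overhead reduction needed to go from 1.45 to 1.2: {required_cut:.0%}")
```

Under these assumptions, moving from 1.45 to 1.2 means cutting overhead energy from roughly 3,942 MWh to 1,752 MWh a year, a reduction of more than half, even though the headline PUE figure falls by only 0.25.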

This stretch goal of 1.2 is particularly challenging for those trying to grapple with competing business or technical requirements. For example, some are still trying to populate new data centers, meaning they are operating at partial load; many have overriding requirements to meet Tier III concurrent maintainability and Tier IV fault-tolerant objectives; and increasingly, many are aiming to support high-density racks or servers with strict cooling requirements. For these operators, achieving this PUE level routinely will require effort and innovation that is at the limits of modern data center engineering — and it may require considerable investment.

The rules for existing data centers are also tough. By 2030, all data centers will have to operate below a PUE of 1.3. This requirement will ultimately lead to data center closures, refurbishments and migration to newer colocations and the cloud — although it is far too early to say which of these strategies will dominate.

Table 1. PUE requirements under Germany’s Energy Efficiency Act

To the limits

The goal of Germany’s regulators is to push data center designers, owners and operators to the limits. In doing so, they hope to encourage innovation and best practices in the industry that will turn Germany into a model of efficient data center operations and thus encourage similar practices across Europe and beyond.

Whether the regulators are pushing too hard on PUE, or whether the act will trigger unintended consequences (such as a movement of workloads outside of Germany), will likely only emerge over time. But the policy raises several questions and challenges for the entire industry. In particular, how can rigorous efficiency goals be achieved while maintaining high availability? And how will high-density workloads affect the achievement of efficiency goals?

We consulted several Uptime Institute experts* to review and respond to these questions. They take a mostly positive view that while the PUE requirements are tough, they are achievable for new (or recently constructed) mission-critical data centers — but there will be cost and design engineering consequences. Some of their observations are:

- Achieving fault tolerance / concurrent availability. Contrary to some reports, higher availability (Tier III and Tier IV) data centers can achieve very low PUEs. A Tier IV data center (fully fault tolerant) is not inherently less efficient than a Tier II or Tier III (concurrently maintainable) data center, especially given recent advances in equipment technology (such as digital scroll compressors, regular use of variable frequency drives, and more sophisticated control and automation capabilities). These are some of the technologies that can help ensure that the use of redundant extra capacity or resilient components does not require significantly more energy.

It is often thought that Tier IV uses a lot more energy than a Tier III data center. However, this is not necessarily the case — the difference can be negligible. The only definitive increase in power consumption that a Tier IV data center may require is an increased load on uninterruptible power supply (UPS) systems and a few extra components. However, this increase can be as little as a 3% to 5% loss on 10% to 20% of the total mechanical power load for most data centers.
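The arithmetic behind that last figure can be made explicit. The sketch below assumes the statement means a 3% to 5% loss rate applied to the 10% to 20% share of the mechanical power load that is affected; that reading, and the function name, are assumptions for illustration.

```python
def extra_loss_fraction(loss_rate: float, affected_share: float) -> float:
    """Extra loss as a fraction of the total mechanical power load.

    Assumption: the loss rate applies only to the affected share of the load.
    """
    return loss_rate * affected_share


low = extra_loss_fraction(0.03, 0.10)   # best case: 3% loss on 10% of the load
high = extra_loss_fraction(0.05, 0.20)  # worst case: 5% loss on 20% of the load

print(f"Extra loss: {low:.1%} to {high:.1%} of the total mechanical power load")
```

On this reading, the additional loss is between 0.3% and 1.0% of the total mechanical power load, which supports the point that the difference can be negligible.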

The idea that Tier IV data centers are less efficient usually comes from the requirement to have two systems in operation. But this does not necessarily mean that each of the two systems in operation can support the full current workload at a moment’s notice. The goal is to ensure that there is no interruption of service.

On the electrical side, concurrent availability and fault tolerance may be achieved using a “swing UPS” and distributed N+1 UPS topologies. In this well-established architecture, the batteries provide immediate energy storage and are connected to an online UPS. There is not necessarily a need for two fully powered (2N) UPS systems; rather, the distribution is arranged so that a single failure only impacts a percentage of the IT workload. Any single redundant UPS only needs to be able to support that percentage of IT workload. The total UPS installed capacity, therefore, is far less than twice the entire workload, meaning that power use and losses are lower.
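The capacity argument can be illustrated with a simplified sizing comparison. The sketch below assumes the IT load is split evenly across N UPS modules with one additional module of the same size held as the redundant unit; it ignores design margins, battery sizing and real distribution constraints. The 1,200 kW load and the function names are hypothetical.

```python
def installed_capacity_2n(it_load_kw: float) -> float:
    """Two complete UPS systems, each able to carry the full IT load."""
    return 2.0 * it_load_kw


def installed_capacity_n_plus_1(it_load_kw: float, n: int) -> float:
    """N modules share the load evenly, plus one redundant module of equal size."""
    if n < 1:
        raise ValueError("n must be at least 1")
    module_kw = it_load_kw / n  # a single failure affects only this share of the load
    return (n + 1) * module_kw


# Assumption: a hypothetical 1,200 kW IT load.
it_load_kw = 1200.0
print(f"2N: {installed_capacity_2n(it_load_kw):,.0f} kW installed")

for n in (2, 3, 4, 6):
    capacity = installed_capacity_n_plus_1(it_load_kw, n)
    utilization = it_load_kw / capacity  # average loading of the installed UPS capacity
    print(f"N+1 with N={n}: {capacity:,.0f} kW installed, {utilization:.0%} average utilization")
```

With N=3, for example, the installed capacity is 1,600 kW rather than the 2,400 kW a 2N design would need, and the installed modules run at a higher average load. UPS systems generally run more efficiently at higher load levels, which is one reason losses can be lower in this arrangement.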

Similarly, power use and losses can be reduced in the distribution systems by designing with as few transformers, distribution boards and transfer switches as possible. Fault tolerance can be harder to achieve in this way, with a larger number of smaller components increasing complexity, but this has the benefit of higher electrical efficiency.

On the mechanical side, two cooling systems can be operational, with each supporting a part of the current workload. When a system fails, thermal storage is used to buy time to power up additional cooling units. Again, this is a well-established approach.

- Cooling. Low PUEs assume that highly efficient cooling is in place, which in turn requires the use of ambient cooling. Regulation will drive the industry to greater use of economization, whether through air-side economizers, water-side economizers, or pumped refrigerant economizers.

- While very low PUEs do not rule out the use of standard direct expansion (DX) units, their energy use may be problematic. The use of DX units on hot days may be required to such an extent that very low PUEs are not achievable.

- More water, less power? The PUE limitation of 1.2 may encourage the use of cooling technologies that evaporate more water. In many places, air-cooled chillers may struggle to cool the workload on hot days, which will require mechanical assistance. This may happen too frequently to achieve low annualized PUEs. A water-cooled plant or the use of evaporative cooling will likely use less power — but, of course, require much more water.

- Increasing density will challenge cooling — even direct liquid cooling (DLC). Current and forecasted high-end processors, including graphics processing units, require server inlet temperatures at the lower ends of the ASHRAE range. This will make it very difficult to achieve low PUEs.

DLC can only provide a limited solution. While it is highly effective in efficiently cooling higher density systems at the processor level, the lower temperatures required necessitate chilled or refrigerated air or water, which, of course, increases the power consumption and pushes up the PUEs.

- Regulation may drive location. Low PUEs are much easier and more economically achieved in cooler or less humid climates. Regulators that mandate low PUEs will have to take this into account. Germany, for example, has mostly cool or cold winters and warm or hot summers, but with low or manageable humidity; therefore, it may be well suited to economization. However, it will be easier and less expensive to achieve these low PUEs in Northern Germany, or even in Scandinavia, than in Southern Germany.

- Build outs. Germany’s Energy Efficiency Act requires that operators reach a low PUE within two years of the data center’s commissioning. This, in theory, gives the operator time to fill up the data center and reach an optimum level of efficiency. However, most data centers actually fill out and/or build out over a much longer timescale (four years is more typical).

This may have wider design implications. Achieving a PUE of 1.2 at full workload requires that the equipment is selected and powered to suit the workload. But at a partial workload, many of these systems will be over-sized or less efficient. To achieve optimal PUE at all workloads, it may be necessary to deploy more, smaller capacity components and take a more modular approach, possibly using repeatable, prefab subsystems. This will have cost implications; to achieve concurrent maintainability for all loads, designers may have to use innovative N+1 designs with greater use of smaller components.

- Capital costs may rise. Research suggests that low PUE data centers can also have low operational costs, notably because of reduced energy use. However, the topologies and the number of components, especially for higher availability facilities, may be more expensive. Regulators mandating lower PUEs may be forcing up capital costs, although these can be recouped later.
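The build-out point above, that a plant sized for full load is less efficient at partial load, can be illustrated with a toy model. The sketch below assumes overhead has two parts: a fixed part proportional to the plant capacity that is running, and a variable part proportional to the IT load. The coefficients (0.08 and 0.10), the 1,000 kW design load and the 250 kW module size are invented for illustration and are not measured values; redundancy is ignored.

```python
import math


def pue_at_load(it_kw: float, running_capacity_kw: float,
                fixed_per_kw: float = 0.08, variable_per_kw: float = 0.10) -> float:
    """Toy PUE model: fixed overhead scales with running plant capacity,
    variable overhead scales with the actual IT load. Coefficients are
    illustrative assumptions only."""
    overhead_kw = fixed_per_kw * running_capacity_kw + variable_per_kw * it_kw
    return (it_kw + overhead_kw) / it_kw


def modular_running_capacity(it_kw: float, module_kw: float) -> float:
    """Capacity in operation when modules are switched on only as needed."""
    return math.ceil(it_kw / module_kw) * module_kw


DESIGN_KW = 1000.0  # hypothetical full design IT load
MODULE_KW = 250.0   # hypothetical plant module size

for share in (0.25, 0.5, 0.75, 1.0):
    it_kw = share * DESIGN_KW
    monolithic = pue_at_load(it_kw, DESIGN_KW)
    modular = pue_at_load(it_kw, modular_running_capacity(it_kw, MODULE_KW))
    print(f"{share:.0%} load: monolithic PUE {monolithic:.2f}, modular PUE {modular:.2f}")
```

In this toy model both designs reach the same PUE at full load, but at 25% load the monolithic plant carries the fixed overhead of its full capacity while the modular plant does not. Real facilities will behave differently; the sketch only shows why smaller, staged components can help keep PUE low during the fill-out period.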

The Uptime Intelligence View

The data center industry is not so new. Many facilities — perhaps most — achieve very high availability as a result of proven technology and assiduous attention by management and operators. However, the great majority do not use state-of-the-art engineering to achieve high energy efficiency. Regulators want to push them in this direction. Uptime Intelligence’s assessment is that — by adopting well-thought-out designs and building in a modular, balanced way — it is possible to have and operate highly available, mission-critical data centers with very high energy efficiency at all workloads. However, there will likely be a considerable cost premium.

*The Uptime Institute experts consulted for this Update are:

Chris Brown, Chief Technical Officer, Uptime Institute

Ryan Orr, Vice President, Topology Services and Global Tier Authority, Uptime Institute

Jay Dietrich, Research Director Sustainability, Uptime Institute

Dr. Tomas Rahkonen, Research Director, Distributed Data Centers, Uptime Institute
