Data Center Cost Myths: SCALE

What happens when economies of scale prove to be a false promise?

By Chris Crosby

Chris Crosby, a recognized visionary and leader in the data center space, is the founder and CEO of Compass Datacenters. Mr. Crosby has more than 20 years of technology experience and 10 years of real estate and investment experience. Previously, he was a founding member of Digital Realty Trust, where he served as Senior Vice President of Corporate Development, responsible for growth initiatives including establishing the company’s presence in Asia. Mr. Crosby received a B.S. degree in Computer Sciences from the University of Texas at Austin.

For many of us, Economics 101 was not a highlight of our academic experience. However, most of us picked up enough jargon to have an air of competence when conversing with our business compadres. Does “supply and demand” ring a bell?

Another favorite term that we don’t hesitate to use is “economies of scale.” It sounds professorial and is easy for everyone, even those of us who slept our way through the course, to understand. Technically the term means: The cost advantages that enterprises obtain due to size, throughput, or scale of operation, with cost per unit of output generally decreasing with increasing scale as fixed costs are spread out over more units of output.

The metrics used in our world are usually expressed as cost/kilowatt (kW) of IT capacity and cost/square foot (ft²) of real estate. Some folks note all costs as cost/kW. Others simply talk about the data center fit out in cost/kW and leave the land and building (cost/ft²) out of the equation entirely. In both cases, however, economy of scale is the assumed catalyst that drives cost/ft² and/or cost/kW ever lower. Hence the birth of data centers so large that they have their own atmospheric fields.

This model is used by providers of multi-tenant data centers (MTDC), vendors of pre-fabricated modular units, and many enterprises building their own facilities. Although the belief that building at scale is the most cost-efficient data center development method appears logical on the surface, it does, in fact, rely on a fundamental requirement: boatloads of cash to burn.

It’s First Cost, Not Just TCO

In data center economics, no concept has garnered more attention, and less understanding, than Total Cost of Ownership (TCO). Entering the term “data center total cost of ownership” into Google returns more than 11.5 million results, so obviously people have given this a lot of thought. Fortunately for folks who write white papers, nobody has broken the code. To a large degree, the problem is the nature of the components that comprise the TCO calculus. Because of the longitudinal elements that are part of the equation, energy costs over time for example, the perceived benefits of design decisions sometimes hide the fact that they are not worth the cost of the initial investment (first cost) required to produce them. We commonly find this to be the case in the quest of many operators and providers to achieve lower PUE. While a lower PUE is certainly admirable, incomplete economic analysis can mask the impact of a poor investment. In other words, this is like my wife bragging about the money she saved by buying the new dining room set because it was on sale even though we really liked the one we already had.

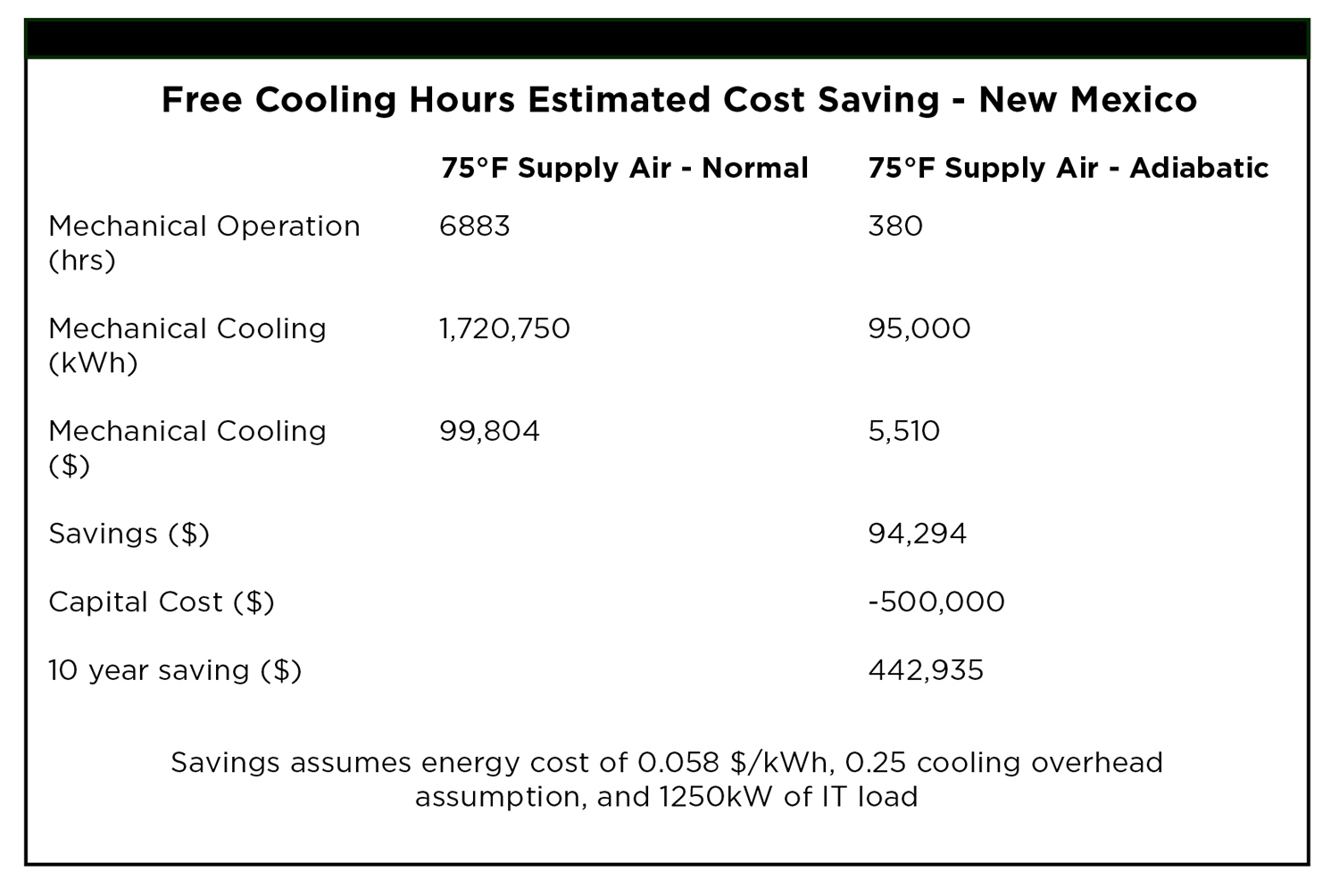

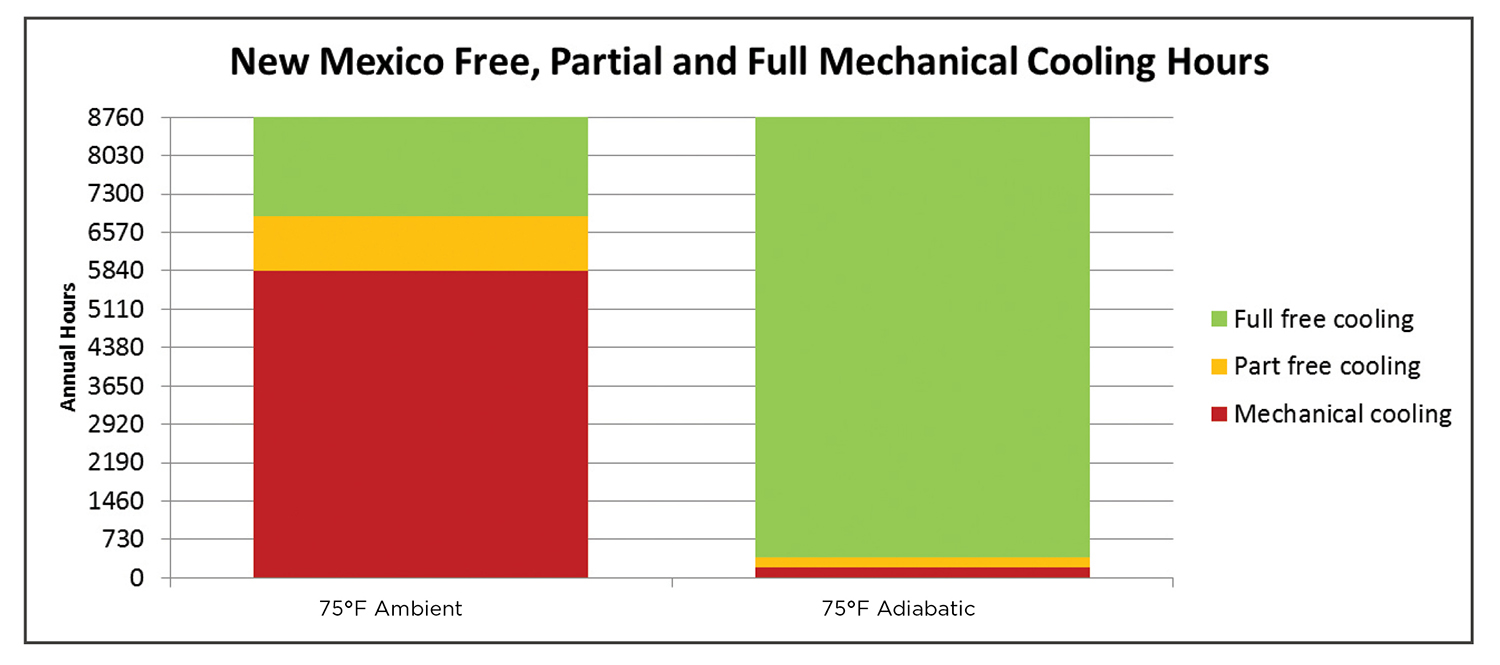

In a paper posted on the Compass website, Trading TCO for PUE?, Romonet, a leading provider of data center analytical software, illustrated the effect of failing to properly examine the impact of first cost on a long-term investment. Due to New Mexico’s favorable atmospheric conditions, Compass chose it as the location to examine the value of using an adiabatic cooling system in addition to airside economization as the cooling method for a hypothetical location. This is a fairly common industry approach to free cooling. New Mexico’s climate is hot and dry and offers substantial free cooling benefits in the summer and winter as demonstrated by Figure 1.

Figure 1. Free cooling means that the compressors are off, which, on the surface, means “free” as they are not drawing electricity.

In fact, through the use of an adiabatic system, the site would benefit from more than four times the free-cooling hours of a site without one. Naturally, the initial reaction to this cursory data would be “get that cooling guy in here and give him a PO so I have a really cool case study to present at the next Uptime Institute Symposium.” And, if we looked at the perceived cost savings over a ten-year period, we’d be feeling even better about our US$500,000 investment in that adiabatic system since it appears that it saved us over US$440,000 in operating expenses.

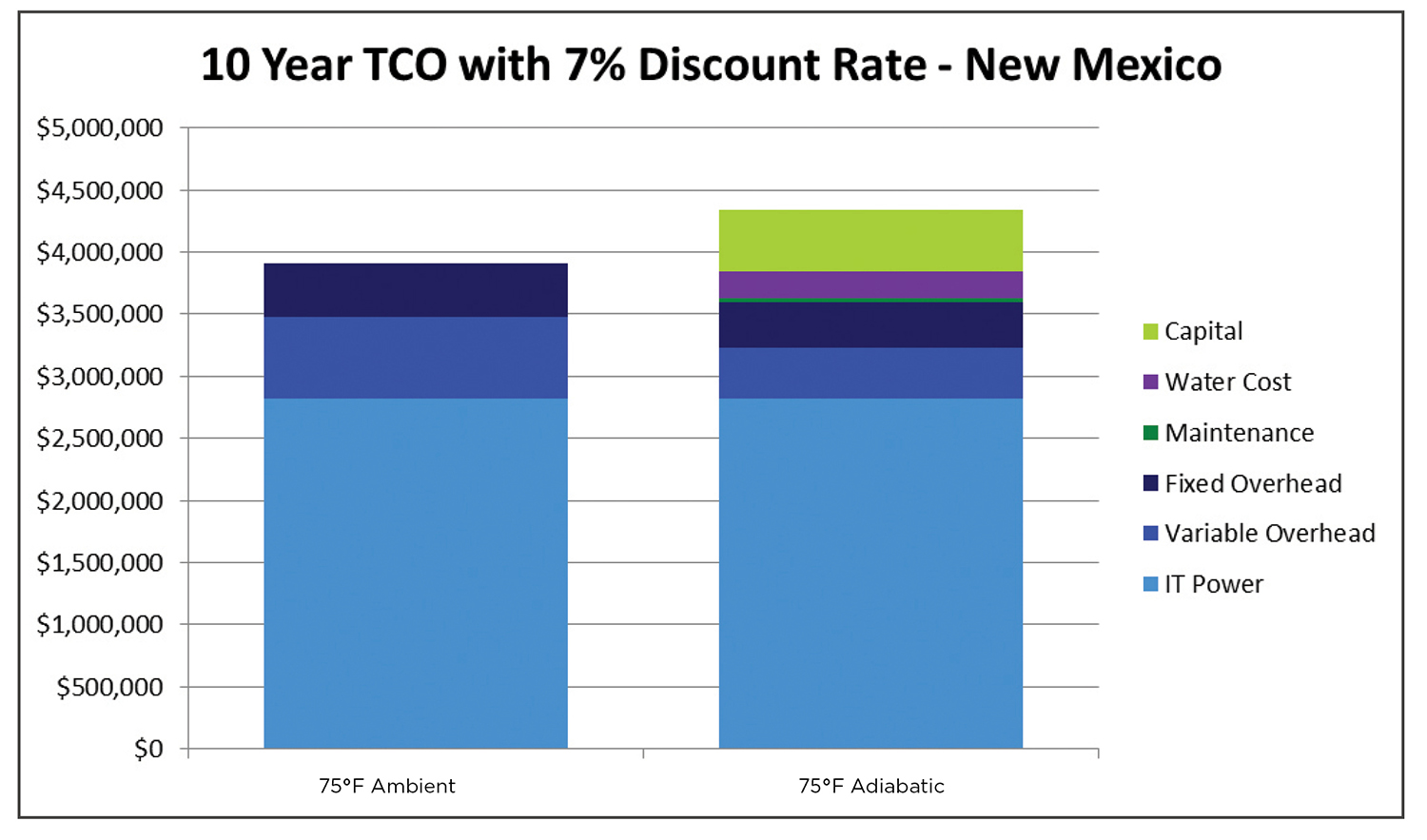

Unfortunately, appearances can be deceiving, and any analysis of this type needs to include a few things, such as discounted future savings (otherwise known as net present value, or NPV) and the cost of not only the system maintenance but also the water used, and its treatment, over the 10-year period. When these factors are taken into account, it turns out that our US$500,000 investment in an adiabatic cooling system actually resulted in a negative payback of US$430,000! That’s a high price to pay for a tenth of a point in your PUE.
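To make the mechanics of that comparison concrete, here is a minimal Python sketch of a net present value calculation. Only the US$500,000 first cost and the roughly US$440,000 of ten-year savings come from the example above. Spreading the savings evenly over ten years, the 8% discount rate, and the annual maintenance and water figure are placeholder assumptions, not Romonet’s inputs, so the result should not be expected to reproduce the US$430,000 figure. The function and variable names are illustrative.

```python
def net_present_value(first_cost, annual_savings, annual_costs, discount_rate, years):
    """NPV of an upfront investment that yields level annual savings net of annual costs."""
    net_annual = annual_savings - annual_costs
    discounted = sum(
        net_annual / (1 + discount_rate) ** year for year in range(1, years + 1)
    )
    return discounted - first_cost


FIRST_COST = 500_000             # from the article: adiabatic system investment
YEARS = 10                       # from the article: ten-year horizon
ANNUAL_ENERGY_SAVINGS = 44_000   # assumption: ~US$440,000 spread evenly over ten years
ANNUAL_MAINT_AND_WATER = 20_000  # hypothetical placeholder, not a figure from the paper
DISCOUNT_RATE = 0.08             # hypothetical placeholder, not a figure from the paper

# The "cursory" view: add up the energy savings and stop there.
undiscounted_savings = ANNUAL_ENERGY_SAVINGS * YEARS

# The fuller view: discount future savings and subtract maintenance and water.
npv = net_present_value(
    FIRST_COST, ANNUAL_ENERGY_SAVINGS, ANNUAL_MAINT_AND_WATER, DISCOUNT_RATE, YEARS
)

print(f"Undiscounted energy savings: US${undiscounted_savings:,.0f}")
print(f"NPV after first cost, maintenance, and water: US${npv:,.0f}")
```

Whatever inputs are chosen, discounting and recurring costs can only shrink the headline savings, which is why the first cost deserves its own line in the analysis.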

The point is that the failure to account for the long-term impact of an initial decision can permanently preclude companies from exercising alternative business, not just data center, strategies.

The Myth of Scale (a.k.a., The First Cost Trap)

Perhaps there is no better example of how the myth of scale morphs into the first cost trap than when a company elects to build out the entire shell of its data center upfront, even though its initial space requirements are only a fraction of the ultimate capacity. This is typically done using the justification that the company will eventually “grow into it,” and that it is necessary to build a big building because of the benefit of economy of scale. It’s important to note that this is also a strategy used by providers of MTDCs, and it doesn’t work any better for them.

The average-powered core and shell (defined here as the land, four walls, and roof along with a transformer and common areas for security, loading dock, restrooms, corridors, etc.) of a data center facility typically ranges from US$20 million to upwards of US$100 million. The standard rationale for this “upfront” mode of data center construction is that this is not the “expensive” portion of the build and will be necessary in the long run. In other words, the belief is that it is logical to build the facility in its entirety because construction is cheap on a per-square-foot basis. Under this scenario, the cost savings are gained through the purchase and use of materials in a high enough volume that price reductions can be extracted from providers. The problem is that when the first data center pod or module is added, the costs go up in additional US$10 million increments. In other words, in the best case it costs US$30 million minimum just to turn on the first server! Even modular options require that first generator and that first heat rejection and piping. First cost per kW is two to four times the supposed “end” point cost per kilowatt. Enterprises can pay two or three times more.

Option Value

This volume mode of cost efficiency has long been viewed as an irrefutable truth within the industry. Fortunately, or unfortunately, depending on how you look at things, irrefutable truths oftentimes prove very refutable. In this method of data center construction, what is gained is often less important than what has been lost.

Option value is the associated monetary value of the prospective economic alternatives (options) that a company has in making decisions. In the example, the company gained a shell facility that it believes, based on its current analysis, will satisfy both its existing and future data center requirements. However, the inexpensive (as compared to the fit out of the data center) cost of US$100-US$300/ft² is still real money (US$20-US$100 million depending on the size and hardening of the building). The building and the land it sits on are now dedicated to the purpose of housing the company’s data center, which means that it will employ today’s architecture for the data center of the future. If the grand plan does not unfold as expected, this is kind of like going bust after you’ve gone all in during a poker game.

Now that we have established what the business has gained through its decision to build out the data center shell, we should examine what it has lost. In making the decision to build in this way, the business has chosen to forgo any other use. By building the entire shell first, it has lost any future value of an appreciating asset: the land used for the facility. It cannot be used to support any other corporate endeavors, such as disaster recovery offices, and it cannot be sold for its appreciated value. While maybe not foreseeable, this decision can become doubly problematic if the site never reaches capacity and some usable portion of the building/land is permanently rendered useless. It will be a 39-year depreciating rent payment that delivers zero return on assets. Suddenly, the economy of scale is never realized, so the initial cost per kilowatt is the end-point cost.

For example, let’s assume a US$3-million piece of land and US$17 million to build a building of 125,000 ft² that supports six pods at 1,100 kW each. At US$9,000 per kW for the first data center, we have an all-in of US$30 million for 1,100 kW, or over US$27,000 per kW. It’s not until we build all six pods that we get to the economy of scale that produces an all-in of US$12,000/kW. In other words, there is no economy of scale unless you commit to invest almost US$80 million! This is the best case, assuming the builder is an MTDC.
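The arithmetic behind this example can be checked with a short Python sketch. The dollar and kW figures are the ones quoted in the paragraph above. The function names, and the simplifying assumption that every pod costs the same US$9,000 per kW as the first, are illustrative.

```python
# Figures quoted in the example above.
LAND_COST = 3_000_000     # US$ for the land
SHELL_COST = 17_000_000   # US$ for the 125,000 ft² building
POD_KW = 1_100            # IT capacity per pod, in kW
POD_COST_PER_KW = 9_000   # US$ per kW of fit out (assumed the same for every pod)
MAX_PODS = 6


def all_in_cost(pods: int) -> int:
    """Total capital committed once `pods` pods are fitted out in the full shell."""
    return LAND_COST + SHELL_COST + pods * POD_KW * POD_COST_PER_KW


def cost_per_kw(pods: int) -> float:
    """All-in cost divided by the IT capacity actually built."""
    return all_in_cost(pods) / (pods * POD_KW)


if __name__ == "__main__":
    for pods in range(1, MAX_PODS + 1):
        print(
            f"{pods} pod(s): US${all_in_cost(pods) / 1e6:.1f}M all-in, "
            f"US${cost_per_kw(pods):,.0f}/kW"
        )
```

With one pod the all-in is US$29.9 million (about US$27,200/kW); with all six it is US$79.4 million (about US$12,000/kW). The per-kW figure falls only as fast as capital is committed, and every intermediate stopping point leaves the shell cost spread over too few kilowatts.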

It is logical for corporate financial executives to ask whether this is the most efficient way to allocate capital. The company has also forfeited any alternative uses for the incremental capital that was invested to manifest this all-at-once approach. Obviously, once invested, this capital cannot be repurposed and remains tied to an underutilized depreciating asset.

Figure 3. Savings assume an energy cost of US$0.058/kWh, 0.25 cooling overhead, and 1,250 kW of IT load.

An Incremental Approach

The best way to address the shortcomings associated with the myth of scale is to construct data center capacity incrementally. This approach entails building a facility in discrete units that, as part of the base architecture, enable additional capacity to be added when it is required. For a number of reasons, until recently, this approach has not been a practical reality for businesses desiring this type of solution.

For organizations that elect to build their own data centers, the incremental approach described above is difficult to implement due to resource limitations. Lacking a viable prototype design (the essential element for incremental implementation), each project effectively begins from scratch and is typically focused on near-term requirements. Thus, the ultimate design methodology reflects the build-it-all-at-once approach, as it is perceived to limit the drain on corporate resources to a one-time-only requirement. The typical end result of these projects is an extended design and construction period (18-36 months on average), which sacrifices the efficiency of capital allocation and option value for a flawed definition of expediency.

For purveyors of MTDC facilities, incremental expansion via standardized discrete units is precluded by their business models. Exemplifying the definition of economies of scale found in our old Economics 101 textbooks, these organizations reduce their cost metrics by leveraging their size to procure discounted volume purchase agreements with their suppliers. These economies then translate into the need to build large facilities designed to support multiple customers. Thus, the cost efficiencies of MTDC providers drive a business model that requires large first-cost investments in data center facilities, with the core and shell built all at once and data center pods completed based on customer demand. Since MTDC efficiencies can only be achieved by recovering high first-cost investments through leasing capacity to multiple tenants or multiple pods to a tenant, providers are forced to locate these sites in market areas that include a high population of their target customers. Thus, the majority of MTDC facilities are found within a handful of markets (e.g., Northern Virginia, New York/New Jersey, and the San Francisco Bay area) where a critical mass of prospective customers can be found. This is the predominant reason why they have not been able to respond to customers requiring data centers in other locations. As a result, this MTDC model requires a high degree of sacrifice from customers. Not only must they relinquish their ability to locate their new data center wherever they need it, they must also pre-lease additional space to ensure that it will be available if they grow over time, as even the largest MTDC facilities have finite data center capacity.

Many industry experts view prefabricated data centers as a solution to this incremental requirement. In a sense, they are correct. These offerings are designed to make the addition of capacity a function of adding one or more additional units. Unfortunately, many users of prefabricated data centers experience problems stemming from how these products are incorporated into designs. Unless the customer is using them in a parking lot, more permanent configurations require the construction of a physical building to house them. The end result of this need is the construction of an oversized facility that will be grown into but that suffers from the same first cost and absence of option value as the typical customer-constructed or MTDC facility. In other words, if I have to spend US$20 million on day one for the shell and core, how am I saving by only building in 300-kW increments instead of 1-megawatt increments like the traditional guys?

The Purpose-Built Facility

In order to effectively implement a data center strategy that avoids both exorbitant first costs and the loss of option value, the facility itself must be designed for just such a purpose. Rather than attempting to use size as the method for cost reduction, the data center would achieve this requirement through the use of a prototype, replicable design. In effect, the data center becomes a product with cost focus on a system level, not parts and pieces.

To many, the term “standard” is viewed as a pejorative that denotes a less than optimal configuration. However, as “productization” has shown with the likes of the Boeing 737, the Honda Accord, or the Dell PC, when you include the most commonly desired features at a price below non-standard offerings, you eliminate or minimize the concern. For example, features like Uptime Institute Tier III Design and Construction Certification, LEED certification, a hardened shell, and ergonomic features like a move/add/change optimized design would be included in the standard offering. This limits the scope of customer personalization to the data hall, branding experience, security and management systems, and jurisdictional requirements. This is analogous to car models that incorporate the most commonly desired features as standard, while enabling the customer to “customize” their selection in areas such as car color, wheels, and interior finish.

The resulting solution then provides the customer with a dedicated facility that includes the most essential features and can be delivered within a short timeframe (under six months from initial ground breaking) without requiring the customer to spend US$20-US$100 million on a shell while simultaneously relinquishing the option value of the remaining land. Each unit would also be designed to easily allow additional units to be built and conjoined, so that expansion follows the customer’s timeframe and financial considerations rather than a schedule imposed by the facility itself or a provider.

Summary

Due to their historically limited alternatives, many businesses have been forced to justify the inefficiency of their data center implementations based on the myth of scale. Although solutions like pre-fabricated facilities have attempted to offer prospective users the incremental approach that negates the impact of high first costs and the elimination of alternatives (option value), ultimately they require the same upfront physical and financial requirements as MTDC alternatives. The alternative to these approaches is the productization of the data center, in which a standard offering that includes all of the most commonly requested customer features provides end users with a cost-effective option that can be grown incrementally in response to their individual corporate needs.

Industrialization, à la Henry Ford, ensures that each component is purchased at scale to reduce the cost per component. Productization shatters this theory by focusing on the system level, not the part/component level. It is through productization that the paradox of high-quality, low-cost, quickly delivered data centers becomes a reality.