Where the cloud meets the edge

Low latency is the main reason cloud providers offer edge services. Only a few years ago, the same providers argued that the public cloud (hosted in hyperscale data centers) was suitable for most workloads. But as organizations have remained steadfast in their need for low latency and better data control, providers have softened their resistance and created new capabilities that enable customers to deploy public cloud software in many more locations.

These customers need a presence close to end users because applications such as gaming, video streaming and real-time automation require low latency to perform well. End users, both consumers and enterprises, want more sophisticated capabilities with quicker responses, which developers building on the cloud want to deliver. Application providers also want to reduce the network costs and bandwidth constraints that result from moving data over wide distances, which further reinforces the need to keep data close to the point of use.

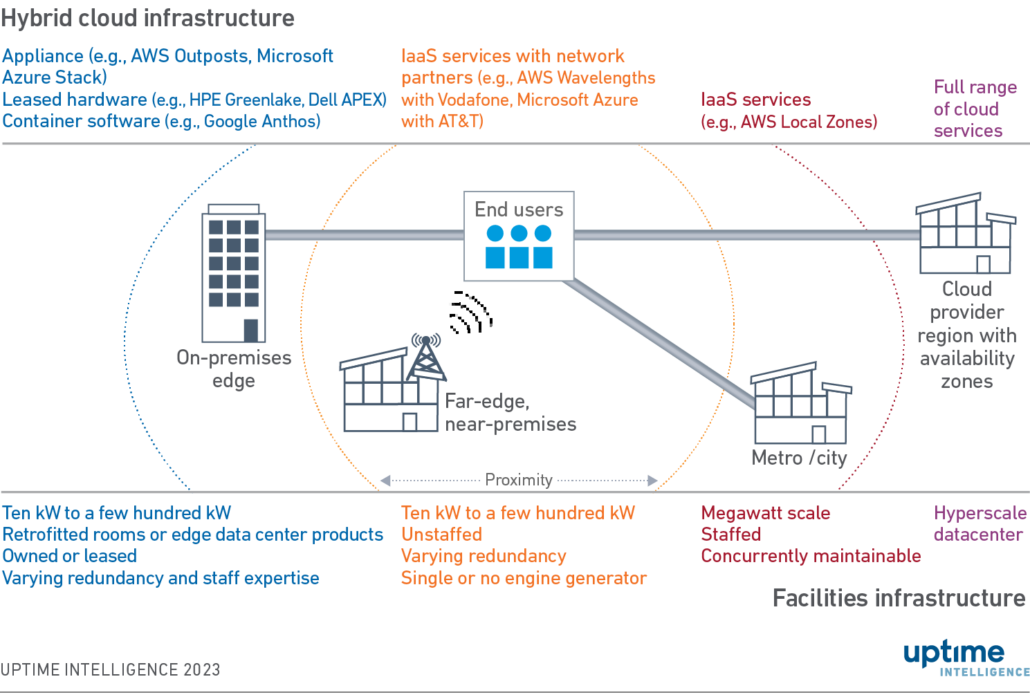

Cloud providers offer a range of edge services to meet the demand for low latency. This Update explains what cloud providers offer to deliver edge capabilities. Figure 1 shows a summary of the key products and services available.

Figure 1. Key edge services offered by cloud providers

In the architectures shown in Figure 1, public cloud providers generally consider edge and on-premises locations as extensions of the public cloud (in a hybrid cloud configuration), rather than as isolated private clouds operating independently. The providers regard their hyperscale data centers as default destinations for workloads and propose that on-premises and edge sites be used for specific purposes where a centralized location is not appropriate. Effectively, cloud providers see the edge location as a tactical staging post.

Cloud providers don’t want customers to view edge locations as similarly feature-rich as cloud regions. Providers only offer a limited number of services at the edge to address specific needs, in the belief that the central cloud region should be the mainstay of most applications. Because the edge cloud relies on the public cloud for some aspects of administration, there is a risk of data leakage and loss of control of the edge device. Furthermore, the connection between the public cloud and edge is a potential single point of failure.

Infrastructure as a service

In an infrastructure as a service (IaaS) model, the cloud provider manages all aspects of the data center, including the physical hardware and the orchestration software that delivers the cloud capability. Users are usually charged per resource consumed per period.

The IaaS providers use the term “region” to describe a geographical area that contains a collection of independent availability zones (AZs). An AZ consists of at least one data center. A country may have many regions, each typically having two or three AZs. Cloud providers offer nearly all the services they host in regions or AZs as IaaS.

Cloud providers also offer metro and near-premises locations sited in smaller data centers, appliances or colocation sites nearer to the point of use. They manage these in a similar way to AZs. Providers claim millisecond-level connections between end users and these edge locations. However, the edge locations usually have fewer capabilities, poorer resiliency and higher prices than AZs and regions, which are usually hosted in larger data centers.

Furthermore, providers don’t have edge locations in all areas: they create new locations only where volume is likely to make the investment worthwhile, typically in cities. Similarly, the speed of connectivity between edge sites and end users depends on the supporting network infrastructure and the availability of 4G or 5G.

When a cloud provider customer wants to create a cloud resource or build out an application or service, they first choose a region and AZ using the cloud provider’s graphical user interface (GUI) or application programming interface (API). To the user of GUIs and APIs, metro and near-premises locations appear as options for deployment just as a major cloud region would. Buyers can set up a new cloud provider account and deploy resources in an IaaS edge location in minutes.
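As a minimal sketch of this idea, the Python below models a deployment catalog in which regions, metro locations and near-premises locations are all just entries to choose from, each with a different set of services. The location names and service lists are hypothetical and do not correspond to any provider's API; the metro service list follows the typical set described in this Update, and the near-premises list is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    name: str            # hypothetical identifier, not a real provider location
    kind: str            # "region", "metro" or "near-premises"
    services: frozenset  # services assumed to be available at this location


# Illustrative catalog: every entry appears as a deployment option,
# but edge entries expose fewer services than the region.
CATALOG = [
    Location("example-region-1", "region", frozenset(
        {"compute", "storage", "containers", "load-balancing", "database", "analytics"})),
    Location("example-metro-1", "metro", frozenset(
        {"compute", "storage", "containers", "load-balancing"})),
    Location("example-5g-1", "near-premises", frozenset(
        {"compute", "storage"})),
]


def locations_supporting(required, catalog=CATALOG):
    """Return the names of locations that offer every required service."""
    required = frozenset(required)
    return [loc.name for loc in catalog if required <= loc.services]
```

A request for compute and storage alone matches all three entries, whereas adding a managed database to the requirements leaves only the region.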

Metro locations

Metro locations do not offer the range of cloud services that regions do. They typically offer only compute, storage, container management and load balancing. A region has multiple AZs; a metro location does not. As a result, it is impossible to build a fully resilient application in a single metro location (see Cloud scalability and resiliency from first principles).

The prime example of a metro location is Amazon Web Services (AWS) Local Zones. Use cases usually focus on graphically intense applications such as virtual desktops or video game streaming, or real-time processing of video, audio or sensor data. Customers should understand that although edge services in the cloud might cut latency and bandwidth costs, resiliency may also be lower.

These metro-based services, however, may still match many or most enterprise levels of resiliency. Data center infrastructure that supports metro locations is typically in the scale of megawatts, is staffed, and is built to be concurrently maintainable. Connection to end users is usually provided over redundant local fiber connections.

Near-premises (or far edge) locations

Like cloud metro locations, near-premises locations have a smaller range of services and AZs than regions do. The big difference between near-premises and metro locations is that resources in near-premises locations are deployed directly on top of 4G or 5G network infrastructure, perhaps only a single cell tower away from end users. This reduces hops between networks, substantially reducing latency and delays caused by congestion.

Cloud providers partner with major network carriers to enable this: for example, AWS Wavelength is delivered in partnership with Vodafone, and Microsoft’s Azure Edge Zones service with AT&T. Use cases include real-time applications such as live video processing and analysis, autonomous vehicles and augmented reality. 5G enables connectivity where there is no fixed-line telecoms infrastructure or in temporary locations.

These sites may be cell tower locations or exchanges, operating at tens of kilowatts (kW) to a few hundred kW. They are usually unstaffed (remotely monitored) with varying levels of redundancy and a single (or no) engine generator.

On-premises cloud extensions

Cloud providers also offer hardware and software that can be installed in a data center that the customer chooses. The customer is responsible for all aspects of data center management and maintenance, while the cloud provider manages the hardware or software remotely. The provider’s service is often charged per resource per period over an agreed term. A customer may choose these options over IaaS because no suitable cloud edge locations are available, or because regulations or strategy require them to use their own data centers.

Because the customer chooses the location, the equipment and data center may vary. In the edge domain, these locations are: typically 10 kW to a few hundred kW; owned or leased; and constructed from retrofitted rooms or using specialized edge data center products (see Edge data centers: A guide to suppliers). Levels of redundancy and staff expertise vary, so some edge data center product suppliers provide complementary remote monitoring services. Connectivity is supplied through telecom interconnection and local fiber, and latency between site and end user varies significantly.

Increasingly, colocation providers differentiate by directly peering with cloud providers’ networks to reduce latency. For example, Google Cloud recommends its Dedicated Interconnect service for applications where latency between public cloud and colocation site must be under 5 ms. Currently, 143 colocation sites peer with Google Cloud, including those owned by companies such as Equinix, NTT, Global Switch, Interxion, Digital Realty and CenturyLink. Other cloud providers have similar arrangements with colocation operators.

Three on-premises options

Three categories of cloud extensions can be deployed on-premises. They differ in how easy it is to customize the combination of hardware and software. An edge cloud appliance is simple to implement but has limited configuration options; a pay-as-you-go server gives flexibility in capacity and cloud integration but requires more configuration; finally, a container platform gives flexibility in hardware and software and multi-cloud possibilities, but requires a high level of expertise.

Edge cloud appliance

An edge appliance is a pre-configured hardware appliance with pre-installed orchestration software. The customer installs the hardware in its data center and can configure it, to a limited degree, to connect to the public cloud provider. The customer generally has no direct access to the hardware or orchestration software.

Organizations deploy resources via the same GUI and APIs as they would use to deploy public cloud resources in regions and AZs. Typically, the appliance needs to be connected to the public cloud for administration purposes, with some exceptions (see Tweak to AWS Outposts reflects demand for greater cloud autonomy). The appliance remains the property of the cloud provider, and the buyer typically leases it based on resource capacity over three years. Examples include AWS Outposts, Azure Stack Hub and Oracle Roving Edge Infrastructure.

Pay-as-you-go server

A pay-as-you-go server is a physical server leased to the buyer and charged based on committed and consumed resources (see New server leasing models promise cloud-like flexibility). The provider maintains the server, measures consumption remotely, proposes capacity increases based on performance, and refreshes servers when appropriate. The provider may also include software on the server, again charged using a pay-as-you-go model. Such software may consist of cloud orchestration tools that provide private cloud capabilities and connect to the public cloud for a hybrid model. Customers can choose their hardware specifications and use the provider’s software or a third party’s. Examples include HPE GreenLake and Dell APEX.
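To illustrate how a charge based on committed and consumed resources might be calculated, the sketch below assumes a simple model: the buyer pays for the committed capacity in full, plus a separate rate for any consumption above the commitment. This model, the function name and all rates are hypothetical and are not taken from any supplier's price list. Amounts are in cents to keep the arithmetic exact.

```python
def monthly_charge_cents(committed_units: int, consumed_units: int,
                         committed_rate_cents: int, overage_rate_cents: int) -> int:
    """Charge for one month under an assumed commit-plus-overage model.

    The committed capacity is always billed, whether used or not;
    consumption above the commitment is billed at the overage rate.
    """
    overage_units = max(0, consumed_units - committed_units)
    return committed_units * committed_rate_cents + overage_units * overage_rate_cents


# Hypothetical figures: 100 units committed at 10 cents, overage at 12 cents.
quiet_month = monthly_charge_cents(100, 80, 10, 12)   # below the commitment
busy_month = monthly_charge_cents(100, 130, 10, 12)   # 30 units of overage
```

Under these assumed figures, the quiet month costs 1,000 cents (the commitment alone) and the busy month costs 1,000 + 30 × 12 = 1,360 cents.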

Container software

Customers can also choose their own blend of hardware and software with containers as the underlying technology, to enable interoperability with the public cloud. Containers allow software applications to be decomposed into many small functions that can be maintained, scaled, and managed individually. Their portability enables applications to work across locations.

Cloud providers offer managed software for remote sites that is compatible with public clouds. Examples include Google Anthos, IBM Cloud Satellite and Red Hat OpenShift Container Platform. In this option, buyers can choose their hardware and some aspects of their orchestration software (e.g., container engines), but they are also responsible for building the system and managing the complex mix of components (see Is navigating cloud-native complexity worth the hassle?).

Considerations

Buyers can host applications in edge locations quickly and easily by deploying in metro and near-premises locations offered by cloud providers. Where a suitable edge location is not available, or the organization prefers to use on-premises data centers, buyers have multiple options for extending public cloud capability to an edge data center.

Edge locations differ in terms of resiliency, product availability and — most importantly — latency. Latency should be the main motivation for deploying at the edge. Generally, cloud buyers pay more for deploying applications in edge locations than they would in a cloud region. If there is no need for low latency, edge locations may be an expensive luxury.
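The trade-off can be sketched as a simple selection rule: among the locations that meet the application's latency requirement, pick the cheapest. The Python below is an illustration only; the latency and cost figures are invented for the example and do not reflect any provider's measured performance or pricing.

```python
from typing import NamedTuple, Optional, Sequence


class Option(NamedTuple):
    name: str
    latency_ms: float   # assumed round-trip latency to end users
    hourly_cost: float  # assumed relative cost of the same resource


def cheapest_within_budget(options: Sequence[Option],
                           max_latency_ms: float) -> Optional[Option]:
    """Return the lowest-cost option that meets the latency budget, or None."""
    eligible = [o for o in options if o.latency_ms <= max_latency_ms]
    return min(eligible, key=lambda o: o.hourly_cost, default=None)


# Hypothetical figures: edge locations are faster but cost more.
OPTIONS = [
    Option("region", 40.0, 1.00),
    Option("metro", 8.0, 1.30),
    Option("near-premises", 3.0, 1.60),
]
```

With a generous budget of 100 ms the rule selects the region; tightening the budget to 10 ms selects the metro location, and to 5 ms the near-premises location. If no location meets the budget, the function returns `None`.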

Buyers must deploy applications to be resilient across edge locations and cloud regions. Edge locations have less resiliency, may be unstaffed, and may be more likely to fail. Applications must be architected to continue to operate if an entire edge location fails.
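One common way to meet this requirement is ordered failover: traffic goes to the preferred edge location while it is healthy and falls back to another edge location or a cloud region when it is not. The sketch below shows the selection logic only. The endpoint names are hypothetical, and the health check is a stand-in for whatever monitoring the application actually uses.

```python
from typing import Callable, Sequence


def pick_endpoint(preferred_order: Sequence[str],
                  is_healthy: Callable[[str], bool]) -> str:
    """Return the first healthy endpoint, trying them in preference order."""
    for endpoint in preferred_order:
        if is_healthy(endpoint):
            return endpoint
    raise RuntimeError("no healthy endpoint available")


# Hypothetical deployment: two edge sites, with a cloud region as the fallback.
ENDPOINTS = ["edge-site-a", "edge-site-b", "cloud-region"]

# Stand-in health status; here the preferred edge site has failed.
health = {"edge-site-a": False, "edge-site-b": True, "cloud-region": True}
chosen = pick_endpoint(ENDPOINTS, lambda name: health[name])
```

In this example the first edge site is down, so requests are served from the second. If both edge sites fail, the cloud region takes over, at the cost of higher latency.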

Cloud provider edge locations and products are not generally designed to operate in isolation — they are intended to serve as extensions of the public cloud for specific workloads. Often, on-premises and edge locations are managed via public cloud interfaces. If the connection between the edge site and the public cloud goes down, the site may continue to operate — but it will not be possible to deploy new resources until the site is reconnected to the public cloud platform that provides the management interface.
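The behavior described above can be modeled in a few lines. In this sketch, which does not represent any provider's software, an edge site keeps running the workloads it already has while disconnected, but refuses new deployments until the link to the public cloud management interface is restored. The class and exception names are hypothetical.

```python
class ControlPlaneUnavailable(Exception):
    """Raised when the public cloud management interface cannot be reached."""


class EdgeSite:
    def __init__(self) -> None:
        self.connected = True   # link to the public cloud management interface
        self.workloads = set()  # workloads currently running at the site

    def deploy(self, name: str) -> None:
        """Deploy a new workload; this needs the management interface."""
        if not self.connected:
            raise ControlPlaneUnavailable("cannot deploy while disconnected")
        self.workloads.add(name)

    def running(self) -> list:
        """Workloads keep running whether or not the site is connected."""
        return sorted(self.workloads)


site = EdgeSite()
site.deploy("video-analytics")
site.connected = False  # simulate loss of the connection to the public cloud
```

After the simulated disconnection, `site.running()` still lists the existing workload, while a further call to `site.deploy()` raises `ControlPlaneUnavailable` until `connected` is set back to `True`.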

Data protection is often cited as a use case for edge, a reason why some operators may choose to locate applications and data at the edge. However, because the edge device and public cloud need to be connected, there is a risk of user data or metadata inadvertently leaving the edge and entering the public cloud, thereby breaching data protection requirements. This risk must be managed.

Dr. Owen Rogers, Research Director of Cloud Computing

Tomas Rahkonen, Research Director of Distributed Data Centers

UI @ 2020
