Case Study: Italy’s First Tier IV TCCF

System efficiency proves more important than equipment efficiency

By Paolo Barberis, Leonardo Sergardi, and Ferdinando Ciardullo

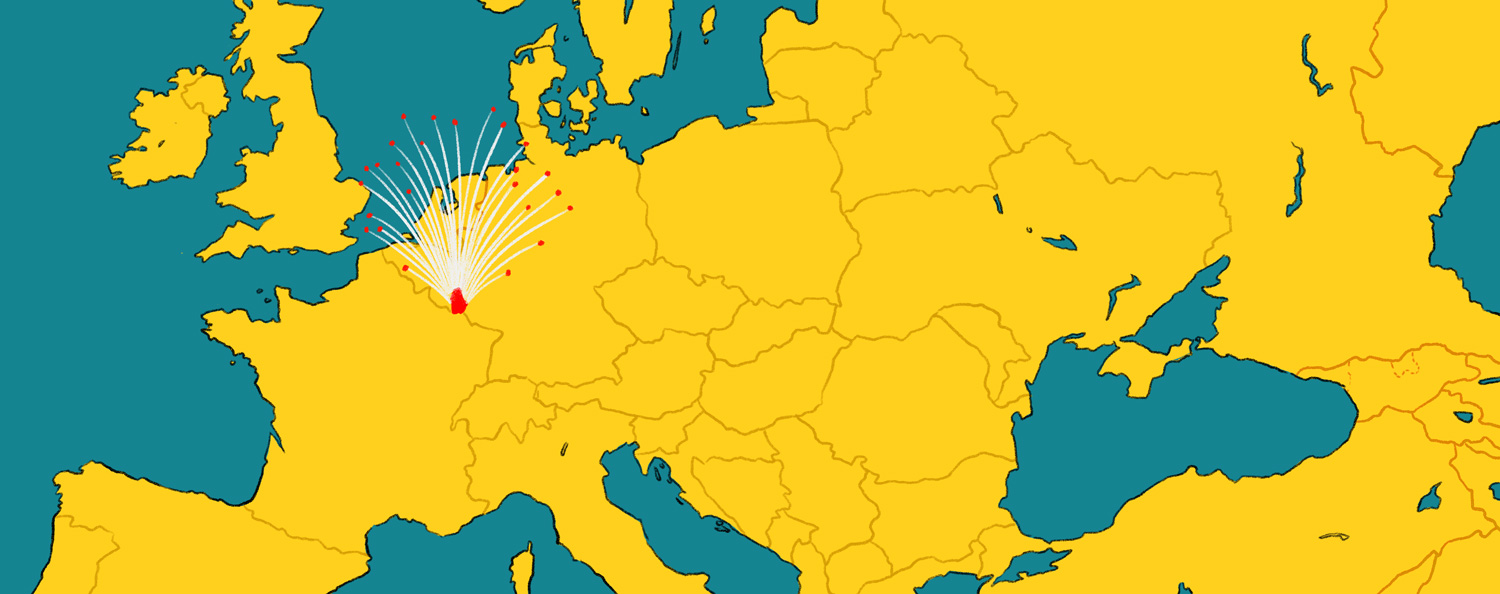

FastWeb is an Italian telecommunications operator, 100% owned by Swisscom, providing ultrabroadband services to the Italian consumer and corporate markets, in which it holds a 35% market share. Since its founding 15 years ago, FastWeb has invested in infrastructure. For instance, FastWeb has an optical fiber network of about 38,000 kilometers. This strategy was reconfirmed for 2014/2015 when FastWeb announced its Fiber to the Street project (FTTS), a two-year plan to provide ultrabroadband services to 30% of the Italian population, an increase from 20%. Similarly, FastWeb has invested in data centers. At present, the company owns two data centers, with a total area of 6,000 square meters (m2) of white space. Today FastWeb has chosen to focus on providing network services along with housing, hosting, cloud, managed services, and managed security.

In order to support these services, FastWeb decided to build a new data center offering the highest level of security possible to its clients. This goal led to the decision to achieve Italy’s first Tier IV Certification of Constructed Facilities (TCCF) with quality construction and energy efficiency also in mind. After examining the various options, FastWeb decided to site the data center within an owned, existing facility built in 1937.

FastWeb’s choice to build its new data center within its headquarters alongside the existing data rooms and offices was the main constraint in developing the project. As a result the design approach was reversed. Every effort was made to build the best infrastructure possible to meet FastWeb’s performance requirements, without disrupting the existing functions and the surrounding environment. In practice, it was necessary to identify the parts of FastWeb’s entire business plan that could be carried out on site optimally and conform to the plan itself.

During the preliminary design phases, a major effort was made to locate the optimal solution, which made it possible to develop the project details in a very short time, without requiring any changes to the drawings. The challenge was integrating and holistically adapting the spaces available to the space requirements of the white space and supporting equipment, the volumetric plan of the facility, the requirements of Tier IV, and the efficiency goals. As a result of the detailed preliminary planning, time was recovered during the detailed design and execution phases, which had practically no surprises.

Finally, acoustic pollution needed to be managed in line with local standards because of the downtown location of the site. FastWeb carried out an acoustic audit to identify any mitigation measures that would be needed, and the mitigation interventions were decided on the basis of the results. Acoustics were tested twice afterwards: once during commissioning and then again after the data center went live. The issue of acoustic pollution was fully addressed by specifying super-silenced chillers and engine-generator sets, positioning them in the courtyard, and installing appropriate noise-attenuation gear. These steps did not affect the loads in any way.

Because the site was already part of FastWeb’s network, the new data center benefits from all the robustness and redundancy of connectivity of the network at low cost. In addition, the company’s Technological Department is located on the site, which gives the facility access to the highest technical skills available. Moreover, from a market viewpoint, key clients like being hosted in central Milano, which is a capital of finance and industry and near many of their headquarters.

The facility comprises a 600-m2 data room with 80 m2 of ancillary space, 930 m2 of machine rooms, and 650 m2 of external technological areas, all located in the same building on multiple adjacent floors (see Figures 1 and 2). The facility’s white space houses 162 racks (42U each) using Hot Aisle containment technology (see Figure 3), with 1,250 kilowatts (kW) available for IT loads (an average of 7.5 kW/rack and power density of 2.1 kW/m2). The facility can also accommodate a few high-density (20 kW/rack) islands. The data center is the first one built in Italy to achieve TCCF from Uptime Institute.
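The density figures above follow from simple division, sketched here in Python. The constants are the values quoted in the article; the function names are illustrative only. Straight division gives roughly 7.7 kW per rack, slightly above the quoted average of 7.5 kW/rack, so the published figure is presumably rounded or computed on a basis the article does not state.

```python
# Values quoted in the article.
IT_LOAD_KW = 1250.0    # power available for IT loads
RACK_COUNT = 162       # 42U racks in the white space
DATA_ROOM_M2 = 600.0   # data room floor area


def average_rack_density_kw(it_load_kw: float, racks: int) -> float:
    """Average IT power per rack, in kW."""
    return it_load_kw / racks


def area_power_density_kw_per_m2(it_load_kw: float, area_m2: float) -> float:
    """IT power per square meter of data room, in kW/m2."""
    return it_load_kw / area_m2


rack_kw = average_rack_density_kw(IT_LOAD_KW, RACK_COUNT)
area_kw = area_power_density_kw_per_m2(IT_LOAD_KW, DATA_ROOM_M2)
print(f"{rack_kw:.2f} kW/rack, {area_kw:.2f} kW/m2")
```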

FastWeb’s data center includes a double-path, always-on scheme for both electrical and mechanical systems, including two feeds from the external utility. Utility service to the facility is 5 megavolt-amperes (MVA), with two 2.4-megawatt (MW) engine-generator sets (N+N) (continuous-rated power as per ISO 8528) and four very high yield, scalable, modular static 800-kVA UPSs (N+N), capable of operating in double conversion, line interactive, or off-line modes to limit losses, producing annual efficiencies of 98% (see Figure 4). Total cooling capacity is 3,340 kW.
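As a rough sanity check on the N+N figures, the sketch below computes the capacity left on a single path and compares it with the 1,250 kW IT load. It assumes the four UPSs are split evenly across the two paths and uses a UPS output power factor of 0.9; neither assumption is stated in the article, and the helper name is hypothetical.

```python
def capacity_per_path(unit_rating: float, unit_count: int, paths: int = 2) -> float:
    """Capacity available on one path when units are split evenly across paths."""
    return unit_rating * unit_count / paths


# Values quoted in the article.
UPS_KVA = 800.0
UPS_COUNT = 4
GENSET_KW = 2400.0
GENSET_COUNT = 2
IT_LOAD_KW = 1250.0

# Assumption for illustration only: the article gives no power factor.
ASSUMED_POWER_FACTOR = 0.9

ups_kw_per_path = capacity_per_path(UPS_KVA, UPS_COUNT) * ASSUMED_POWER_FACTOR
genset_kw_per_path = capacity_per_path(GENSET_KW, GENSET_COUNT)

print(f"UPS capacity per path:    {ups_kw_per_path:.0f} kW")
print(f"Genset capacity per path: {genset_kw_per_path:.0f} kW")
print("One UPS path covers the IT load:", ups_kw_per_path >= IT_LOAD_KW)
```

Under these assumptions either path alone can carry the full IT load, which is what an N+N arrangement is meant to guarantee.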

PUE as measured during commissioning was 1.25. The FastWeb facility includes both a building management system (BMS) (see Figure 5) and Schneider Electric’s StruxureWare Data Center Infrastructure Management (DCIM) that help it manage energy use and achieve its energy goals. Together these monitor or control about 10,000 points and variables.
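PUE is the ratio of total facility power to IT power, so the measured value can be turned into an estimate of infrastructure overhead. The minimal sketch below assumes the commissioning PUE of 1.25 holds at the full 1,250 kW IT load, which the article does not state; the function names are illustrative.

```python
def total_facility_kw(it_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    return it_kw * pue


def overhead_kw(it_kw: float, pue: float) -> float:
    """Non-IT power (cooling, distribution losses, and so on) implied by the PUE."""
    return it_kw * (pue - 1.0)


IT_LOAD_KW = 1250.0   # from the article
MEASURED_PUE = 1.25   # from the article, measured during commissioning

total_kw = total_facility_kw(IT_LOAD_KW, MEASURED_PUE)
extra_kw = overhead_kw(IT_LOAD_KW, MEASURED_PUE)
print(f"Total: {total_kw:.1f} kW, overhead: {extra_kw:.1f} kW")
```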

To make changes as quick and easy as possible, power distribution and data cabling are installed above the racks. Power is distributed through double-path busbars. Power to an individual rack can be completely changed without affecting the others, because feeds from the busbar are through extractable boxes equipped with switches. This system has eliminated the need for electric distribution panels, bringing a noticeable reduction of losses related to electrical distribution.

The data center required about 50,000 m of power cables and about 50,000 m of auxiliary and control cables plus 900 manual and automatic valves on the cooling circuits. Two distinct 40 Gbit fiberoptic backbones link the facility to the external mains network.

COOLING

In order to reach FastWeb’s high energy efficiency goals, the cooling system has been designed to reduce the energy required for pumps as allowed by the existing building. The mechanical systems are sized to ensure the data room has a C1 class microclimate, as defined by ASHRAE’s Thermal Guidelines. Design temperatures at the server input are 77°F (25°C), which can be raised to 86°F (30°C) in case of future installation of new-generation servers.

The choice of cooling equipment was made with overall facility efficiency in mind, considering the real operational parameters of the system (temperatures, loads, etc.) and the specific designed setting logic. In other words, simply picking the most efficient equipment does not guarantee the most efficient system. This thinking led to solutions where the highest efficiency equipment was not chosen for the project.

It was gratifying, then, when operating experience confirmed that the most efficient system was achieved by matching the main equipment to the specific operating conditions expected in the facility. This outcome was particularly noticeable in the performance of chiller units and in-row units.

Four high-efficiency chillers provide N+N cooling to the facility. The chillers are leading edge in terms of energy efficiency, internal redundancy, reliability, occupancy and noise; they are equipped with oil-free magnetic levitation compressors and are specified to be super silenced. Both primary cooling loops are equipped with inertial refrigerated water storage tanks to ensure operation of the data room for 15 minutes in case of blackout. Secondary distribution of cooling fluids is achieved using double-ring distributions, each powered by one of the two chiller plants. The energy efficiency ratio (EER) coefficient of 9.0 (50% of rated load for each chiller) demonstrates the energy efficiency of the system.
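Two back-of-the-envelope checks help put these figures in context: the water volume needed to absorb the heat load for 15 minutes, and the electrical input implied by the EER. The sketch below is illustrative only. It assumes the heat load equals the 1,250 kW IT load, a usable temperature rise of 5 K in the stored water, standard properties for water, and an EER expressed as a dimensionless ratio (kW of cooling per kW of electricity). None of these assumptions is confirmed by the article.

```python
WATER_CP_KJ_PER_KG_K = 4.186        # specific heat of water (approximate)
WATER_DENSITY_KG_PER_M3 = 1000.0    # density of water (approximate)


def storage_volume_m3(load_kw: float, minutes: float, delta_t_k: float) -> float:
    """Water volume needed to absorb load_kw for the given time
    with a usable temperature rise of delta_t_k."""
    energy_kj = load_kw * minutes * 60.0
    mass_kg = energy_kj / (WATER_CP_KJ_PER_KG_K * delta_t_k)
    return mass_kg / WATER_DENSITY_KG_PER_M3


def electrical_input_kw(cooling_kw: float, eer: float) -> float:
    """Electrical power drawn to deliver cooling_kw at a dimensionless EER."""
    return cooling_kw / eer


HEAT_LOAD_KW = 1250.0        # assumption: heat load equals the IT load
RIDE_THROUGH_MIN = 15.0      # from the article
ASSUMED_DELTA_T_K = 5.0      # assumption: usable temperature rise in the tank
EER = 9.0                    # from the article, at 50% of rated chiller load

volume = storage_volume_m3(HEAT_LOAD_KW, RIDE_THROUGH_MIN, ASSUMED_DELTA_T_K)
chiller_kw = electrical_input_kw(HEAT_LOAD_KW, EER)
print(f"Storage volume: {volume:.1f} m3")
print(f"Chiller electrical input: {chiller_kw:.1f} kW")
```

With these inputs the storage requirement comes to roughly 54 cubic meters of water, and the chillers would draw about 139 kW to remove the full IT load. A smaller usable temperature rise would increase the required volume proportionally.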

A dedicated control system ensures that the chillers operate as efficiently as possible, balancing their output according to the load present. The same system also identifies maintenance actions, in addition to routine maintenance operations, that could improve efficiency.

In the white space, in-row cooling units ensure an N+N redundancy factor for each aisle. During normal operation, each aisle is cooled separately thanks to modulating two-way valves and fans activated by inverters.

The in-row technology was chosen because it has the following advantages over traditional perimeter computer room air handler (CRAH) units:

- High efficiency

- No mixing of cold and hot air

- No bypass phenomena

- Proximity to cold and hot air flows allows for minimal loss and good airflow management

- Minimizes the need for humidification or dehumidification

- Supports modular design

- Ease of installation

The high temperature of the chilled water (15–20°C) used in the facility means that air can’t be dehumidified in the summer. As a result, the facility includes two direct expansion generator sets operating with heat pumps to treat the fresh air. Two cascaded heat recovery systems give the units particularly high overall efficiency.

FastWeb utilizes a DCIM system (see Figure 6) and a double-path BMS to control the facility, which accounts for energy use to the PDUs and to the in-row cooling units.

The BMS and DCIM integrate control of the facility and the IT infrastructure, correctly managing in real time the variations in server operation caused by the use of cloud tools as well as those caused by physical hardware changes and software integrations. The DCIM system has been equipped with specific modules dedicated to the management of colocation facilities with various users and companies present in the same room.

FIRE PREVENTION

Fire prevention in the facility is particularly advanced. The system combines traditional smoke detection with a high-sensitivity smoke detection system (HSSD) for early detection. An inert gas IG01 (argon) system can be deployed for active fire protection. The system is centralized with a double set of cylinders (main and reserve) to protect the data room, the tape library, UPS rooms, batteries, electric transformers, and the engine generators.

FINAL RESULT

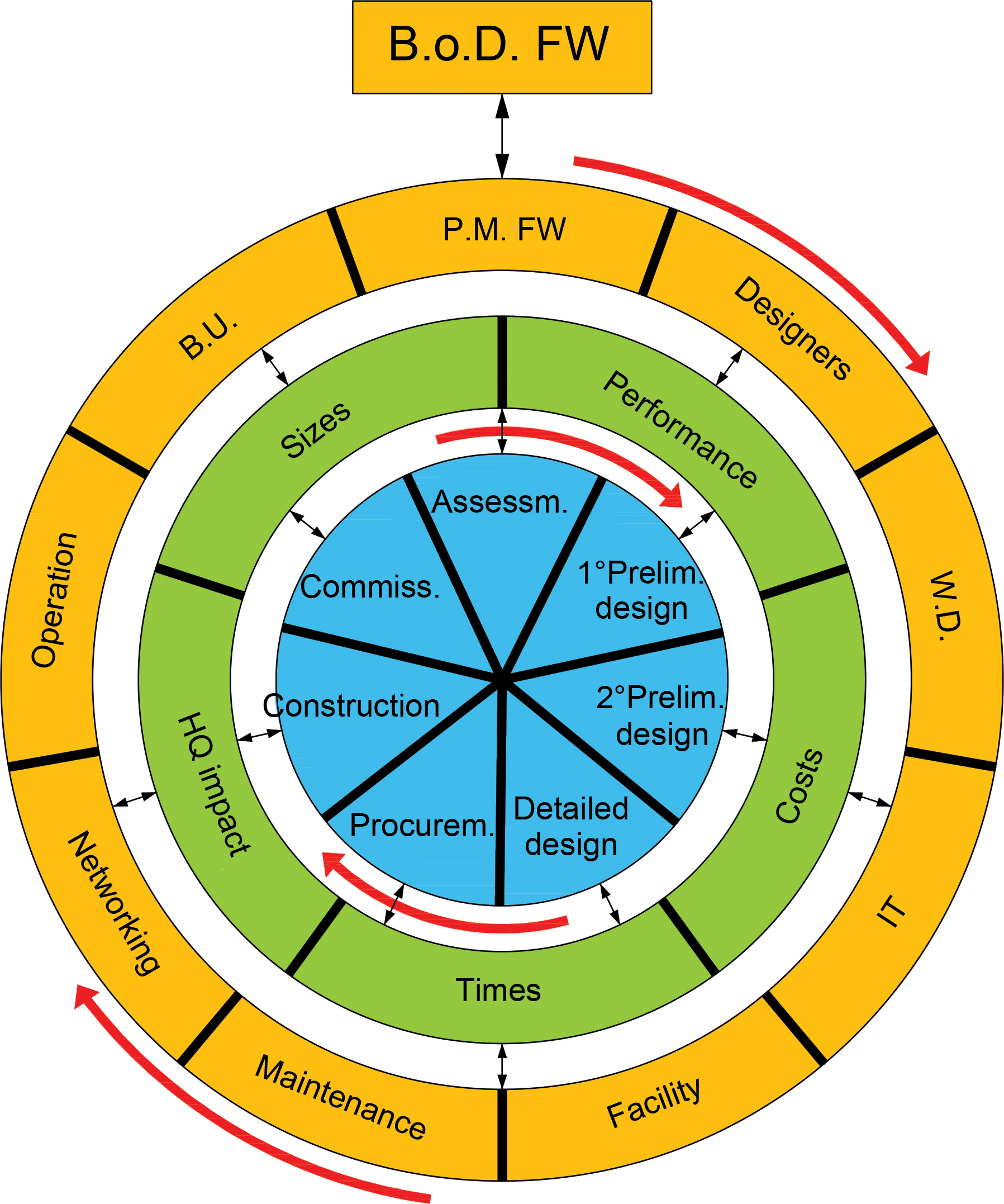

Design work proceeded through the following phases:

• Site assessment

• First preliminary stage

• Second preliminary stage in which FastWeb’s needs were matched to the features of the site

• Detailed design, with a high level of definition (see Figure 7)

All the phases included cost estimates, timing, and impact on the existing facilities.

The whole design and construction process (from kick off to commissioning) required a high level of communication between all the people involved in project implementation, with continuous sharing of choices, decisions, and changes in order to achieve the owner’s desired goals. In addition, each phase of design was developed to the greatest degree compatible with that given phase. Creating detailed drawings and documents reduced miscommunication and confusion on the project.

A team comprising FastWeb stakeholders, including the project leader, IT, networking, business unit, operation, facility, maintenance, purchasing managers, as well as design engineers and the director of works managed the design, procurement, and construction processes. The team, through meetings held at least weekly, constantly checked and managed the whole construction process, sharing and reporting results and decisions at the end of each phase to the board of directors. Additional communication took place at all critical moments of the process. This team approach ensured that the construction would meet budgets (with a 5% tolerance) and deadlines, while providing a facility that meets FastWeb’s needs.

Paolo Barberis is the manager of the Department of Technology at FastWeb S.p.a., a telecommunications company operating landline and mobile networks in Italy. He graduated in electronic engineering in 1989 at the Politecnico of Milano and is a member of the Charter of Engineers of the Province of Sondrio. Mr. Barberis has over 25 years of experience in designing and managing Telecommunication and Information Technology services with landline and mobile operators having mission critical services. He has been entrusted with the design, construction, and management of six data centers.

Leonardo Sergardi is a partner and the cofounder of the engineering and design company AS ingg. He earned a degree in Electrotechnical Engineering from the Politecnico di Milano in 1978. Mr. Sergardi is an Accredited Tier Designer, Certified Data Centre Professional, Certified Data Centre Energy Professional, and a member of the Charter of Engineers of the Province of Milano. He has completed more than 100 projects and 20 data centers, with more than 35 years of experience designing systems and managing projects for advanced tertiary buildings, data centers, and mission critical facilities.

Ferdinando Ciardullo works at the engineering and design company AS ingg. He has a degree in Mechanical Engineering from the Politecnico di Milano. Mr. Ciardullo is a CDCDP and a member of the Charter of Engineers of the Province of Milano. He has completed more than 100 projects and 15 data centers, with more than 35 years of experience designing systems and managing projects for advanced tertiary buildings, data centers, and mission critical facilities.

Getty Images
