Accredited Tier Designer Profiles: Adel Rizk, Gerard Thibault and Michael Kalny

Three data center design professionals talk about their work and Uptime Institute’s ATD program.

By Kevin Heslin, Uptime Institute

Uptime Institute’s Accredited Tier Designer (ATD) program and its Tier Certification program have affected data center design around the world, raised standards for construction, and brought a new level of sophistication to facility owners, operators, and designers everywhere, according to three far-flung professionals who have completed the ATD program. Adel Rizk of Saudi Arabia’s Edarat; Gerard Thibault, senior technical director, Design and Construction division of Digital Realty (DLR) in the U.K.; and Michael Kalny, head of Metronode Engineering, Leighton Telecommunications Group, in Australia, have applied the concepts they learned in the ATD program to develop new facilities and improve the operation of legacy facilities while also aggressively implementing energy-efficiency programs. Together, they show that high reliability and energy efficiency are not mutually exclusive goals. Of course, they each work in different business environments in different countries, and the story of how they achieve their goals under such different circumstances makes interesting reading.

In addition to achieving professional success, the ATDs each noted that the Tier Certification and ATD programs had helped them innovate and develop new approaches to data center design and operations, while also helping them market facilities and raise the standards of construction in their countries.

More than that, Rizk, Thibault, and Kalny have followed career arcs with some similarities. Each developed data center expertise after entering the field from a different discipline: Rizk from telephony and manufacturing, Thibault from real estate, and Kalny from building fiber transmission networks. They each acknowledge the ATD program as having deepened their understanding of data center design and construction and having increased their ability to contribute to major company initiatives. This similarity has particular significance in the cases of Rizk and Kalny, who have become data center experts in regions that often depend on consultants and operators from around the globe to ensure reliability and energy efficiency. It is in these areas, perhaps, that the ATD credential and Tier certification have their greatest impact.

On the other hand, the U.K., especially London, has been the home of many sophisticated data center operators and customers for years, making Thibault’s task of modifying Digital Realty’s U.S. specification to meet European market demands a critical one.

On the technology front, all three see continued advances in energy efficiency, and they all see market demand for greater sustainability and energy efficiency. Kalny and Thibault both noted increased adoption of higher server air supply temperatures in data centers and the use of outside air. Kalny, located in Australia, noted strong interest in a number of water-saving technologies.

Hear from these three ATDs below:

ADEL RIZK

Just tell me a little about yourself.


I’m a consulting engineer. After graduating from a civil engineering program in 1998 and working for a few years on public projects for the Public Switched Telephone Network (PSTN) Outside Plant (OSP), I decided in 2000 to change my career and joined a manufacturer of fast-moving consumer goods. During this period, I also pursued my MBA.

After gaining knowledge and experience in IT by enhancing and automating the manufacturer’s operations and business processes, I found an opportunity to start my own business in IT consulting with two friends and colleagues of mine and co-founded Edarat Group in 2005.

As a consultant working in Edarat Group, I also pursued professional certifications in project management (PMP) and business continuity (MBCI) and was in charge of implementing the Business Continuity Management Program for telecom and financial institutions in Saudi Arabia.

How did you transition from this IT environment to data centers?

One day, a customer who was operating a strategic and mission-critical data center facility asked me to help him improve the reliability of his MEP infrastructure. I turned his problem into an opportunity and ventured into the data center facility infrastructure business in 2008.

In 2009-2010, Edarat Group, in partnership with IDGroup, a leading data center design company based out of Boston, developed the design for two Tier IV and two Tier III data centers for a telecom operator and the smart cities being built in Riyadh by the Public Pension Agency. In 2010, I became an Accredited Tier Designer (ATD) through Uptime Institute, and all four facilities achieved Tier Certification of Design Documents (TCDD).

What was the impact of the Tier certification?

Once we succeeded in achieving the Tier Certification, it was like a tipping point.

We became the leading data center design company in the region. Saudi Arabia values certifications very highly; any certification is considered valuable, and certifications are often treated as a requirement for businesses as well as for professionals. By the same token, the ATD certificate positioned me as the lead consultant at that time.

Since that time, Edarat has grown very rapidly, working on the design and construction supervision of Tier III, Tier IV, and even Tier II facilities. Today, at least 10 of our facilities have received Tier Certification of Design Documents, and one facility is Tier III Certified as a Constructed Facility (TCCF).

What has been your personal involvement in projects at Edarat?

I am involved in every detail of the design and construction process. I have full confidence in the facilities being built, and Uptime Institute Certifications are simply evidence of these successful achievements.

What is Edarat doing today?

Currently, we are involved in design and construction. In construction, we review material submittals and shop drawings and apply value engineering to make sure that the changes during construction don’t affect reliability or Tier certification of the constructed facility. Finally, we manage the integrated testing and final stages of commissioning and ensure smooth handover to the operations team.

Are all your projects in Saudi Arabia?

No. We also obtained Tier III certification for a renowned bank in Lebanon. We also have done consultancy work for data centers in Abu Dhabi and Muscat.

What stimulates demand for Tier certification in Saudi Arabia?

Well, there are two factors: the guarantee of quality and the show-off factor due to competition. Some customers have asked us to design and build a Tier IV facility for them, though they can tolerate a long period of downtime and would not suffer great losses from a business outage.

Edarat Group is vendor-neutral, and as consultants, it is our job to educate the customer and raise his awareness because investing in a Tier IV facility should be justifiable when compared to the cost of disruption.

My experience in business continuity enables me to help customers meet their business requirements. A data center facility should be fit-for-purpose, and every customer is unique, each having different business, regulatory, and operational requirements. You can’t just copy and paste. Modeling is most important at the beginning of every data center design project.

Though it may seem like hype, I strongly believe that Uptime Institute certification is a guarantee of reliability and high availability.

What has the effect of the ATD been on the data center industry in Saudi Arabia?

Now you can see other players in the market, including systems integrators, getting their engineers ATD certified. Being ATD certified really helps. I personally always refer to the training booklet; you can’t capture and remember everything about Tiers after just three days of training.

What’s unique about data centers in Saudi Arabia?

Energy is cheap; telecom is also cheap. In addition, Saudi Arabia is a gateway from Europe to Asia. The SAS1 cable connects Europe to India through Saudi Arabia. Energy-efficient solutions are difficult to achieve. Free cooling is not available in the major cities, and connectivity is not yet available in remote areas where free cooling is available for longer periods during the year. In addition, the climate conditions are not very favorable to energy-efficient solutions; for example, dust and sand make it difficult to rely on solar power. In Riyadh, the cost of water is so high that it makes the cost of investing in cooling towers unjustifiable compared to air-cooled chillers. It could take 10 years to get payback on such a system.

Budget can sometimes be a constraint on energy efficiency because, as you know, green solutions have high capex, which is unattractive because energy is cheap in Saudi Arabia. If you use free cooling, there are limited hours, plus the climate is sandy, which renders maintenance costs high. So the total cost of ownership for a green solution is not really justifiable from an economic perspective, and the government so far does not have any regulations on carbon emissions and so forth.

Therefore, in the big cities, Riyadh, Dammam, and Jeddah, we focus primarily on reliability. Nevertheless, some customers still want to achieve LEED Gold.

What’s the future for Edarat?

We are expanding geographically and expanding our services portfolio. After design and building, the challenge is now in the operation. As you already know, human errors represent 70 percent of the causes for downtime. Customers are now seeking our help to provide consultancy in facility management, such as training, drafting SOPs, EOPs, capacity management, and change management procedures.

MICHAEL KALNY

Tell me a little about yourself.


I received an honors degree in electrical engineering in the 1980s and completed a postgraduate diploma in communications systems a couple of years later. In conjunction with practical experience working as a technical officer and engineer in the telecommunications field, I gained a very sound foundation on which to progress my career in the ICT space.

My career started with a technical officer position in a company called Telecom, the monopoly carrier operating in Australia. I was there about 14 years and worked my way through many departments within the company including research, design, construction and business planning. It was a time spent learning much about the ICT business and applying engineering skills and experience to modernize and progress the Telecom business. Around 1990 the Australian government decided to end the carrier monopoly, and a company by the name of Optus emerged to compete directly with Telecom. Optus was backed by overseas carriers BellSouth (USA) and Cable and Wireless (UK). There were many new exciting opportunities in the ICT carrier space when Optus began operations. At this point I left Telecom and started with Optus as project manager to design and construct Optus’ national fiber and data center network around Australia.

Telecom was viewed by many as slow to introduce new technologies and services and not competitive compared to many overseas carriers. Optus changed all that. They introduced heavily discounted local and overseas calls, mobile cellular systems, pay TV, point-to-point high-capacity business network services and a host of other value-added services for business and residential customers. At the time, Telecom struggled to develop and launch service offerings that could compete with Optus, and a large portion of the Australian population embraced the growth and service offerings available from Optus.

I spent 10 years at Optus, where I managed the 8500-kilometer rollout of fiber that extended from Perth to Adelaide, Melbourne, Canberra, Sydney and Brisbane. I must have done a good job on the build, as I was promoted to the role of Field/National Operations manager to manage all the infrastructure that was built in the first four years. Maybe that was the punishment in some way? I had a workgroup of some 300-400 staff during this period and gained a great deal of operational experience.

The breadth of knowledge, experience and networks established during my time at Optus was invaluable and led me to my next exciting role in the telecommunications industry during 2001. Nextgen Networks was basically formed to fill a void in the Australian long-haul, high-capacity digital transmission carrier market, spanning all mainland capital cities with high-speed fiber networks. Leighton Contractors was engaged to build the network and maintain it. In conjunction with transmission carriage services, Nextgen also pre-empted the introduction and development of transmission nodes and data center services. Major rollouts of fiber networks, associated transmission hubs and data centers were a major undertaking, providing exciting opportunities to employ innovation and new technologies.

My new role within Leighton Telecommunications Group to support and build the Nextgen network had several similarities with Optus. These included design activities and technical acceptance from the builder of all built infrastructure, including transmission nodes and data centers. During 2003 Nextgen Networks went into administration as the forecast demand for large amounts of transmission capacity did not eventuate. Leighton Telecommunications realized the future opportunities and potential of the Nextgen assets and purchased the company. Through good strategic planning and business management, the Nextgen business has continued to successfully expand and grow. It is now Australia’s third-largest carrier. This was indeed a success story for the Leighton Telecommunications Group.

Metronode was established as a separate business entity to support the Nextgen network rollout by providing capital city transmission nodes for the long-haul fiber network and data center colo space. Metronode is now one of the biggest data center owner/operators in Australia, with the largest coverage nationally.

I’ve now been with the Leighton Telco Group for 12 years and have worked in the areas of design, development, project management and operations. Much of the time was actually spent in the data center area of the business.

For the last three years I have headed Metronode’s Engineering Group and have been involved in many exciting activities including new technology assessment, selection and data center design. All Metronode capital city data centers were approaching full design capacity a couple of years ago. In order to continue on a successful growth path for data center space, services and much improved energy efficiency, Metronode could no longer rely on traditional data center topologies and builds to meet current and future demands in the marketplace. After very careful consideration and planning, it was obvious that any new data center we would build in Australia would have to meet several important design criteria. These included good energy efficiency (sub-1.2 PUE), modular construction, speed of construction, high availability and environmental sustainability. Formal certification of the site to an Uptime Institute Tier III standard was also an important requirement.

During 2011 I embarked on a mission to assess a range of data center offerings and technologies that would meet all of Metronode’s objectives.

So your telecom and fiber work set you up to be the manager of operations at Metronode, but where did you learn the other data center disciplines?

During the rollout of fiber for the Optus network, capital city “nodes” or data centers were built to support the transmission network. I was involved with the acceptance of all the transmission nodes and data centers, and I guess that’s where I got my first exposure to data centers. Also, when Nextgen rolled out its fiber network, it was also supported by nodes and data centers in all the capital cities.

I was primarily responsible for acceptance of the fiber network, all regenerator sites (basically mini data centers) and the capital city data centers that the fiber passed through. I wasn’t directly involved with design, but I was involved with commissioning and acceptance, which is where I got my experience.

Do you consider yourself to be more of a network professional or facilities professional?

A bit of both; however, I have a stronger affinity with the data center side of things. I’m an electrical engineer and relate more closely to the infrastructure side of data centers and transmission regeneration sites; they all depend on cooling, UPS, batteries, controlled environments, high levels of redundancy and all that sort of thing.

Prior to completion of any data center build (includes fiber transmission nodes), a rigorous commissioning and integrated services testing (IST) regime is extremely important. A high level of confidence in the design, construction and operation is gained after the data center is subjected to a large range of different fault types and scenarios to successfully prove resilience. My team and I always work hard to cover all permutations and combinations of fault/operational scenarios during IST to demonstrate resilience of the site before handing it over to our operations colleagues.

What does the Australian data center business look like? Is it international or dependent on local customers?

Definitely dependent on both local and international customers. Metronode is a bit different from most of the competition, in that we design, build, own and operate each of our sites. We specialize in providing wholesale colo services to large corporations, state and federal government departments and carriers. Many government departments in Australia have embarked on plans to consolidate and migrate their existing owned, leased and “back of office” sites into two or more modern data centers managed and operated by experienced operators with a solid track record. Metronode recently secured the contract to build two new major data centers in NSW to consolidate and migrate all of the government’s requirements.

Can you describe Metronode’s data centers?

We have five legacy sites that are designed on traditional technologies and builds comprising raised floor, chilled-water recirculation, CRACs on floor, under-floor power cabling from PDUs to racks and with relatively low-power density format. About three years ago, capacity was reaching design limits and the requirement to expand was paramount. I was given the task of reviewing new and emerging data center technologies that would best fit Metronode’s business plans and requirements.

It was clear that a modular data center configuration would provide significant capital savings upfront by way of only expanding when customer demand dictated. The data center had to be pre-built in the factory, tested and commissioned and proven to be highly resilient under all operating conditions. It also had to be able to grow in increments of around 800 kW of IT load up to a total of 15 MW if need be. Energy efficiency was another requirement, with a PUE of less than 1.2 set as a non-negotiable target.

BladeRoom technology from the U.K. was chosen. Metronode purchased BladeRoom modules, which were shipped to Australia and assembled on-site. We also coordinated the design of plant rooms to accommodate site HV intake, switchgear, UPS and switchboards to support the BladeRoom modules. The first BladeRoom deployment of 1.5 MW in Melbourne took around nine months to complete.

BladeRoom uses direct free air and evaporative cooling as the primary cooling system. It uses an N+1 DX cooling system as a backup. The evaporative cooling system was looked at very favorably in Australia mainly because of the relatively high temperatures and low humidity levels throughout the year in most capital cities.

To date, we have confirmed that free air/evaporative cooling is used for 95-98% of the year, with DX cooling systems used for the balance. Our overall energy usage is very low compared to any traditional type sites.
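To put the 95 to 98 percent figure into hours, the short Python sketch below splits a year between free air/evaporative cooling and DX backup. It assumes a 365-day (8,760-hour) year and treats the quoted percentages as shares of operating hours; the function name is hypothetical.

```python
HOURS_PER_YEAR = 8760  # assumption: non-leap year, continuous operation


def cooling_hours(free_fraction: float) -> tuple[float, float]:
    """Split annual hours between free air/evaporative cooling and DX backup.

    free_fraction is the share of the year on free air/evaporative cooling
    (hypothetical helper, illustrating the figures quoted in the interview).
    """
    free = HOURS_PER_YEAR * free_fraction
    dx = HOURS_PER_YEAR - free
    return free, dx


for frac in (0.95, 0.98):
    free, dx = cooling_hours(frac)
    print(f"{frac:.0%} free cooling: {free:,.0f} h free, {dx:,.0f} h DX")
```

At those percentages, the DX backup would carry the load for roughly 175 to 438 hours a year.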

We were the first data center owner/operator to have a fully Uptime Institute-certified Tier III site in Australia. This was another point of differentiation we used to present a unique offering in the Australian marketplace.

In Australia and New Zealand, we have experienced many other data center operators claiming all sorts of Tier ratings for their sites, such as Tier IV, Tier IV+, etc. Our aim was to formalize our tier rating by gaining a formal accreditation that would stand up to any scrutiny.

The Uptime Institute Tier ratings appealed to us for many reasons, so we embarked on a Tier III rating for all of our new BladeRoom sites. From a marketing perspective, it’s been very successful. Most customers have stopped asking many questions relating to concurrent maintainability, now that we have been formally certified. In finalizing the design for our new generation data centers, we also decided on engineering out all single points of failure. This is over and above the requirement for a Tier III site, which has been very well received in the marketplace.

What is the one-line of the BladeRoom electrical system?

The BladeRoom data hall comprises a self-contained cooling unit, UPS distribution point and accommodation space for all the IT equipment.

Support of the BladeRoom data hall requires utility power, a generator, UPS and associated switchgear to power the BladeRoom data hall. These components are built into what we call a “duty plant room.” The design is based on a block-redundant architecture and does not involve the paralleling of large strings of generators to support the load under utility power failure conditions.

A separate duty plant room is dedicated to each BladeRoom, and a separate “redundant plant room” completes the design. In the case of any duty plant room failure, critical data hall load will be transferred to the redundant plant room via a pair of static transfer switches (STS). Over here we refer to that as a block-redundant architecture. We calculate that we will achieve better than five nines (99.999 percent) availability.
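To make the block-redundant idea and the five-nines figure more concrete, here is a toy Python model. It is a hypothetical simplification, not Metronode’s actual design logic: it assumes each data hall has one dedicated duty plant room, that a single shared redundant plant room can carry only one hall at a time, and that STS transfers always succeed. The function names are illustrative. It also converts 99.999 percent availability into an annual downtime budget, assuming a 365-day year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def allowed_downtime_minutes(availability: float) -> float:
    """Annual downtime budget implied by an availability fraction."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def powered_halls(n_halls: int, failed_duty: set[int], redundant_ok: bool = True) -> set[int]:
    """Toy block-redundant model (hypothetical simplification).

    Hall i is normally fed by duty plant room i. If that room fails, an STS
    pair transfers the hall to the shared redundant plant room, which is
    assumed here to have capacity for only one hall at a time.
    """
    powered = {i for i in range(n_halls) if i not in failed_duty}
    if redundant_ok:
        stranded = sorted(failed_duty & set(range(n_halls)))
        if stranded:
            # Assumption: the lowest-numbered failed hall takes the redundant room.
            powered.add(stranded[0])
    return powered


print(f"five nines: {allowed_downtime_minutes(0.99999):.2f} min/year")
print(powered_halls(4, {1}))     # one duty room down: every hall stays powered
print(powered_halls(4, {1, 2}))  # two down: the redundant room rescues only one
```

Under these assumptions, a single duty plant room failure leaves every hall powered, while a second, overlapping failure strands one hall. Five nines corresponds to a budget of about 5.26 minutes of downtime per year.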

Our objective was to ensure a simple design in order to reduce any operational complexity.

What’s the current footprint and power draw?

The data center built in Melbourne is based on a BladeRoom data hall with a cooling module on either end, allowing it to support 760 kW of IT load.

To minimize footprint, we build data halls into a block comprising four 760-kW data halls, double-stacked. This provides us with two ground-floor data halls and two first-floor data halls, with a total IT capacity of 3 MW. Currently, the Melbourne site comprises a half block, which is 1.5 MW; we’re planning to build the next half block by the end of this year. Because the design is modular and repeatable, we simply replicate the block structure across the site based on client-driven demand. Our Melbourne site has capacity to accommodate five blocks or 15 MW of IT load.
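The block arithmetic can be checked with a few lines of Python. This sketch uses the 760 kW per-hall figure quoted above, with names chosen for illustration; it shows that the quoted 1.5 MW, 3 MW, and 15 MW are rounded from 1.52, 3.04, and 15.2 MW.

```python
HALL_KW = 760        # IT capacity per BladeRoom data hall, as quoted
HALLS_PER_BLOCK = 4  # two ground-floor plus two first-floor data halls


def it_capacity_mw(blocks: float) -> float:
    """IT capacity in MW for a number of blocks (0.5 = half block)."""
    return blocks * HALLS_PER_BLOCK * HALL_KW / 1000


for blocks in (0.5, 1, 5):
    print(f"{blocks} block(s): {it_capacity_mw(blocks):.2f} MW")
```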

In terms of the half block in operation in Melbourne, we have provisioned about 1 MW of IT load to our clients; however, utilization is still low at around 100 kW. In general, we have found that many clients do not reach their full allocated capacity for some time, possibly due to being conservative about demand forecast and the time it takes for complex migrations and new installations to be completed.

What about power density?

We can accommodate 30 kW per rack. A supercomputer was recently installed that took up six or seven rack spaces. With a future power demand in excess of 200 kW, we are getting close to the 30 kW per rack mark.
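A quick Python check of the rack-density remark. It assumes the 200 kW demand is spread evenly over the six or seven rack spaces; because the quoted demand is “in excess of” 200 kW, these per-rack values are lower bounds.

```python
def kw_per_rack(total_kw: float, racks: int) -> float:
    """Average power density, assuming load is spread evenly across racks."""
    return total_kw / racks


for racks in (6, 7):
    print(f"200 kW over {racks} racks: {kw_per_rack(200, racks):.1f} kW/rack")
```

That works out to roughly 28.6 to 33.3 kW per rack, which brackets the 30 kW per rack design figure.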

And PUE?

At our new Melbourne site, we currently support an average IT load of 100 kW across 1.5 MW of IT load capacity, which is roughly 7% IT load. That is a very light load, which would produce an unmentionable PUE in a traditionally designed site! Our monthly PUE is now running at about 1.5. Based on trending over the last three months (which have been summer months in Australia), we are well on target to achieve our design PUE profile. We’re very confident we’ll have a 1.2 annual rolling PUE once we reach 30% load; we should have a sub-1.2 PUE as the load approaches 100%.
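PUE is total facility power divided by IT power. The Python sketch below applies that definition to the figures quoted above (100 kW average IT load, 1.5 MW capacity, monthly PUE of about 1.5). The implied 150 kW facility draw and 50 kW overhead are derived from those numbers, not measured values, and the function names are illustrative.

```python
def load_fraction(it_kw: float, capacity_kw: float) -> float:
    """Share of provisioned IT capacity actually in use."""
    return it_kw / capacity_kw


def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    return total_facility_kw / it_kw


it_kw, capacity_kw, monthly_pue = 100.0, 1500.0, 1.5
facility_kw = monthly_pue * it_kw  # implied total draw: 150 kW
overhead_kw = facility_kw - it_kw  # non-IT power (cooling, UPS losses, etc.): 50 kW

print(f"load: {load_fraction(it_kw, capacity_kw):.1%}")
print(f"facility: {facility_kw:.0f} kW, overhead: {overhead_kw:.0f} kW")
assert abs(pue(facility_kw, it_kw) - monthly_pue) < 1e-12
```

Any part of that overhead that does not scale with IT load weighs heavily at 7% utilization and becomes a smaller share as the load grows, which is consistent with the expectation of a lower PUE at higher load.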

What got you interested in the ATD program and what have been its benefits?

During my assessment of new-generation data centers, it was difficult to fully understand the resilience that various data center configurations provided and to compare them against one another. At this point it was decided that some formal standard/certification would be the logical way to proceed, so that data center performance/characteristics could be compared in a like-for-like manner. A number of standards and best practices were reviewed, including those published by IBM, BICSI, AS/NZ Standards, TIA-942, Uptime Institute (UI), etc., many of which quoted different rating definitions for each “tier.” The Uptime Institute tiering regime appealed to me the most, as it was not prescriptive in nature and yet provided an objective basis for comparing the functionality, maintainability, redundancy and fault tolerance levels for different site infrastructure topologies.

My view was that formal certification of a site would provide a clear differentiator between ourselves and competition in the marketplace. To further familiarize myself with the UI standards, applications and technical discussions with clients, an ATD qualification was considered a valuable asset. I undertook the ATD course nearly two years ago. Since then, the learnings have been applied to our new designs, in discussions with clients on technical performance, in design reviews with consultants, and for comparing different data center attributes.

I found the ATD qualification very useful in terms of assessing various designs. During the design of our Melbourne data center, our local design consultants didn’t have anyone who was ATD accredited locally, and I found that the designs presented did not always meet the minimum requirements for Tier III, while in many other cases they exceeded them. This was another very good reason for completing the ATD course in order to keep an eye on consultant designs.

Michael, are you the only ATD at Metronode?

I’m the only Tier accredited designer in Metronode. Our consultants have since had a few people accredited because it looked a little bit odd that the client had an ATD and the consultant didn’t.

What does the future hold?

We won a major contract with the NSW government last year, which involved building two new data centers. The NSW government objective was to consolidate around 200 existing “data centers” and migrate services into the two main facilities. They’re under construction as we speak. There’s one in Sydney and one just south of Sydney near Wollongong. We recently obtained Tier III design certification from Uptime for both sites.

The Sydney data center will be ready for live operations in mid-July this year and the site near Wollongong operational a couple of months later. The facilities in Sydney and near Wollongong have been dimensioned to support an ultimate IT capacity of 9 MW.

Metronode also has a new data center under construction in Perth. It will support an ultimate IT capacity of 2.2 MW, and the first stage will support 760 kW. We hope to obtain Tier III design certification on the Perth site shortly and expect to have it completed and operational before the end of the year.

The other exciting opportunity is in Canberra, and we’re currently finalizing our design for this site. It will be a Tier III site with 6 MW of IT capacity.

With my passion for sustainability and high efficiency, we’re now looking at some major future innovations to further improve our data center performance. We are now looking at new hydrogel technologies where moisture from the data hall exhaust air can be recycled back into the evaporative cooling systems. We are also harvesting rainwater from every square meter of roof at our data centers. Rainwater is stored in tanks and used for the evaporative cooling systems.

Plant rooms containing UPS, switchboards, ATS, etc. in our legacy sites are air conditioned. If you walked into one of these plant rooms, you’d experience a very comfortable 23 or 24 degrees Celsius all year round. Plant rooms in our new data centers run between 35 and 40 degrees Celsius, allowing them to be free air-cooled for most of the year. This provides significant energy savings and allows our PUE to be minimized.

We are now exploring the use of hot exhaust air from the data halls to heat the generators, rather than using electrical energy to heat engine water jackets and alternators. Office heating is another area where the use of data hall exhaust air is being examined.

GERARD THIBAULT

How did you get started?


I had quite a practical education up to the point I undertook a degree in electronics and electrical engineering and then came to the market.

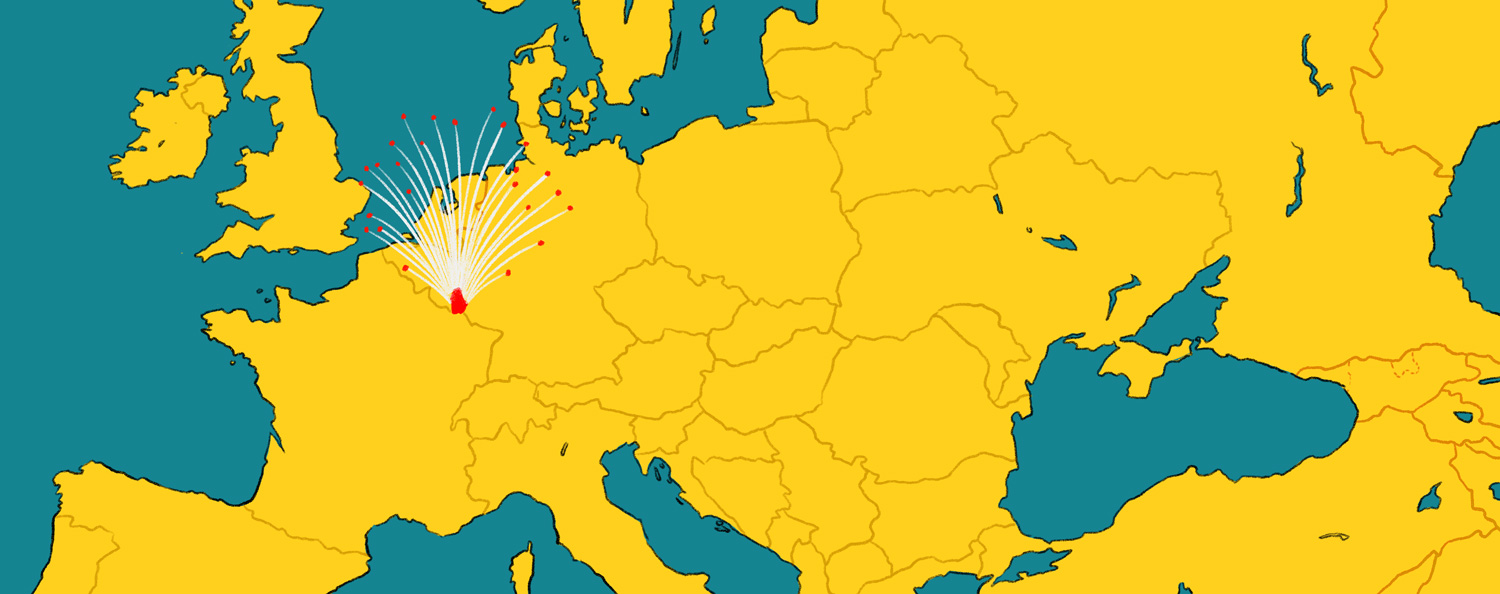

I worked on a number of schemes unconnected with data centers until I joined CBRE in 1998, and it was while working with them that I stumbled across the data center market in support of Global Crossing, which was looking for real estate across Europe as it built its pan-European network. Within CBRE, I provided a lot of support to the project management of their data center/POP builds as well as consultancy to the CBRE customer base. When Global Crossing got their network established, I joined them to head up their building services design function within the Global Center, the web-hosting portion of Global Crossing. Together with a colleague leading construction, we ran a program of building five major data centers across the tier-one cities with two more in design.

After the crash of 2001, I returned to CBRE, working within the technical real estate group. I advised clients such as HSBC, Goldman Sachs and Lehman Brothers about the design and programming and feasibility of potential data centers. So I had a lot of exposure to high capital investment programs, feasibility studies for HSBC, for instance, covering about £220 million investment in the U.K. for a pair of mirrored data centers to replace a pair of dated facilities for a global bank.

During 2005-2006, I became aware of Digital Realty through a number of projects undertaken by our team. I actually left CBRE to work for Digital directly, becoming European employee #4 and setting up an embryonic group as part of the REIT that is Digital Realty.

Since then, I’ve been responsible for a number of new builds in Europe, including Dublin and London, driving the design standards that Digital builds to in Europe, which included taking the U.S. guidelines requirements and adapting them to not only European units but also to European data center requirements. That, in a way, led to my current role.

Today, I practice less of the day-to-day development and am more involved with strategic design and how we design and build projects. I get involved with the sales team about how we try to invite people into our portfolio. One of those key tools was working with Uptime Institute to get ATD accreditation so that we could talk authoritatively in assessing customer needs for reliability and redundancy.

What are the European data center requirements, other than units of measure and power differences?

I think it’s more about the philosophy of redundancy. It’s my own view that transactions in the U.S. are very much more reliant on the SLA and how the developer or operator manages his own risk, compared to certification within the U.K. End users of data centers, whether it is an opex (rental) or build-to-own requirement, seem to exercise more due diligence for the product they are looking to buy. Part of the development of the design requirement was that we had to modify the electrical arrangements to be more of a 2N system and provide greater resilience in the cooling system to meet the more stringent view of the infrastructure.

So you feel your U.K. customers are going to examine claims of redundancy more carefully, and they’re going to look for higher standards?

Yes. It’s a matter of where they see the risks. Historically, we’ve seen that in the U.K., and across Europe too, they’ve sought to eradicate the risks, whereas I think maybe because of the approach to rental in the U.S., the risk is left with the operator. DLR has a robust architecture globally to mitigate our risk, but other operators may adopt different risk mitigation measures. It seems to me that over the years people in the U.K. market want to tick all the boxes and ensure that there is no risk before they take on the SLA, whereas in the U.S., it’s left to the operator to manage.

How has the ATD program played into your current role and helped you meet skepticism?

I think one of the only ways people can benchmark a facility is by having a certain stamp that says it meets a certain specification. In the data center market, there isn’t really anything that gives you a benchmark to judge a facility by, aside from the Uptime Institute ATD and certification programs.

It’s a shame that people in the industry will say that a data center is Tier X when it hasn’t been assessed or certified. More discerning clients can easily see that a data center certified in line with Uptime Institute specifications will give them the assurance they need, compared to a facility where they have no visibility of what a failure is going to do to the data center service. Certification, particularly to Uptime Institute guidance, is a really good way of benchmarking and reducing risk. That is certainly what customers are looking for. And perhaps it helps them sleep better at night; I don’t know.

Did the standards influence the base design documents of Digital Realty? Or was the U.S. version more or less complete?

I think the standards have affected the document quite a lot within Europe. We were the first to introduce 2N supply all the way from the medium-voltage supply to the rack supply. In the U.S., we always operated at 2N UPS, but the switchgear requires the skills of the DLR Tech Ops team to make it concurrently maintainable. Additional features are required to meet the Uptime Institute standards, as I’ve come to understand from taking the course.

I think we had always looked at achieving concurrent maintainability, but that might mean taking some additional operational risks. When you sit back and analyze systems using the Uptime philosophy, you can see that features such as double valving or double electrical isolation give you the ability to maintain the facility not just while maintaining your N capacity, or further resilience if you have a Tier IV system, but in a safe and predictable manner.

We’ve often considered systems concurrently maintainable where a pipe freeze could be used to replace a critical valve. That might well be an industry-accepted technique, but if it goes wrong, the consequences could be very significant. What I’ve learned from the ATD process is a regularized approach to analyzing a problem in a system and to making sure the system is fail-safe in its operation, to either concurrent maintainability or fault-tolerant standards at the Tier III or Tier IV level, respectively.

How does Digital deploy its European Product Development strategy?

What we tried to do through product development is offer choice to people. In terms of the buildings, we are trying to build a non-specific envelope that can adapt to multiple solutions and thereby give people the choice to elect to have those data center systems deployed as the base design or upgrade them to meet Tier requirements.

According to a recent report that I completed on product development, this approach has made it simpler or cheaper to bring a facility up to a Tier level. We don’t by default build everything to Tier III in every respect, although that’s changing now with DLR’s decision to gain a higher degree of certification. So far, we’ve pursued certification within the U.S. market as required and more frequently on our Asia-Pacific new-build sites.

I think that difference may be due to the maturity of each market. There are a lot of people building data centers who perhaps don’t have the depth of maturity in engineering that DLR has. So people are looking for the facilities they buy to be certified so they can be sure they are reliable. Perhaps in the U.S. and Europe, they might be more familiar with data centers; they can look at the design themselves and make that assessment.

And that’s why in the European arena, we want to offer not only a choice of system but also to improve higher load efficiencies. The aim is to offer chilled water and then outside air, direct or indirect, or Tier certified designs, all within the same building and all offered as a sort of off-the-shelf product from a catalog of designs.

It would seem that providing customers with the option to certify to Tier would be a lot easier in a facility where you have just one customer.

Yes, but we are used to having buildings with a lot of customers, and sometimes a number of customers in one data hall. Clearly, the first customer that goes into a space will often determine its infrastructure. When you have shared systems, you can’t go back beyond the specification set when the initial build was completed. It is sometimes complicated, but it is something we’re used to because we deal in multi-customer buildings.

What is the current state of Digital’s footprint in terms of size?

Within the U.K., we now have ten buildings in the London metro area, representing over 1.2 million ft² of space. This is now our largest metro region outside the U.S. We have a very strong presence south of central London near Gatwick Airport, which has increased with recently acquired stock. We have two facilities there: one we built for a sole client as a build-to-suit option, and a multi-tenant one. Then we have another multi-tenant building, probably in the region of 8 MW, in the southwest of London. To the north, in Manchester, we have another facility that is fully leased, probably in the neighborhood of 4-5 MW.

We’re actually under way with a new development for a client, a major cloud and hosting provider. We are looking to provide a 10-MW data center for them, and we’re going through the design and selection process for that project at the moment.

That’s the core of what we own within the U.K., but we also offer design and project management services. We assisted HSBC with two very, very secure facilities: one to the north of London, in the region of 4.5 MW with a 30,000-ft² raised-floor base, and another in the north of the U.K. with an ultimate capacity of 14.5 MW and a full 2N electrical system, approaching a Tier IV design but not actually certified.

The most recent of our projects finished in Europe has been the first phase of a building for Telefonica in Madrid. On this project we acted as consultants and design manager in a process to create a custom-designed Tier IV data center with outside-air cooling, done in conjunction with your team and Keith Klesner. I believe it is one of only nine Tier IV data centers in Europe.

Walk me through the Madrid project.

It’s the first of five planned phases in which we assisted in creating a total of seven data halls, along with a support office block. The data halls are approximately 7,500 ft² each. We actually advised on the design and fit-out of six of these data halls, and the design is based on an outside-air, direct-cooling system, which takes advantage of the very dry climate. Even though the dry-bulb temperatures are quite high, Madrid, being at high altitude, gives Telefonica the ability to get a good number of free-cooling hours within the year, driving down their PUE and running costs.
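As a rough illustration of how free-cooling hours might be estimated for a site like Madrid, the sketch below counts the hours in an hourly weather series when outside air alone could meet a supply-air target. The 27°C supply limit and 15°C dew-point ceiling are assumptions loosely based on the ASHRAE recommended envelope, and the function name and sample readings are hypothetical. Altitude and air-density effects are not modeled; a real study would use site weather files and the actual design conditions.

```python
from typing import Iterable, Tuple


def count_free_cooling_hours(
    hourly_readings: Iterable[Tuple[float, float]],
    max_supply_c: float = 27.0,     # assumed supply-air ceiling (deg C)
    max_dew_point_c: float = 15.0,  # assumed moisture ceiling (deg C dew point)
    approach_c: float = 0.0,        # assumed fan-heat / approach allowance
) -> int:
    """Count hours where direct outside air could meet the supply target.

    Each reading is (outdoor dry-bulb C, outdoor dew point C). Hypothetical,
    simplified model: an hour qualifies when dry bulb plus the approach stays
    at or below the supply ceiling and the air is dry enough.
    """
    return sum(
        1
        for dry_bulb, dew_point in hourly_readings
        if dry_bulb + approach_c <= max_supply_c and dew_point <= max_dew_point_c
    )


if __name__ == "__main__":
    # Toy readings for illustration only, not measured Madrid data.
    sample = [(12.0, 5.0), (18.5, 9.0), (26.0, 17.0), (29.0, 8.0), (24.0, 10.0)]
    print(count_free_cooling_hours(sample))                  # 3
    print(count_free_cooling_hours(sample, approach_c=4.0))  # 2
```

The dew-point test is what lets a dry climate count hours that a humid one at the same dry-bulb temperature would lose.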

Each of the data halls has been set up to run at four different power levels. The initial phase is at 1,200 kW per data hall, but the ultimate capacity is 4.8 MW per hall. All of it is supported by a fully concurrently maintainable and fault-tolerant, Tier IV-certified infrastructure. On the cooling side, the design was based on N+2 direct-air cooling units on the roof. Each unit is provided with a chilled-water circuit for cooling in recirculation mode when outside-air free cooling is not available. There are two independent chilled-water systems in physically separate support buildings, separated from the main data center building.
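The four power levels are consistent with equal 1,200 kW steps up to the 4.8 MW ultimate capacity. The interview does not say the steps are equal, so the sketch below simply assumes it; `power_levels` is a hypothetical helper, not a Digital Realty or Telefonica tool.

```python
from typing import List


def power_levels(initial_kw: float, ultimate_kw: float, steps: int) -> List[float]:
    """Evenly spaced capacity levels from the initial to the ultimate load.

    Assumes (hypothetically) that each upgrade adds the same increment.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    increment = (ultimate_kw - initial_kw) / (steps - 1)
    return [initial_kw + i * increment for i in range(steps)]


if __name__ == "__main__":
    print(power_levels(1200.0, 4800.0, 4))  # [1200.0, 2400.0, 3600.0, 4800.0]
```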

The electrical system is based on a full 2N+1 UPS system with transformerless UPSs to improve power efficiency and reduce losses within the infrastructure. These are based around the Schneider Galaxy 7000 UPS. The 2N+1 UPS systems and the mechanical cooling systems were each supported by a mains infrastructure at medium voltage, with a 2N on-site, full continuous-duty-rated backup generation system.
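A simplified way to reason about the N+2 cooling and 2N electrical arrangements is to check whether the remaining units can still carry the load when any k units are out of service. The sketch below, built around a hypothetical `survives_outages` helper, counts capacity only; it ignores distribution paths, isolation, controls and common-mode failures, so it is not how Uptime Institute assesses Tier compliance. The unit sizes in the usage lines are assumed for illustration.

```python
from itertools import combinations
from typing import Sequence


def survives_outages(unit_capacities_kw: Sequence[float], load_kw: float, outages: int) -> bool:
    """True if the load is still met with ANY `outages` units out of service.

    Capacity-only model: every combination of removed units is checked, and
    the remaining installed capacity must be at least the load.
    """
    if outages < 0 or outages > len(unit_capacities_kw):
        raise ValueError("outages must be between 0 and the number of units")
    total = sum(unit_capacities_kw)
    for removed in combinations(range(len(unit_capacities_kw)), outages):
        remaining = total - sum(unit_capacities_kw[i] for i in removed)
        if remaining < load_kw:
            return False
    return True


if __name__ == "__main__":
    cooling = [300.0] * 6  # hypothetical N+2: N = 4 units of 300 kW for 1,200 kW
    print(survives_outages(cooling, 1200.0, 2))  # True
    print(survives_outages(cooling, 1200.0, 3))  # False
    ups_2n = [1200.0, 1200.0]  # hypothetical 2N: two full-capacity systems
    print(survives_outages(ups_2n, 1200.0, 1))  # True
```

On these assumed numbers, N+2 cooling tolerates any two units out, for example one down for maintenance and one failed, but not three; modeling 2N as two independent full-capacity systems, the load survives the loss of either one.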

Is the PUE for a fully built-out facility or a partially loaded one?

Bear with me, because people will focus on that. Based on recorded data, the approximate annualized PUEs were 1.25 at 100% load, 1.3 at 75% and 1.35 at 50%; not surprisingly, as you drop down the curve, it rose to about 1.5 at 25%.
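Since PUE is total facility power divided by IT power, the quoted figures can be turned into an estimate of non-IT overhead at each load point. The sketch assumes, purely for illustration, a 4,800 kW design IT load (borrowing the per-hall ultimate figure) and applies the quoted PUEs to it; the helper names are hypothetical.

```python
# Approximate annualized PUE by IT load fraction, as quoted in the interview.
PUE_BY_LOAD = {1.00: 1.25, 0.75: 1.30, 0.50: 1.35, 0.25: 1.50}


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Non-IT power implied by a PUE: total = IT * PUE, so overhead = IT * (PUE - 1)."""
    return it_load_kw * (pue - 1.0)


def overhead_table(design_it_kw: float) -> dict:
    """Map each quoted load fraction to (IT kW, overhead kW), rounded to 0.1 kW."""
    return {
        fraction: (
            round(design_it_kw * fraction, 1),
            round(overhead_kw(design_it_kw * fraction, pue), 1),
        )
        for fraction, pue in PUE_BY_LOAD.items()
    }


if __name__ == "__main__":
    for fraction, (it_kw, extra_kw) in overhead_table(4800.0).items():
        print(f"{fraction:.0%}: IT {it_kw} kW, overhead {extra_kw} kW")
```

On those assumptions, IT load falls by three quarters from 100% to 25% while overhead only halves, from 1,200 kW to 600 kW, which is consistent with a substantial fixed component in cooling and electrical losses at low load.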

What do you foresee for future development?

In the last few years, there has been quite a significant change in how people look at the data center and how they are prepared to manage temperature parameters. Within the last 18 months, I would say, the desire to adopt ASHRAE’s 2011 operating parameters for servers has been fairly uniform. Across the business there has been quite a significant movement, brought to a head by a combination of many new cooling technologies. So now you have the ability to use direct outside air with full mechanical backup, or indirect outside air with evaporative cooling, in the right climates, of course.

I think there is also an extreme amount of effort going into various liquid-cooled server technologies. From my standpoint, we still see an awful lot of equipment that wants to be cooled by air because that is the easiest presentation of the equipment, so it may be a few years before liquid rules the day.

There’s been a lot of development mechanically, and I think we’re sort of pushing the limits of what we can achieve with our toolkit.

In the next phase of development, there have got to be ways to improve the efficiency of electrical systems, so I think there is going to be huge pressure on UPS technology to reduce losses, and on different types of voltage distribution, whether direct current or elevated ac voltages. All these ideas have been around before but may not have been fully exploited. The key thing is the potential: provided you are not operating in recirculation mode for a lot of the time, with direct outside air you’ve got PUEs approaching 1.2 and closing in on 1.15 in the right climate. At that level of PUE, the UPS and the electrical infrastructure can account for a significant part of the remaining PUE uplift, given the amount of waste that currently exists. So I think one of the issues going forward will be addressing efficiency on the electrical side of the equation.
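To put the remark about electrical losses in numbers, the sketch below estimates what fraction of the remaining PUE overhead (PUE minus 1) a UPS alone could account for, assuming all IT load passes through it and counting no other electrical losses. The 95% and 97% efficiencies are hypothetical values, not figures for the Galaxy 7000 or any other product, and the function names are illustrative.

```python
def ups_loss_per_it_kw(ups_efficiency: float) -> float:
    """kW lost in the UPS per kW delivered to IT (all IT load assumed to pass through it)."""
    if not 0.0 < ups_efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return 1.0 / ups_efficiency - 1.0


def ups_share_of_overhead(pue: float, ups_efficiency: float) -> float:
    """Fraction of the PUE overhead (PUE - 1) attributable to UPS losses."""
    if pue <= 1.0:
        raise ValueError("PUE must be greater than 1")
    return ups_loss_per_it_kw(ups_efficiency) / (pue - 1.0)


if __name__ == "__main__":
    for eff in (0.95, 0.97):  # hypothetical UPS efficiencies
        print(f"UPS at {eff:.0%}: {ups_share_of_overhead(1.15, eff):.0%} of overhead")
    # UPS at 95%: 35% of overhead
    # UPS at 97%: 21% of overhead
```

At a PUE of 1.15, the overhead is only 0.15 kW per kW of IT, so even a few percentage points of UPS loss become a large share of what remains.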

Kevin Heslin is senior editor at the Uptime Institute. He served as an editor at New York Construction News, Sutton Publishing, the IESNA, and BNP Media, where he founded Mission Critical, the leading publication dedicated to data center and backup power professionals. In addition, Heslin served as communications manager at the Lighting Research Center of Rensselaer Polytechnic Institute. He earned the B.A. in Journalism from Fordham University in 1981 and a B.S. in Technical Communications from Rensselaer Polytechnic Institute in 2000.
