A Surge of Innovation

Data center operators (and enterprise IT) are generally cautious adopters of new technologies. Only a few (beyond hyperscale operators) try to gain a competitive advantage through their early use of technology. Rather, they have a strong preference for technologies that are proven, reliable and well-supported. This reduces risks and costs, even if it means opportunities to jump ahead in efficiency, agility or functionality are missed.

But innovation does occur, and sometimes it comes in waves, perhaps triggered by the opportunity for a significant leap forward in efficiency, the sudden maturing of a technology, or some external catalyst. The threat of having to close critical data centers to move workloads to the public cloud may be one such driver; the need to operate a facility without staff during a weather event, or a pandemic crisis, may be another; the need to operate with far fewer carbon emissions may be yet another. Sometimes one new technology needs another to make it more economic.

The year 2021 may be one of those standouts in which a number of emerging technologies begin to gain traction. Among the technologies on the edge of wider adoption are:

- Storage-class memory – A long-awaited class of semiconductors with ramifications for server performance, storage strategies and power management.

- Silicon photonics – A way of connecting microchips that may revolutionize server and data center design.

- ARM servers – Low-powered compute engines that, after a decade of stuttering adoption, are now attracting attention.

- Software-defined power – A way to unleash and virtualize power assets in the data center.

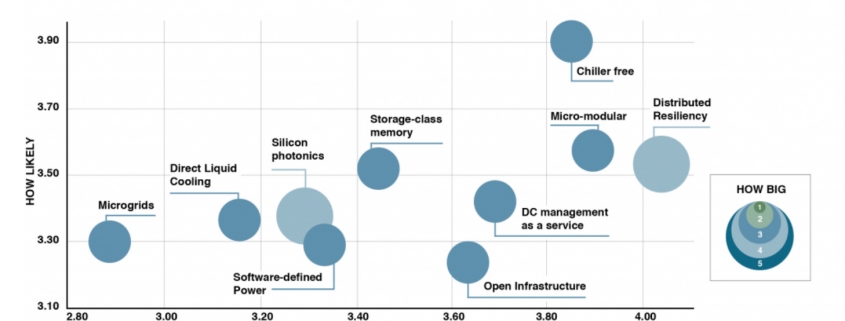

All of these technologies are complementary; all have been much discussed, sampled and tested for several years, but so far with limited adoption. Three of these four were identified as highly promising technologies in the Uptime Institute/451 Research Disrupted Data Center research project summarized in the report Disruptive Technologies in the Datacenter: 10 Technologies Driving a Wave of Change, published in 2017. As the disruption profile below shows, these technologies were clustered to the left of the timeline, meaning they were, at that time, not yet ready for widespread adoption.

Now the time may be coming, with hyperscale operators particularly interested in storage-class memory and silicon photonics. But small operators, too, are trying to solve new problems — to match the efficiency of their larger counterparts, and, in some cases, to deploy highly efficient, reliable, and powerful edge data centers.

Storage-class memory

Storage-class memory (SCM) is a generic label for emerging types of solid-state media that offer performance equal or similar to that of dynamic random access memory or static random access memory, but at lower cost and with far greater data capacities. By allowing servers to be fitted with larger memories, SCM promises to substantially boost processing speeds. SCM is also nonvolatile, or persistent: it retains data even if power to the device is lost, and it promises greater application availability by allowing far faster restarts of servers after reboots and crashes.

SCM can be used not just as memory, but also as an alternative to flash for high-speed data storage. For data center operators, the (widespread) use of SCM could reduce the need for redundant facility infrastructure, as well as promote higher-density server designs and more dynamic power management (software-defined power is discussed below).

However, the continuing efforts to develop commercially viable SCM have faced major technical challenges. Currently only one SCM exists with the potential to be used widely in servers. That memory was jointly developed by Intel and Micron Technology, and is now called Optane by Intel, and 3D XPoint by Micron. Since 2017, it has powered storage drives made by Intel that, although far faster than flash equivalents, have enjoyed limited sales because of their high cost. More promisingly, Intel last year launched the first memory modules powered by Optane.

Software suppliers such as Oracle and SAP are changing the architecture of their databases to maximize the benefits of SCM devices, and major cloud providers are offering services based on Optane used as memory. Meanwhile, a second generation of Optane/3D XPoint is expected to ship soon and, with lower prices, to be more widely used in storage drives.

Silicon photonics

Silicon photonics enables optical switching functions to be fabricated on silicon substrates. This means electronic and optical devices can be combined into a single connectivity/processing package, reducing transceiver/switching latency, costs, size and power consumption (by up to 40%). While this innovation has uses across the electronics world, data centers are expected to be the biggest market for the next decade.

In the data center, silicon photonics allows components (such as processors, memory, input/output [I/O]) that are traditionally packaged on one motherboard or within one server to be optically interconnected, and then spread across a data hall — or even far beyond. Effectively, it has the potential to turn a data center into one big computer, or for data centers to be built out in a less structured way, using software to interconnect disaggregated parts without loss of performance. The technology will support the development of more powerful supercomputers and may be used to support the creation of new local area networks at the edge. Networking switches using the technology can also save 40% on power and cooling (this adds up in large facilities, which can have up to 50,000 switches).
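To give a sense of how the switch savings might add up, the following is a rough back-of-the-envelope sketch. The 40% saving and the 50,000-switch count come from the text above; the 150 W per-switch draw is purely an illustrative assumption, not a measured or vendor figure, and the calculation covers switch power only, not the associated cooling.

```python
# Rough estimate of fleet-wide switch power savings.
SWITCH_COUNT = 50_000        # upper bound quoted in the text
SAVING_FRACTION = 0.40       # saving quoted in the text
WATTS_PER_SWITCH = 150.0     # ASSUMPTION: illustrative per-switch draw
HOURS_PER_YEAR = 8_760


def switch_power_saving(count, watts_each, saving_fraction):
    """Return (baseline kW, saved kW, saved MWh per year) for a switch fleet."""
    baseline_kw = count * watts_each / 1_000
    saved_kw = baseline_kw * saving_fraction
    saved_mwh_per_year = saved_kw * HOURS_PER_YEAR / 1_000
    return baseline_kw, saved_kw, saved_mwh_per_year


baseline_kw, saved_kw, saved_mwh = switch_power_saving(
    SWITCH_COUNT, WATTS_PER_SWITCH, SAVING_FRACTION
)
print(f"Baseline switch load: {baseline_kw:,.0f} kW")
print(f"Load avoided:         {saved_kw:,.0f} kW")
print(f"Energy avoided:       {saved_mwh:,.0f} MWh per year")
```

Under the assumed per-switch draw, the avoided load is on the order of 3 MW of continuous power. A different per-switch figure scales the result linearly.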

Acquisitions by Intel (Barefoot Networks), Cisco (Luxtera, Acacia Communications) and Nvidia (Mellanox Technologies) signal a much closer integration between network switching and processors in the future. Hyperscale data center operators are the initial target market because the technology can combine with other innovations (as well as with Open Compute Project rack and networking designs). As a result, we expect to see the construction of flexible, large-scale networks of devices in a more horizontal, disaggregated way.

ARM servers

The Intel x86 processor family is one of the building blocks of the internet age, of data centers and of cloud computing. Whether provided by Intel or a competitor such as Advanced Micro Devices, almost every server in every data center is built around this processor architecture. With its powerful (and power-hungry) cores, its use defines the motherboard and the server design and is the foundation of the software stack. Its use dictates technical standards, how workloads are processed and allocated, and how data centers are designed, powered and organized.

This hegemony may be about to break down. Servers based on the ARM processor design, which is used in billions of mobile phones and other devices (and soon, in Apple MacBooks), are now being used by Amazon Web Services (AWS) in its proprietary designs. Commercially available ARM systems offer dramatic price, performance and energy consumption improvements over current Intel x86 designs. When Nvidia announced its (proposed) $40 billion acquisition of ARM in September 2020, it identified the data center market as its main opportunity. The server market is currently worth $67 billion a year, according to market research company IDC (International Data Corporation).

Skeptics may point out that there have been many servers developed and offered using alternative, low-power and smaller processors, but none has been widely adopted to date. Hewlett Packard Enterprise’s Moonshot server system, initially launched using low-powered Intel Atom processors, is the best known but, due to a variety of factors, market adoption has been low.

Will that change? The commitment to use ARM chips by Apple (currently for MacBooks) and AWS (for cloud servers) will make a big difference, as will the fact that even the world’s most powerful supercomputer (as of mid-2020) uses an ARM-based Fujitsu microprocessor. But innovation may make the biggest difference. The UK-based company Bamboo Systems, for example, designed its system to support ARM servers from the ground up, with extra memory, connectivity and I/O processors at each core. It claims to save around 60% of the costs, 60% of the energy and 40% of the space when compared with a Dell x86 server configured for the same workload.

Software-defined power

In spite of its intuitive appeal and the apparent importance of the problems it addresses, the technology that has come to be known as “software-defined power” has to date received little uptake among operators. Software-defined power, also known as “smart energy,” is not one system or single technology but a broad umbrella term for technologies and systems that can be used to intelligently manage and allocate power and energy in the data center.

Software-defined power systems promise greater efficiency and better use of capacity, more granular and dynamic control of power availability and redundancy, and greater real-time management of resource use. In some instances, they may reduce the amount of power that needs to be provisioned, and they may allow some stored energy to be sold back to the grid, safely and easily.

Software-defined power adopts some of the architectural designs and goals of software-defined networks, in that it virtualizes power switches as if they were network switches. The technology has three components: energy storage, usually lithium-ion (Li-ion) batteries; intelligently managed power switches or breakers; and, most importantly, management software that has been designed to automatically reconfigure and allocate power according to policies and conditions. (For a more detailed description, see our report Smart energy in the data center.)
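As a minimal sketch of what policy-driven allocation could look like, the example below grants power to racks in order of a resiliency tier until the available capacity (utility plus battery) is used up. It is an illustration only, not any vendor's implementation: the `Rack` class, the `allocate_power` function, the tier numbering and all of the figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Rack:
    name: str
    demand_kw: float
    tier: int  # hypothetical resiliency tier: 1 = most critical


def allocate_power(racks, utility_kw, battery_kw):
    """Grant power in tier order; lower-priority racks are shed first."""
    remaining = utility_kw + battery_kw
    grants = {}
    for rack in sorted(racks, key=lambda r: r.tier):
        granted = min(rack.demand_kw, remaining)
        grants[rack.name] = granted  # a managed breaker would enforce this cap
        remaining -= granted
    return grants


# Illustrative workloads and capacities (all values are assumptions).
racks = [
    Rack("batch-analytics", 40.0, tier=3),
    Rack("payments-db", 30.0, tier=1),
    Rack("web-frontend", 50.0, tier=2),
]

normal = allocate_power(racks, utility_kw=120.0, battery_kw=0.0)
degraded = allocate_power(racks, utility_kw=0.0, battery_kw=60.0)

print("Normal:  ", normal)
print("Degraded:", degraded)
```

With full utility supply every rack receives its demand. When only the battery is available, the most critical rack is served in full, the next tier is capped, and the lowest tier is shed. A real system would also have to account for battery state of charge, breaker limits and ramp times, which this sketch ignores.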

Software-defined power has taken a long time to break into the mainstream, and even 2021 is unlikely to be the breakthrough year. But a few factors are swinging in its favor. These include the widespread adoption of Li-ion batteries for uninterruptible power supplies, an important precondition; growing interest from the largest operators and the biggest suppliers (which have so far assessed the technology, but viewed the market as unready); and, perhaps most importantly, an increasing understanding by application owners that they need to assess and categorize their workloads and services for differing resiliency levels. Once they have done that, software-defined power (and related smart energy technologies) will enable power availability to be applied more dynamically to the applications that need it, when they need it.

The full report, Five data center trends for 2021, is available to members of the Uptime Institute community.

Getty

UI @2021
