Artificial Intelligence in the Data Center: Myth versus Reality

It is still very early days, but it is clear that artificial intelligence (AI) will eventually transform the way data centers are designed, managed and operated. There has been a lot of misrepresentation and hype around AI, and it is not always clear how it will be applied, or when. The Uptime Institute view is that AI will be rolled out slowly, with conservative and limited use cases now and for the next few years, but that its impact will grow.

There have been some standout applications to date, for example predictive maintenance and peer benchmarking, and we expect there will be more as suppliers and large companies apply AI to analyze a wider range of relationships and patterns among a vast number of variables, including resource use, environmental impacts, resiliency and equipment configurations.

Today, however, AI is mostly being used in data centers to improve existing functions and processes. Use cases are focused on delivering tangible operational savings, such as cooling efficiency and alarm suppression/rationalization, as well as predicting known risks with greater accuracy than other technologies can offer.

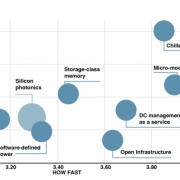

AI is currently being applied to perform existing, well-understood and well-defined functions and processes faster and more accurately. In other words, not much is new, just better. The table above is taken from the new Uptime Intelligence report “Very smart data centers: How artificial intelligence will power operational decisions” (available to Uptime Institute Network Members) and shows AI functions and services that are being offered or are in development. With a few exceptions, they are likely to be familiar to data center managers, particularly those who have already deployed data center infrastructure management (DCIM) software.

So where might AI be applied beyond these examples? We think it likely that AI will be used to anticipate failure rates, as well as to model costs, budgetary impacts, supply-chain needs and the impact of design changes and configurations. Data centers not yet built could be modeled and simulated in advance, for example, to compare the operational and/or performance profile and total cost of ownership of a Tier II design with those of a Tier III design.

Meanwhile, we can expect more marketing hype and misinformation, fueled by a combination of AI’s dazzling complexity, which only specialists can deeply understand, and its novelty in most data centers. For example:

Myth #1: There is a best type of AI for data centers. The best type of AI will depend on the specific task at hand. Simpler big-data approaches (i.e., not AI) can be more suitable in certain situations. For this reason, new “AI-driven” products such as data center management as a service (DMaaS) often use a mix of AI and non-AI techniques.

Myth #2: AI replaces the need for human knowledge. Domain expertise is critical to the usefulness of any big-data approach, including AI. Human data center knowledge is needed to train AI to make reasonable decisions/recommendations and, especially in the early stages of a deployment, to ensure that any AI outcome is appropriate for a particular data center.

Myth #3: Data centers need a lot of data to implement AI. While this is true for those developing AI, it is not the case for those looking to buy the technology. DMaaS and some DCIM systems use pre-built AI models that can provide limited but potentially useful insights within days.

The advent of DMaaS, which was first commercialized in 2016, is likely to drive widespread adoption of AI in data centers. With DMaaS, large sets of monitored data about equipment and operational environments from different facilities (and different customers) are encrypted, pooled in data lakes, and analyzed using AI, anomaly detection, event-stream playback and other approaches.
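The article does not say how any DMaaS supplier performs anomaly detection, so the following is only a minimal sketch of one simple statistical (non-AI) technique of the kind Myth #1 alludes to: a modified z-score based on the median absolute deviation (MAD), applied to a single stream of readings. The function name `flag_anomalies`, the inlet-temperature values and the 3.5 threshold are illustrative assumptions, not taken from the source or from any product.

```python
from statistics import median

def flag_anomalies(readings, threshold=3.5):
    """Return indices of readings that look anomalous (hypothetical helper).

    Uses the modified z-score 0.6745 * |x - median| / MAD, where MAD is the
    median absolute deviation. Readings scoring above `threshold` are flagged.
    """
    if not readings:
        return []
    med = median(readings)
    mad = median(abs(x - med) for x in readings)
    if mad == 0:
        # No spread around the median (e.g., mostly identical readings);
        # this simple method cannot score outliers in that case.
        return []
    return [
        i for i, x in enumerate(readings)
        if 0.6745 * abs(x - med) / mad > threshold
    ]

# Hypothetical rack inlet temperatures (deg C) from one monitored facility.
inlet_temps = [22.1, 22.3, 22.0, 22.4, 22.2, 29.8, 22.1, 22.3]
print(flag_anomalies(inlet_temps))  # [5]
```

The median and MAD are used instead of the mean and standard deviation because a single large outlier inflates the standard deviation and can mask itself. A real DMaaS platform pooling data across many facilities would likely combine far more signals and more sophisticated models.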

Several suppliers now offer DMaaS, a service that parallels the practice of large data center operators who use internal data from across their portfolios to inform decision-making and optimize operations. DCIM suppliers are also beginning to embed AI functions into their software.

Data center AI is here today and is available to almost any facility. The technology has already moved beyond hyperscale facilities and will move beyond known processes and functions, but probably not for another two or three years.

——————————————————————————–

For more information on AI and how it is already being applied in the data center, along with a wealth of other research, consider becoming part of the Uptime Institute Network community. Members of this owner-operator community enjoy a continuous stream of relevant and actionable knowledge from our analysts and share a wealth of experiences with their peers from some of the largest companies in the world. Membership instills an awareness of operational efficiency and best practices that can be put into action every day.
