Direct liquid cooling bubbles to the surface

Conditions will soon be ripe for widespread use of direct liquid cooling (DLC), a collection of techniques that use fluid rather than air to remove heat from IT electronics, and DLC may even become essential.

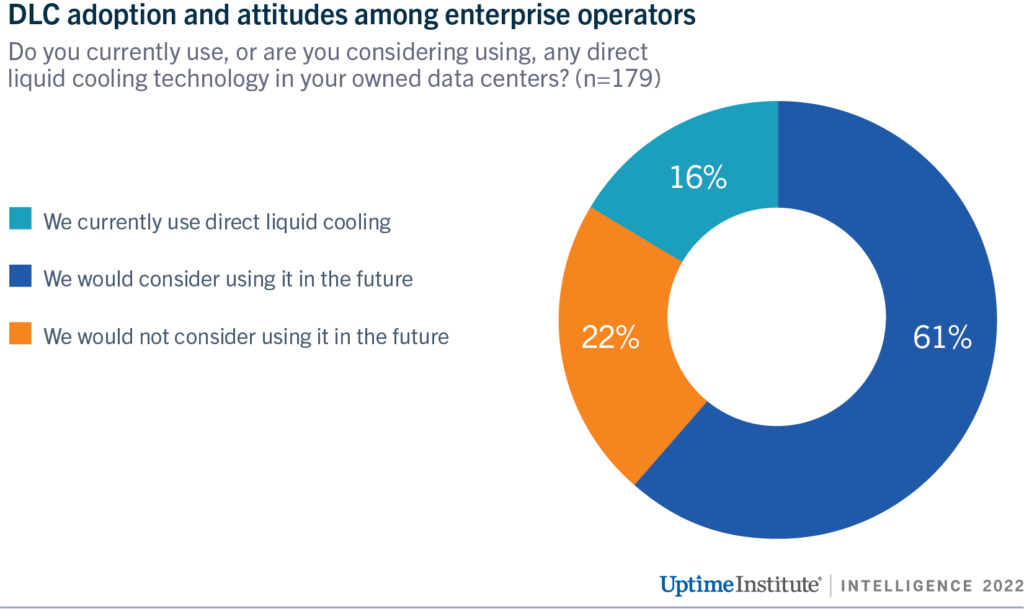

Air cooling remains dominant, and DLC is still a niche option: around 85% of enterprise IT operations do not use it at all, according to Uptime Institute Intelligence's recent Direct Liquid Cooling Survey of nearly 200 enterprises. About 7% of respondents report limited use of DLC, such as in mainframes or a few racks, leaving only 9% that use DLC more substantially (percentages are rounded).

Despite the current low uptake, Uptime Intelligence expects sentiment to shift markedly in favor of DLC in the coming years. In our survey, nearly two-thirds of enterprise IT users said they would consider DLC as a future option (see Figure 1).

Why would operators consider such a major change beyond select use cases? A key factor is likely silicon power density: the heat flux and temperature limits of some next-generation server processors and accelerators coming to market in 2022 and 2023 will push air cooling to its limits. Processors with thermal ratings of 300 watts and above, some with steeply lowered temperature limits, are on the short-term roadmap. If operators are to keep up with future advances in silicon performance while also meeting cost and sustainability goals, air cooling alone could become inadequate surprisingly soon.

Industry body ASHRAE has already signaled the problem by adding a new class, H1, for high-density IT equipment in the most recent update to its data center thermal guidelines. (See New ASHRAE guidelines challenge efficiency drive.) Class H1 equipment, which is designated as such by the server maker, requires inlet temperatures under 22°C (71.6°F), well below the recommended upper limit of 27°C (80.6°F) for general IT equipment. Many modern data center designs operate above 22°C (71.6°F) to reduce cooling energy and water consumption by minimizing the use of compressors and evaporation. Lowering temperatures to accommodate class H1 equipment, which may not be possible without a major overhaul of cooling systems, would frustrate these objectives.
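To make the conflict concrete, here is a minimal Python sketch that checks a given inlet (supply air) temperature against the two thresholds quoted above. It is a simplification under stated assumptions: it treats 22°C as an exclusive upper bound for class H1 (following the "under 22°C" wording) and 27°C as an inclusive recommended upper limit for general IT, and it ignores lower bounds, humidity and ASHRAE's wider allowable ranges. The function and constant names are hypothetical, chosen for illustration only.

```python
# Thresholds as quoted in the text above; a simplification, not the full
# ASHRAE envelope (no lower bounds, humidity or allowable ranges).
H1_MAX_INLET_C = 22.0             # class H1: inlet must be under this value
GENERAL_RECOMMENDED_MAX_C = 27.0  # recommended upper limit for general IT


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def check_inlet(inlet_c: float) -> dict:
    """Report whether an inlet temperature suits general IT and class H1."""
    return {
        "inlet_f": round(c_to_f(inlet_c), 1),
        "ok_for_general": inlet_c <= GENERAL_RECOMMENDED_MAX_C,
        "ok_for_h1": inlet_c < H1_MAX_INLET_C,
    }


if __name__ == "__main__":
    # Compare a cool, a moderate and a warm supply temperature.
    for temp_c in (20.0, 24.0, 27.0):
        print(temp_c, check_inlet(temp_c))
```

A facility supplying air at 24°C, for example, stays within the general recommended limit but is too warm for H1 equipment, which is the tension between efficiency and high-density silicon described above.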

The data center industry broadly considers DLC adoption a question not of if, but of when and how much. Most enterprises in our survey expect at least 10% of their cabinets to use DLC within five years. An earlier Uptime Institute survey supports this finding: for data centers with an IT load of 1 megawatt or greater, the industry consensus is that mass adoption of DLC is around seven years away. Only a few respondents think that air cooling will remain dominant beyond 10 years. (See Does the spread of direct liquid cooling make PUE less relevant?)

Today, no single DLC approach ticks every box without compromise. Fortunately for data center operators, DLC products have developed rapidly since the second half of the 2010s, and vendors now offer a wide range of choices with very different trade-offs. Our survey confirms that cold plates using water chemistries (for example, purified water, deionized water or a glycol mix) are the most prevalent category. However, survey participants still have significant concerns about leaks, which can curb an operator's appetite for this type of DLC in large-scale installations.

Other options include:

- Cold plates that circulate a dielectric fluid (either single-phase or two-phase) to reduce the risks associated with a leak.

- Chassis-based immersion systems that are self-contained (single-phase).

- Pool immersion tanks (also either single-phase or two-phase).

Nonetheless, major hurdles remain. There are some standardization efforts in the industry, notably from the Open Compute Project Foundation, but these have yet to bear fruit in the form of products. The lack of standards can make DLC difficult and frustrating to implement in an operator's own data center, let alone in a colocation facility; for example, different DLC systems use different, often incompatible, coolant distribution units. Another challenge is the traditional division between IT and facilities teams. In a diverse, large-scale environment, DLC requires close collaboration between the two teams (including their respective equipment vendors) to resolve mechanical and material compatibility issues, both of which are still common.

Despite the challenges, Uptime Institute maintains a positive view of DLC. As the demands on cooling systems grow with every generation of silicon, operators will find the advantages of DLC harder to ignore. We will explore various DLC options and their trade-offs in a subsequent Uptime Intelligence report (due to be published later in 2022).