Server efficiency increases again — but so do the caveats

Early in 2022, Uptime Intelligence observed that the return of Moore’s law in the data center (or, more accurately, the performance and energy efficiency gains associated with it) would come with major caveats (see Moore’s law resumes — but not for all). Next-generation server technologies’ potential to improve energy efficiency, Uptime Intelligence surmised at the time, would be unlocked by more advanced enterprise IT teams and at-scale operators that can concentrate workloads for high utilization and make use of new hardware features.

Conversely, when servers built around the latest Intel and AMD chips do not have enough work, energy performance can deteriorate due to higher levels of idle server power. Performance data published since the recent launch of new server processors confirms this, which suggests that the traditional assumptions about server refresh cycles need a rethink.

Server efficiency back on track

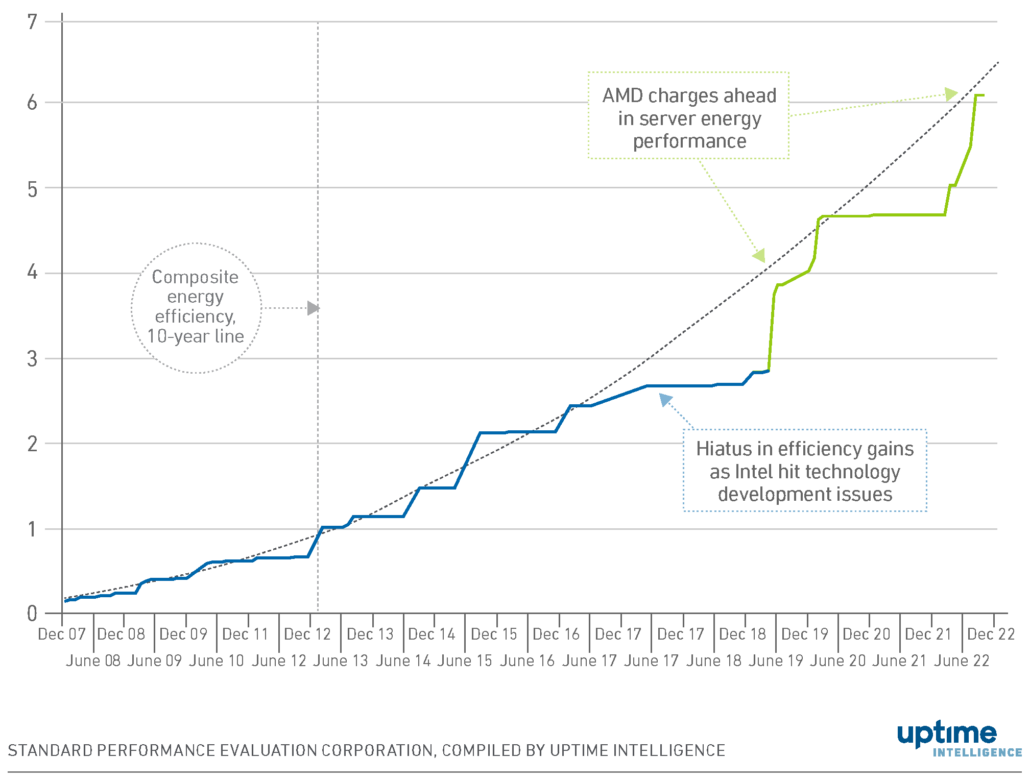

First, the good news: new processor benchmarks confirm that best-in-class server energy efficiency is back in line with historical trends, following a long hiatus in the late 2010s.

The latest server chips from AMD (codenamed Genoa) deliver a major jump in core density (they integrate up to 192 cores in a standard dual-socket system) and in energy efficiency potential. This is largely due to a step change in manufacturing technology by contract chipmaker Taiwan Semiconductor Manufacturing Company (TSMC). Compared with server technology from four to five years ago, this new crop of chips offers four times the performance at more than twice the energy efficiency, as measured by the Standard Performance Evaluation Corporation (SPEC). The SPEC Power benchmark simulates Java-based transaction processing logic to exercise processors and the memory subsystem, giving an indication of compute performance and efficiency characteristics. Over the past 10 years, mainstream server technology has become six times more energy efficient based on this metric (Figure 1).
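The figures in this paragraph carry two implications that a short calculation makes explicit. The sketch below is illustrative only: it treats "four times the performance", "twice the energy efficiency" and "six times in 10 years" as exact round numbers, and the function names are hypothetical.

```python
import math


def implied_power_ratio(perf_ratio: float, efficiency_ratio: float) -> float:
    """Efficiency is work per unit of power, so power scales as performance / efficiency."""
    return perf_ratio / efficiency_ratio


def average_doubling_time(total_gain: float, years: float) -> float:
    """Years per doubling if a total gain accrued at a steady compound rate."""
    return years / math.log2(total_gain)


# Assumption: the article's round figures are taken at face value.
print(implied_power_ratio(4.0, 2.0))               # power at full load vs. the older servers
print(round(average_doubling_time(6.0, 10.0), 1))  # years per efficiency doubling
```

Under these assumptions, a fully loaded new server draws up to about twice the power of its predecessor (less if efficiency has more than doubled), and the ten-year efficiency gain averages out to one doubling roughly every four years.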

This brings server energy performance back onto its original track before it was derailed by Intel’s protracted struggle to develop its then next-generation manufacturing technology. Although Intel continues to dominate server processor shipments due to its high manufacturing capacity, it is still fighting to recover from the crises it created nearly a decade ago (see Data center efficiency stalls as Intel struggles to deliver).

The bad news is, likewise, that server efficiency is once again following historical trends. Although the jump in performance with the latest generation of AMD server processors is sizeable, the long-term slowdown in performance improvements continues. The latest doubling of server performance took about five years. In the first half of the 2010s a doubling took about two years, and between 2000 and 2010 it took far less than two years. Advances in chip manufacturing have slowed for both technical and economic reasons: the science and engineering challenges behind semiconductor manufacturing are extremely difficult and the costs are huge.
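To compare these doubling times on a common scale, they can be converted into equivalent compound annual rates. This is a minimal sketch that assumes steady compound growth within each period, which is a simplification, and the function name is hypothetical.

```python
def annual_rate_from_doubling(years_to_double: float) -> float:
    """Compound annual growth rate that doubles a quantity in the given number of years."""
    if years_to_double <= 0:
        raise ValueError("years_to_double must be positive")
    return 2.0 ** (1.0 / years_to_double) - 1.0


# Doubling times quoted in the text; steady compounding is an assumption.
for label, years in [("first half of the 2010s", 2.0), ("latest doubling", 5.0)]:
    print(f"{label}: {annual_rate_from_doubling(years):.1%} per year")
```

On this basis, a two-year doubling corresponds to roughly 41% improvement per year, while a five-year doubling corresponds to roughly 15% per year.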

An even bigger reason for the drop in development pace, however, is architectural: diminishing returns from design innovation. In the past, the addition of more cores, on-chip integration of memory controllers and peripheral device controllers all radically improved chip performance and overall system efficiency. Then server engineers increased their focus on energy performance, resulting in more efficient power supplies and other power electronics, as well as energy-optimized cooling via variable speed fans and better airflow. These major changes have reduced much of the server energy waste.

One big-ticket item in server efficiency remains: tackling the performance and energy problems of memory (DRAM). Current memory chip technologies don’t score well on either metric: DRAM latency worsens with every generation, while energy efficiency (per bit) barely improves.

Server technology development will continue apace. Competition between Intel and AMD is energizing the market as they vie for the business of large IT buyers that are typically attracted to the economics of performant servers carrying ever larger software payloads. Energy performance is a definitive component of this. However, more intense competition is unlikely to overcome the technical boundaries highlighted above. While generic efficiency gains (lacking software-level optimization) from future server platforms will continue, the average pace of improvement is likely to slow further.

Based on the long-term trajectory, the next doubling in server performance will take five to six years, boosted by more processor cores per server, but partially offset by the growing consumption of memory power. Failing a technological breakthrough in semiconductor manufacturing, the past rates of server energy performance gains will not return in the foreseeable future.

Strong efficiency for heavy workloads

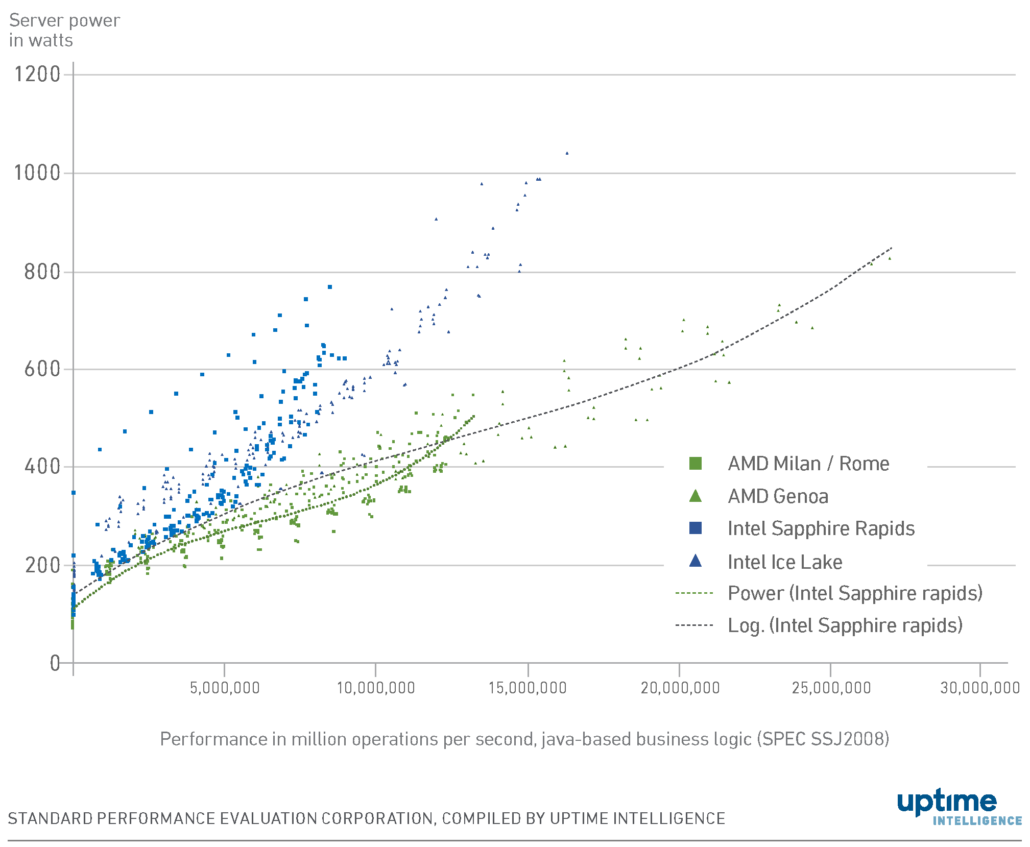

As well as the slowdown in overall efficiency gains, the profile of energy performance improvements has shifted from benefiting virtually all use cases towards favoring, sometimes exclusively, larger workloads. This trend began several years ago, but AMD’s latest generation of products offers a dramatic demonstration of its effect.

Based on the SPEC Power database, new processors from AMD offer vast processing capacity headroom (more than 65%) compared with previous generations (AMD’s 2019 and 2021 generations codenamed, respectively, Rome and Milan). However, these new processors use considerably more server power, which mutes overall energy performance benefits (Figure 2). The most significant opportunities offered by AMD’s Genoa processor are for aggressive workload consolidation (footprint compression) and running scale-out workloads such as large database engines, analytics systems and high-performance computing (HPC) applications.

If the extra performance potential is not used, however, there is little reason to upgrade to the latest technology. SPEC Power data indicates that the energy performance of previous generations of AMD-based servers is often as good — or better — when running relatively small workloads (for example, where the application’s scaling is limited, or further consolidation is not technically practical or economical).

Figure 2 also demonstrates the size of the challenge faced by Intel: at any performance level, the current (Sapphire Rapids) and previous (Ice Lake) generations of Intel-based systems use more power — much more when highly loaded. The exception to this rule, not captured by SPEC Power, is when the performance-critical code paths of specific software are heavily optimized for an Intel platform, such as select technical, supercomputing and AI (deep neural network) applications that often take advantage of hardware acceleration features. In these specific cases, Intel’s latest products can often close the gap or even exceed the energy performance of AMD’s processors.

Servers that age well

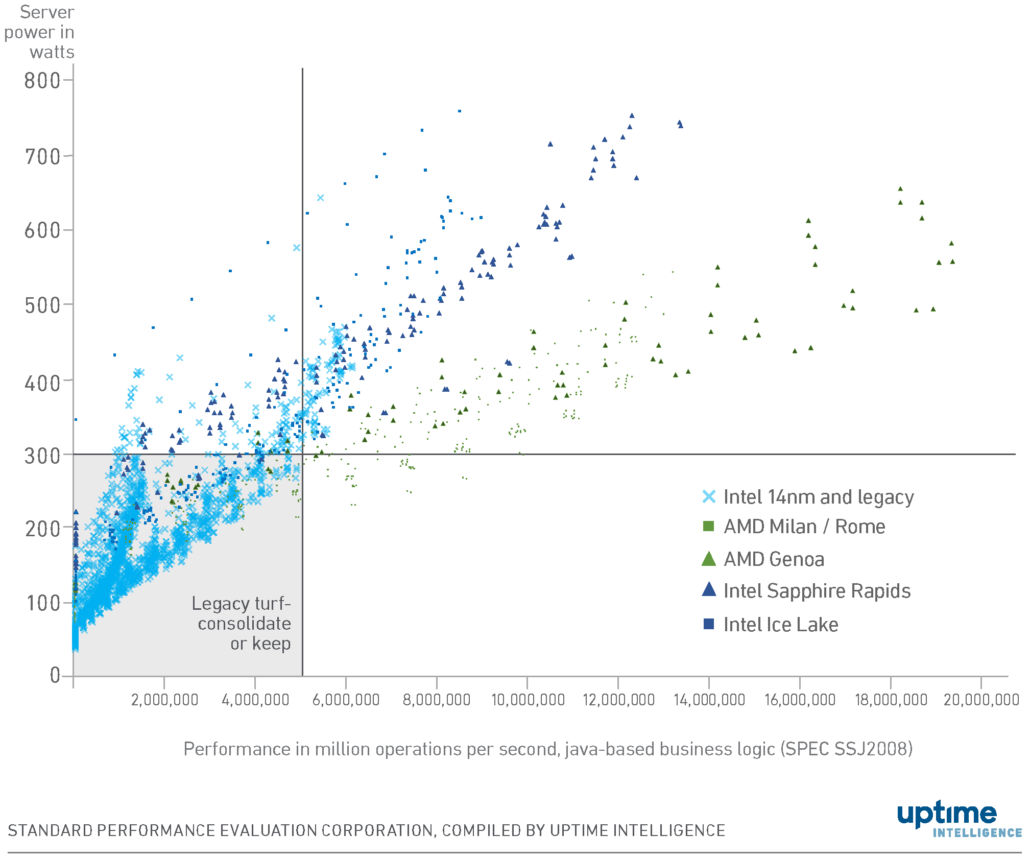

The fact that the latest generation of processors from Intel and AMD is not more efficient than the previous generation is the lesser problem. The greater problem is that server technology platforms released in the past two years do not offer automatic efficiency improvements over the enormous installed base of legacy servers, which are often more than five or six years old.

Figure 3 highlights the area of the performance and power relationship in which, without major workload consolidation (2:1 or higher), the business case for upgrading old servers (see blue crosses) remains dubious. This area is not fixed and will vary by application, but it approximately equals the real-world performance and power envelope of most Intel-based servers released before 2020. Typically, only the best performing, highly utilized legacy servers will warrant an upgrade without further consolidation.

For many organizations, the processing demand in their applications is not rising fast enough to benefit from the performance and energy efficiency improvements the newer servers offer. There are many applications that, by today’s standards, only lightly exercise server resources, and that are not fully optimized for multi-core systems. Average utilization levels are often low (between 10% and 20%), because many servers are sized for expected peak demand, but spend most of their time in idle or reduced performance states.

Counterintuitively, perhaps, servers built with Intel’s previous processor generations in the second half of the 2010s (largely since 2017, using Intel’s 14-nanometer technology) tend to show better power economy when used lightly than their present-day counterparts. For many applications this means that, although these older servers use more power when worked hard, they can conserve even more in periods of low demand.
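The interplay between idle power, utilization and consolidation can be illustrated with a simple model. The sketch below assumes that server power rises linearly from idle to peak with load, which real servers only approximate, and every number in it is hypothetical rather than taken from SPEC Power results. The `Server` class and `fleet_power` helper are likewise illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    name: str
    capacity: float    # peak throughput in arbitrary work units
    idle_watts: float  # power draw with no load
    peak_watts: float  # power draw at full load

    def power(self, load: float) -> float:
        """Power draw at a given load, assuming a linear idle-to-peak curve."""
        if not 0.0 <= load <= self.capacity:
            raise ValueError("load must be between 0 and capacity")
        utilization = load / self.capacity
        return self.idle_watts + (self.peak_watts - self.idle_watts) * utilization


def fleet_power(server: Server, total_load: float, count: int) -> float:
    """Total power of `count` identical servers sharing `total_load` evenly."""
    return count * server.power(total_load / count)


# Hypothetical servers: the newer one has four times the capacity but higher idle power.
legacy = Server("legacy", capacity=100.0, idle_watts=60.0, peak_watts=400.0)
modern = Server("modern", capacity=400.0, idle_watts=150.0, peak_watts=800.0)

# Four legacy servers at 15% utilization each.
total_load = 4 * 0.15 * legacy.capacity

print("legacy x4:", fleet_power(legacy, total_load, 4))
for count in (4, 2, 1):  # one-for-one swap, 2:1 and 4:1 consolidation
    print(f"modern x{count}:", fleet_power(modern, total_load, count))
```

With these made-up figures, a one-for-one swap raises total power from about 444 W to about 698 W, a 2:1 consolidation roughly breaks even at about 398 W, and only a 4:1 consolidation yields a clear saving at about 248 W. The exact crossover depends entirely on the assumed idle and peak values.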

Several factors may undermine the business case for the consolidation of workloads onto fewer, higher performance systems: these include the costs and risks of migration, threats to infrastructure resiliency, and incompatibility (if the software stack is not tested or certified for the new platform). The latest server technologies may offer major efficiency gains to at-scale IT services providers, AI developers and HPC shops, but for enterprises the benefits will be fewer and harder to achieve.

The regulatory drive for data center sustainability will likely direct more attention to IT’s role soon, the lead example being the EU’s proposed recast of the Energy Efficiency Directive. Regulators, consultants and IT infrastructure owners will want a number of proxy metrics for IT energy performance, and the age of a server is an intuitive choice. The data strongly indicates that this form of simplistic ageism is misplaced.
