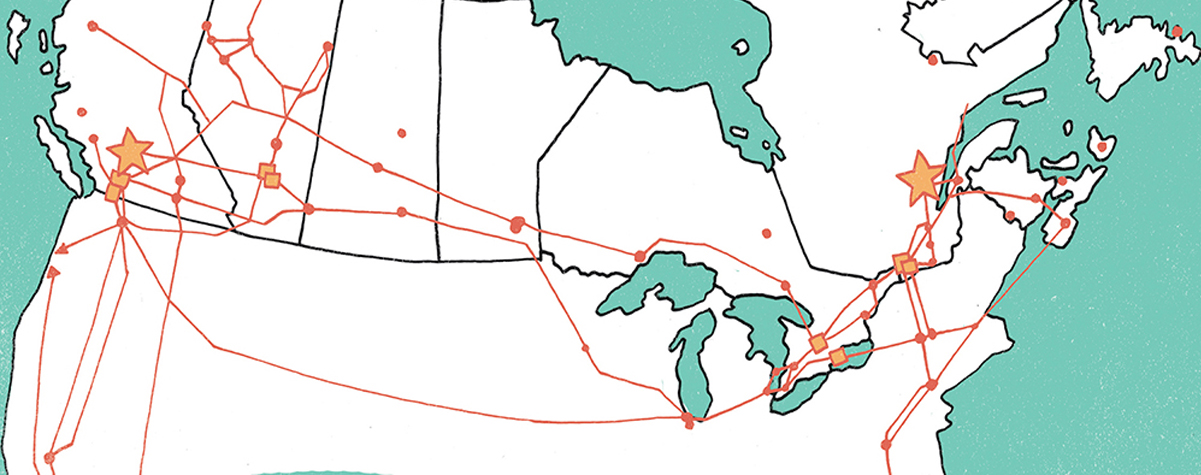

TELUS SIDCs provide support to widespread Canadian network

SIDCs showcase best-in-class innovation and efficiency

By Pete Hegarty

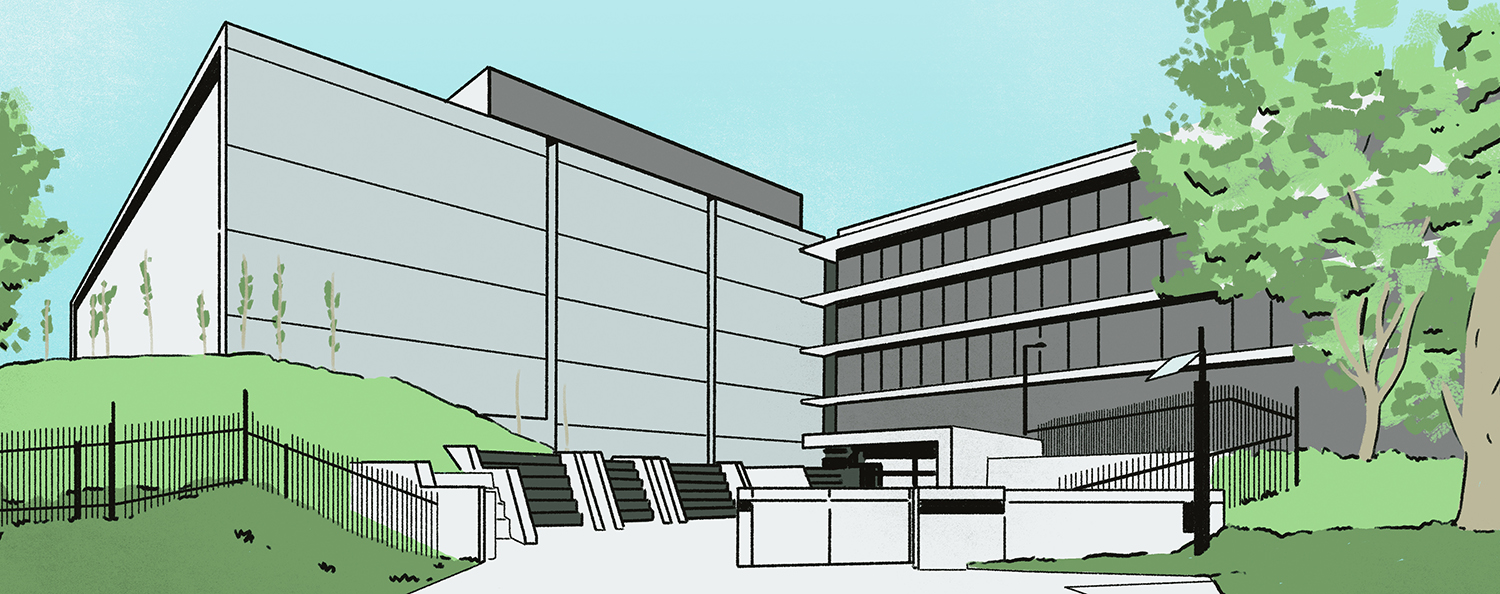

TELUS built two Super Internet Data Center (SIDC) facilities as part of an initiative to help its customers reap the benefits of flexible, reliable, and secure IT and communication solutions. These SIDCs, located in Rimouski, Quebec, and Kamloops, British Columbia, contain the hardware required to support the operations and diverse database applications of TELUS’ business customers, while simultaneously housing and supporting the company’s telecommunications network facilities and services (see Figures 1 and 2). Both facilities required sophisticated and redundant power, cooling, and security systems to meet the organization’s environmental and sustainability goals as well as effectively support and protect customers’ valuable data. A cross-functional project team, led by Lloyd Switzer, senior vice-president of Network Transformation, implemented a cutting-edge design solution that makes both SIDCs among the greenest and most energy-efficient data centers in North America.

TELUS achieved its energy-efficiency goals through a state-of-the-art mechanical design that significantly improves overall operating efficiency. The modular design supports Concurrent Maintainability, a contractually guaranteed PUE of 1.15, and the addition of subsequent phases with no disruption to existing operations.

Both SIDCs allow TELUS to provide its customers with next-generation cloud computing and unified communication solutions. To achieve this, teams across the company came together with the industry’s best external partners to collaborate and drive innovation into every aspect of the architecture, design, build, and operations.

Best-in-Class Facilities

The first phase of a seven-phase SIDC plan was completed and commissioned in the fall of 2012. The average cabinet density is 14 kilowatts (kW), and each rack can support up to 20 kW. The multi-phase, inherently modular approach was planned so that subsequent phases can be constructed and added with no disruption to existing operations. Concurrent Maintainability of all major electrical and mechanical systems is achieved through a combination of adequate component redundancy and appropriate system isolation.

Both SIDCs have been constructed to LEED environmental standards and are 80% more efficient than traditional data center facilities.

Other expected results of the project include:

• Water savings: 17,000,000 gallons per year

• Energy savings: 10,643,000 kilowatt-hours (kWh) per year

• Carbon savings: 329 tons per year

The SIDCs are exceptional in many other ways. They both:

• Use technology that minimizes water consumption, resulting in a Water Usage Effectiveness (WUE) of 0.23 liters per kWh (l/kWh), approximately one-quarter that of a traditional data center.

• Provide protected power by diesel rotary UPS (DRUPS), which eliminates the need for large numbers of lead-acid batteries, hazardous components that require regular replacement and safe disposal. The use of DRUPS also avoids the need for large rooms to house UPS modules and their batteries.

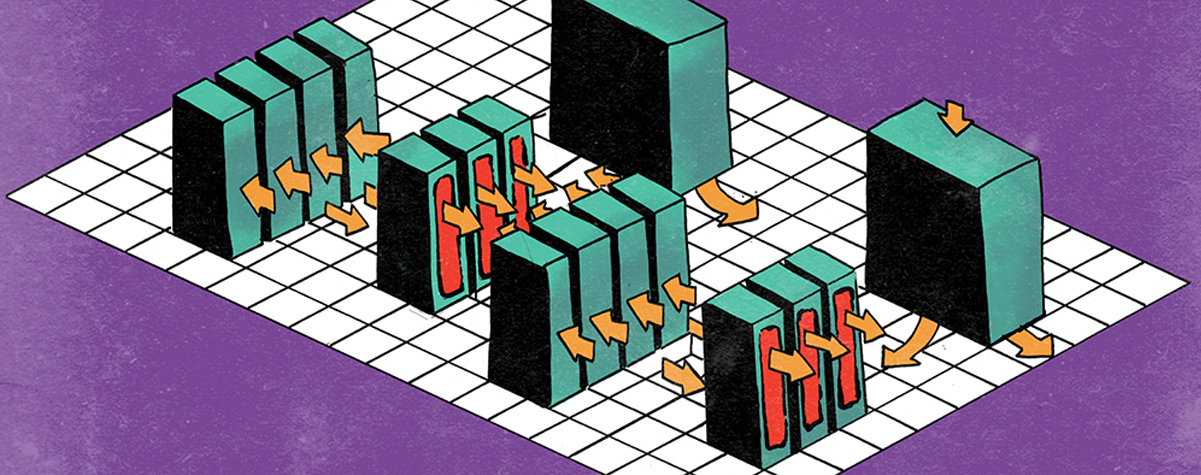

• Use a modular concept that enables rapid expansion to leverage the latest IT, environmental, power, and cooling breakthroughs, providing efficiency gains and flexibility to meet customers’ growing needs (see Figures 3 and 4).
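WUE, as defined by The Green Grid, is annual site water use in liters divided by annual IT equipment energy in kWh. The minimal Python sketch below applies that definition to estimate annual water use from the 0.23 l/kWh figure. The function name is hypothetical, and the scenario of a full year at the 16.2 MW maximum IT demand quoted later in this article is an illustrative assumption, not a reported operating condition.

```python
HOURS_PER_YEAR = 8760  # hours in a non-leap year

def annual_water_liters(wue_l_per_kwh: float, it_load_kw: float,
                        hours: float = HOURS_PER_YEAR) -> float:
    """Site water implied by a WUE value: WUE (l/kWh) x IT energy (kWh)."""
    it_energy_kwh = it_load_kw * hours
    return wue_l_per_kwh * it_energy_kwh

# Hypothetical scenario: 16.2 MW (16,200 kW) of IT load running flat out for a year.
liters = annual_water_liters(0.23, 16_200)
print(f"{liters / 1e6:.1f} million liters per year")  # 32.6 million liters per year
```

Because WUE is normalized to IT energy, the same 0.23 l/kWh scales linearly with however much IT load is actually deployed.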

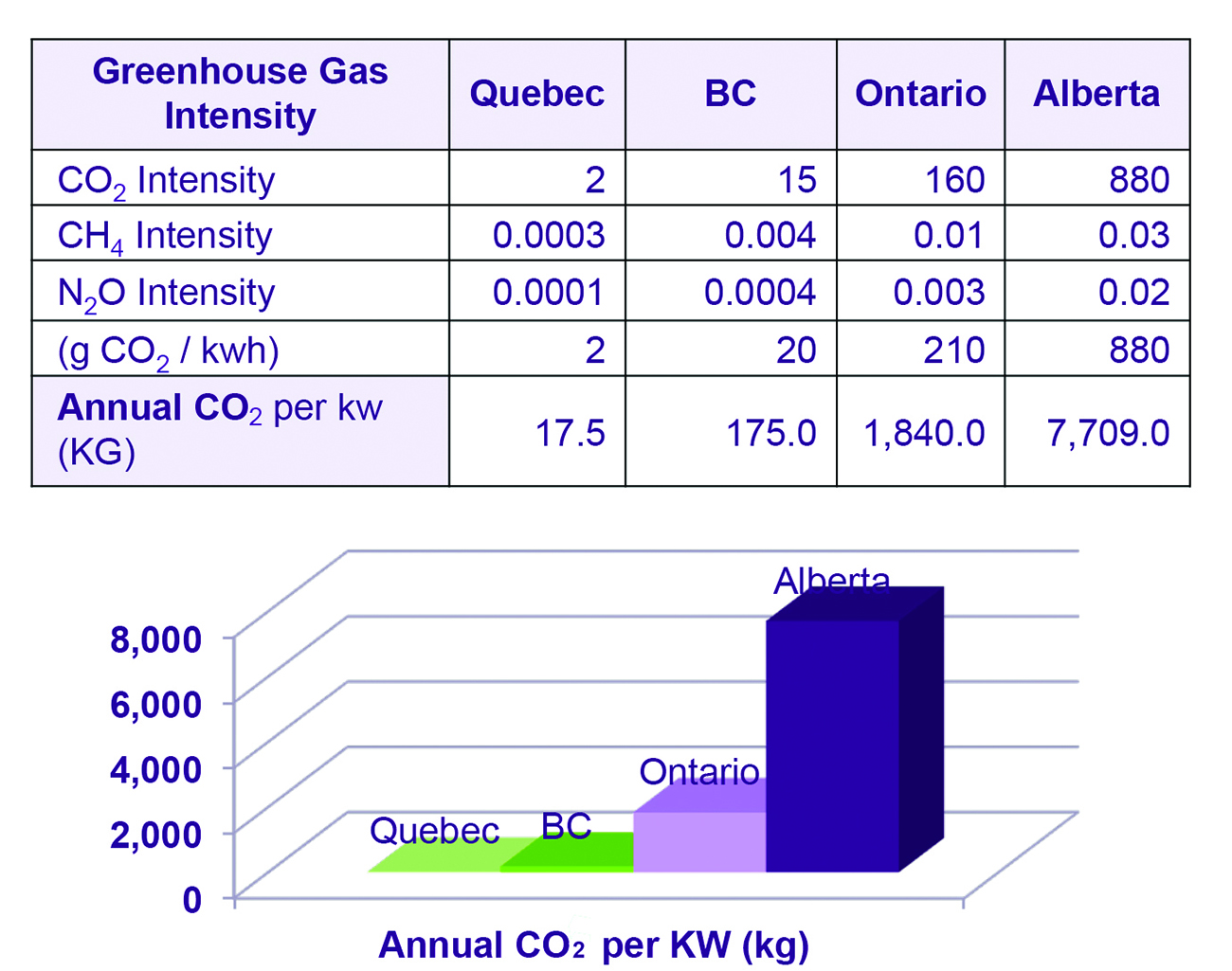

TELUS’ data center in Rimouski is strategically located in a province where more than 94% of the generation plants are hydroelectric. The facility operates in free-cooling mode year-round, requiring only 40 hours of mechanically assisted cooling annually. The Kamloops facility enjoys similarly low humidity and benefits from the same efficiencies.

Both SIDCs received Tier III Certification of Design Documents and Tier III Certification of Constructed Facility by the Uptime Institute. The city of Rimouski awarded TELUS the Prix rimouskois du mérite architectural (Rimouski Architectural Award) recognizing the data center for exceptional design and construction, including its environmental integration.

Green Is Good

TELUS teams challenged themselves to develop innovative solutions and new processes that would change the game for TELUS and its customers. Designed around an advanced foundation of efficiency, scalability, reliability, and security, both SIDCs are technologically exceptional in many ways.

Natural cooling: With a maximum IT demand of 16.2 megawatts (MW), the facilities will require 168 gigawatt-hours (GWh) per year. To reduce the environmental impact of such consumption, TELUS chose to place both facilities in areas well known for their green power generation and advantageous climates (see Table 1).
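The 168 GWh figure can be checked against the 16.2 MW demand with a little arithmetic. Assuming (the article does not say so explicitly) that 168 GWh is total facility energy and that the full 16.2 MW of IT load runs all 8,760 hours of the year, the implied average PUE follows from PUE = facility energy / IT energy. The helper names below are hypothetical.

```python
HOURS_PER_YEAR = 8760  # hours in a non-leap year

def it_energy_gwh(it_mw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Annual IT energy (GWh) for a constant IT load given in MW."""
    return it_mw * hours / 1000.0  # MWh -> GWh

def implied_pue(facility_gwh: float, it_gwh: float) -> float:
    """PUE = total facility energy / IT equipment energy."""
    return facility_gwh / it_gwh

it_gwh = it_energy_gwh(16.2)
print(f"IT energy: {it_gwh:.1f} GWh")                 # IT energy: 141.9 GWh
print(f"Implied PUE: {implied_pue(168, it_gwh):.2f}")  # Implied PUE: 1.18
```

Under these assumptions the implied PUE is about 1.18, slightly above the guaranteed full-load value of 1.15; at exactly 1.15, the same IT energy would correspond to about 163 GWh. This is only a consistency check under the stated assumptions.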

When mechanical systems are operating, a Turbocor compressor (0.25 kW/ton) with magnetic bearings provides a 4–5°F ∆T to supplement cooling to the Cold Aisles. Additionally, the compressor has been reprogrammed to operate at even higher efficiency within the condensing and evaporating temperature ranges of this system’s glycol and refrigerant.
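The compressor rating of 0.25 kW/ton can be restated as a coefficient of performance (COP), assuming it means kilowatts of compressor input per ton of cooling delivered. One ton of refrigeration is 12,000 BTU/h, or about 3.517 kW of heat removal, so COP = 3.517 / (kW/ton). The snippet also converts the 4–5°F ∆T to kelvin; because this is a temperature difference, only the 5/9 scale factor applies, with no 32° offset. Function names are illustrative.

```python
KW_PER_TON = 3.51685  # 1 ton of refrigeration = 12,000 BTU/h, about 3.51685 kW

def cop_from_kw_per_ton(kw_per_ton: float) -> float:
    """COP = cooling delivered / electrical input, both expressed in kW."""
    return KW_PER_TON / kw_per_ton

def delta_f_to_delta_k(delta_f: float) -> float:
    """Convert a temperature *difference* from °F to K (no 32° offset)."""
    return delta_f * 5.0 / 9.0

print(f"COP at 0.25 kW/ton: {cop_from_kw_per_ton(0.25):.1f}")
for dt in (4, 5):
    print(f"{dt} °F ΔT = {delta_f_to_delta_k(dt):.2f} K")
```

This gives a COP of roughly 14 and a ∆T of about 2.2 to 2.8 K.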

This innovative approach was achieved through collaboration between TELUS and a partner specializing in energy management systems and has been so successful that TELUS is delivering these same dramatic cooling system improvements in some of its existing data centers.

Modular design: The modular concept enables rapid expansion while leveraging the latest IT, environmental, power, and cooling breakthroughs. Additional modules can be added in as little as 16–18 weeks without disrupting existing operations. Each module’s mechanical system is completely independent, and the new module “taps” into a centralized critical power spine. By comparison, traditional data center capacity expansions can take up to 18 months.

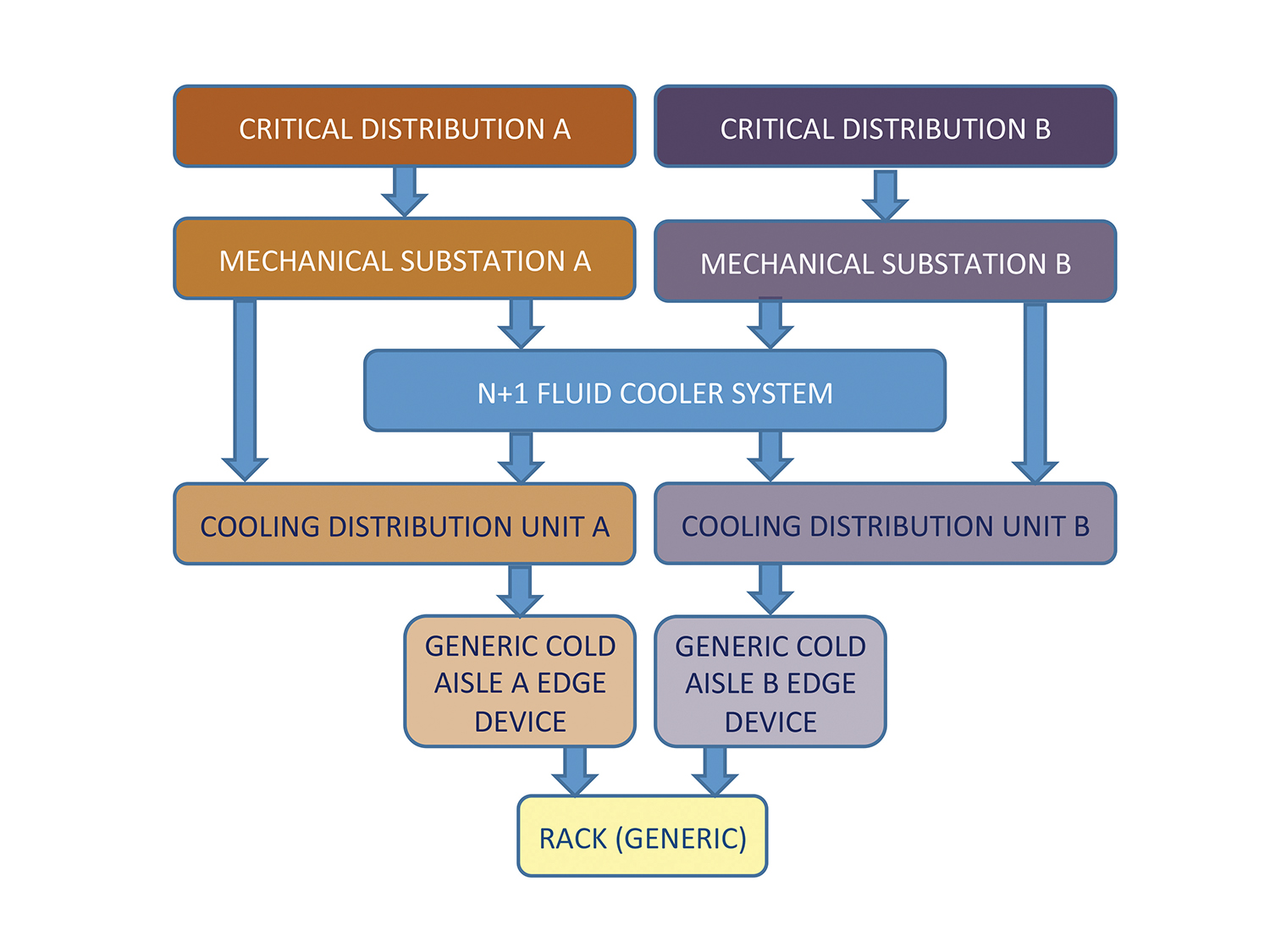

The modular mechanical system provides a dual refrigerant and water-cooled air-conditioning system to the racks. From a mechanical perspective, each pod can provide up to 2N redundancy through two individually pumped refrigerant cooling coil circuits. Each of these fully independent circuits consists of a pump, a condenser, and a coil array (see Figure 5).

The approach uses a pumped refrigerant that absorbs excess server heat as latent heat, by evaporating. The use of micro-channel rear-door coils substantially increases overall coil capacity and decreases the pressure drop across the coil, which improves energy efficiency.
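The advantage of carrying heat as latent heat shows up in the mass flow needed to remove the same heat. For a liquid that simply warms up (sensible heat), Q = m·cp·∆T; for a refrigerant that evaporates (latent heat), Q = m·h_fg. The Python sketch below compares the two for a rack at the article's 14 kW average density. The 5 K water temperature rise and the latent heat of 180 kJ/kg are assumed, illustrative values (the article does not name the refrigerant), the function names are hypothetical, and the comparison covers mass flow only, not pumping power.

```python
WATER_CP_KJ_PER_KG_K = 4.186  # specific heat of liquid water, kJ/(kg·K)

def sensible_flow_kg_s(heat_kw: float, cp_kj_per_kg_k: float, delta_t_k: float) -> float:
    """Mass flow to carry heat_kw as sensible heat: Q = m * cp * ΔT."""
    return heat_kw / (cp_kj_per_kg_k * delta_t_k)

def latent_flow_kg_s(heat_kw: float, h_fg_kj_per_kg: float) -> float:
    """Mass flow to carry heat_kw by evaporation: Q = m * h_fg."""
    return heat_kw / h_fg_kj_per_kg

rack_kw = 14.0        # average cabinet density quoted in the article
ASSUMED_H_FG = 180.0  # kJ/kg, illustrative assumption; refrigerant not named in the article
water = sensible_flow_kg_s(rack_kw, WATER_CP_KJ_PER_KG_K, delta_t_k=5.0)  # assumed 5 K rise
refrigerant = latent_flow_kg_s(rack_kw, ASSUMED_H_FG)
print(f"water: {water:.3f} kg/s, refrigerant: {refrigerant:.3f} kg/s, "
      f"ratio: {water / refrigerant:.1f}x")
```

Under these assumptions, the evaporating refrigerant moves the same heat with several times less mass flow than water warming by 5 K.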

The individual modules are cooled by closed-circuit fluid coolers that can reject heat using both water-assisted and air-only (dry) processes. This innovative cooling solution results in industry-leading energy efficiency. The modules are built entirely off site, then transported and reassembled on site.

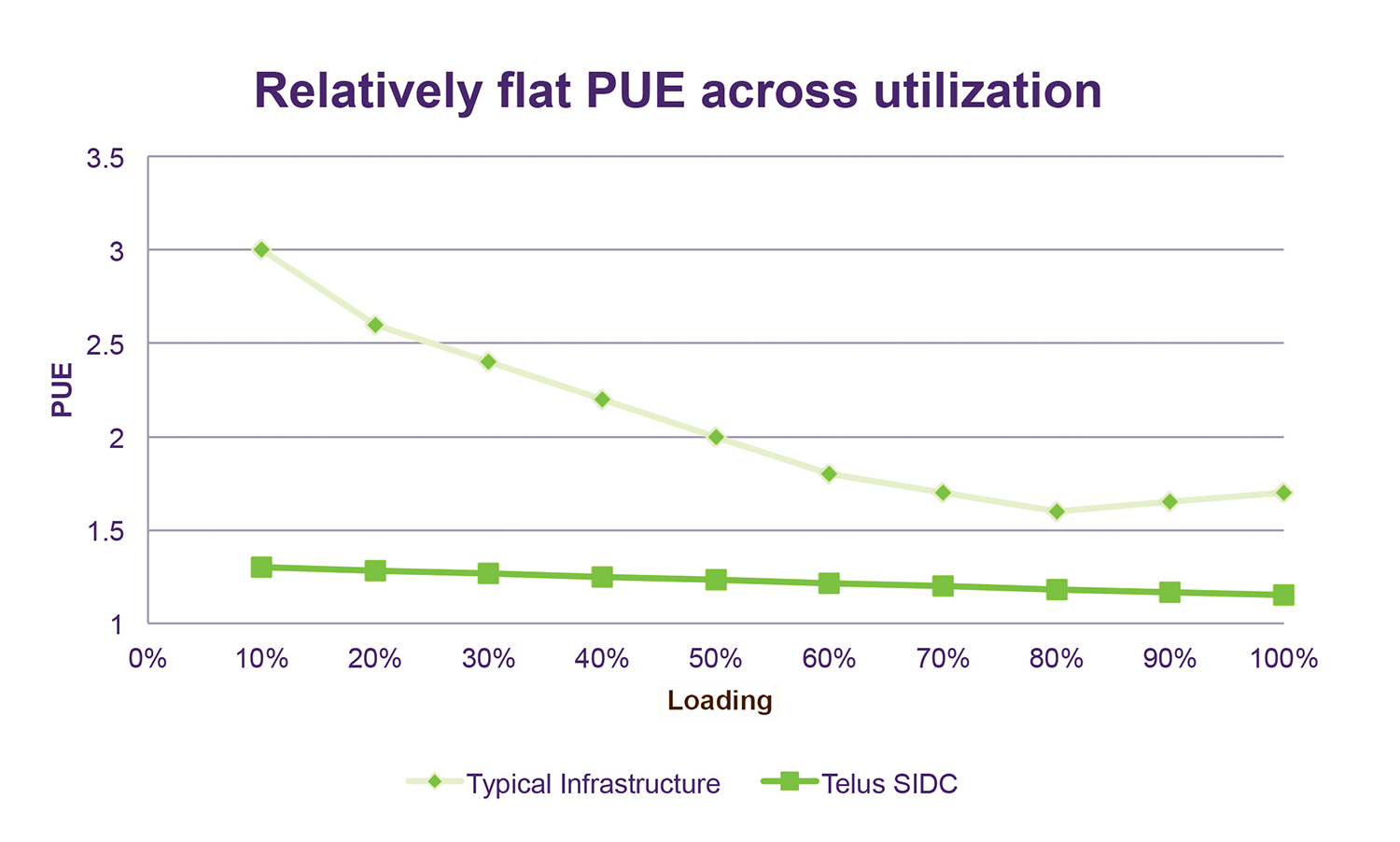

As a result, efficiency across the utilization range (measured in terms of PUE) is significantly better than in traditional data center designs. While the PUE of a traditionally designed data center can range from as high as 3.0 to as low as 1.6 in a best-case scenario (at approximately 80% utilization), both TELUS facilities have an almost flat PUE curve, varying from 1.3 (at no load) to 1.15 (at 100% load) (see Table 2).
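The near-flat curve matters because non-IT overhead scales as IT × (PUE − 1). The Python sketch below assumes, for illustration only, that PUE falls linearly between the two endpoints quoted in the text; the article does not give the curve's shape here. It is evaluated only at loads above zero, where IT × (PUE − 1) gives a meaningful overhead. The 16.2 MW design IT load comes from earlier in the article, and the PUE 1.6 line is a rough comparison using the best-case traditional value quoted above. Function names are hypothetical.

```python
def pue_at_load(utilization: float, pue_low: float = 1.30, pue_full: float = 1.15) -> float:
    """Linearly interpolate PUE between the two quoted endpoints (linearity is assumed)."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return pue_low + (pue_full - pue_low) * utilization

def overhead_mw(it_mw: float, pue: float) -> float:
    """Non-IT power (cooling, losses, lighting): IT x (PUE - 1)."""
    return it_mw * (pue - 1.0)

design_it_mw = 16.2
for u in (0.25, 0.5, 1.0):
    pue = pue_at_load(u)
    print(f"{u:>4.0%} load: PUE {pue:.3f}, overhead {overhead_mw(design_it_mw * u, pue):.2f} MW")
print(f"Same 16.2 MW at PUE 1.6: overhead {overhead_mw(design_it_mw, 1.6):.2f} MW")
```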

The Electrical System

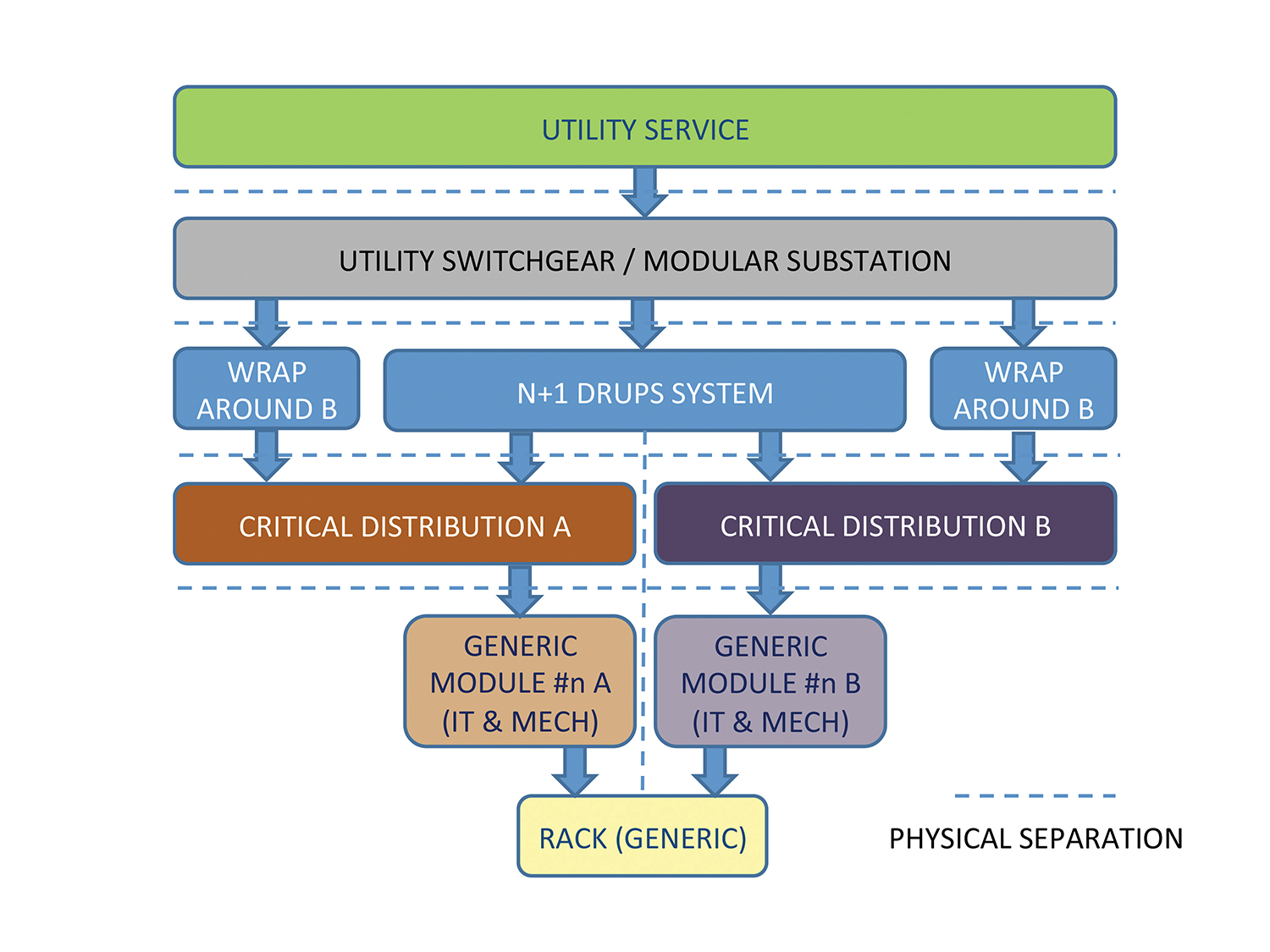

A world-class SIDC rests on solid pillars, and the electrical system is one of them (see Figure 6). This system acts as the foundation of the data center, and consistent reliability was a key consideration in the planning and design of both TELUS facilities.

The main electrical systems of both SIDCs operate at medium voltage, including the critical distribution systems. Incoming power is conditioned and protected right from the entrance point, so there are no unprotected loads in either facility.

Figure 6. Physical separation of A and B electrical systems ensures that the system meets Uptime Institute Tier III requirements.

Behind the fully protected electrical architecture is a simple design that eliminates multiple distribution systems. In addition, because the cooling is also on protected power, the architecture ensures that the high-density environments in the IT modules do not undergo temperature fluctuations due to loss of cooling when switching from normal to emergency power. Other important benefits include keeping short-circuit currents and arc-flash energy at manageable levels.

The DRUPS are located in individual enclosures outside the building, and adding units to increase capacity is relatively easy and non-intrusive. Because the system is designed to be fully N+1, any one of its machines can be removed from service without disrupting building operations or limiting their capacity. Each enclosure sits on its own belly fuel tank, which stores enough fuel for 72 hours of operation with the diesel engines at full capacity.
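The N+1 claim above can be read as a simple capacity test: after any one unit is lost, the remaining units must still cover the load. The minimal Python sketch below generalizes that test to k failures by removing the k largest units, which is always the worst case. The function name and the unit counts and ratings in the example are hypothetical illustrations, not TELUS figures. The same test also expresses a 2N arrangement, where each of two units is sized for the full load. It checks capacity only, not the path isolation that Concurrent Maintainability also requires.

```python
def survives_failures(capacities: list[float], load: float, failures: int = 1) -> bool:
    """Return True if the units still cover `load` after losing ANY `failures` of them.

    The worst case is always losing the largest units, so those are subtracted.
    """
    if failures < 0:
        raise ValueError("failures must be non-negative")
    worst_loss = sum(sorted(capacities, reverse=True)[:failures])
    return sum(capacities) - worst_loss >= load

# Hypothetical N+1 plant: four units of 2.0 MW each serving a 6.0 MW load.
print(survives_failures([2.0] * 4, 6.0))              # True  (N+1)
print(survives_failures([2.0] * 4, 6.0, failures=2))  # False (not N+2)
# Hypothetical 2N pair: two circuits, each sized for the full load.
print(survives_failures([1.0, 1.0], 1.0))             # True
```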

The output of the DRUPS is split into a complete 2N distribution system, A and B. The main components of the A and B systems are located in different rooms, with fire separation between them. The entire distribution system was designed for Concurrent Maintainability: either the A or the B side of the distribution can be safely de-energized without disrupting IT or mechanical loads.

The electrical system follows the same modular concept as the architecture. Each module connects to the main distribution, and new modules are introduced without affecting the operation of existing ones.

To do this, each module includes its own set of critical and mechanical substations (A and B in both cases) that deliver low-voltage power through bus ducts running along both service corridors to the loads: remote power panels (RPPs), mechanical CDUs, and pod fans. Each rack receives two sets of electrical feeders, one from the A system and one from the B system, and power is distributed to the servers through intelligent, metered PDUs.
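The dual A and B feeders to each rack can be modeled to show why either side alone must be able to carry the whole rack. The Python sketch below is a hypothetical model, not TELUS’ design: it assumes dual-corded IT equipment shares load evenly between live feeds, and the 22 kW per-feed rating is an invented example value; 20 kW is the maximum rack density quoted earlier in the article. The class and method names are illustrative.

```python
from dataclasses import dataclass

@dataclass
class RackFeeds:
    """Hypothetical model of one rack's A and B feeds."""
    rack_kw: float
    feed_rating_kw: float  # assumed usable rating of each feed

    def per_feed_kw(self, a_live: bool = True, b_live: bool = True) -> tuple[float, float]:
        """Load on (A, B), assuming dual-corded gear shares load evenly when both are live."""
        if a_live and b_live:
            half = self.rack_kw / 2
            return half, half
        if a_live:
            return self.rack_kw, 0.0
        if b_live:
            return 0.0, self.rack_kw
        raise RuntimeError("both feeds down: rack unpowered")

    def survives_one_side_down(self) -> bool:
        """Either feed alone must be able to carry the whole rack."""
        return self.rack_kw <= self.feed_rating_kw

rack = RackFeeds(rack_kw=20.0, feed_rating_kw=22.0)
print(rack.per_feed_kw())              # (10.0, 10.0)
print(rack.per_feed_kw(a_live=False))  # (0.0, 20.0)
print(rack.survives_one_side_down())   # True
```

The design consequence is that, in normal operation, each feed runs at no more than half of what it must be able to carry when the other side is de-energized for maintenance.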

The Mechanical System

If the electrical system is one of the pillars of the SIDCs, the mechanical system is another (see Figure 7). As server density grows and servers are installed in relatively narrow spaces, maintaining a controlled environment, one where temperature does not fluctuate widely or rapidly and air flows clean and unrestricted, is key to server reliability. As noted above, the entire cooling system is on UPS-protected power, which provides the first line of defense against such fluctuations.

The SIDC modules are cooled by a sophisticated yet architecturally simple mechanical system that not only provides an adequate environment for the IT equipment but is also extremely efficient.

Many technologies today achieve extremely low PUEs, especially compared to the old days of IDCs with PUEs of 2.0 or worse, but they do so at the expense of significant water consumption. The TELUS SIDC cooling technology, the first of its kind in the world, not only runs at low PUE levels but is also extremely frugal in its use of water. For ease of illustration, the system can be divided into three main components:

1. Edge devices, which capture heat immediately after it is produced. This maximizes efficiency because the hot air does not have to travel far. The facilities use rear-door coils or coils located in the upper part of the Hot Aisle. Multiple fans push the cooled air into the Cold Aisle, where it re-enters the IT gear through the front of the racks.

With this arrangement of fully contained Cold Aisles and Hot Aisles, air recirculates with only a minimal contribution of outside air from a make-up air system. Among the system’s many advantages, its need for only small quantities of outside air makes it suitable for installation in virtually any environment, as it is not prone to being affected by pollution, smoke, or any other agent that should not enter the IT space.

2. The CDUs, which are extremely sophisticated heat exchangers, keep the refrigerant lines within temperature limits at all times. Heat exchange is performed at maximum efficiency based on the temperature of the coolant in the return lines. If this return temperature is too high for the refrigerant loop to remain within range, the CDU has the intelligence and capability to push the temperature down with the assistance of high-efficiency compressors.

3. The fluid cooler yard, which is situated outside the facilities and is responsible for the final rejection of heat to the atmosphere. The fluid coolers run in free (and dry) cooling mode year-round, except when the wet-bulb temperature exceeds a set threshold; water is then sprayed to assist heat rejection.

All of the above-mentioned elements are redundant, and the loss of any single element does not disrupt the cooling they provide. The fluid coolers are designed in an N+1 configuration, while the CDUs and edge devices are fully 2N redundant, as are both the refrigerant and coolant loops.
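The two control decisions described in items 2 and 3 above, CDU compressor assist when the coolant return is too warm and water spray when the wet-bulb temperature passes a threshold, can be sketched as two threshold rules. This is a minimal Python illustration, not the CDU vendor’s control logic: the setpoint values, enum names, and function names are hypothetical, since the article does not publish them.

```python
from enum import Enum

class CduMode(Enum):
    HEAT_EXCHANGE_ONLY = "heat exchange only"
    COMPRESSOR_ASSIST = "compressor assist"

class CoolerMode(Enum):
    DRY = "dry"
    SPRAY_ASSIST = "spray assist"

# Hypothetical setpoints for illustration only; actual values are not published.
MAX_COOLANT_RETURN_C = 24.0  # above this, the refrigerant loop cannot stay in range unaided
SPRAY_WET_BULB_C = 20.0      # stands in for the article's "certain threshold"

def cdu_mode(coolant_return_c: float, limit_c: float = MAX_COOLANT_RETURN_C) -> CduMode:
    """Use the compressors only when the return coolant is too warm for plain heat exchange."""
    return CduMode.COMPRESSOR_ASSIST if coolant_return_c > limit_c else CduMode.HEAT_EXCHANGE_ONLY

def cooler_mode(wet_bulb_c: float, threshold_c: float = SPRAY_WET_BULB_C) -> CoolerMode:
    """Run the fluid coolers dry unless the wet-bulb temperature exceeds the threshold."""
    return CoolerMode.SPRAY_ASSIST if wet_bulb_c > threshold_c else CoolerMode.DRY

for return_c, wet_bulb_c in [(18.0, 5.0), (23.0, 21.0), (26.0, 24.0)]:
    print(return_c, wet_bulb_c, cdu_mode(return_c).value, cooler_mode(wet_bulb_c).value)
```

A production controller would add hysteresis (separate on and off thresholds) so that equipment does not cycle rapidly around a single setpoint.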

The TELUS Team

The TELUS project team challenged traditional approaches to data center design, construction, and operations. In doing so, it created opportunities to transform its approach to future data center builds and enhance existing facilities. The current SIDCs have helped TELUS develop a data center strategy that will enable it to:

• Achieve low total cost of ownership (TCO)

• Support long-term customer growth

• Maximize the advantages of technology change: density and efficiency

• Align with environmental sustainability and climate change strategy

• Enable end-to-end service capabilities: cloud/network/device

• Provide robust, integrated physical and logical security

More than 50 team members from across the organization participated in the SIDC project, which involved a multiple-year process of collaboration across many TELUS departments such as Network Transformation, Network Standards, IT, Operations, and Real Estate.

The completion of the SIDCs represented an ‘above and beyond’ achievement for the TELUS organization. The magnitude of the undertaking was significantly larger than any of the company’s past infrastructure work. In addition, adopting a completely new infrastructure model and technology, in many ways revolutionary compared to the company’s legacy data centers, added pressure to ensure that the team chose the right model and completed the due diligence needed to confirm it was a good fit for the business.

Many of these industry-leading concepts are now being deployed across TELUS’ existing data centers, such as the use of ultra-efficient cooling systems. In addition, as a result of the knowledge and best practices created through this project, TELUS has established a center of excellence across IT and data infrastructure that empowers teams to continue working together to drive innovation across their solutions, provide stronger governance, and create shared accountability for driving its data center strategy forward.

Overcoming Challenges

A clear objective, combined with the magnitude and high visibility of the undertaking, enabled the multidisciplinary team to achieve an exceptional goal. One of the most important lessons for TELUS is the realization that teams brought together for a project, no matter how large, are capable of succeeding if the scope and timelines are clear.

During construction of the Rimouski SIDC, the team experienced an issue with the server racks, specifically with the power bars on the back. Although well designed, the power bars turned out to be too large, preventing the removal of power supplies from the backs of the servers. Even though proper due diligence had been completed, the team was not able to identify the problem until it arose physically. As a result, TELUS modified the design for the Kamloops SIDC and built mock-ups (prototypes) to ensure this does not happen again.

Partners

TELUS worked with external partners on some of the project’s key activities, including Skanska (build partner), Inertech (module design and manufacturer), Cosentini (engineering firm), and Callison (architects). These partners were integral in helping TELUS achieve a scalable and sustainable design for its data centers.

Future Applications

Once TELUS realized the operational energy savings achieved by implementing the modular mechanical system, it quickly explored their applicability within existing legacy data centers. In fact, TELUS has recently completed the conceptual design of a retrofit within one of its existing facilities, and an additional feasibility study is currently underway for another of its existing data centers. The team has learned that it can use the existing central plant mechanical system together with a customized modular rack and cooling system, similar to the one installed in TELUS’ new SIDCs, to achieve increased density (capacity) and much improved energy efficiency. Importantly, all of this can be accomplished without eliminating the legacy mechanical system.

TELUS intends to start applying these highly efficient solutions to some of its large network sites as well. Some traditional legacy equipment is rapidly evolving into much more server-like gear with a small footprint and high power consumption, which translates into more heat to reject. Large telecom rooms can be retrofitted relatively easily to host an almost completely contained “pod” for deploying these new technologies.

An Ongoing Commitment

When TELUS first started planning the SIDCs in Rimouski and Kamloops, it focused on four main pillars: efficiency, reliability, scalability, and security. All four were integral to achieving overall sustainability. These pillars are the foundation of the SIDCs and ensure they are able to meet customer needs now and in the future. With that commitment in place, TELUS’ forward-thinking strategy and innovative approach position it to be considered a front-runner in data center design for years to come.

Pete Hegarty has acquired a wealth of industry knowledge and experience in more than 10 years at TELUS Communications, a leading national communications company, and 25 years in the telecommunications industry. His career at TELUS has taken him through various positions in Technology Strategy, Network Planning, and Network Transformation. In his current role as Director of TELUS’ Data Centre Strategy, Mr. Hegarty leads an integrated team of talented and highly motivated individuals who are driving the transformation of TELUS’ data center infrastructure to provide the most efficient, reliable, and secure information and communications technology (ICT) solutions in Canada.

2020
