The strong case for power management

ANALYST OPINION

In a recent report on server energy efficiency, Uptime Intelligence’s Dr. Tomas Rahkonen analyzed data from 429 servers and identified five key insights (see Server energy efficiency: five key insights). All were valuable observations for better managing (and reducing) IT power consumption, but one area of his analysis stood out: the efficiency benefits of IT power management.

IT power management holds a strange position in modern IT. The technology is mature, well understood, clearly explained by vendors, and is known to reduce IT energy consumption effectively at certain points of load. Many guidelines (including the 2022 Best Practice Guidelines for the EU Code of Conduct on Data Centre Energy Efficiency and Uptime Institute’s sustainability series Digital infrastructure sustainability — a manager’s guide) strongly advocate for its use. And yet very few operators use it.

The reason for this is also widely understood: the decision to use IT power management rests with operational IT managers, whose main task is to ensure that IT processing performance never becomes a problem. Power management, however, involves a trade-off between processing power and energy consumption, and that trade-off will almost always affect performance. When forced to choose between lower power consumption and compute power, IT managers will nearly always favor compute in order to protect application performance.

This is where Dr. Rahkonen’s study becomes important. His analysis (see below for the key findings) details these trade-offs and shows how performance might be affected. Such analysis, Uptime Intelligence believes, should be part of the discussions between facilities and IT managers at the point of procurement and as part of regular efficiency or sustainability reviews.

Power management — the context

The goal of power management is simple: reduce server energy consumption by applying voltage or frequency controls in various ways. The trick is finding the right approach so that IT performance is only minimally affected. That requires some thought about processor types, likely utilization and application needs.

Analysis shows that power management increases processing times and latency, but most IT managers have little or no idea by how much. And because most IT managers are striving to consolidate their IT loads and work their machines harder, power management seems like an unnecessary risk.

In his report, Dr. Rahkonen analyzed the data on server performance and energy use from The Green Grid’s publicly available Server Efficiency Rating Tool (SERT) database and drew out two key findings.

First, server power management can improve server efficiency by up to 19% at the most effective point in the 25% to 60% utilization range. Efficiency here is based on the SERT server-side Java (SSJ) worklet and defined in terms of the SERT energy efficiency metric (SSJ transactions per second per watt). This is a useful finding, not least because it shows the biggest efficiency improvements occur in the utilization range that most operators are striving for.

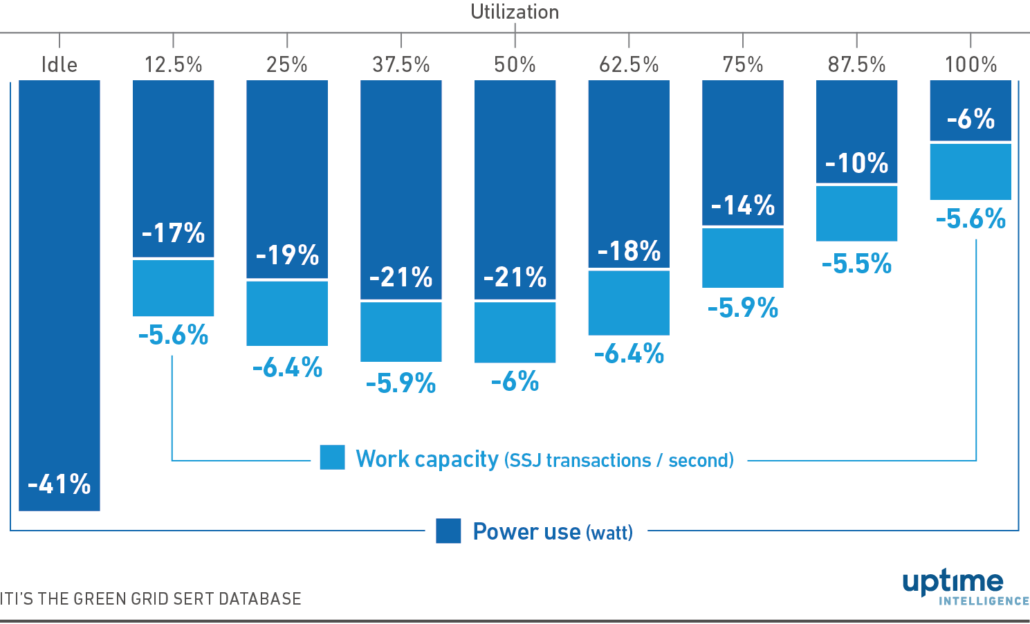

Despite this finding’s importance, many IT managers won’t initially pay too much attention to the efficiency metric. They care more about absolute performance. This is where Dr. Rahkonen’s second key finding (see Figure 1) is important: even at the worst points of utilization, power management reduced work (in terms of SSJ transactions per second) by no more than 6.4%. Reductions in power use, by contrast, were more likely to be in the range of 15% to 21%.

Figure 1. Power management reduces server power and work capacity
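
To see how the work and power figures above relate to the efficiency metric (work per watt), the arithmetic can be sketched in a few lines of Python. This is an illustrative calculation only, not the method used in the report: it assumes the worst-case 6.4% work reduction coincides with the 15% to 21% power reduction at the same load point, which the source data may not support. The function name `efficiency_change` is hypothetical.

```python
def efficiency_change(work_reduction: float, power_reduction: float) -> float:
    """Relative change in efficiency (work per watt) when both work and power drop.

    Efficiency is work / power, so the new efficiency relative to the old one is
    (1 - work_reduction) / (1 - power_reduction).
    """
    if not 0 <= work_reduction < 1 or not 0 <= power_reduction < 1:
        raise ValueError("reductions must be fractions in [0, 1)")
    return (1 - work_reduction) / (1 - power_reduction) - 1


# Figures quoted in the article; pairing them at one load point is an assumption.
WORK_REDUCTION = 0.064            # worst-case drop in SSJ transactions per second
POWER_REDUCTIONS = (0.15, 0.21)   # typical range of power reduction

results = {p: efficiency_change(WORK_REDUCTION, p) for p in POWER_REDUCTIONS}

for p, gain in results.items():
    print(f"power -{p:.0%}, work -{WORK_REDUCTION:.1%}: efficiency {gain:+.1%}")
```

Under these assumptions, the efficiency gain works out to roughly 10% at a 15% power reduction and roughly 18.5% at a 21% power reduction. The upper value is close to the reported maximum of 19%, although the match depends on the pairing assumed here.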

What should IT managers make of this information? The main takeaway is clear: power management’s impact on performance is demonstrably low, and in most cases, customers will probably not notice that it is turned on. Even at higher points of utilization, the impact on performance is minimal, which suggests that there are likely to be opportunities to both consolidate servers and utilize power management.

It is, of course, not that simple. Conservative IT managers may argue that they still cannot take the risk, especially if certain applications might be affected at key times. This soon becomes a more complex discussion that spans IT architectures, capacity use, types of performance measurement and economics. And latency, not just processor performance, is certainly a worry — more so for some applications and businesses than others.

Such concerns are valid and should be taken into consideration. However, seen through the lens of sustainability and efficiency, there is a clear case for IT operators to evaluate the impact of power management and deploy it where it is technically practical — which will likely be in many situations.

The economic case is possibly even stronger, especially given the recent rises in energy prices. Even at the most efficient facilities, aggregated savings will be considerable, easily rewarding the time and effort spent deploying power management (future Uptime Intelligence reports will have more analysis on the cost impacts of better IT power management).
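
The scale of such savings can be estimated with a back-of-the-envelope calculation. The sketch below is illustrative only: the fleet size, average server power, energy price and PUE are hypothetical placeholders, not Uptime Intelligence data. Only the 15% to 21% power reduction range comes from the findings above. It also assumes the reduction is sustained year-round and that facility overhead scales in proportion to IT load, both of which are simplifications.

```python
HOURS_PER_YEAR = 8760


def annual_savings(servers: int, avg_power_w: float, power_reduction: float,
                   price_per_kwh: float, pue: float = 1.0) -> tuple[float, float]:
    """Return (kWh saved per year, cost saved per year) for a server fleet.

    Assumes a constant average power draw per server and that facility
    overhead (expressed through PUE) scales linearly with IT load.
    """
    it_kw_saved = servers * avg_power_w / 1000 * power_reduction
    kwh_saved = it_kw_saved * HOURS_PER_YEAR * pue
    return kwh_saved, kwh_saved * price_per_kwh


# Hypothetical inputs, for illustration only.
FLEET = dict(servers=1000, avg_power_w=300.0, price_per_kwh=0.15, pue=1.5)

low_kwh, low_cost = annual_savings(power_reduction=0.15, **FLEET)
high_kwh, high_cost = annual_savings(power_reduction=0.21, **FLEET)

print(f"Energy saved: {low_kwh:,.0f} to {high_kwh:,.0f} kWh per year")
print(f"Cost saved:   {low_cost:,.0f} to {high_cost:,.0f} currency units per year")
```

Operators can substitute their own fleet size, measured server power and tariff to see whether the savings justify the deployment effort in their environment.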

IT power management has long been overlooked as a means of improving data center efficiency. Uptime Intelligence’s data shows that in most cases, concerns about IT performance are far outweighed by the reduction in energy use. Managers from both IT and facilities will benefit from analyzing the data, applying it to their use cases and, unless there are significant technical and performance issues, using power management as a default.
