ATD Interview: Christopher Johnston, Syska Hennessy

Industry Perspective: Three Accredited Tier Designers discuss 25 years of data center evolution

The experiences of Christopher Johnston, Elie Siam, and Dennis Julian are very different. Yet their experiences as Accredited Tier Designers (ATDs) all highlight the pace of change in the industry. Somehow, though, despite significant changes in how IT services are delivered and how business practices affect design, the challenge of meeting reliability goals remains very much the same, complicated only by greater energy concerns and increased density. All three men agree that Uptime Institute’s Tiers and ATD programs have helped raise the level of practice worldwide and the quality of facilities. In this installment, Syska Hennessy Group’s Christopher Johnston looks forward by examining current trends. This is the first of three ATD interviews from the May 2014 issue of the Uptime Institute Journal.

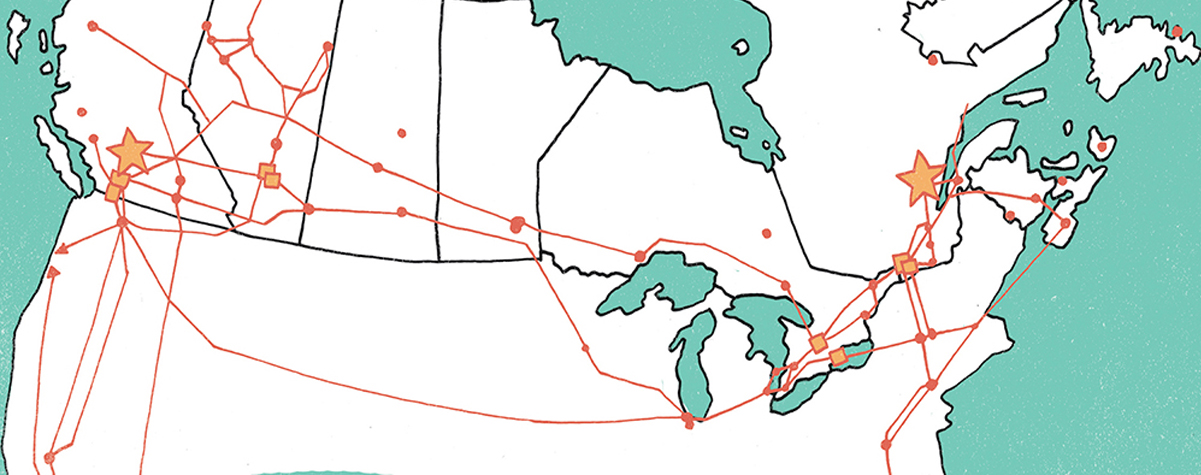

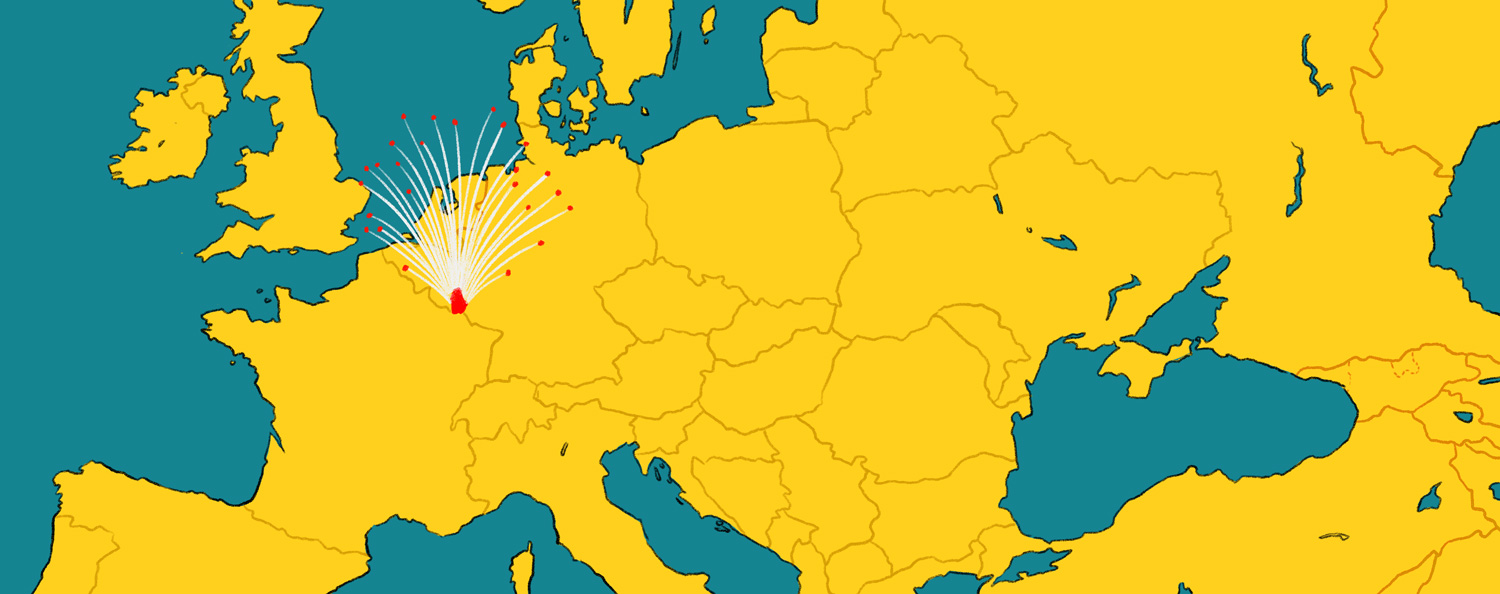

Christopher Johnston is a senior vice president and chief engineer for Syska’s Critical Facilities Team. He leads research and development efforts to address current and impending technical issues (cooling, power, etc.) in critical and hypercritical facilities and specializes in the planning, design, construction, testing, and commissioning of critical 7×24 facilities. He has more than 40 years of engineering experience. Mr. Johnston has served as quality assurance officer and supervising engineer on many projects and has designed electrical and mechanical systems for major critical facilities projects. He has been actively involved in the design of critical facility projects throughout the U.S. and internationally for both corporate and institutional clients. He is a regular presenter at technical conferences for organizations such as 7×24 Exchange, Datacenter Dynamics, and the Uptime Institute. He regularly authors articles in technical publications. He heads Syska’s Critical Facilities’ Technical Leadership Committee and is a member of Syska’s Green Critical Facilities Committee.

Christopher, you are pretty well known in this business for your professional experience and prominence at various industry events. I bet a lot of people don’t know how you got your start.

I was born in Georgia, where I attended the public schools and then did my undergraduate training at Georgia Tech, where I earned a Bachelor’s in Electrical Engineering with what was effectively a minor in Mechanical Engineering. Then I went to the University of Tennessee at Chattanooga. For the first 18 years of my career, I was involved in the design of industrial electrical systems, water systems, wastewater systems, pumping stations, and treatment stations. That’s an environment where power and controls are at a premium.

In 1988, I decided to relocate my practice (Johnston Engineers) to Atlanta and merge it with RL Daniell & Associates. RL Daniell & Associates had been doing data center work since the mid-1970s.

Raymond Daniell was my senior partner, and he, Paul Katzaroff, and Charlie Krieger were three of the original top-level data center designers.

That was 25 years ago. Raymond and I parted ways in 2000, and I was at Carlson until 2003. Then I opened the Atlanta office for EYP Mission Critical Facilities and moved over to Syska in 2005.

What are the biggest changes since you began practicing as a data center designer?

From a technical standpoint, when I began we were still in the mainframe era, so every shop was running a mainframe, be it Hitachi, Amdahl, NAS, or IBM. Basically, we dealt with enterprise-type clients. People working at their desks might have a dumb terminal with a keyboard and video display. Very few had mice, and the terminals would connect to a terminal controller, which would connect to the mainframe.

The next big change was the move to distributed computing, with systems such as the IBM AS/400 and the Digital VAX. Then, in the 1990s, we started seeing server-based technologies, which is what we have today.

Now, of course, we have virtualized servers that make servers operate like mainframes, so the technology has come full circle. That’s my experience with IT hardware. That said, today’s hardware is typically more forgiving than the mainframes were.

We’re seeing much bigger data centers today. When I started, a 400-kilowatt (kW) UPS system was very sizable. Today, we have data centers with tens of megawatts of critical load.

I’m the Engineer-of-Record now for a data center with 60 megawatts (MW) of critical load. Syska Hennessy Group has a data center design sitting on the shelf with 100 MW of load. I’ve also done a prototype design for a telecom in China that wanted 315 MW of critical load.

There is also much more use of medium voltage because we have to move tremendous amounts of electricity at minimal costs to meet client requirements.

The business of data center design has become commoditized since the late 1980s. Back then, data center design was very much a specialty, and we were a boutique operation. There was not much competition, and on a percentage basis we could typically command three times the fee that we can now.

Now everything is commoditized; there is a lot of competition. We encounter people who have not done a data center in 5-7 years who consider themselves data center experts. They say to themselves, “Oh, data centers, I did one of those 6 or 7 years ago. How difficult can it be? Nothing’s changed.” Well, the whole world has changed.

Please tell me more about the China facility. At 315 MW, obviously it has a huge footprint, but does it have the kind of densities that I think you are implying? And, if so, what kind of systems are you looking to incorporate?

It was not extremely dense: maybe 200 watts per square foot (W/ft²), or about 2,150 W per square meter (W/m²). The Chinese like low voltage, they like domestically manufactured equipment, and there are no low-voltage domestically manufactured UPS systems in China.
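The metric figure follows from the definition of the foot as exactly 0.3048 m, so 1 m² is about 10.764 ft². A short Python check, offered as an illustrative sketch (the function name is our own, not from the interview):

```python
# 1 ft is defined as exactly 0.3048 m, so 1 m² = 1 / 0.3048² ft² (about 10.764 ft²).
SQFT_PER_SQM = 1 / 0.3048 ** 2


def w_per_sqft_to_w_per_sqm(w_per_sqft: float) -> float:
    """Convert a power density from watts per square foot to watts per square meter."""
    return w_per_sqft * SQFT_PER_SQM


density = w_per_sqft_to_w_per_sqm(200)
print(f"200 W/ft² is about {density:,.0f} W/m²")  # about 2,153 W/m²
```

Rounded to three significant figures, that is the 2,150 W/m² quoted above.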

The project basically involved setting up a 1,200-kW building block. You would have two UPS systems for every 1,200-kW block of white space, and then you would have another white space supplied by two more 1,200-kW UPS systems.

It was just the same design repeated over and over again, because that is what fit how the Chinese like to do data centers. They do not like to buy products from outside the country, and the largest unit substation transformer you can get is 2,500 kilovolt-amperes (kVA), so perhaps you can go up to a 1,600-kW system. It was just many, many, many systems, and it was multiple buildings as well.
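To give a sense of what “many, many, many systems” means at 315 MW, here is a rough count in Python. It assumes, as a simplification, that every 1,200-kW block is fed by two 1,200-kW UPS systems (a 2N arrangement) and ignores transformer limits, mechanical load, and spare capacity; the function and parameter names are hypothetical.

```python
import math


def ups_count(critical_load_kw: float, block_kw: float = 1_200, ups_per_block: int = 2) -> tuple[int, int]:
    """Return (number of building blocks, number of UPS systems) for a critical load.

    Assumes identical blocks, each fed by `ups_per_block` UPS systems (2N by default).
    """
    blocks = math.ceil(critical_load_kw / block_kw)  # a partial block still needs a full block
    return blocks, blocks * ups_per_block


blocks, ups = ups_count(315_000)
print(f"{blocks} blocks, {ups} UPS systems")  # 263 blocks, 526 UPS systems
```

Even under these generous simplifications, the repeated building block runs to hundreds of UPS systems.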

I think a lot of people did conceptual designs. We didn’t end up doing the project.

What projects are you doing?

We’ve got a large project that I cannot mention. It’s very large, very high density, and very cutting edge. We also have a number of greenfield data centers on the board. We always have greenfield projects on the board.

You might find it interesting that when I came to Syska, they had done only one greenfield project; Syska had always lived in other people’s data centers. I was part of the team that helped corporate leadership develop its critical facilities team to where it is today.

We’re also seeing the beginning of a growing market in the U.S. in upgrading and replacing legacy data center equipment. We’re beginning to see the first generation of large enterprise data centers bumping up against the end of the usable life spans of their UPS systems, cooling systems, switchgear, and other infrastructure. Companies want to upgrade and replace these critical components, which, by the way, must be done in live data centers. It’s like doing open-heart surgery on people while they work.

What challenge does that pose?

One of the challenges is figuring out how to perform the work without posing risk to the operating computing load. You can’t shut down the data center, and the people operating the data center want minimal risk to their computers. Let’s say I am going to replace a piece of switchgear. Basically, there are a few ways I can do it. I can pull the old switchgear out, put the new switchgear in its place, connect everything back together, then commission it, and put everything back into service. That’s what we used to call a push-pull.

The downside is that the computers are possibly going to be more at risk because you are not going to be able to pull the old switchgear out, put new equipment in, and commission it very quickly. If you are working on a data center with A and B sides and don’t have the ability to tie them together, perhaps the A side will be down for 2 weeks, so there is certainly more risk because of the longer exposure.

If you have unused space in the building where you can put new equipment or if you can put the switchgear in containers or another building, then you can put the new switchboard in, test it, commission it, and make sure everything is fine. Then you just need to have a day or perhaps a couple of days to cut the feeders over. There may be less risk, but it might end up costing more in terms of capital expense.

So each facility has to be assessed to understand its restrictions and vulnerabilities?

Yes, it is not one-size-fits-all. You have to go and look. And an astute designer has got to do the work in his or her head. The worst situation a designer can put the contractor in is to turn out a set of drawings that say, “Here’s the snapshot of the data center today and here’s what we want to look at when we finish. How you get from point A to point B is your problem.” You can’t do that because the contractor may not be able to build it without an outage.

We are looking at data centers built from the 1990s up to the dot-bomb in 2002-2003 that are getting close to the end of their usable life, particularly their UPS systems. The problem is not only the technology, but also finding technicians who can work on the older equipment.

What happens is the UPS manufacturer may say, “I’m not going to make this particular UPS system anymore after next year. I’ve got a new model. I’ll agree to support that system normally for, let’s say, 7 years. I’ll provide full parts and service support.” Past 7 years, they don’t guarantee parts and service.

So let’s say you bought a UPS two years before it was discontinued, and you are planning on keeping it 20 years. You are going to have to live with it for years after support ends. The manufacturer isn’t training new technicians on it, and the existing technicians are retiring. And the manufacturer may still have repair parts, but then again, after 7 years, it may not.
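The arithmetic behind this example can be made explicit. Assuming the UPS is bought 2 years before it is discontinued, the manufacturer supports it for 7 years after that, and the owner plans a 20-year life, this small sketch (the helper name is hypothetical) computes the years left without guaranteed parts and service:

```python
def unsupported_years(years_before_discontinuation: float, support_years: float, planned_life_years: float) -> float:
    """Years of planned service remaining after the manufacturer's support commitment ends."""
    supported = years_before_discontinuation + support_years
    return max(0, planned_life_years - supported)


gap = unsupported_years(years_before_discontinuation=2, support_years=7, planned_life_years=20)
print(f"Years without guaranteed parts and service: {gap}")  # 11
```

Under those assumptions, more than half of the planned 20-year life falls outside the support window.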

What other changes do you see?

We’re seeing enterprise data centers in the U.S. begin to settle out into two groups. We are seeing the very, very, very small, 300-500 kW, maybe 1,000 kW, and then we see very big data centers of 50 MW or maybe 60 MW. We’re not seeing many in the middle.

But we are seeing a tremendous amount of building by colo operators like T5, Digital Realty, and DuPont Fabros. I think they are having a real effect in the market, and I think there are a lot of customers who are tending to go to colo or to cloud colo. We expect that interest to grow, so the growth of very large data centers is going to continue.

At the same time, what we see from the colos is that they want to master plan and build out capacity as they need it, just in time. They don’t want to spend a lot of money building out a huge amount of capacity that they might not be able to sell, so maybe they build out 6 MW, and after they’ve sold 4 MW, maybe they build 4-6 more MW. So that’s certainly a dynamic that we’re seeing as people look to reduce the capital requirements of data centers.
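The just-in-time pattern described here can be expressed as a simple trigger rule. The sketch below is hypothetical: it assumes fixed 6-MW phases and that a new phase is started once unsold built capacity falls to 2 MW or less, which reproduces the example of building again after 4 of the first 6 MW are sold. Real colo operators also weigh construction lead times, pre-leasing, and utility power availability, none of which are modeled here.

```python
def build_out(cumulative_sold_mw: list[int], phase_mw: int = 6, min_unsold_mw: int = 2) -> list[int]:
    """Return built capacity (MW) at the end of each period under a just-in-time trigger.

    A new phase is added whenever unsold built capacity is at or below `min_unsold_mw`.
    """
    if phase_mw <= 0:
        raise ValueError("phase_mw must be positive")
    built = phase_mw  # the first phase is built up front
    history = []
    for sold in cumulative_sold_mw:
        # Keep adding phases until there is more than the minimum unsold headroom.
        while built - sold <= min_unsold_mw:
            built += phase_mw
        history.append(built)
    return history


print(build_out([1, 3, 4, 7, 9, 12]))  # [6, 6, 12, 12, 12, 18]
```

Selling the fourth megawatt triggers the second 6-MW phase; a smaller `phase_mw` (4 MW, say) would model the low end of the 4-6 MW range mentioned above.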

We’re also seeing the cost per megawatt going down. We have clients doing Uptime Institute Certified Tier III Constructed Facility data centers in the $9-$11 million/MW range, which is a lot less than the $25 million/MW people have expected historically. People are just not willing to pay $25 million/MW. Even the large financials are looking for ways to cut costs, both capital costs and operating costs.
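To put the quoted ranges in proportion, this sketch computes how far $9-$11 million/MW sits below the historical $25 million/MW. The figures are the ones cited above; the function name is illustrative.

```python
HISTORICAL_COST_PER_MW = 25.0  # $ million per MW, the historical figure cited in the interview


def savings_fraction(cost_per_mw: float, baseline_per_mw: float = HISTORICAL_COST_PER_MW) -> float:
    """Fraction by which cost_per_mw falls below the baseline cost per MW."""
    return 1 - cost_per_mw / baseline_per_mw


for cost in (9.0, 11.0):
    print(f"${cost:.0f}M/MW is {savings_fraction(cost):.0%} below ${HISTORICAL_COST_PER_MW:.0f}M/MW")
# $9M/MW is 64% below $25M/MW
# $11M/MW is 56% below $25M/MW
```

In other words, the projects described here come in at well under half the historical cost per megawatt.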

You have been designing data centers for a long time. Yet, you became an ATD just a few years ago. What was your motivation?

I became an ATD in December 2010; my ATD foil number is 233. The motivation at the time was that we were seeing more interest in Uptime Institute Certification from clients, particularly in China and the Mideast. I went primarily because we felt we needed an ATD to meet client expectations.

If a knowledgeable data center operator from outside the country goes to China and sets up a data center, the Chinese look at an Uptime Institute Certification as a Good Housekeeping Seal of Approval. It gives them evidence that all is well.

In my view, data center operators in the U.S. tend to be a bit more knowledgeable, and they don’t necessarily feel the need for Certification.

Since then, we have had three more people accredited in the U.S. because I didn’t want to be a single point of failure. And our Mideast office decided to get a couple of our staff in Dubai accredited, so all told Syska has six ATDs.

This article was written by Kevin Heslin, senior editor at the Uptime Institute. He served as an editor at New York Construction News, Sutton Publishing, the IESNA, and BNP Media, where he founded Mission Critical, the leading publication dedicated to data center and backup power professionals. In addition, Heslin served as communications manager at the Lighting Research Center of Rensselaer Polytechnic Institute. He earned the B.A. in Journalism from Fordham University in 1981 and a B.S. in Technical Communications from Rensselaer Polytechnic Institute in 2000.